Abstract

Advances in autonomous vehicle (AV) technologies mean that driverless taxis could become a part of everyday life in cities within the next decade. We present a user-design activity that led to the development of an experience-design framework for future autonomous taxi services, based on end-user expectations.

We used Mozilla Hubs by Mozilla™ as a design collaboration tool to inform Human-Machine Interface (HMI) design for future autonomous taxis. Twenty-five participants joined research facilitators in seven workshops. Virtual reality (VR) environments, accessed on desktop or laptop PCs, depicted a roadside scene that enabled participants to discuss the approach, identification, and onboarding tasks; immersion in a 360-degree video of a taxi interior enabled them to discuss in-transit, arrival, and exit/payment interactions. Verbal prompts encouraged participants to envision, depict, and discuss HMIs.

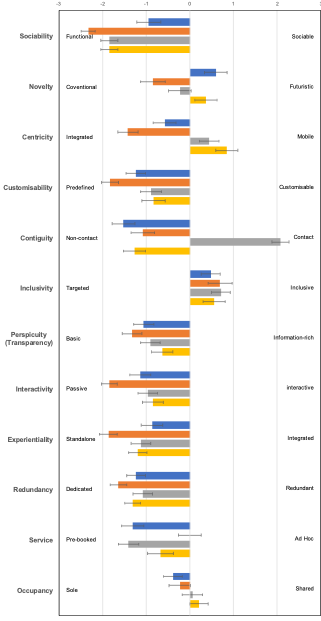

Analysis identified 12 semantic dimensions of an autonomous taxi user experience to describe a continuum of experiences, for example “functional” versus “sociable” and “conventional” versus “futuristic,” which provided a preliminary framework for experience design. Four autonomous taxi HMI concepts were developed, and the interactions of 53 participants were video recorded for an evaluation study using the dimensions. Significant differences were found between experiences on most dimensions, suggesting participants were able to use the dimensions as a meaningful framework for evaluating HMIs.

Keywords

Autonomous Taxis, HMI design, user experience (UX) design, AV MaaS, Virtual Reality

Introduction

Future Mobility

The Autonomous Vehicle Mobility-as-a-Service (AV MaaS) concept is predicted to facilitate and improve traffic flow and potentially remove the need for parking spaces in cities (Merat et al., 2017). On-demand, often shared, autonomous vehicles (AVs) could supplement other forms of public transport, complementing mass public transport by providing first/last-mile solutions (Krueger et al., 2016). AV MaaS also addresses the need for more sustainable and ethical travel, which invites behavioral shifts from conventional, single-occupancy cars to shared, electric vehicles, or “RoboTaxis” (Fagnant & Kockelman, 2014). In addition to these benefits, Butler et al. (2021) suggest that this vision of mobility should improve social equity in a variety of ways.

This view of future MaaS is predicated on the provision of fully autonomous (“driverless”) vehicles. Estimates suggest that 1-2% of fleet (taxi/ride-share) vehicles in the US will be AVs by 2025, rising to 70-85% by 2060 (Mahdavian et al., 2021). While much attention has been placed on enabling the underlying autonomy of these vehicles, there is little established knowledge of exactly how passengers will use and interact with these vehicles during a journey. This understanding is particularly important because, in the absence of a human driver, all interactions will likely take place through, or be moderated by, technology in the form of human-machine interfaces (HMIs). Exploring and understanding the user experience that these HMIs create is therefore paramount.

Most existing knowledge about interactive in-vehicle technologies and HMIs, and their impact on users’ attitudes and behaviours, applies to vehicles in which a driver is present. It is important to consider how HMIs can support the practical accomplishment of different tasks when there is no driver, for example, to determine the most appropriate technology and interaction style to allow a passenger to request that their taxi stop briefly so they can buy supplies from a shop before resuming their journey. From the limited knowledge specifically related to the AV MaaS context, it is apparent that there is no consensus on ideal HMI experiences. For example, spoken natural language interfaces (NLIs) (achieved in that study through Wizard-of-Oz techniques) have been shown to improve passengers’ experiences in fully autonomous ‘pod’ vehicles and to enhance their acceptance of the technology (Large et al., 2019). Although a conversational tone might be appropriate for parts of the journey, there may be situations in which users need to be explicit in guiding the vehicle (Tscharn et al., 2017), for example, to find an appropriate space to stop or exit the vehicle. In these instances, users might need to use very explicit language, and their intent may be better communicated by pointing or gesturing. Tscharn et al. (2017) combined speech with gestures to let passengers communicate non-critical, spontaneous situations such as “stop-over-there” instructions. They compared their speech-and-gesture interface with a speech-and-touchscreen interface in a simulator study, and the results suggest that participants found the speech-and-gesture interface more intuitive and natural for providing instructions.
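To make the “stop-over-there” idea concrete, the sketch below shows one simple way a spoken deictic command could be fused with a pointing direction to pick a stopping place. It is not Tscharn et al.’s (2017) implementation: the coordinate frame, the `Candidate` type, the `max_gap` tolerance, and the keyword test on the utterance are all hypothetical simplifications.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    """A hypothetical stopping place, in metres relative to the vehicle."""
    name: str
    x: float  # positive = to the east of the vehicle
    y: float  # positive = to the north of the vehicle


def bearing_deg(x: float, y: float) -> float:
    """Bearing from the vehicle to (x, y): 0 = north, increasing clockwise."""
    return math.degrees(math.atan2(x, y)) % 360


def angular_gap(a: float, b: float) -> float:
    """Smallest absolute difference between two bearings, in degrees."""
    d = abs(a - b) % 360
    return min(d, 360 - d)


def resolve_stop_over_there(
    utterance: str,
    pointing_bearing: float,
    candidates: list[Candidate],
    max_gap: float = 30.0,
) -> Optional[Candidate]:
    """Fuse a deictic spoken command with a pointing gesture.

    Returns the candidate closest to the pointed direction, or None if the
    utterance is not a "stop over there" request, there are no candidates,
    or nothing lies within max_gap degrees of the gesture.
    """
    if "over there" not in utterance.lower() or not candidates:
        return None
    best = min(
        candidates,
        key=lambda c: angular_gap(bearing_deg(c.x, c.y), pointing_bearing),
    )
    if angular_gap(bearing_deg(best.x, best.y), pointing_bearing) > max_gap:
        return None
    return best


stops = [Candidate("bay_left", -10.0, 10.0), Candidate("bay_right", 10.0, 10.0)]
choice = resolve_stop_over_there("Please stop over there", 50.0, stops)
print(choice.name if choice else "no match")  # bay_right
```

The speech channel only signals *that* a stop is wanted; the gesture supplies *where*, which is the division of labour the speech-and-gesture concept relies on.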

The necessary interactions and exchanges during a journey are complex and disparate. The journey may begin in the home, to select and book the service; extend to the roadside, to identify the correct taxi, gather and load luggage, climb aboard, etc.; continue within the vehicle itself, to change the route, request an unscheduled stop, adjust comfort settings, etc.; and conclude post journey, to confirm the destination is correct, make/confirm payment, provide feedback, etc. (Hallewell et al., 2022). Kim et al. (2020) identify several journey tasks that will need to be replaced in an unmanned taxi journey, and they highlight that the experience provided will need to compete actively with a manned taxi experience. Out of necessity, then, the passenger (user) is likely to encounter several different HMIs, and several use cases, during a single journey. The overall UX is thus determined by all of these collectively; a failure at one stage may negate all previous and subsequent benefits. However, several different stakeholders or providers may be responsible for defining this UX, including service providers, vehicle manufacturers, local law enforcement and governance organizations, and so on. Given the ‘distributed’ nature of these interactions/HMI experiences, the ways in which they are combined and intertwined are also important. In other words, not only must the individual HMIs deliver, but so must the ways in which they are integrated, including factors such as the handover and exchange of functionality and awareness, for example, from a user’s smartphone to a touchscreen embedded within the vehicle.

With the emergence of new AV technologies and a revolution in consumer electronics (from connected music services to conversational agents), our interactions with and inside vehicles are fundamentally changing (Pettersson & Ju, 2017). Pettersson and Ju (2017) also suggest that traditional interface designers may lack the understanding required to address all the social and cultural norms within which such vehicles will operate in the future. Detjen et al. (2021) reviewed literature on improving acceptance of AVs through interaction design and highlighted that fully autonomous vehicles introduce a unique opportunity to focus on positive design. They argue that designers will be able to take an experience-oriented view of vehicle/HMI design because they no longer need to consider how the vehicle’s interior and functionalities might interfere with driving tasks, and that design should focus on creating possibilities, for example, by creating conditions for comfort and social interaction. An open question from their review of these experiences is ‘How can conditions simulate and evaluate the hedonic qualities of a vehicle system that doesn’t yet exist?’ This study addresses that question by taking a user-design approach to the user experience within a simulated AV environment, in order to consider how a positive user environment might be developed.

User Design

User-centred design activities conducted at the very beginning of concept design/development are a primary approach to ensure that the resulting product/service is pleasurable and meets users’ needs (Sun et al., 2019). A recent change in the automotive industry is a move away from technology-driven designs towards more customer-focused, user-centered designs to better meet potential customers’ needs (Bryant & Wrigley, 2014). Because AV MaaS presents a new and unique experience to end-users, a new research avenue has opened in which findings from traditional automotive user-design studies, directed at reducing driver distraction and facilitating driving tasks, can be largely set aside in favor of experiential qualities. For an AV MaaS model, user requirements related to UX become a central focus (Lee et al., 2021).

Within the AV design sector, there is limited (yet growing) evidence describing the UX that potential end-users might expect of an AV MaaS, owing to practical constraints (such as safe access to driverless vehicles) and conceptual constraints (such as the difficulty of imagining an experience that very few people have yet had). Of the limited user-requirements/design evidence available, Tang et al. (2020) investigated the types of activities that people might wish to conduct during automated driving (such as Level 3 automation, in which the driver can hand over control to the vehicle during certain parts of the journey). Using a simulator and a user-enactment approach, they exposed participants to scenarios in which autonomous driving occurred, leaving participants free to conduct activities during the journey. Participants discussed a variety of non-driving activities: entertainment, rest, social activity, work, study, and daily routine. The main activities observed in the sample during the simulated drive were resting and using a phone and, when another passenger was present, talking.

Tang et al. (2020) identified several requirements for the information that would be needed and the functions that the in-car HMI would need to perform to support this conception of the UX. However, they also found that some participants expected more advanced functions that suggested a particular kind of experience, such as the vehicle adjusting its driving mode based on the weather and providing a 360-degree projection of the car’s awareness. Similarly, Large et al. (2017) (see also Burnett et al., 2019) invited participants to a simulated 30-minute highly automated journey, repeated over five days, and instructed them to bring activities and objects that they would expect to be able to use in an AV. While the vehicle was in automated mode, participants engaged in several physically and cognitively engaging activities, predominantly reading (paper-based as well as digital materials), watching videos, and using personal communication facilities (social media, text messaging, and phone calls); they also ate, drank, and engaged in personal grooming. The design implications include a dynamic physical space design to enable such activities (seating position and support for devices) as well as connectivity to enable internet-based activities. However, such studies relate to vehicles that are not fully autonomous, and the activities identified were conducted only while control was handed to the vehicle. This differs from an experience in which there is no expectation that control could be handed back to a driver at any time.

In other research, Lee et al. (2021) performed a literature review and stakeholder/potential end-user interviews to elicit user needs and design requirements for AV UX. They present a design taxonomy of user needs for fully autonomous vehicles, including personalization, customization, accessibility, personal space, and so on. Their main finding was a clear need for personalized systems and interfaces, and a need to explore how to support and enhance passengers’ expected activities and experience. Their taxonomy includes variables that differentiate the experience, for example, who is riding, whether they are sharing the journey, and the activities they might be engaged in. Some of these variables were highlighted when considering design challenges, and Lee et al. (2021) also present case studies in which user needs are presented for certain combinations of variables. Their analysis raises important questions about how such variables can influence users’ needs and requirements, and hence about the design challenge of meeting the needs of people with differing preferences who use the vehicles under differing circumstances. It therefore makes sense to extend knowledge of how positive UXs can be created to realize the potential of AV MaaS by further considering the interplay of these (and other) variables.

Our current study was conducted within the ServCity project (https://www.servcity.co.uk/), which examines technology-based solutions, people-based needs and considerations, and scalability questions, with the ultimate goal of suggesting how AVs can become an everyday experience for everyone in cities. Through developing AV technology, modelling scalability, and examining end-user perspectives, ServCity aims to ensure the UX is as intuitive, inclusive, and engaging as possible. The University of Nottingham’s role in the project is to examine and propose UX considerations for this future service. The purpose of the study is to consider what kind of UX would be expected and wanted in an AV MaaS and how HMIs could provide it, which could inform the design of UXs for future autonomous taxis. We therefore present an overview of our analysis and results pertaining to HMI design and functionality, which led to several design dimensions that could be used to create different UXs. We posit that, by considering the types of target end-users for a service under development, a unique experience can be created by selecting the most appropriate or desired end of each dimension for those users. Once an experience type has been designed, HMIs can be added to provide that experience; for example, if users expect a futuristic experience, HMIs should be developed towards this specification. This paper represents an attempt to understand the possible HMI/UX considerations introduced by the AV MaaS vision and how new and unique UX needs might be addressed within a service design; we achieved this by using a novel virtual reality (VR) approach to simulate a future AV MaaS experience and by providing an example of how we used the dimensions as a tool to evaluate AV MaaS HMI concepts.
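The selection process posited above can be sketched as a small data model: each dimension is a continuum between two semantic anchors, a target user group is described by a desired position on each dimension, and the nearer anchor becomes part of the HMI design brief. Only the two dimensions named in the Abstract are included; the 0.0-1.0 position scale, the 0.5 cut-off, and all function names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    """A design dimension: a continuum between two semantic anchors."""
    left: str
    right: str


# Two of the twelve dimensions named in the Abstract; the others are omitted.
FUNCTIONAL_SOCIABLE = Dimension("functional", "sociable")
CONVENTIONAL_FUTURISTIC = Dimension("conventional", "futuristic")


def target_anchors(profile: dict[Dimension, float]) -> dict[Dimension, str]:
    """Map each desired position (0.0 = left anchor, 1.0 = right anchor)
    to the nearer anchor; exactly 0.5 is assigned to the right anchor."""
    anchors = {}
    for dim, position in profile.items():
        if not 0.0 <= position <= 1.0:
            raise ValueError(f"position {position} is outside [0, 1]")
        anchors[dim] = dim.left if position < 0.5 else dim.right
    return anchors


def design_brief(profile: dict[Dimension, float]) -> list[str]:
    """Turn a target-user profile into HMI design requirements."""
    return [
        f"Develop HMIs towards a {anchor} experience"
        for anchor in target_anchors(profile).values()
    ]


# Hypothetical profile for a user group expecting a businesslike, futuristic ride.
brief = design_brief({FUNCTIONAL_SOCIABLE: 0.2, CONVENTIONAL_FUTURISTIC: 0.9})
print(brief)
# ['Develop HMIs towards a functional experience', 'Develop HMIs towards a futuristic experience']
```

Keeping positions continuous rather than binary leaves room for intermediate experiences, even though this sketch only reports the nearer end.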

Methods

This paper reports on two studies. The first study establishes design dimensions to inform HMI/UX design. In the second, participants used these design dimensions to assess several simulated HMI concepts. Both studies were approved by the University of Nottingham’s Faculty of Engineering Ethics Review Committee.
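Because the second study rates several HMI concepts on the same set of dimensions, its data can be summarized as a per-concept profile. The sketch below computes the mean rating of each concept on each dimension. The record layout, dimension label, and ratings are made up for illustration, and the sketch produces descriptive means only. It does not perform the significance testing reported for the evaluation.

```python
from statistics import mean

# Hypothetical record: (participant_id, hmi_concept, dimension, rating).
# The rating scale and labels are placeholders, not the study's materials.
Rating = tuple[str, str, str, int]


def concept_profiles(ratings: list[Rating]) -> dict[str, dict[str, float]]:
    """Return {concept: {dimension: mean rating across participants}}."""
    grouped: dict[str, dict[str, list[int]]] = {}
    for _participant, concept, dimension, score in ratings:
        grouped.setdefault(concept, {}).setdefault(dimension, []).append(score)
    return {
        concept: {dim: mean(scores) for dim, scores in by_dim.items()}
        for concept, by_dim in grouped.items()
    }


# Made-up ratings, for illustration only.
example = [
    ("P01", "Concept A", "functional-sociable", 2),
    ("P02", "Concept A", "functional-sociable", 4),
    ("P01", "Concept B", "functional-sociable", 6),
    ("P02", "Concept B", "functional-sociable", 7),
]
print(concept_profiles(example))
```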

Study 1: Developing Design Dimensions

A user-design approach was taken wherein participants attended a VR workshop to discuss a joint experience with a virtual AV MaaS vehicle. This work took place during the initial COVID-19 restrictions in the UK, which meant that the activity needed to be conducted remotely. We repurposed an online pro-social VR environment, in this case, Mozilla Hubs by Mozilla™ (https://hubs.mozilla.com/docs/welcome.html), to conduct a series of collaborative design workshops intended to inform the design of HMIs for future AV MaaS, which participants could contribute to from their own homes (Large et al., 2022).

Participants

Twenty-five participants (16 male (64%), 7 female (28%), and 2 undisclosed (8%)) took part in the study. Twenty-three participants returned demographics questionnaires. The participants were recruited via email using convenience sampling and primarily comprised staff and students at the University of Nottingham and members of the ServCity project consortium (other researchers, engineers, and project managers). The largest group of participants (n=12, 48%) was aged between 25 and 34 (the modal range), although participants came from all the adult age ranges specified.
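The reported percentages can be checked directly. They use all 25 participants as the denominator, not the 23 returned questionnaires. The same arithmetic shows why 12 of 25 (48%) is the largest group rather than a majority. The helper name is illustrative only.

```python
def percentages(counts: dict[str, int], total: int) -> dict[str, float]:
    """Express each count as a percentage of total, rounded to one decimal place."""
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


N_PARTICIPANTS = 25
gender = {"male": 16, "female": 7, "undisclosed": 2}
assert sum(gender.values()) == N_PARTICIPANTS

print(percentages(gender, N_PARTICIPANTS))
# {'male': 64.0, 'female': 28.0, 'undisclosed': 8.0}

# The modal age group (25-34) holds 12 of 25 participants: 48%, i.e. not a majority.
aged_25_34 = percentages({"25-34": 12}, N_PARTICIPANTS)["25-34"]
print(aged_25_34, aged_25_34 > 50)  # 48.0 False
```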

Seven workshops were scheduled to take place, and respondents were able to choose to attend one workshop, with a maximum of five participants permitted to join each workshop on a “first come, first served” basis. The maximum number of five participants was selected based on prior planning meetings and testing sessions in which the research team experienced technical issues when large numbers of people and 3D objects were present within the Hubs environment. A maximum of five enabled a better UX for the participants as well as the facilitators present.
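The first come, first served booking rule can be expressed as a short allocation routine. This is a hypothetical sketch. The text does not say how requests for an already-full workshop were handled, so the waitlist here is an assumption, and the workshop and participant labels are placeholders.

```python
MAX_PER_WORKSHOP = 5  # cap stated in the text


def allocate(
    requests: list[tuple[str, str]],
    workshops: list[str],
    cap: int = MAX_PER_WORKSHOP,
) -> tuple[dict[str, list[str]], list[str]]:
    """Allocate (participant, chosen_workshop) requests in arrival order.

    A request is accepted if its workshop still has a free place; otherwise the
    participant goes on a waitlist (a hypothetical step, not described in the text).
    """
    rosters: dict[str, list[str]] = {w: [] for w in workshops}
    waitlist: list[str] = []
    for participant, choice in requests:
        if choice not in rosters:
            raise KeyError(f"unknown workshop {choice!r}")
        if len(rosters[choice]) < cap:
            rosters[choice].append(participant)
        else:
            waitlist.append(participant)
    return rosters, waitlist


workshops = [f"W{i}" for i in range(1, 8)]  # seven workshops
# Six people ask for W1 before anyone else; one then asks for W2.
requests = [(f"P{i:02d}", "W1") for i in range(1, 7)] + [("P07", "W2")]
rosters, waitlist = allocate(requests, workshops)
print(rosters["W1"], waitlist, rosters["W2"])
```

Seven workshops of up to five places give 35 places, enough for the 25 participants who took part.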

Mozilla Hubs

Mozilla Hubs was selected to host the workshops. Hubs is promoted as a multi-platform experience that runs in a web browser. It provides pre-configured virtual spaces, or rooms, which can be selected and inhabited privately; alternatively, users can create their own virtual meeting spaces using the Bespoke Scene Development software, Spoke (accessed December 1, 2021, at https://hubs.mozilla.com/spoke). We used this customizability to design environments for our studies. In addition, Hubs supports the porous use of media: images and 3D models from the web can easily be searched for and brought into the virtual environment in real time to augment the room and experience, and an embedded Draw function allows users to create bespoke annotations and illustrations on an ad-hoc basis. These functionalities were necessary to support our design study.

Hardware and Software

Hubs enables novice users (or one-time participants in a research study) to join without high-end equipment, so they can receive its pro-social benefits with minimal investment of cost, time, and effort. Although Hubs can be used across a wide range of devices (VR headsets, mobile phones, etc.), we asked all participants to join using a laptop or desktop PC, to give all participants a similar experience and ensure that the required functionalities were available to everyone. Participants therefore needed a PC/Mac with an internet connection and a browser (Chrome™ or Firefox™ were recommended). The expectation was that they would take part remotely (for example, from their own home). Microsoft Teams™ was also used throughout each workshop, initially to greet participants and guide them to the Hubs room, and then as a technical support channel and to capture participants’ conversations (spoken, using the built-in recording facility, and written, using the chat function).

Mozilla Hubs Rooms

Two interactive rooms were created. The first room depicted a road scene in which several autonomous taxis were located at the roadside (Figure 1). Participants joined the room (spawned) a short distance from the taxi and were required to maneuver their avatar (walk) towards it. They were then able to move freely around the vehicle. Pre-selected objects were available for participants to attach to the vehicle to give them an idea of existing technologies and conceptions for HMIs (for example, a keypad, a touchscreen, and a digital assistant icon), yet participants were reminded that they should not constrain their thinking to these examples. The examples were selected based on an internal project literature review (summarized in the Future Mobility section) in which HMI options for AV MaaS concepts had been investigated, and the corresponding objects were selected from the 3D object repository available through Hubs. The second room depicted a taxi journey from within the vehicle, with a 360-degree real-world video surrounding the vehicle so that participants appeared to be actually travelling on a journey (Figure 2). In the second room, participants spawned inside the vehicle and were able to look around the vehicle and through the windows to the dynamic, unfolding road scene, but they were restricted from stepping out of the vehicle. Objects were not pre-populated within this scene owing to the limited visual space inside the vehicle; however, participants were reminded of the search facility for finding objects to place and the Draw function for drawing objects that were not already available. In each workshop, participants visited first the road scene and then the in-vehicle journey scene, together as a cohort.

Figure 1. The first 3D environment with a road scene for hailing the taxi.

Figure 2. The second 3D environment with a dynamic 360-degree video for exploring internal in-transit, arrival, and disembarkation activities.

Workshop Procedure

A participant information sheet, a consent form, and a short demographics questionnaire were provided by email to each participant before their scheduled workshop. On receipt of the consent form, participants were sent a link to a dedicated Hubs training room so that they could, if they wished, practice interacting with the virtual 3D environment before the study. The extent to which participants used the training room was not recorded.

For the study proper, participants initially joined a Microsoft Teams meeting. During the meeting, an outline of the study procedure was provided verbally, and participants were given further instructions on how to interact within Hubs, if requested. They were then guided to Hubs using a hyperlink posted in the Teams meeting chat. Three researchers joined the participants within Hubs: one to facilitate the discussions and activities, one to deal with any operational difficulties or technical problems, and one to provide a first-person view of the workshop, which was recorded using Open Broadcaster Software (OBS) (accessed December 1, 2021, at https://obsproject.com/). The latter researcher chose a video camera as their avatar and changed their screen name to “CAMERA” for transparency. Participants were free to select an avatar of their choosing and were encouraged to use their unique participant number as their avatar name to ensure anonymity. Participants were also asked to remain in the Teams meeting (with their microphones enabled) throughout the study, primarily to provide a recording of spoken dialogue for subsequent analysis but also to serve as a technical support channel. Consequently, participants were asked to mute their microphones in Hubs to avoid interference or feedback. Capturing audio through Teams also overcame potential problems of missing or losing spoken content due to the spatial audio effects in Hubs: conversation could be lost if a participant’s avatar moved behind the taxi, as might be expected in an equivalent real-world situation.

Participants visited the road scene first, spending 30-40 minutes there as dictated by the facilitator. A portal was created between the rooms to allow participants to move seamlessly (and en masse, following instructions from the facilitator) into the vehicle (the second room), in which they spent another 30-40 minutes. Participants visited the rooms in a predetermined order and were told when it was time to move on to the second room. In each room, participants were verbally prompted to imagine themselves in various scenarios in which they might want or need to use an autonomous taxi (for example, an evening out in the city, going to the train station, or attending a work meeting). Participants were asked to consider whether their opinions and needs changed if they were travelling with friends or dependents or, in a possible future situation, with somebody they did not know. They were also asked to consider specific tasks they would need to accomplish at different journey stages (Table 1). Our journey stages were informed by previous research conducted by the research team as part of the ServCity project (Hallewell et al., 2022), which included a series of interviews resulting in the creation of nine personas and twelve scenarios, a hierarchical task analysis, and a distributed cognition analysis.

Table 1. Journey Stages and Exemplar Tasks Explored During Workshops

| Journey Stage | Related Tasks (Examples) |
|---|---|
| Approach | Check progress of taxi; locate and confirm correct taxi; negotiate arrival of taxi curbside |
| Ingress | Open door; coordinate children/dependents and other travellers; load luggage and assistive devices |
| Transit | Follow journey progress; modify route; make an impromptu stop |
| Arrival | Confirm destination is correct; negotiate final approach; modify drop-off location |
| Egress | Unlock and exit taxi; negotiate retrieval and off-load luggage |
| Payment | Make or confirm payment; add a gratuity (for a teleoperator, or other); leave feedback |
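For facilitation or later coding, Table 1 can be held as an ordered mapping from journey stage to exemplar tasks, so that prompts follow the same order as the journey. The enum and the checklist function below are hypothetical conveniences, not part of the study materials.

```python
from enum import Enum


class Stage(Enum):
    """Journey stages from Table 1, in journey order."""
    APPROACH = 1
    INGRESS = 2
    TRANSIT = 3
    ARRIVAL = 4
    EGRESS = 5
    PAYMENT = 6


EXEMPLAR_TASKS: dict[Stage, list[str]] = {
    Stage.APPROACH: ["Check progress of taxi", "Locate and confirm correct taxi",
                     "Negotiate arrival of taxi curbside"],
    Stage.INGRESS: ["Open door", "Coordinate children/dependents and other travellers",
                    "Load luggage and assistive devices"],
    Stage.TRANSIT: ["Follow journey progress", "Modify route", "Make an impromptu stop"],
    Stage.ARRIVAL: ["Confirm destination is correct", "Negotiate final approach",
                    "Modify drop-off location"],
    Stage.EGRESS: ["Unlock and exit taxi", "Negotiate retrieval and off-load luggage"],
    Stage.PAYMENT: ["Make or confirm payment",
                    "Add a gratuity (for a teleoperator, or other)", "Leave feedback"],
}


def facilitator_checklist() -> list[str]:
    """Flatten Table 1 into 'Stage: task' lines, following the journey order."""
    return [
        f"{stage.name.title()}: {task}"
        for stage in Stage  # Enum iteration follows definition order
        for task in EXEMPLAR_TASKS[stage]
    ]


for line in facilitator_checklist():
    print(line)
```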

The facilitator utilized a predetermined list of verbal prompts and probes to encourage participants to consider how they would complete each task and how that might translate into a specific HMI design, although not all prompts and probes were used in all workshops. Participants were encouraged to talk through their thoughts and ideas, create their envisioned HMI solutions in real-time by selecting and positioning (dragging and dropping) appropriate 3D models, and use the integrated Draw function in Hubs to illustrate their thoughts. Several 3D models were provided as examples in the environment (which were introduced to participants at the start of the exercise) although participants were able to draw or select anything they desired using the Insert Object search feature in Hubs. The facilitator aided participants as required or requested. Participants were specifically told and reminded not to be confined by their view of current technology and to imagine what their ideal HMI solution might be, even if they believed that this technology was not currently available. Where a suitable existing model could not be found, they were asked to create their ideas using the Draw function.

The images in Figure 3 are screenshots taken from the videos, which give a sense of where participants envisioned physically placing HMIs (or visual stand-ins for HMIs where an actual example could not be sourced or was impossible to depict visually, for example, Bluetooth connectivity).

Figure 3. Example of participants’ HMIs and objects placed inside the vehicle.

Analytical Procedure

The discussions were first transcribed for ease of analysis. In total, almost seven hours of recorded dialogue were captured. For speed and efficiency, the transcriptions recorded a general sense of what was said rather than adopting the strict, formal conventions required for, say, conversation analysis. Thus, while the transcripts may not have recorded utterances verbatim, the key elements pertaining to HMI design were documented.

To develop the dimensions, we employed a two-stage approach. The first stage of analysis involved scrutiny of participants’ comments to identify and code the technological construct and medium/mechanism of interaction, or HMI (these were often related). The workshop transcripts were scrutinized for mentions of specific technologies or interfaces that participants suggested for each journey stage, which produced a list of HMI types that participants suggested for specific journey stages (Table 2).
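The first analysis stage was carried out manually by scrutinizing transcripts. Purely to illustrate its output (a set of HMI types per journey stage, as in Table 2), the sketch below tags transcript segments using a keyword lexicon. The lexicon, the segment format, the regular expressions, and the sample segments are all hypothetical (the segments are invented, not quotations), and keyword matching would miss many mentions that a human coder would catch.

```python
import re
from collections import defaultdict

# Hypothetical keyword lexicon mapping HMI types to regular expressions.
HMI_PATTERNS: dict[str, list[str]] = {
    "QR code": [r"\bqr\b"],
    "Touchscreen": [r"\btouch ?screen"],
    "Voice control": [r"\bvoice\b", r"\btalk to (the )?(car|vehicle|taxi)\b"],
    "Phone app": [r"\bapp\b"],
}


def code_segments(segments: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Tag (journey_stage, transcript_text) segments with the HMI types they mention.

    Stages with no matching segment are omitted from the result.
    """
    coded = defaultdict(set)
    for stage, text in segments:
        lowered = text.lower()
        for hmi_type, patterns in HMI_PATTERNS.items():
            if any(re.search(p, lowered) for p in patterns):
                coded[stage].add(hmi_type)
    return dict(coded)


# Invented example segments, not taken from the study transcripts.
segments = [
    ("Ingress", "I'd just scan a QR code on the door"),
    ("Transit", "I'd want to talk to the car to change the route"),
    ("Transit", "A touchscreen in the back for the music"),
    ("Payment", "Just tap my card"),
]
print(code_segments(segments))
```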

Similar to the variables highlighted in the UX taxonomy developed by Lee et al. (2021), which differentiate experiences by who is riding, whether they are sharing the journey, and the activities that they might be engaged in, we noted that participants’ opinions and preferences did not necessarily converge on one specific HMI solution for a specific task or activity. For example, although a conversation or NLI may have been suggested by two different participants for the same task, the first participant may have stated that they would prefer the exchange to be chatty and convivial, whereas the second may have preferred the spoken dialogue to be limited and highly functional. Furthermore, some participants were convinced that the interaction would happen entirely through a mobile phone app, whereas others stated that they would rather keep their phone available for other activities during the journey and so wanted to hand over tasks to a vehicle interface. Given the variance captured in the taxonomy of Lee et al. (2021), in which each variable has differing levels (for example, the number of people or the modality of the interface), we expected themes representing continuums, or design dimensions, in which ideas and concepts shared some commonality but varied in application. The second stage of the analysis therefore focused on identifying these dimensions of variance around a particular concept. We produced preliminary semantic anchors for each dimension to represent its limits; these were assigned based on suggestions from members of a team of five Human Factors experts involved in the project. The emergent design dimensions were discussed and refined by the research team to gain consensus, remove redundancy, and agree on the most appropriate semantic anchors for each dimension.
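One way to record the second analysis stage is to treat each design dimension as a pair of semantic anchors and to tally which end each coded participant statement leans towards. A dimension on which statements fall at both ends reflects the lack of convergence described above. The `DesignDimension` type is an illustrative assumption, as is the mapping of the chatty versus highly functional NLI preferences onto the “sociable” and “functional” anchors.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignDimension:
    """A concept whose variants range between two semantic anchors."""
    concept: str
    left_anchor: str
    right_anchor: str


def placement_summary(dim: DesignDimension, coded: list[str]) -> dict[str, object]:
    """Tally which anchor each coded participant statement leans towards."""
    counts = Counter(coded)
    unknown = set(counts) - {dim.left_anchor, dim.right_anchor}
    if unknown:
        raise ValueError(f"statements coded to unknown anchors: {sorted(unknown)}")
    left, right = counts[dim.left_anchor], counts[dim.right_anchor]
    return {
        dim.left_anchor: left,
        dim.right_anchor: right,
        # Statements at both ends suggest a continuum rather than a consensus.
        "spans_both_ends": left > 0 and right > 0,
    }


# Hypothetical coding of the two NLI preferences described above.
dialogue_style = DesignDimension("spoken dialogue style", "functional", "sociable")
print(placement_summary(dialogue_style, ["sociable", "functional"]))
# {'functional': 1, 'sociable': 1, 'spans_both_ends': True}
```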

Results

Identification of HMI Concepts

During the first stage of analysis, we sought to identify the kinds of technologies that people envisioned using in an AV MaaS, which we then categorized according to the technological construct and mechanism (HMI). Examples of the HMI types that were discussed are presented in Table 2.

Table 2. HMI Concepts Discussed by Participants

| Journey Stage | Suggested HMI Types/Mechanism | Description |
|---|---|---|
| Booking | Kiosk | A touchscreen could be provided to enable bookings. |
| Hailing | Scan a QR code | Passengers could scan a QR code with their phone to identify their vehicle and identify themselves to the vehicle. |
| | Chip and pin | Passengers enter a unique code into the vehicle’s keypad. |
| | Google Glass | Passengers could hail taxis through eye movement and book through the glass interface. |
| | Smartwatch | A hailing gesture signals to taxis that the passenger is requesting the service. |
| Approach | LCD screen | An LCD screen displays the user’s unique identification code/icon. |
| | “Available” light | A light indicates the availability of the vehicle. |
| | Proximity noise/audio signal or light signal | A sound or light(s) come on when the user is detected nearby via communication with their phone. |
| | Digital assistant | User talks to the vehicle to establish whether it is available and whether it is their booked vehicle. |
| Ingress | Automatic unlocking | Vehicle automatically unlocks when the user or their phone is detected in proximity. |
| | QR code access | User scans a QR code with their phone. |
| | Travel card | User taps the card on a receiver located on the vehicle. |
| | Card reader/Chip and pin | User’s bank/credit card is tapped onto a receiver, or they insert the card into a reader and enter their PIN. |
| | Voice control/Virtual assistant | User speaks a command to the vehicle to open the door. |
| | Keypad | User types a unique code into a keypad located on the vehicle. |
| | Phone app | User unlocks the doors via a smartphone app. |
| | Fingerprint scanner | User presents their finger to a scanner. |
| Transit | Voice control | User speaks commands to the vehicle. |
| | Designated function button (such as park at next safe space) | A physical button is provided to perform a specific pre-defined task. |
| | Artificial intelligence tour guide | A narration is provided for the user based on the vehicle’s location at that point in time. |
| | Augmented reality tour guide | A visual guide to the outside world is provided through screens or presented on the windows. |
| | Keyboard | User is provided with a keypad to type in instructions/destination. |
| | Touchscreen | A touchscreen is provided for the user to interact with. |
| | Digital assistant/Artificial intelligence | An assistant identifies common tasks and offers advice or instruction for completing the task. |
| | Haptic feedback (such as to control windows) | User waves their hand over a sensor to adjust the windows. |
| | Climate control dashboard | A climate control dashboard, as in conventional vehicles, is provided. |
| | Holographic/Augmented reality vehicle controls | The interface for controlling the journey is presented as a hologram, or the user wears augmented reality devices to see the controls. |
| | Bluetooth connectivity for entertainment options | The vehicle’s entertainment system connects to the user’s phone via Bluetooth. |
| Emergency | Designated function button (such as emergency stop) | A physical button is provided to perform a specific pre-defined task. |
| | Communication with an operator | A communication system is provided to contact a human operator. |
| | Voice control | User speaks commands to the vehicle. |
| Arrival | Multi-modal arrival notification (visual screen, phone app, and audio alert) | Several notification types alert the user to their arrival at their destination. |
| | Touchscreen | The user interacts with a touchscreen to acknowledge their arrival. |
| Payment | Contactless card payment | User waves their bank/credit card over a wireless receiver. |
| | Travel card | User waves a (proprietary/city-based) travel card over a wireless receiver. |
| | Cash deposit facility | User inserts cash into a coin/note slot. |
| | Chip and pin | User inserts their card into a machine and enters their PIN. |

Egress |

Environmental change to bring about alertness |

Lighting/noise/temperature is changed to wake the passenger or alert them to their arrival. |

|

Alert sound |

Noise is played to alert the passenger of their arrival. |

|

|

Designated function button (such as to open the trunk) |

A physical button is provided to perform a specific pre-defined task. |

Interaction Style

As noted, although some participants talked about the same HMI type for certain tasks, they differed in how they would like to interact with that HMI. For example, participants discussed the option of an artificial intelligence agent offering information beyond journey-related information. One participant offered the suggestion, and another noted the circumstances under which they would like to use that facility:

P1: “Maybe interaction about the robot or the system asking, ‘Do you want to know the news, or do you want to know the weather today?’”

P2: “I think I’d be comfortable if it was just listening to information. Maybe if it was a holiday visit, information (could be) about the things I could see out the window if it was just a presentation read to me, more for leisure use. I don’t think I’d (need) to use that facility (for) everyday use.”

Another pair of participants discussed the option of linking their phone to the vehicle via Bluetooth and using the vehicle as an output for their own entertainment. One participant was concerned about the vehicle listening in on their conversations and using them for marketing purposes:

P3: “It would be nice if it had an entertainment system that you could just attach to through Bluetooth or something.”

P4: “I think being able to play music or the radio would be great or (talk) to someone on the phone over the loudspeaker, but I guess that links in with the microphone listening in, (so) maybe it wouldn’t work. But I don’t think I’d want screens or anything… I’d want to be aware of my surroundings.”

Some participants described contradictions within their own preferences, such as one who would prefer to speak to the vehicle but would rather type their instructions if the voice-based interaction could not understand their accent:

P5: “I think if we have (a) chance to change [HMI/journey details, etc.] in the car, or you can decide before going into the car, I would rather say it. But you know, in English my accent is different. There’s a lot of countries; everyone speaks different. If the machine understands all accents, it’s ok, but in some places even when I talk to a human, they don’t understand well. Pronunciation is different and sometimes you don’t know how to pronounce it, so [a keyboard] is the better one for me just to type it or write it.”

Int: “What if it was multilingual?”

P5: “Yes, if it was multilingual, I would rather just say it.”

Some participants noted the need for multiple types of HMI as a backup, for example, when their phone was not working or when they did not want to use their phone for a particular task:

P6: “For double backup, other than the screen on the taxi, actually maybe we can use our phone also to control the car for extra backup for the device if something (is) wrong with the device… so the input can be either from the taxi or the phone.”

A secondary thematic analysis was therefore conducted on the workshop data, focusing specifically on areas in which participants discussed competing forces within their preferences or needs. This analysis concentrated on where clear dimensions could be identified from the data. For example, the following quote highlights differing requirements for small talk:

P7: “Some people like to talk to the driver as they drive, so if you’ve got a sufficiently advanced Artificial Intelligence, you can use that to do that; although some people—if it’s a new technology—would feel slightly less comfortable conversing with an Artificial Intelligence.”

From such instances, a “sociability” dimension was identified and assigned the semantic anchors “sociable” at one end and “functional” at the other. Notably, this dimension does not necessarily restrict the interaction to a particular HMI; a sociable exchange could be provided by many different HMIs, not only those based on spoken language.

The paired anchors of each dimension highlight competing forces on design, with goals that could be realized through either the type or the functionality of the chosen HMI. As such, there is not necessarily a right or wrong solution.

Design Dimensions

The design dimensions are presented in Table 3, with descriptions that demonstrate their applicability to the design of AV MaaS and to HMI design more broadly. Importantly, both ends of each dimension represent achievable and desirable goals (neither end is inherently good or bad). Rather, the intention is that designers can use each dimension to focus on the values and attributes of an interaction, and then consider, shortlist, or evaluate potential HMIs and build UXs that deliver the desired result.

Table 3. Design Dimensions for AV MaaS Experiences

| Category | Dimension | Semantic Scale Anchor (Left) | Semantic Scale Anchor (Right) |
|---|---|---|---|
| Interaction | Sociability | Functional: A purely functional interaction between the service and the user. | Sociable: A sociable interaction with the service that extends beyond the necessary interactions. |
| | Novelty | Conventional: Vehicle and service design is guided by established interactions/HMIs and the conventional taxi experience. | Futuristic: Vehicle and service design is guided by novel HMI ideas and a futuristic taxi experience. |
| | Centricity | Vehicle-Centric/Integrated: Most interactions take place on, and are moderated by, an integrated vehicle-based HMI and are therefore only available during a journey. | Mobile: Most interactions take place on a mobile device (such as a smartphone app), enabling a more bespoke/personal experience. |
| | Customizability | Standard/Predefined: All users experience the same service/interactions with no customization. | Customizable: Users can apply their personal preferences to both the HMI and the journey experience (vehicle/driving style). |
| | Contiguity | Non-Contact: The HMI is contact-free (uses gestures and voice activation). | Contact: The HMI requires physical user contact (such as a touchscreen). |
| Functionality | Inclusivity | Targeted: The service is designed for a specific user base (early adopters) with associated requirements and needs. | Inclusive: The service is designed for general/wider public use, and the HMI design incorporates inclusivity considerations. |
| | Transparency | Basic/Minimalistic: Only basic, requisite information is provided. | Transparent/Information-Rich: The HMI relays all vehicle decisions and multiple levels of information to the user. |
| | Interactivity | Passive: The user has little to no control over the experience; most factors are preset/default. | Interactive: The UX has many interaction points with the service, enabling a higher level of user control. |
| | Experientiality | Standalone: The sole focus of the HMI is to deliver the service (transporting the user from A to B). | Integrated/Experiential: The HMI/service is focused on creating a wider experience for the user. |
| | Redundancy | Dedicated: Interaction is designed to occur through a sole/primary HMI. | Redundant: Multiple HMIs are provided that enable the same functions. |
| Contextual Factors | Service | Pre-Booked: The service can only be booked in advance. | Ad Hoc: The service can be used and secured on an ad hoc basis (such as by hailing). |
| | Occupancy | Sole: The service only permits hiring the entire vehicle. | Shared: The service permits partial booking of the vehicle, to be shared with another independent user. |
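
Anyone building an evaluation instrument from Table 3 can encode the dimensions as data. The Python sketch below shows one possible encoding, not the instrument used in this study. The `Dimension` class, its field names, and the `scale_item` text layout are illustrative assumptions, and only the anchor labels (not their descriptions) are carried over.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Dimension:
    category: str
    name: str
    left_anchor: str   # anchor at the left-hand end of the scale in Table 3
    right_anchor: str  # anchor at the right-hand end of the scale in Table 3


# The 12 design dimensions of Table 3 (anchor labels only; descriptions omitted).
DIMENSIONS = [
    Dimension("Interaction", "Sociability", "Functional", "Sociable"),
    Dimension("Interaction", "Novelty", "Conventional", "Futuristic"),
    Dimension("Interaction", "Centricity", "Vehicle-Centric/Integrated", "Mobile"),
    Dimension("Interaction", "Customizability", "Standard/Predefined", "Customizable"),
    Dimension("Interaction", "Contiguity", "Non-Contact", "Contact"),
    Dimension("Functionality", "Inclusivity", "Targeted", "Inclusive"),
    Dimension("Functionality", "Transparency", "Basic/Minimalistic", "Transparent/Information-Rich"),
    Dimension("Functionality", "Interactivity", "Passive", "Interactive"),
    Dimension("Functionality", "Experientiality", "Standalone", "Integrated/Experiential"),
    Dimension("Functionality", "Redundancy", "Dedicated", "Redundant"),
    Dimension("Contextual Factors", "Service", "Pre-Booked", "Ad Hoc"),
    Dimension("Contextual Factors", "Occupancy", "Sole", "Shared"),
]


def scale_item(dim: Dimension, points: int = 7) -> str:
    """Render a hypothetical semantic-differential item: left anchor, numbered points, right anchor."""
    ticks = " ".join(str(i) for i in range(1, points + 1))
    return f"{dim.name}: {dim.left_anchor} {ticks} {dim.right_anchor}"


if __name__ == "__main__":
    print(Counter(d.category for d in DIMENSIONS))
    print(scale_item(DIMENSIONS[0]))  # Sociability: Functional 1 2 3 4 5 6 7 Sociable
```

Keeping the left and right anchors as explicit fields preserves the orientation of Table 3, which matters once scores are transposed to a signed scale.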

Study 2: Using the Design Dimensions

We took a user evaluation approach to assess whether participants could use the design dimensions to evaluate an HMI experience. In Study 2, participants applied the dimensions to judge the experience provided by several different prototypes. If participants were unable to use the dimensions, we would expect no significant differences between the HMIs. If significant differences were found, it could be inferred that the design dimensions represent a promising tool for evaluating an HMI based on the experience it provides. This process would also establish which differences between the HMI experiences participants could identify.

We developed VR resources demonstrating four different HMI types in use for two interrelated journey tasks (identifying the user to the vehicle and gaining entry to the vehicle) and asked participants to rate each experience along the dimensions, each of which was displayed as a seven-point scale.

Participants

Fifty-three participants (47% female, n=25; 53% male, n=28) took part in this stage of the study, which examined reactions to AV MaaS HMI design concepts. The modal age group was 35-44 years, although all of the specified age groups were represented.

Virtual Reality Materials

Concepts for AV MaaS HMI options were created to illustrate how each HMI might work in practice. The options selected reflected the most obvious methods discussed during the design sessions that were also judged to be easy to use or were preferred. These concepts were presented as prototypes within a Wizard-of-Oz style study: we filmed 360-degree videos of the prototypes in use, staged so that they appeared to the viewer to be functioning within a working AV MaaS vehicle, although in reality they had no true functionality and the vehicle was not autonomous. We presented four HMI types, each used for the task of identifying the user to the vehicle while gaining entry to it: a voice-based interaction, a keypad interaction, a QR code interaction, and a travel-card interaction (see Figure 4).

Figure 4. Video materials depicting HMIs/interaction styles: (A) Voice Interaction; (B) Keypad Interaction; (C) QR Code Interaction; (D) Travel-Card Interaction.

In the voice-based interaction, an actor interacted with the vehicle by reading out a code while a screen showed an abstract animation to give the impression that the vehicle was talking back to the actor. In the keypad interaction, the actor pressed the code (presented on a phone) into a keypad located on the vehicle. In the QR code interaction, the actor used a mobile phone to scan a QR code located on the vehicle. In the travel-card based interaction, the actor presented a card with a chip to a wireless recognition icon on the vehicle.

We purposefully did not exaggerate any dimension in the design of these HMIs and interactions, so that we could explore whether participants would naturally associate certain attributes with a certain HMI, for example, whether they would identify voice-based interactions as inherently social and keypad interactions as requiring more physical contact, as might be expected. The exercise also allowed us to validate the relevance and applicability of the dimensions: did the dimensions make sense, could users apply them in this context, and did they successfully differentiate the HMI options presented?

Procedure

Participants took part in the study using either a laptop or a VR headset. By this stage, COVID-19 restrictions had been lifted sufficiently for participants to come to the lab, although lab access was limited to university staff and students. Participants were therefore also invited to complete the study remotely and could select one of four conditions: at home with their own laptop (n=14, 26%); at home with their own VR headset (n=13, 25%); in the lab with a lab-provided laptop (n=11, 21%); or in the lab with a lab-provided VR headset (n=15, 28%). An evaluation of the different methods (home versus lab and VR headset versus laptop browser), together with further methodological details, can be found in Large et al. (2022).

The tasks that participants completed were identical, although some practicalities differed depending on the location and equipment. In-lab participants needed to follow social distancing and cleansing procedures, and those at home needed to join a Teams meeting to receive instructions. Participants viewed the videos in a predetermined order that was randomized to counteract order effects. After each video, they were asked to complete an online questionnaire containing the design dimensions (alongside usability, enjoyment, and other measures, which are reported separately). In the lab, participants using a VR headset were asked to remove the headset and complete the questionnaires on a tablet provided, or were given the option to be sent URL links to the questionnaires to complete on their own internet-enabled device. Those using a laptop could complete the questionnaires on the same laptop, on the tablet, or on their own internet-enabled device. Participants at home were connected to the researcher via a Teams meeting, so both laptop and VR headset participants were asked to return to the Teams meeting, where URL links to the questionnaires were shared within the chat facility.

Analytical Procedure and Results

Participants rated each experience on a seven-point scale; the mid-scale rating (4) was labelled “not applicable/neutral,” and the opposite ends of the scale showed the semantic anchors for each dimension. Ratings of 1 to 7 were transposed to a scale of -3 to +3, so that 0 represented the mid-point and -3 and +3 the opposing ends of a dimension. To determine the effect of Location (home versus lab) and Method (VR headset versus laptop browser) on ratings, two-way ANOVAs were conducted for each HMI with Location and Method as independent variables. Full results are reported in Large et al. (2022). In summary, there were no significant differences in ratings for Method or Location; we therefore concluded that none of the methods or locations unfairly biased a particular HMI and that each Method and Location was equally valid for assessing the HMIs under examination. Consequently, to evaluate the sensitivity of the design dimensions, ratings were combined for each HMI regardless of Location or Method. One-way repeated-measures ANOVAs, with Bonferroni corrections for multiple comparisons, were then conducted in SPSS® for each design dimension, with HMI/interface (Voice, Travelcard, Keypad, and QR-code) as the single independent variable. Statistical significance was accepted at p < .05. Results are depicted in Figure 5, and statistical comparisons are shown in Table 4.
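The rescaling and the post hoc correction described above can be sketched in a few lines of Python. This only illustrates the arithmetic; it is not the SPSS analysis and does not reimplement the repeated-measures ANOVA. It assumes the standard Bonferroni adjustment (each raw pairwise p-value multiplied by the number of comparisons and capped at 1), and the function names are hypothetical.

```python
from itertools import combinations

# The four HMIs compared in Study 2.
HMIS = ("Voice", "Travelcard", "Keypad", "QR-code")


def transpose(rating: int) -> int:
    """Map a 1-7 response onto -3..+3 so that the neutral mid-point (4) becomes 0."""
    if not 1 <= rating <= 7:
        raise ValueError(f"rating must be between 1 and 7, got {rating}")
    return rating - 4


def pairwise_comparisons(levels=HMIS):
    """Every unordered pair of HMIs; four levels give six post hoc comparisons."""
    return list(combinations(levels, 2))


def bonferroni(raw_p: dict) -> dict:
    """Bonferroni adjustment: multiply each raw p-value by the number of comparisons, capped at 1."""
    m = len(raw_p)
    return {pair: min(1.0, p * m) for pair, p in raw_p.items()}


if __name__ == "__main__":
    print([transpose(r) for r in range(1, 8)])  # [-3, -2, -1, 0, 1, 2, 3]
    print(len(pairwise_comparisons()))  # 6
```

With four HMIs, each omnibus test that reaches significance is followed by six pairwise comparisons. The effective per-comparison threshold is therefore .05 / 6 ≈ .0083 on the raw p-values.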

Figure 5. Participant ratings of the four interface options on the design dimensions (mean ratings with standard deviation error bars).

Significant differences between the mean ratings for the HMIs were found for several dimensions (Table 4).

Table 4. Significant Differences of Experience Between HMI Concepts

| Dimension | Statistical Test Result | Details |
|---|---|---|
| Sociability | F(3,49) = 9.652, p < .001, ηp² = .371 | Voice was rated as more sociable than both Travelcard (p < .001) and QR-code (p = .049). |
| Novelty | F(3,49) = 7.636, p < .001, ηp² = .319 | Voice and QR-code were both rated as more novel/futuristic than Travelcard (p < .001 and p = .004, respectively). |
| Centricity | F(3,49) = 14.997, p < .001, ηp² = .479 | Keypad and QR-code were rated as more mobile/less vehicle-centric than both Travelcard (p < .001 and p < .001, respectively) and Voice (p = .017 and p = .001, respectively). Voice was rated as more mobile/less vehicle-centric than Travelcard (p = .015). |
| Customizability | F(3,49) = 3.000, p < .001, ηp² = .290 | Keypad and QR-code were both rated as more customizable than Travelcard (p = .003 and p < .001, respectively). |
| Contiguity | F(3,49) = 48.156, p < .001, ηp² = .747 | Keypad was rated as requiring more contact than all others: Voice (p < .001), Travelcard (p < .001), and QR-code (p < .001). |
| Interactivity | F(3,49) = 6.973, p < .001, ηp² = .299 | Keypad and QR-code were both rated as more interactive than Travelcard (p = .002 and p = .001, respectively). |
| Experientiality | F(3,49) = 5.310, p = .003, ηp² = .245 | Voice, Keypad, and QR-code were all rated as more integrated/experiential than Travelcard (p = .002, p = .026, and p = .041, respectively). |
| Service | F(3,49) = 6.836, p < .001, ηp² = .295 | Travelcard was rated as more “ad hoc” than both Voice (p < .001) and Keypad (p < .001). |

There were no significant differences on the following dimensions: Occupancy, Inclusivity, Transparency, and Redundancy.
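Partial eta squared can be recovered from any reported F statistic and its degrees of freedom, because ηp² = F·df_effect / (F·df_effect + df_error). The Python sketch below applies this identity to the values printed in Table 4 as a quick consistency check. The helper names are hypothetical. A flagged row only indicates that its F, degrees of freedom, and ηp² should be re-checked against the original statistical output.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df_effect / (F*df_effect + df_error)."""
    return (f * df_effect) / (f * df_effect + df_error)


# (F, df_effect, df_error, reported partial eta squared), transcribed from Table 4.
TABLE_4 = {
    "Sociability": (9.652, 3, 49, 0.371),
    "Novelty": (7.636, 3, 49, 0.319),
    "Centricity": (14.997, 3, 49, 0.479),
    "Customizability": (3.000, 3, 49, 0.290),
    "Contiguity": (48.156, 3, 49, 0.747),
    "Interactivity": (6.973, 3, 49, 0.299),
    "Experientiality": (5.310, 3, 49, 0.245),
    "Service": (6.836, 3, 49, 0.295),
}


def rows_to_recheck(table: dict, tol: float = 0.001) -> list:
    """Rows whose recomputed effect size (rounded to 3 d.p.) differs from the reported one by more than tol."""
    flagged = []
    for name, (f, df_effect, df_error, reported) in table.items():
        recomputed = round(partial_eta_squared(f, df_effect, df_error), 3)
        if abs(recomputed - reported) > tol:
            flagged.append(name)
    return flagged


if __name__ == "__main__":
    for name, (f, df1, df2, reported) in TABLE_4.items():
        print(f"{name:16s} reported {reported:.3f}  recomputed {partial_eta_squared(f, df1, df2):.3f}")
    print("re-check:", rows_to_recheck(TABLE_4))
```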

The results show that users were able to apply the dimensions to the experiences and that the dimensions revealed differences between them, although not on every dimension. Some of these differences were expected, such as the keypad option being judged higher on the “contact” dimension than the other experiences (because this interface required the most touching, whereas the others involved limited or no touching) and the voice-based interaction being rated as more sociable, particularly relative to the travelcard experience. Other dimensions might require further investigation to understand the ratings, particularly whether offering a mid-point (0) rating aided or hindered judgement.

Discussion

A user-design activity was conducted through a novel pro-social VR platform to inform design dimensions for future autonomous taxi interface concepts. We proposed 12 design dimensions within the categories of interaction, functionality, and contextual factors that can contribute to the future design and evaluation of user interfaces for AV MaaS technologies and services. These 12 design dimensions were used to evaluate four AV MaaS HMI concepts, and significant differences between the concepts were found on eight of the 12 dimensions. In this section, we discuss our findings in relation to the future design of AV MaaS vehicles.

Design Dimensions to Design Concepts

We conducted this work with participants who were asked to envision AV MaaS services of the future. One major observation was that people expect a variety of possible experiences across the dimensions and, consequently, a variety of HMIs to provide these experiences. As with most products and services, there will be no one-size-fits-all solution to HMI (and UX) design. One of the key categories of acceptance challenges put forward by Detjen et al. (2021) concerns positive experiences, highlighting the need for entertainment and individualization and making customer experience a vital branding factor for AV MaaS operators. Our design dimensions offer a framework of potential experiences from which AV MaaS developers can select the experience appropriate to their intended service or build a unique branded experience. An appealing experience might persuade people to take up AV MaaS transport, either by outperforming the single-occupancy vehicle experience or by allowing people to personalize the experience to their own preferences. Maximizing acceptance is crucial to realizing the expected social, environmental, and lifestyle benefits of AV MaaS (Butler et al., 2021; Strömberg et al., 2018).

Within our design dimensions, the same HMI technology could feasibly deliver experiences at either end of a single dimension, which means that attention can be directed towards designing the interaction to deliver the required experience rather than towards the specific technology used to deliver it. For example, a conversational natural language interface (NLI) could fulfil users’ requirements across the entire range of the sociability dimension. A “sociable” HMI could use discursive language in its responses, encourage two-way conversational exchanges, use “small talk” to extend conversation and build common ground and understanding, initiate informal dialogue with the user, employ human-sounding vocal qualities, and be self-referential (such as by speaking in the first person) (for guidelines on conversational user interfaces, see Large et al., 2019).

In contrast, a functional NLI could use limited, prosaic language, restrict interactions to command-based exchanges (call and response), keep all dialogue strictly task-oriented and perfunctory, limit system-initiated responses to essential alerts and warnings, and avoid human pronouns and vernacular styling. Both experiences would nevertheless be delivered by an NLI, in this case a voice-based interface. In other cases, a dimension could encourage the selection of a specific HMI. For example, deciding whether the HMI should be mobile or integrated (centricity dimension) naturally leads designers to build the experience on a smartphone application for the former, whereas an integrated solution affords several different vehicle-centric experiences, such as an on-board touchscreen (for a comparison of app-based versus vehicle-centric interfaces, see Oliveira et al., 2018). Our framework therefore supports creating and evaluating an experience that is not wholly based on the specific technologies required to deliver it; rather, the needs and preferences of users dictate the design.

Evaluating AV MaaS Experiences Using the Design Dimensions

We used the design dimensions in an evaluation activity in which participants viewed simulated interactions for four HMI concepts, to demonstrate how these dimensions can contribute to HMI development. The experiences presented to participants differed significantly on several dimensions. This range of significant differences suggests that participants noticed important differences between the experiences and were able to use our dimensions to characterize them. Our dimensions therefore appear to offer a meaningful framework for evaluating HMI experiences.

It seems reasonable to assume that experiences built from the right-hand anchors of the dimensions in Table 3 (futuristic, interactive, sociable, etc.) might be more appealing to potential AV MaaS users, because these qualities are traditionally valued in novel technologies and services. During the evaluation, participants rated the experiences mostly towards the left-hand side of the dimensions, which could suggest that the experiences were not thought to be particularly positive. However, participants often expressed a desire for the left-hand side of a dimension; for instance, they preferred an experience that was familiar and did not involve unnecessary conversation beyond essential journey- and task-related topics. It was not our intention to identify the best or worst HMI options, since users might prefer experiences towards either side of the dimensions (we would argue that neither end is inherently positive or negative). Instead, we have shown that the dimensions provide a reasonable means of comparing HMI options. Iterative design and evaluation cycles of the HMI prototypes could exaggerate certain elements, if desired, to examine the use of these dimensions and their impact on the UX further. For example, an examination of the Functional/Sociable dimension might involve comparing a highly sociable with a highly functional experience, and then ascertaining whether some combination of the two would be acceptable in different scenarios. For this dimension, an NLI could be employed, with one experience built around a chatty digital-assistant concept and an alternative experience built around completing identical tasks with minimal, task-focused language. Personal preferences will play a large role in the success of each concept; however, an in-depth comparison would highlight the individual elements of the experience that are important.

One interesting finding concerned the contiguity dimension: participants discussed their desire not to touch common touch points on the vehicle and how this would limit the functionalities and technologies that could be used during a journey. These discussions primarily related to the COVID-19 pandemic, and many participants compared their current opinions with how they might have felt before the pandemic. Some participants suggested that at some point in the future they might be less concerned about touch points on a shared facility, but it was not clear how long their current heightened concern would last. Hensher (2020) argues that COVID-19 raises many issues that might present a barrier to the success of AV MaaS, including an unwillingness to share an enclosed space with strangers. Touch-based viral transmission has been found to be of particular concern within vehicles: drivers under low cognitive load have been found to touch their faces, particularly around the nose and mouth, more often than during more demanding driving tasks, potentially increasing their risk of viral transmission (Ralph et al., 2021). Ralph et al. (2021) suggest that the passenger experience should be reimagined to reduce physical contact and the transmission risk of any communicable disease (not solely COVID-19). We would similarly argue that developing an experience that avoids unnecessary touching of commonly touched surfaces is one way to encourage use in a post-COVID-19 society with heightened awareness of germs.

It should be noted that it is not only the HMI options that contribute to the experience; other aspects of the AV MaaS service offering, vehicle design, information, and advertising may also be evaluated using these dimensions. For example, one key aspect of the AV MaaS landscape discussed by participants was the ability to converse with a human operator (teleoperator) in an emergency. Very little is currently known about how AV MaaS passengers will interact with teleoperators, although Keller et al. (2021) suggest that passengers might desire some level of control, input, and even the chance to overrule teleoperators. It would be useful to evaluate concepts for such interactions using the dimensions to ensure a consistent experience across the full range of interactions.

A further interesting finding was that inclusivity was the only dimension on which all experiences were rated above the mid-point (slightly towards the right-hand side). On this side of the dimension, the experience is generic and caters to all kinds of users. However, our sampling technique was not specifically aimed at people with additional needs, so it would be useful to gather feedback from a more diverse sample on how they might respond to the experiences.

Limitations

As noted earlier, participants expressed many conflicting personal preferences, often contradicting each other (and themselves) directly within the workshops. The design dimensions offer a range of options for an experience, but deciding where an experience should sit on each dimension is likely to be difficult. Within the AV MaaS conceptualization, there will be many different populations to cater to, including existing public transport users wishing to have greater access to public transport; existing private car owners who need to be convinced that AV MaaS will be more convenient than a personal vehicle; and people with accessibility needs who will need to be reassured that the service will meet their needs. Shergold et al. (2019) identify several considerations for designing AV MaaS interfaces for older people, whereas Amanatidis et al. (2018) discuss separate (yet often related) considerations for people with disabilities. It seems reasonable to suggest that offering a variety of experiences via different HMIs will benefit the most users. However, this provision may complicate the HMI offering inside and outside the vehicle, because multiple interfaces would be present, each offering different options for interaction.

A further consequence of this diversity of personal preferences is that our dimensions are unlikely to offer an exhaustive range of considerations for all AV MaaS offerings; there is almost certainly a wider range of dimensions than could be identified here. We focused specifically on scenarios relevant to the ServCity project, which relate to an AV MaaS concept in which a shared AV operates within the boundaries of a city and serves a limited set of use-cases, such as linking up with public transport, short hops across the city, and group/shared travel. Designers of AV MaaS concepts outside this remit, such as a service operating within a defined community (for example, a university transport network or a retirement village), are likely to need to consider a wider range of dimensions; they may find that users are concerned about more socially oriented dimensions for interactions with fellow passengers, which the HMI needs to facilitate (or at least not hinder). A similar design activity would be recommended for each new design domain in which AV MaaS technologies are developed. It would also be useful to examine the extent to which our dimensions, and/or the approach we took to derive them, apply to other domains in which an experience needs to be created.

It should also be noted that our mid-point was intended as a neutral score, allowing participants to forgo judgement if a dimension did not seem appropriate for an experience; for example, we expected participants to struggle to judge experiences on occupancy, since each experience represented a sole-occupancy journey. It would be beneficial to examine perspectives more closely for the dimensions on which experiences were scored close to the mid-point, to establish whether participants genuinely considered the dimension not applicable or chose this score for another reason, such as a misunderstanding of the dimension or experience.
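One simple way to decide which dimensions merit this closer examination is to compute, for each dimension, the proportion of ratings placed exactly on the mid-point. The Python sketch below assumes that ratings have already been transposed to the -3 to +3 scale and stored as (dimension, rating) pairs. The function name and data layout are hypothetical. A high proportion only flags a dimension for qualitative follow-up, because the number itself cannot distinguish a deliberate “not applicable” response from a misunderstanding.

```python
from collections import defaultdict


def neutral_share(responses):
    """Proportion of ratings per dimension that fall exactly on the neutral mid-point.

    `responses` is an iterable of (dimension, rating) pairs, with ratings already
    transposed to the -3..+3 scale so that 0 is the "not applicable/neutral" point.
    """
    tallies = defaultdict(lambda: [0, 0])  # dimension -> [neutral count, total count]
    for dimension, rating in responses:
        tallies[dimension][1] += 1
        if rating == 0:
            tallies[dimension][0] += 1
    return {dim: neutral / total for dim, (neutral, total) in tallies.items()}


if __name__ == "__main__":
    # Hypothetical toy responses, for illustration only (not study data).
    toy = [("Occupancy", 0), ("Occupancy", 0), ("Occupancy", 1), ("Occupancy", -1), ("Sociability", 2)]
    print(neutral_share(toy))  # {'Occupancy': 0.5, 'Sociability': 0.0}
```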

Conclusion

The physical and social conditions of an AV MaaS journey are new and unique; as such, it is difficult for both designers and end-users to envision how people will want to use such vehicles. Designers interested in the UX from the perspective of the activities that could be performed in an autonomous taxi should therefore consider the user's potential context throughout the design process, and plan use-cases and design activities accordingly. In particular, UX design must account for the physical and social conditions that the journey creates for the user. The shared aspect of AV MaaS journeys also needs to be carefully managed, especially in a future society that may remain concerned about commonly touched surfaces.

Our design dimensions represent an initial tool for measuring experiences, which can in turn inform HMI development. We have performed an initial validation of this tool; however, further validation is needed to examine participants' reflections on using the dimensions and to explore any further dimensions that might be added. The team intends to use the dimensions to evaluate HMI options at different stages of a driverless taxi journey, such as the transit, egress, and payment stages. We encourage further testing and validation of these dimensions, along with examination of how different use-cases might require different dimensions. We anticipate many opportunities to adapt and reuse the design dimensions to develop and evaluate UXs.

It seems apparent that the functionality and selection of HMIs should not focus solely on taking the passenger where they need to go; HMIs should also support a range of information, safety, comfort, and entertainment needs, among others. This study has provided emergent themes and dimensions, grounded in user data and used in an evaluation of HMI experiences, which we offer as a preliminary framework for HMI design. They are relevant to future autonomous taxis and will be used to inform future research in this context, but they may also be applied more broadly. We suggest that offering a range of experiences, enabling users to select an experience at either end (or somewhere in the middle) of each dimension, might be the most promising approach to encourage users to choose an AV MaaS over a personal vehicle or a human-driven taxi. We encourage designers of AV MaaS services and technologies to consider our dimensions when creating experiences and selecting appropriate HMIs, paying particular attention to the type of experience that they wish to create.

Acknowledgements

The ServCity project (https://www.servcity.co.uk/) is funded by the UK Innovation Agency (Innovate UK) and Centre for Connected & Autonomous Vehicles (CCAV) (Grant number: 105091). The authors would also like to thank colleagues at TRL (a ServCity project partner) for providing the 360-degree video used during the study.

Tips for Usability Practitioners

We offer the following practical guidance, based on our approach to developing and testing design dimensions for a future technology service, for practitioners who wish to adopt a similar approach.

- User-design practices that involve groups of people discussing prototypes are an ideal means to identify conflicting needs and preferences, which in turn can highlight dimensions of an experience. Such discussions can be supported by virtual online environments that enable participants to examine prototypes of products that are not yet available in real life.

- Identifying design dimensions provides developers with a framework for curating the UX, with dimensions such as novel versus conventional, touch-free versus high contact, and so on.

- Design dimensions can be identified where participants disagree about certain aspects of a product or service. Where disagreements center on two (or more) different but equally valid options, there is an opportunity to make key design decisions that will shape the kind of experience a developer intends for their users.

- One promising means of comparing prototypes involves asking participants to judge an experience along the identified dimensions, which helps to identify key differences between the experiences generated by different concepts.

- Iterative evaluation of experiences using the design dimensions as a framework can help developers to make decisions about the experience they wish to create and whether an HMI can provide this experience.
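As a rough illustration of the comparison described in these tips, the sketch below computes a mean profile for each of two concepts and ranks the dimensions by the size of the gap between them. It is a descriptive aid only, not the analysis used in this study; it assumes both concepts were rated on the same bipolar scale, and the function names, concept data, and dimension labels are hypothetical.

```python
from statistics import mean


def profile(ratings):
    """Mean score per dimension for one concept (dimension -> list of scores)."""
    return {dim: mean(scores) for dim, scores in ratings.items()}


def key_differences(concept_a, concept_b, top_n=2):
    """Rank shared dimensions by the absolute gap between two concepts' means.

    Positive gaps mean concept B was rated closer to the right-hand pole.
    This is descriptive only; a real evaluation would add an inferential test
    suited to the design (e.g., a repeated-measures test when the same
    participants rate every concept).
    """
    pa, pb = profile(concept_a), profile(concept_b)
    gaps = {dim: pb[dim] - pa[dim] for dim in pa.keys() & pb.keys()}
    ranked = sorted(gaps.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_n]


# Hypothetical ratings from four participants per concept (not study data).
concept_a = {
    "functional vs sociable": [2, 2, 3, 1],
    "conventional vs futuristic": [3, 4, 3, 4],
    "touch-free vs high contact": [5, 5, 6, 4],
}
concept_b = {
    "functional vs sociable": [5, 6, 5, 6],
    "conventional vs futuristic": [6, 7, 6, 7],
    "touch-free vs high contact": [5, 4, 5, 6],
}
print(key_differences(concept_a, concept_b))
```

In this made-up example, the two concepts differ most on the functional versus sociable and conventional versus futuristic dimensions and not at all on touch-free versus high contact, which would point a developer toward the dimensions where the design choice actually changes the experience.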

References

Amanatidis, T., Langdon, P. M., & Clarkson, P. J. (2018). Inclusivity considerations for fully autonomous vehicle user interfaces. In P. M. Langdon, J. Lazar, A. Heylighen, & H. Dong (Eds.) Breaking down barriers: Usability, accessibility and inclusive design (pp. 207-214). Springer. https://doi.org/10.1007/978-3-319-75028-6_18

Bryant, S., & Wrigley, C. (2014). Driving toward user-centered engineering in automotive design. Design Management Journal, 9(1), 74-84. https://doi.org/10.1111/dmj.12007

Burnett, G., Large, D. R., & Salanitri, D. (2019). How will drivers interact with vehicles of the future? RAC Foundation. https://www.racfoundation.org/wp-content/uploads/Automated_Driver_Simulator_Report_July_2019.pdf

Butler, L., Yigitcanlar, T., & Paz, A. (2021). Barriers and risks of Mobility-as-a-Service (MaaS) adoption in cities: A systematic review of the literature. Cities, 109, Article 103036. https://doi.org/10.1016/j.cities.2020.103036

Detjen, H., Faltaous, S., Pfleging, B., Geisler, S., & Schneegass, S. (2021). How to increase automated vehicles’ acceptance through in-vehicle interaction design: A review. International Journal of Human–Computer Interaction, 37(4), 308-330. https://doi.org/10.1080/10447318.2020.1860517

Fagnant, D. J., & Kockelman, K. M. (2014). The travel and environmental implications of shared autonomous vehicles, using agent-based model scenarios. Transportation Research Part C: Emerging Technologies, 40, 1-13. https://doi.org/10.1016/j.trc.2013.12.001

Hallewell, M. J., Hughes, N., Large, D. R., Harvey, C., Springthorpe, J., & Burnett, G. (2022). Deriving personas to inform HMI design for future autonomous taxis: A case study on user-requirement elicitation. Journal of Usability Studies, 17(2), 41-64. https://nottingham-repository.worktribe.com/output/6910893

Hensher, D. A. (2020). What might Covid-19 mean for mobility as a service (MaaS)? Transport Reviews, 40(5), 551-556. https://doi.org/10.1080/01441647.2020.1770487

Keller, K., Zimmermann, C., Zibuschka, J., & Hinz, O. (2021). Trust is good, control is better: Customer preferences regarding control in teleoperated and autonomous taxis [Paper presentation]. 54th Hawaii International Conference on System Sciences, Hawaii, United States.

Kim, S., Chang, J. J. E., Park, H. H., Song, S. U., Cha, C. B., Kim, J. W., & Kang, N. (2020). Autonomous taxi service design and user experience. International Journal of Human–Computer Interaction, 36(5), 429-448. https://doi.org/10.1080/10447318.2019.1653556

Krueger, R., Rashidi, T. H., & Rose, J. M. (2016). Preferences for shared autonomous vehicles. Transportation Research Part C: Emerging Technologies, 69, 343-355. https://doi.org/10.1016/j.trc.2016.06.015

Large, D. R., Burnett, G., & Clark, L. (2019). Lessons from Oz: Design guidelines for automotive conversational user interfaces [Paper presentation]. 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands.

Large, D. R., Burnett, G. E., Morris, A., Muthumani, A., & Matthias, R. (2017). Design implications of drivers’ engagement with secondary activities during highly-automated driving–a longitudinal simulator study [Paper presentation]. Road Safety and Simulation International Conference, The Hague, The Netherlands.

Large, D. R., Hallewell, M. J., Briars, L., Harvey, C., & Burnett, G. (2022). Pro-social mobility: Using Mozilla Hubs as a design collaboration tool [Paper presentation]. Ergonomics & Human Factors Conference, Birmingham, England.

Large, D. R., Hallewell, M. J., Coffey, M., Evans, J., Briars, L., Harvey, C., & Burnett, G. (2022). Evaluating virtual reality 360 video to inform interface design for driverless taxis [Paper presentation]. Ergonomics & Human Factors Conference, Birmingham, England.

Large, D. R., Harrington, K., Burnett, G., Luton, J., Thomas, P., & Bennett, P. (2019). To please in a pod: Employing an anthropomorphic agent-interlocutor to enhance trust and user experience in an autonomous, self-driving vehicle [Paper presentation]. 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Utrecht, The Netherlands.

Lee, S. C., Nadri, C., Sanghavi, H., & Jeon, M. (2021). Eliciting user needs and design requirements for user experience in fully automated vehicles. International Journal of Human–Computer Interaction, 1-13. https://doi.org/10.1080/10447318.2021.1937875

Mahdavian, A., Shojaei, A., McCormick, S., Papandreou, T., Eluru, N., & Oloufa, A. A. (2021). Drivers and barriers to implementation of connected, automated, shared, and electric vehicles: An agenda for future research. IEEE Access, 9, 22195-22213. https://doi.org/10.1109/ACCESS.2021.3056025

Merat, N., Madigan, R., & Nordhoff, S. (2017). Human factors, user requirements, and user acceptance of ride-sharing in automated vehicles (Vol. 10). OECD Publishing.

Oliveira, L., Luton, J., Iyer, S., Burns, C., Mouzakitis, A., Jennings, P., & Birrell, S. (2018). Evaluating how interfaces influence the user interaction with fully autonomous vehicles [Paper presentation]. 10th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Toronto, ON, Canada.

Pettersson, I., & Ju, W. (2017). Design techniques for exploring automotive interaction in the drive towards automation [Paper presentation]. Designing Interactive Systems Conference, Edinburgh, United Kingdom.

Ralph, F., Large, D. R., Burnett, G., Lang, A., & Morris, A. (2021). U can’t touch this! Face touching behaviour whilst driving: Implications for health, hygiene and human factors. Ergonomics, 1-17. https://doi.org/10.1080/00140139.2021.2004241

Shergold, I., Alford, C., Caleb-Solly, P., Eimontaite, I., Morgan, P. L., & Voinescu, A. (2019). Overall findings from research with older people participating in connected autonomous vehicle trials. Flourish Publications.

Strömberg, H., Karlsson, I. M., & Sochor, J. (2018). Inviting travelers to the smorgasbord of sustainable urban transport: Evidence from a MaaS field trial. Transportation, 45(6), 1655-1670. https://doi.org/10.1007/s11116-018-9946-8

Sun, X., Houssin, R., Renaud, J., & Gardoni, M. (2019). A review of methodologies for integrating human factors and ergonomics in engineering design. International Journal of Production Research, 57(15-16), 4961-4976. https://doi.org/10.1080/00207543.2018.1492161

Tang, P., Sun, X., & Cao, S. (2020). Investigating user activities and the corresponding requirements for information and functions in autonomous vehicles of the future. International Journal of Industrial Ergonomics, 80, Article 103044. https://doi.org/10.1016/j.ergon.2020.103044

Tscharn, R., Latoschik, M. E., Löffler, D., & Hurtienne, J. (2017). “Stop over there”: Natural gesture and speech interaction for non-critical spontaneous intervention in autonomous driving [Paper presentation]. 19th ACM International Conference on Multimodal Interaction, Glasgow, United Kingdom.