Abstract

Website usability reinforces trust in e-government, but at the local level, e-government tends to have usability and accessibility problems. Web portals should be usable, accessible, well coded, and mobile-device-ready. This study applies usability heuristics and automated analyses to assess a state-wide population of county web portals and examines whether population, per capita income, or median household income is related to usability, accessibility, and coding practices. To assess usability, we applied a 14-point usability heuristic to each site’s homepage. To study accessibility and coding, we examined each homepage with an accessibility checker and with the World Wide Web Consortium’s (W3C) HTML validator. We also examined the HTML and Cascading Style Sheets (CSS) of each site to check for mobile-device readiness and to better understand coding problems the automated tools identified. This study found that portal adoption is associated with each of the demographics above and that accessibility has a weak inverse relationship to per-capita income. Many of the sites we examined met some basic usability standards, but few met all the standards used, and most sites did not pass a basic accessibility analysis. About 58% of the counties we examined used a centralized county web portal (not including county commission sites), which is higher than the 56% portal adoption rate that a 2006 study found at the national level. Resulting recommendations include best-practice suggestions for design and for using automated tools to identify problems, as well as a call to usability professionals to aid in county web portal development.

Tips for Usability Practitioners

The usability and accessibility issues we found with the county web pages we examined raise some important issues for both usability and design practitioners. Some of these issues are evident from the quantitative results, but other issues became apparent during observations we made as we collected data.

Automated analysis tools are valuable as litmus tests for problems, but use them with care:

- When using WAVE, capture the site’s code in addition to a WAVE image, because the altered layout of WAVE images for a few highly problematic sites obscures some of the WAVE icons.

- When using WAVE to compare a set of sites, omit Flash-based sites because WAVE cannot detect accessibility problems within Flash.

- When using an HTML or CSS validator, be aware that a single coding error can cause multiple validation errors.

Also:

- Look for the basic causes of the lack of conformance to published standards, especially accessibility standards, to understand why best practices were not used.

- Discourage drag-and-drop site builders and third-party designers unless you can verify that they produce usable and accessible sites.

- Test web pages when integrating information about county units that have their own websites (e.g., a hospital or an emergency management agency) to make sure that

  - users understand that the county site does not fully represent the unit and

  - users can easily find the link to the unit’s full site.

- Include all municipalities, whether or not they have websites, when creating listings of municipalities within a county.

- List the state name, clearly and prominently, as some counties share a name with many others across the U.S.

- Consider volunteering your time to help your town or county create accessible and usable websites.

Introduction

Civic websites, such as e-government sites, are critical for fostering civic participation and for “mak[ing] opportunities for democratic engagement” (Salvo, 2004, p. 59). One example of these ideas is the one-stop, local e-government portal (Ho, 2002), in which a local government (i.e., municipalities, counties, and other small subdivisions of state governments) consolidates all its services and information into a single, coherent site, rather than spreading it across multiple agency-specific sites. For example, the site for Jefferson County, Alabama, provides one-stop access to 34 county departments, services, boards, and offices, such as the county attorney, the tax assessor, and family court. Ho (2002) argued these web-based government services open the door for using a customer-oriented approach to focus on end-user “concerns and needs” to both engage and empower citizens (p. 435). County governments continue the move from being “administrative appendages” of state-level government to providing a wide range of services (Benton, 2002; Bowman & Kearney, 2010, p. 274) and having stronger policy-making influence (Bowman & Kearney, 2010), so developing sites that are easy to use and that provide access in the ways users need is important. These portal sites should be usable and accessible; ideally they will even be poised to meet the needs of users with mobile devices.

An e-government web portal provides users with a central point of entry to a government’s web presence, organizing links to its agencies and, in many cases, other governments. As an example, the web portal of the United States, USA.gov, includes links to state government websites, and many state government portals provide links both to local websites, such as those of municipalities and counties, and to national government websites. Ho (2002) argued that portals engage and empower citizens, even if institutional issues and the digital divide sometimes slowed the process. Huang (2006), in a study of U.S. county e-government portals, noted that 56.3% of counties had moved to a portal-based model and that digital divide demographics, including educational level and income level, seemed to play a role in county adoption of portals. County portal adoption rates varied widely by state, ranging from a high of 100% in Delaware to a low of 10.6% in South Dakota. Huang also found a relatively low adoption rate of advanced e-government services, particularly transactional services. As examples, the most commonly adopted transactional service, collecting taxes, occurred on barely 32% of county websites and only 14.5% of portals allowed citizens to conduct vital records transactions (birth, marriage, and death). Both of these studies helped establish local e-government benchmarks.

Feature richness is part of quality—and it has been used as a criterion in research on local government web development (e.g., Cassell & Mullaly, 2012)—but users must be able to reach features easily to take advantage of them. Given that users underuse services that are already available online (e.g., Baker, 2009), Scott (2005) emphasized the need for local governments to maintain quality websites to encourage use. Scott’s (2005) measures of quality e-government included usability, which is often defined as “the extent to which a product [such as a website] can be used by specified users to achieve specified goals with effectiveness, efficiency and satisfaction in a specified context of use” (ISO 9241, 1998). In other words, usability focuses on user experiences. Accessibility, the usability of a website by users with disabilities (Petrie & Kheir, 2007), has also been used as a quality measure in e-government (e.g., West, 2005; 2006; 2007; 2008).

Although corporate usability research is often proprietary and unpublished in journals (Sullivan, 2006), the results filter into best practices in information architecture and design, resulting in a number of free-to-access design standards and techniques. Government-oriented materials are available to government designers in a number of forms, including videos on test setup, scripts, and templates (U.S. General Services Administration, 2013a). Through its website HowTo.gov, the U.S. General Services Administration (2013b) also provides Listserv forum access and an online community, both open to government at all levels. Usability.gov—the self-proclaimed “one-stop source for government web designers to learn how to make websites more usable, useful, and accessible” (U.S. Department of Health and Human Services, n.d.)—makes many resources available, including usability and accessibility articles, instructions for conducting usability tests, and the U.S. Department of Health and Human Services’ (2006) Research-Based Web Design and Usability Guidelines. Even communities that have little or no funding for testing should review their sites to make sure they adhere to basic usability and accessibility standards, such as having a “Home” button at the top left of the page and having alternative text for images.

Alabama is an ideal state with which to examine these e-government issues at the county level, issues that could affect resident access to services and influence on policy making. At the state level, e-government in Alabama has made significant progress. West’s (2005; 2006; 2007) early studies of state-level e-government websites revealed significant usability and accessibility problems, with West ranking Alabama in the bottom 10% of state-level e-government in the United States. In 2008, however, West found that Alabama had made significant strides in state-level e-government, rising to the number 3 position nationally, though accessibility compliance has remained a problem (West, 2008). While Alabama e-government has improved tremendously at the state level, these improvements have not filtered down to the local level (such as municipalities, e.g., the consolidated cities and incorporated places that are parts of counties), and many Alabama municipal websites have significant usability and accessibility issues (Youngblood & Mackiewicz, 2012).

This study addressed the adoption of county portals, used automated and heuristic evaluation of usability and accessibility characteristics to assess whether county web portals in Alabama meet a selection of these standards, and examined whether the counties’ portals are poised to take advantage of new communication tools such as mobile devices. Practices at broader levels of government have the power to influence, for better or for worse, practices at more local levels (e.g., municipalities looking to county portals).

Usability in e-Government

Website user experience focuses on site usability and readiness to embrace and adapt to new communication technologies such as mobile devices. Usability has several important components, including user satisfaction (Zappen, Harrison, & Watson, 2008) and site effectiveness, the concept that users of a site should be able to surf, to search for a known item, and to accomplish tasks (Baker, 2009), such as finding a county’s public notices or accessing local codes. Usability is particularly important for transactional user experiences, including two-way communication. In the case of e-government sites, these experiences can include providing feedback and requesting documents. For two-way communication to be effective, the user must be able to navigate the site to find these features. When the user is able to easily accomplish these tasks, it not only encourages the use of the site but also can help foster trust and make users feel that “their input is valued” (Williams, 2009, p. 461). Parent, Vandebeek, and Gemino (2005) argued that trust is a critical component of e-government and that citizens need “a priori trust in government” to trust e-government (p. 732); in other words, they will not trust an online presence if they distrust its brick-and-mortar counterpart. Huang, Brooks, and Chen (2009) found that a user’s e-government experience is influenced by the nature of the government entity’s online presence, particularly usability. This matches the findings of Fogg et al. (2003) that the strongest influences on a corporate site’s credibility are its design’s look and the information design and structure. Thus, the relationship is complex: Good design fosters credibility, and credibility gives users the security they need to participate in e-government.

Because web page design changes over time, usability attributes are defined by experience. For instance, icons for navigation—such as a house for home—have gone out of fashion, though such icons are addressed in specific usability guidelines from the late 1990s (e.g., Borges, Morales, & Rodríguez, 1996). Trends come and go, so usability taxonomies do well to rely on user experiences. Usability expert Nielsen’s (1996) taxonomy of usability attributes—learnability, efficiency, memorability, errors (as few system errors as possible and allowing users to recover easily), and satisfaction—has been widely adopted, though other taxonomies exist (e.g., Alonso-Ríos, Vázquez-García, Mosqueira-Rey, & Moret-Bonillo, 2009). At a given point in time, these attributes can be distilled into research-based and best-practice-based heuristics (Cappel & Huang, 2007; Nielsen & Tahir, 2002; Pearrow, 2000). For instance, learning to use a new website is easier if the site is designed to follow other current practices in layout and architecture. U.S. users look for a logo at the top, the main navigation on the top or left, and they expect the logo to be clickable (Cappel & Huang, 2007; DeWitt, 2010). Usability research suggests that conventions such as the placement of “Home” on the top left be followed, but otherwise, menu orientation and link order are less important than factors such as link taxonomy (Cappel & Huang, 2007; DeWitt, 2010). However, long menus (greater than 10 links) and vertical menus that extend below the fold can be a problem (DeWitt, 2010). Breaking such conventions makes the user look harder for information.

Accessibility in e-Government

In an abstract sense, many community leaders are aware that their constituencies include a number of people with permanent and short-term disabilities, be they sensory, cognitive, motor, or other disabilities. However, individuals with disabilities can be less visible in the population than their counterparts. The Kessler Foundation and National Organization on Disabilities (2010) found that 79% of working-age adults (18–64 years old) with disabilities are not employed (this includes those not looking for work for a variety of reasons), compared to 41% of non-disabled individuals. Furthermore, people with disabilities go to restaurants and religious services less than people without disabilities, which contributes to their under-visibility and to the risk that they might be an afterthought when counties provide routine online services.

Accessibility, or making information accessible for users with disabilities, is critical not only for legal reasons, but, more importantly, for ethical reasons. The large number of individuals who have visual, hearing, motor, and cognitive disabilities should not be excluded from e-government. In Alabama, over 14% of the non-institutionalized, working-age population (i.e., over 422,000 people) has a disability of some sort (U.S. Census Bureau, 2010b). Visual, hearing, motor, and cognitive disabilities affect Internet use (WebAIM, n.d.-a), and 54% of people with disabilities use the Internet (Kessler Foundation and National Organization on Disabilities, 2010). In short, nearly 228,000 working-age Alabama residents with disabilities are likely online. And some populations are affected more than others, especially Alabama African Americans, whose per capita rates for serious vision problems exceed those of Alabama Caucasians (Bronstein & Morrisey, 2000).

For the Internet to be a valuable source of information and assistance, residents must fully and easily be able to access online resources. When accessibility measures, such as supplying alternative text describing an image for visually impaired users, are missing, users with disabilities are excluded from accessing and participating in e-government. Furthermore, because accessibility typically improves usability (Theofanos & Redish, 2003; World Wide Web Consortium, 2010), including on mobile devices (World Wide Web Consortium, 2010), an inaccessible site also can make non-disabled users expend unnecessary effort.

Users expect—and have a right to—accessible content. These expectations are not always met; many municipal sites do not meet standards (Evans-Cowley, 2006), Alabama sites included (Youngblood & Mackiewicz, 2012). Even a number of state (Fagan & Fagan, 2004) and federal sites (Olalere & Lazar, 2011) do not consistently adhere to all of the standards. This problem includes Alabama (e.g., Potter, 2002; West, 2005, 2006, 2007, 2008). Consequently, Alabama residents, like residents of other states, do not have full access to online information and services.

Automated accessibility evaluation (e.g., West, 2008) can be used to look at a large number of sites in a short timeframe to capture a snapshot of design for accessibility. It helps identify the extent of accessibility problems, the types of problems (e.g., empty links), and the recurrence within each type (e.g., five empty links on a page), identifying critical issues meriting future research. Furthermore, automated review employing free, easily accessed, widely recognized, and easy-to-use tools can indicate the level of attention designers of a given site have paid to accessibility: If an automated evaluation reveals 15 serious accessibility problems, in our opinion, the site has fundamental accessibility flaws. Given that designers have easy access to the same and additional tools, these flaws indicate lax attention to designing for users with disabilities. An automated analysis that uncovers serious problems suggests that human site review, such as expert analysis and testing (particularly by participants with disabilities), in combination with other methods (e.g., Jaeger, 2006; Olalere & Lazar, 2011) might be called for in future studies.

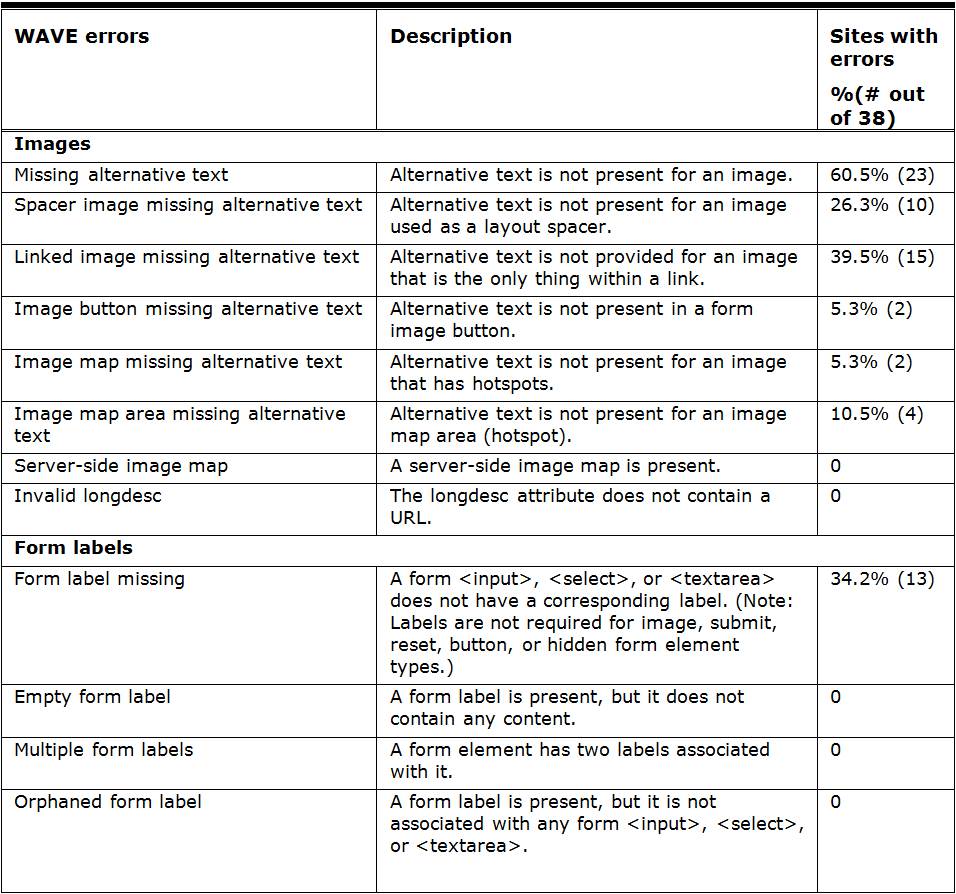

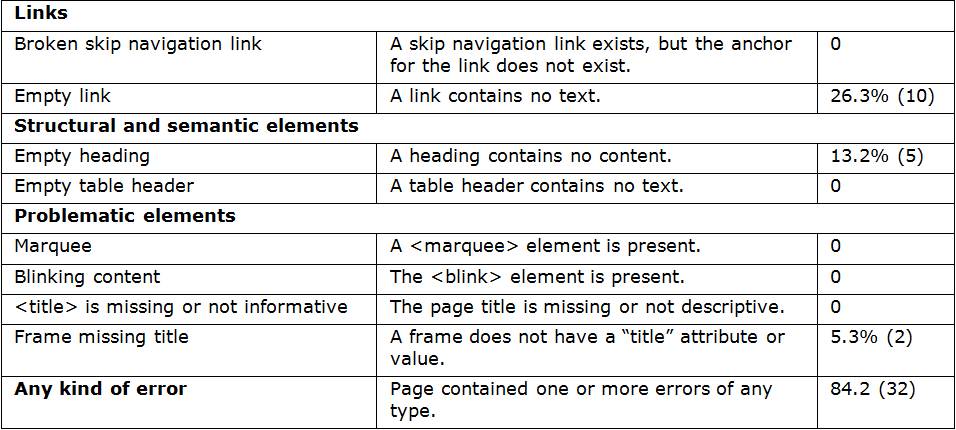

This study uses WAVE (WebAIM, n.d.-b), a free automated tool for evaluating site accessibility that has been used as an indicator of web page accessibility in a range of studies, including in e-government research (West, 2008; Youngblood & Mackiewicz, 2012). While early accessibility studies often relied on Watchfire’s Bobby for this type of testing, Bobby has been discontinued as a free service and WAVE has become a common substitute (e.g., West, 2008). WAVE tests for accessibility problems in the code of a website, including legal violations, such as failing to comply with Section 508 of the Rehabilitation Act Amendments of 1998. The following is an example of a Section 508 standard with which websites must comply: “A text equivalent for every non-text element shall be provided (e.g., via ‘alt’, ‘longdesc’, or in element content)” (§1194.22[a]). WAVE checks for likely problems, possible problems, and good practices (e.g., “structural, semantic, [or] navigational elements,” such as heading tags), labeling them, respectively, as errors, alerts, and features. When a user enters a URL, WAVE follows the URL, checks the page for accessibility, generates a version of the original web page with an overlay of descriptive icons, and produces a count of errors. It identifies as errors the 20 types of coding problems that “will almost certainly cause accessibility issues” (WebAIM, n.d.-c, the WAVE 4.0 Icons, Titles, and Descriptions section), such as missing alternative text and missing form labels (see Table 4 for the full list).

We focused on the number of WAVE errors rather than the number of WAVE alerts for potentially problematic HTML, script, and media. Coding that triggers alerts is not consistently problematic: For instance, something that WAVE marks as a possible heading might not be a heading. In contrast, a piece of code that WAVE marks as an error will most likely cause a problem. Many of the errors, such as missing alternative text for an image, empty links, missing page titles, and blinking text, cause problems for users with vision problems. Although a WAVE test is not as comprehensive or as sensitive as detailed expert review or user testing, WAVE errors serve as a litmus test for a site designer’s attention to accessibility issues, and the number of errors is a consistent measure that has been used for comparison of sites in a range of disciplines (e.g., Muswazi, 2009; West, 2008; Youngblood & Mackiewicz, 2012). That said, as an automated tool, WAVE cannot make judgment calls. For example, it can detect the presence or absence of the ALT attribute for an image, but it cannot tell if the text is actually useful, i.e., if it provides a usable description of an image rather than reading “image.” In an ideal world, designers should include users with disabilities and/or expert evaluation as part of the development process.
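The distinction between detecting a missing ALT attribute and judging whether ALT text is useful can be illustrated with a short sketch. The Python code below is not WAVE and does not reproduce its rules; it is a minimal illustration, assuming a hypothetical list of placeholder words (`GENERIC_ALT`) and hypothetical names (`AltTextAudit`, `audit_alt_text`) chosen for this example. It separates images that lack an ALT attribute from images whose ALT text is present but uninformative, which is the most an automated check can approximate.

```python
from html.parser import HTMLParser

# Assumption for illustration: a small, hypothetical set of placeholder
# strings that convey nothing about the image they describe.
GENERIC_ALT = {"image", "picture", "photo", "graphic", "img"}


class AltTextAudit(HTMLParser):
    """Collects <img> tags with a missing or uninformative alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []  # src of images with no alt attribute at all
        self.generic = []  # src of images whose alt text is a placeholder

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src", "(no src)")
        if "alt" not in attributes:
            self.missing.append(src)
        elif (attributes["alt"] or "").strip().lower() in GENERIC_ALT:
            self.generic.append(src)
        # An empty alt="" is left alone: it is a valid way to mark a
        # purely decorative image.


def audit_alt_text(html):
    """Return (missing, generic) lists of image sources for one page."""
    parser = AltTextAudit()
    parser.feed(html)
    parser.close()
    return parser.missing, parser.generic
```

A check like this can flag “image” as a likely placeholder, but it still cannot decide whether a longer description is accurate or helpful, which is why expert review and testing with users with disabilities remain necessary.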

Mobile Devices and the Digital Divide

Part of creating a positive user experience is enabling users to access sites in the ways they prefer to or are set up to access them, and a growing body of users employ cell phones to access websites. Accessible designs often help make websites more usable and portable, but media-specific style sheets (such as a style sheet that changes the page for mobile devices or for printing) are also important. These style sheets allow designers to provide alternative instructions in the code that help adapt the design of a web page based on how the user is accessing the page. For example, a designer might have separate style sheets for a regular computer screen, for a mobile device, and for printing. Without a media-specific style sheet, an otherwise attractive and usable site can become unusable on a mobile device. Some devices, such as the iPhone, allow users to scroll and zoom, but these activities become cumbersome, especially on a complex page that has multiple menus and is designed for a 1024 x 768 pixel display.
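One way to see what a media-specific style sheet looks like in a page’s code is to list the media targets a homepage declares. The Python sketch below is an illustration only, not the procedure used in this study: the class and function names are hypothetical, and the rule in `looks_mobile_ready` (a `handheld` media type or a width-based media query) is an assumed heuristic rather than a published standard.

```python
import re
from html.parser import HTMLParser


class MediaStyleAudit(HTMLParser):
    """Collects media targets declared by <link>, <style>, and @media rules."""

    def __init__(self):
        super().__init__()
        self.media_types = set()
        self._in_style = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rel = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "stylesheet" in rel:
            self._record(attributes.get("media"))
        elif tag == "style":
            self._record(attributes.get("media"))
            self._in_style = True

    def handle_endtag(self, tag):
        if tag == "style":
            self._in_style = False

    def handle_data(self, data):
        # Inside a <style> block, look for embedded @media rules.
        if self._in_style:
            for query in re.findall(r"@media\s+([^{]+)\{", data):
                self._record(query)

    def _record(self, media):
        # A media attribute may list several comma-separated targets.
        for part in (media or "").lower().split(","):
            part = part.strip()
            if part:
                self.media_types.add(part)


def media_targets(html):
    """Return the set of media targets declared in one page's HTML."""
    parser = MediaStyleAudit()
    parser.feed(html)
    parser.close()
    return parser.media_types


def looks_mobile_ready(media_types):
    """Assumed heuristic: a handheld type or a width-based media query."""
    markers = ("handheld", "max-width", "max-device-width")
    return any(marker in media for media in media_types for marker in markers)
```

This sketch inspects only the HTML it is given; style sheets that are imported from inside external CSS files would require fetching and scanning those files as well.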

Lack of mobile access is a problem not only for urban, affluent users, but also for users affected by the digital divide. Smith’s (2011) Pew Research Center survey found that 45% of cell-phone owning adults surveyed (in both Spanish and English) use cell phones to access the Internet. Some groups of people are significantly more likely to use cell phones to access the Internet: Only 39% of non-Hispanic White cell phone owners use their phones to access the Internet, as opposed to 56% of non-Hispanic Blacks and 51% of Hispanics. Although rural users are less likely on the whole to use cell phones this way, the demographics in Alabama suggest that residents would benefit from web pages designed for mobile access. Alabama has more than double the national-average proportion of non-Hispanic Black residents—26%, as opposed to 12.2% nationally (U.S. Census Bureau, 2010a; U.S. Census Bureau, 2010b; U.S. Census Bureau, 2010c). Even with a comparatively low Hispanic population, these minorities combined exceed the national average by 1.4%.

With an increasing percentage of Americans accessing the web via mobile devices, it is important that governments at all levels ensure that users can access e-government information on these devices. Prior studies (e.g., Shareef, Kumar, Kumar & Dwivedi, 2011) have called for researchers to begin examining how governments are leveraging mobile devices in delivering information and providing services.

Hypotheses

Prior studies have found positive correlations between the demographic variables of county population, per-capita income, and median household income and the adoption of e-government services nationally (Huang, 2006) but no significant correlations between these demographics and website usability at the municipal level in Alabama (Youngblood & Mackiewicz, 2012). This study focused on the following four hypotheses.

Portal adoption

Higher numbers of residents might require more coordinated websites to have a better user experience finding the resources they need in a complex local government, and higher income (as it manifests in budgets) might facilitate portals. Huang (2006) found that, at the national level, portal adoption correlated with county population, per capita income, and median household income. We hypothesized that these correlations would hold true for contemporary Alabama county portals.

Usability

As sites grow in complexity, structuring information can become more difficult. For instance, a site with a single page and links to two county departments and three county services (e.g., a local hospital) presents less of a navigation challenge to a designer than a site with 40 pages and links to 23 county departments and services. If population and income were to provide more opportunities for complexity (more local services and greater funding for departmental sites and the county portals themselves), the resulting complexity could pose design challenges. Thus, we hypothesized that there would be no correlation between county web portal usability (using basic, broadly accepted standards such as Cappel & Huang, 2007; West, 2008; Youngblood & Mackiewicz, 2012) and either county population or income.

Best coding practices

Best-practice coding, including accessibility standards, valid HTML, and the use of external style sheets (such as Cappel & Huang, 2007; WebAIM, n.d.-d; West, 2008; Youngblood & Mackiewicz, 2012), facilitates user access. Again, the demographic variables above may generate both higher demand for quality sites and the resources to produce them. We hypothesized that there would be a positive correlation between best practices in coding and each demographic variable.

New communication technologies

The widespread adoption of mobile Internet access by the general population is still relatively recent. Given this recency and Huang’s (2006) finding that counties tend to have low adoption rates of advanced services such as transactional services, regardless of demographics, we hypothesized that there would be no correlation between the adoption of new communication technologies and either county population or income.

Methods

Below, we describe this study’s design, our materials, and our procedures, arranged by line of inquiry.

Study Design

After compiling a list of counties, we identified websites that serve as portals—sites that represent not a single department but the county as a whole, often linking to departments and resources available in a given county. We then conducted a heuristic evaluation of the usability of each homepage, followed by an automated evaluation of best coding practices—the use of valid and accessible coding—and a manual evaluation of the homepage code to see if the designers had employed Cascading Style Sheets (CSS), style sheets that consolidate codes for appearance and separate those codes from the semantic, or meaning-laden, code, so sites are consistent and easier to maintain. We also examined code for CSS for mobile devices, or coding that would make a site more functional on a mobile device. We then analyzed the data to test each of our hypotheses.

The tests for each hypothesis examined correlations between the website variables and, separately, each of three county demographic variables: population, per-capita income, and median household income. In cases in which the website data were continuous, we tested for Pearson product-moment correlations (Pearson’s r). In cases in which the website data were dichotomous, we tested for point-biserial correlation (rpb), a variant of Pearson’s r. In all cases, the level of significance was set at p < 0.05 to establish critical values, and the t-value of the correlation coefficient, r or rpb, was compared to the critical value for t to test for significance. In cases where the expected correlation was directional, we used a one-tailed test.
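The testing procedure described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the critical value is supplied by the caller from a standard t-table, and the t-value uses the usual conversion t = r·sqrt((n − 2)/(1 − r²)) with n − 2 degrees of freedom. Because the point-biserial coefficient is Pearson’s r computed with one variable coded 0 or 1, the same function serves both cases.

```python
import math


def pearson_r(xs, ys):
    """Pearson product-moment correlation for two equal-length sequences.

    If one sequence is coded 0/1, the result is the point-biserial
    correlation (rpb).
    """
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two sequences of equal length, n >= 3")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """Convert a correlation coefficient to a t-value with n - 2 df."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


def is_significant(r, n, critical_t, one_tailed=False):
    """Compare the t-value of r with a critical t taken from a t-table.

    The one-tailed branch assumes the hypothesized direction is positive.
    """
    t = t_statistic(r, n)
    return t > critical_t if one_tailed else abs(t) > critical_t
```

For the 38 functional portals (36 degrees of freedom), a standard t-table gives critical values of approximately 1.688 for a one-tailed test and 2.028 for a two-tailed test at the 0.05 level, so a call might look like `is_significant(r, 38, 1.688, one_tailed=True)`.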

Materials

We viewed the pages on an Apple computer using OS X 10.6.8 with Firefox 6.02. We set the browser size to 1024 x 768 pixels using Free Ruler v. 1.7b5 to check page sizes. We tested each county portal homepage’s accessibility using the WAVE online accessibility tool (WebAIM, n.d.-b) described above. We tested compliance with World Wide Web Consortium (W3C) coding standards by submitting each county homepage to the W3C’s HTML validation tool (W3C, 2010).

Procedure

The following sections discuss how we categorized our data into four primary areas: the adoption of portals, usability, best coding practices, and the adoption of new communication technology.

Adoption of portals

To examine adoption of portals—main county websites—we compiled a list of county web portal addresses for the 67 Alabama counties. We built the list by doing the following:

- We examined the 45 county links on the State of Alabama website (State of Alabama, n.d.).

- We searched for portals for 10 links that were incorrect (either abandoned URLs or links to an organization other than the county, such as a city in the county).

- We searched for the 22 counties listed as not having portals.

To identify missing sites, we used a Google search for the county name and Alabama, and we examined the first 30 results. In all, we identified 39 county portal websites out of the 67 counties in Alabama. Once we identified the sites, we collected our data during a 24-hour period in September 2011.

One county’s portal was not functional during data collection. That county’s data were collected at a later date and used for the analysis of portal presence (n=67). The remaining analyses examined only the portals functional during the study (n=38). We did not code departmental sites, such as county school district and county sheriff sites. Nor did we include county commissioner sites because some serve only their commissions, functioning as departmental sites.

Usability

To address adherence to basic and broadly accepted usability standards, we used a set of 14 dichotomous web usability standards developed from prior research (Cappel & Huang, 2007; Pew Center on the States, 2008; Youngblood & Mackiewicz, 2012) to code the homepage of each site and, for several measures, a sample of three internal (secondary) pages. We analyzed all portals functional during the study (n=38). For each standard, we recorded adherence to the practice as a 1 and failure to adhere as a 0. The standards, listed in detail in the Results section, are grouped by adherence to the following:

- overall design standards, such as not using a splash page

- conventions for hyperlinked text in main text areas, such as using blue text links; where text links were absent from homepages, these measures were assessed on internal pages

- navigational standards, such as using a county logo/name as a home link on internal pages

- findability using a search engine, i.e., whether entering the county name and state into Google returns a link to the county website within the first 10 results; users will often not go past the first page

- the resources directly available to these developers

- the background and training of these developers

- the resources available within local governments

- other constraints, such as resistance to change

- the approaches local governments can take to eliminate or work within constraints to resolve website quality problems

- How are these sites being developed and who are the people developing them? During the code evaluation, we noticed that several of the sites appeared to be built using outdated or problematic tools, such as FrontPage 2000 and Microsoft Word. Other sites appear to have been built by a drag-and-drop site builder or by commercial firms, though it is worth noting that one of the latter provided a county with an entirely Flash-based website.

- How aware are designers and decision makers of best practice guidelines such as usability and accessibility heuristics? While usability practitioners are well aware of these, there may be a need to increase awareness of these heuristics.

- What resources do local governments need to better leverage e-government and increase site usability and accessibility? Our analysis revealed substantial usability and accessibility problems, as well as issues with valid HTML (only 10.8% of homepages) and the tendency to not leverage newer technologies, such as mobile or other alternate style sheets (only 15% of sites used CSS media definitions). While part of the issue may be awareness, these problems could also stem from financial constraints or a lack of easy access to area experts.

- Alonso-Ríos, D., Vázquez-García, A., Mosqueira-Rey, E., & Moret-Bonillo, V. (2009). Usability: A critical analysis and a taxonomy. International Journal of Human-Computer Interaction, 26(1), 53–74.

- Baker, D. L. (2009). Advancing e-government performance in the United States through enhanced usability benchmarks. Government Information Quarterly, 26(1), 82–88. doi:10.1016/j.giq.2008.01.004

- Benton, C. J. (2002). County service delivery: Does government structure matter? Public Administration Review, 62(4), 471–479. doi:10.1111/0033-3352.00200

- Borges, J. A., Morales, I., & Rodríguez, N. J. (1996). Guidelines for designing usable World Wide Web pages. 1996 Conference on Human Factors in Computing Systems, CHI ‘96 Conference Companion on Human Factors in Computing Systems, Vancouver, Canada (pp. 277–278). New York, NY: ACM. doi:10.1145/257089.257320

- Bowman, A., & Kearney, R. (2010). State and local government (8th ed.). Boston, MA: Wadsworth, Cengage Learning.

- Bronstein, J. M., & Morrisey, M. A. (2000). Eye health needs assessment for Alabama. Birmingham, AL: The EyeSight Foundation of Alabama. Retrieved November 9, 2011 from http://www.eyesightfoundation.org/uploadedFiles/File/ESFANeedsAssessment.doc

- Cappel, J. J., & Huang, Z. (2007). A usability analysis of company websites. Journal of Computer Information Systems, 48(1), 117–123.

- Cassell, M. K., & Mullaly, S. (2012). When smaller governments open the window: A study of web site creation, adoption, and presence among smaller local governments in northeast Ohio. State and Local Government Review, 44(2), 91–100. doi:10.1177/0160323X12441602

- DeWitt, A. J. (2010). Examining the order effect of website navigation menus with eye tracking. Journal of Usability Studies, 6(1), 39–47.

- Evans-Cowley, J. S. (2006). The accessibility of municipal government websites. Journal of E-Government, 2(2), 75–90. doi:10.1300/J399v02n02_05

- Fagan, J. C., & Fagan, B. (2004). An accessibility study of state legislative web sites. Government Information Quarterly, 21(1), 65–85. doi:10.1016/j.giq.2003.12.010

- Fogg, B. J., Soohoo, C., Danielson, D. R., Marable, L., Stanford, J., & Tauber, E. R. (2003, June 5–7). How do users evaluate the credibility of Web sites? Proceedings of DUX2003, Designing for User Experiences (pp. 1–15). San Francisco, CA: ACM. doi:10.1145/997078.997097

- Ho, A. T. (2002). Reinventing local governments and the e-government initiative. Public Administration Review, 62(4), 434–444. doi:10.1111/0033-3352.00197

- Huang, Z. (2006). E-Government practices at local levels: An analysis of U.S. counties’ websites. Issues in Information Systems, 7(2), 165–170. doi:10.1057/palgrave.ejis.3000675

- Huang, Z., Brooks, L., & Chen, S. (2009). The assessment of credibility of e-government: Users’ perspective. In G. Salvendy & M. J. Smith (Eds.), Human interface and the management of information. Information and interaction (Vol. 5618, pp. 26–35). Berlin, Heidelberg: Springer Berlin Heidelberg. Retrieved November 2011 from http://www.springerlink.com/content/1741260x54246247/

- ISO 9241. (1998). Ergonomic requirements for office work with visual display terminals (VDTs). Part 11: Guidance on usability. International Organization for Standardization, Geneva, Switzerland.

- Jaeger, P. T. (2006). Assessing Section 508 compliance on federal e-government web sites: A multi-method, user-centered evaluation of accessibility for persons with disabilities. Government Information Quarterly, 23(2), 169–190. doi:10.1016/j.giq.2006.03.002

- Johnson, R. R., Salvo, M. J., & Zoetewey, M. W. (2007). User-centered technology in participatory culture: Two decades “beyond a narrow conception of usability testing.” IEEE Transactions on Professional Communication, 50(4), 320–332. doi:10.1109/TPC.2007.908730

- Kessler Foundation and National Organization on Disabilities. (2010). The ADA, 20 years later: Kessler Foundation/NOD survey of Americans with disabilities. Retrieved December 2011 from http://www.2010DisabilitySurveys.org/pdfs/surveyresults.pdf

- Leporini, B., & Paternò, F. (2008). Applying web usability criteria for vision-impaired users: Does it really improve task performance? International Journal of Human-Computer Interaction, 24(1), 17–47. doi:10.1080/10447310701771472

- Loiacono, E. T., Romano Jr., N. C., & McCoy, S. (2009). The state of corporate website accessibility. Communications of the ACM, 52(9), 128–132.

- Muswazi, P. (2009). Usability of university library home pages in southern Africa: A case study. Information Development, 25(1), 51–60.

- Nielsen, J. (1996). Usability metrics: Tracking interface improvements. IEEE Software, 13(6), 12.

- Nielsen, J., & Tahir, M. (2002). Homepage usability: 50 websites deconstructed. Berkeley, CA: New Riders.

- Olalere, A., & Lazar, J. (2011). Accessibility of US federal government home pages: Section 508 compliance and site accessibility statements. Government Information Quarterly, 28(3), 303–309. doi:10.1016/j.giq.2011.02.002

- Parent, M., Vandebeek, C. A., & Gemino, A. C. (2005). Building citizen trust through e-government. Government Information Quarterly, 22, 720–736. doi:10.1016/j.giq.2005.10.001

- Pearrow, M. (2000). Web site usability handbook. Boston, MA: Charles River Media.

- Petrie, H., & Kheir, O. (2007, April 28–May 3). The relationship between usability and accessibility of websites. Proceedings of ACM CHI 2007 Conference on Human in Computing Systems (pp. 397–406). San Jose, CA: ACM. doi:10.1145/1240624.1240688

- Pew Center on the States. (2008). Being online is not enough: State elections websites. Retrieved October 16, 2013 from http://www.pewtrusts.org/uploadedFiles/wwwpewtrustsorg/Reports/Election_reform/VIP_FINAL_101408_WEB.pdf

- Potter, A. (2002). Accessibility of Alabama government Web sites. Journal of Government Information, 29(5), 303–317. doi:10.1016/S1352-0237(03)00053-4

- Rehabilitation Act Amendments of 1998, Section 508 29 U.S.C. § 794d. (1998).

- Salvo, M. J. (2004). Rhetorical action in professional space. Journal of Business and Technical Communication, 18(1), 39–66. doi:10.1177/1050651903258129

- Scott, J. K. (2005). Assessing the quality of municipal government Web sites. State and Local Government Review, 37(2), 151–165. doi:10.1177/0160323X0503700206

- Shareef, M. A., Kumar, V., Kumar, U., & Dwivedi, Y. K. (2011). E-Government Adoption Model (GAM): Differing service maturity levels. Government Information Quarterly, 28(1), 17–35. http://dx.doi.org/10.1016/j.giq.2010.05.006

- Smith, A. (2011). Americans and their cell phones: Mobile devices help people solve problems and stave off boredom, but create some new challenges and annoyances. Pew Internet and American Life Project (pp. 1–19). Washington D.C.: Pew Research Center. Retrieved November 2012 from http://www.pewinternet.org/~/media//Files/Reports/2011/Cell%20Phones%202011.pdf

- State of Alabama. (n.d.). State of Alabama – info.alabama.gov – County web sites. Retrieved September 2011 from http://www.info.alabama.gov/directory_county.aspx

- Sullivan, P. Interview with M. W. Zoetewey. (2006). Quoted in Johnson, R. R., Salvo, M. J., & Zoetewey, M. W. (2007). User-centered technology in participatory culture: Two decades “beyond a narrow conception of usability testing.” IEEE Transactions on Professional Communication, 50(4), 320–332. doi:10.1109/TPC.2007.908730

- Theofanos, M. F., & Redish, J. (2003). Bridging the gap: Between accessibility and usability. Interactions, 10(6), 36–51. doi: 10.1145/947226.947227.

- U.S. Census Bureau (2010a). 2010 American Community Survey 1 year estimates: Selected social characteristics in the United States.

- U.S. Census Bureau (2010b). Profile of general population and housing characteristics: 2010. 2010 Demographic profile data: Alabama. http://factfinder2.census.gov/faces/tableservices/jsf/pages/productview.xhtml?pid=DEC_10_DP_DPDP1&prodType=table

- U.S. Census Bureau (2010c). Profile of general population and housing characteristics: 2010. 2010 Demographic profile data: United States. Retrieved September 2011 from http://factfinder2.census.gov/faces/tableservices/jsf/pages/productview.xhtml?pid=DEC_10_DP_DPDP1&prodType=table

- U.S. Department of Health and Human Services. (2006). Research-based web design and usability guidelines. Retrieved September 2010 from http://usability.gov/guidelines/index.html

- U.S. Department of Health and Human Services. (n.d.). Usability home. Usability.gov. Retrieved September 2011 from http://www.usability.gov/

- U.S. General Services Administration. (2013a). First Fridays usability testing program. Retrieved March 2013 from http://www.howto.gov/web-content/usability/first-fridays#learn-how-to-facilitate-tests

- U.S. General Services Administration. (2013b). User experience community. Retrieved March 2013 from http://www.howto.gov/communities/federal-web-managers-council/user-experience

- WebAIM. (n.d.-a). Introduction to web accessibility. WebAIM. Retrieved December 2011 from http://webaim.org/intro/

- WebAIM. (n.d.-b). WAVE – Web accessibility evaluation tool. WebAIM. Retrieved September 2011 from http://wave.webaim.org/

- WebAIM. (n.d.-c). Index of WAVE icons. WebAIM. Retrieved December 2011 from http://wave.webaim.org/icons, available at http://web.archive.org/web/20121007073407/http:/wave.webaim.org/icons

- WebAIM. (n.d.-d). WebAIM’s WCAG 2.0 checklist for HTML documents. WebAIM. Retrieved December 2011 from http://webaim.org/standards/wcag/checklist

- West, D. M. (2005). State and federal electronic government in the United States, 2005. Retrieved September 2011 from http://www.insidepolitics.org/egovt05us.pdf

- West, D. M. (2006). State and federal electronic government in the United States, 2006. Retrieved September 2011 from http://www.insidepolitics.org/egovt06us.pdf

- West, D. M. (2007). State and federal electronic government in the United States, 2007. Retrieved September 2011 from http://www.insidepolitics.org/egovt07us.pdf

- West, D. M. (2008). State and federal electronic government in the United States, 2008. Washington D.C.: The Brookings Institution. Retrieved September 2011 from http://www.brookings.edu/~/media/research/files/reports/2008/8/26%20egovernment%20west/0826_egovernment_west.pdf

- Williams, M. F. (2009). Understanding public policy development as a technological process. Journal of Business and Technical Communication, 23(4), 448–462. doi:10.1177/1050651909338809

- World Wide Web Consortium. (2010). W3C markup evaluation service. Why validate? Retrieved November 2011 from http://validator.w3.org/docs/why.html

- Youngblood, N. E., & Mackiewicz, J. (2012). A usability analysis of municipal government website home pages in Alabama. Government Information Quarterly, 28(4), 582–588. http://dx.doi.org/10.1016/j.giq.2011.12.010

- Zappen, J. P., Harrison, T. M., & Watson, D. (2008, May 18–21). A new paradigm for designing e-government: Web 2.0 and experience design. Proceedings of the 2008 International Conference on Digital Government Research (pp. 17–26). Montreal, Canada: Digital Government Society of North America. Available at http://dl.acm.org/citation.cfm?id=1367839

Intercoder reliability for these measures, based on an 11% overlap, had a Cohen’s kappa value of 1.0 (the two coders, the authors, completely agreed), which is not unusual for a study using dichotomous measures. We examined the correlation between each demographic variable and web portal usability by testing both the usability index (the sum of each portal’s usability scores, with a potential high score of 14) and each separate usability measure.
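The two calculations in this paragraph, Cohen’s kappa for dichotomous codes and the summed usability index, can be illustrated with a minimal Python sketch. The function names and the sample codes are hypothetical and are not the study’s data; the sketch only shows why complete agreement between two coders yields a kappa of 1.0.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who rated the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items on which the coders gave the same code.
    p_observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of the coders' marginal proportions, summed over codes.
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    p_chance = sum(counts_a[code] * counts_b[code] for code in counts_a) / (n * n)
    if p_chance == 1.0:
        # Both coders used one identical code throughout; kappa is undefined (0/0).
        return float("nan")
    return (p_observed - p_chance) / (1 - p_chance)


def usability_index(scores):
    """Sum of a portal's dichotomous (0/1) usability scores."""
    return sum(scores)


# Hypothetical codes (1 = meets the criterion, 0 = does not); not the study's data.
coder_a = [1, 0, 1, 1, 0, 1, 0, 1]
coder_b = [1, 0, 1, 1, 0, 1, 0, 1]  # identical to coder_a
coder_c = [1, 0, 1, 0, 0, 1, 0, 1]  # differs from coder_a on one item

kappa_full = cohens_kappa(coder_a, coder_b)
kappa_partial = cohens_kappa(coder_a, coder_c)

# Hypothetical portal scored on 14 criteria; it meets 11 of them.
portal_scores = [1, 1, 1, 0, 1, 1, 0, 1, 1, 1, 0, 1, 1, 1]
index = usability_index(portal_scores)
```

With identical codes, observed agreement is 1 and kappa is 1.0 regardless of the chance-agreement term; a single disagreement in this small hypothetical sample already lowers kappa to 0.75.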

Best coding practices

To test for best coding practices, we used machine testing of accessibility and compliance with W3C coding standards, checking each of the 38 functional portals. First, using WAVE, we examined whether the page included any W3C Priority Level One accessibility compliance errors, that is, violations of standards that the W3C states developers must adhere to (West, 2008). We also recorded the types of errors and how many of each error there were, and we documented major issues. WAVE does not test Flash-based content, and the majority of content on two of the portals was Flash based. We included these Flash-based portals in the analysis because WAVE checked the HTML containing the Flash, but we could not ensure that these sites were, in fact, accessible. Although automated accessibility testing software does not replace user and/or expert-based evaluations, it is a good start for accessibility testing, offering diagnostics and a general test of accessibility, particularly for a large set of sites.

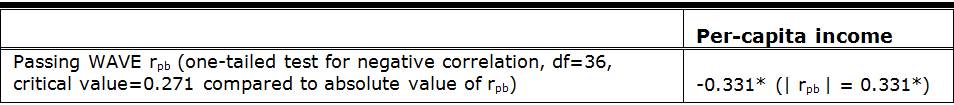

Next, we used the W3C’s HTML validation tool to test each county’s homepage for compliance with W3C coding standards, and we recorded the number of errors. The validation tool was unable to analyze one of the websites, as noted in Table 3, despite multiple attempts. Valid markup typically demonstrates a level of professional competency, helps with accessibility, and makes the site less susceptible to browser changes, particularly as browsers have become more standards-based. Like WAVE, the W3C Validator is an automated tool, and this brings with it limitations, most notably the fact that coding errors can have a cascade effect in which a single error triggers multiple error messages. To test the possible correlation between best practices in coding and the demographic variables, we separately calculated the correlation between each demographic variable and both (a) passing WAVE with no errors and (b) having W3C valid code.

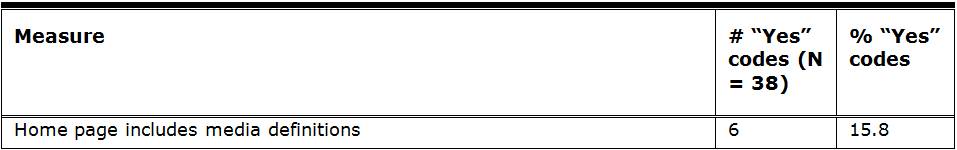
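Correlating a pass/fail outcome (such as passing WAVE with no errors) with a continuous demographic variable is commonly done with a point-biserial correlation, which is numerically the Pearson correlation with the outcome coded as 0/1. The following is a minimal sketch under that assumption; the function names and the income figures are hypothetical and do not reproduce the study’s data or software.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


def point_biserial(passed, values):
    """Point-biserial r: Pearson r with the dichotomous variable coded 0/1."""
    return pearson_r([1.0 if p else 0.0 for p in passed], values)


# Hypothetical per-capita incomes and WAVE pass/fail results; not the study's data.
incomes = [18000, 19500, 21000, 22500, 24000, 26000]
passed_wave = [True, True, False, True, False, False]

r_pb = point_biserial(passed_wave, incomes)
```

In this made-up sample the passing sites cluster at lower incomes, so the coefficient is negative, the same direction as an inverse relationship between income and passing.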

New communication technology

We also looked to see how counties were approaching the user experience, particularly the use of new communication technology. To address this issue, we examined the HTML code to see if the associated style sheets included the attribute “media” and what media were included (such as print, screen, mobile) or if the accompanying style sheet included an “@media” statement, which serves the same purpose. We then tested for correlations between media styles (including @media statements) and the demographic variables.
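A check of this kind can be approximated in code. The sketch below uses Python’s standard `html.parser` module to collect `media` attributes on style sheet references and to flag `@media` rules inside inline style blocks. The class name, helper name, and sample markup are hypothetical; the study’s inspection was not necessarily automated, and external style sheets would need to be downloaded and passed to the helper separately.

```python
import re
from html.parser import HTMLParser


def css_has_media_rule(css_text):
    """True if the CSS text contains an @media rule."""
    return re.search(r"@media\b", css_text, re.IGNORECASE) is not None


class MediaStyleFinder(HTMLParser):
    """Collects media attributes on style sheets and flags inline @media rules."""

    def __init__(self):
        super().__init__()
        self.media_attrs = []      # values found in media="..." attributes
        self.has_at_media = False  # True once an inline <style> block uses @media
        self._in_style = False

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        rel = (attr_map.get("rel") or "").lower()
        is_stylesheet_link = tag == "link" and "stylesheet" in rel.split()
        if is_stylesheet_link or tag == "style":
            media = attr_map.get("media")
            if media:
                # A media attribute may list several comma-separated media.
                self.media_attrs.extend(m.strip().lower() for m in media.split(","))
        if tag == "style":
            self._in_style = True

    def handle_endtag(self, tag):
        if tag == "style":
            self._in_style = False

    def handle_data(self, data):
        if self._in_style and css_has_media_rule(data):
            self.has_at_media = True


# Hypothetical homepage markup; not taken from any county site.
sample_html = """
<html><head>
<link rel="stylesheet" href="main.css" media="screen">
<link rel="stylesheet" href="print.css" media="print">
<style>@media handheld { #nav { display: none; } }</style>
</head><body></body></html>
"""

finder = MediaStyleFinder()
finder.feed(sample_html)
uses_media_styles = bool(finder.media_attrs) or finder.has_at_media
```

A site would count as using media styles if either signal is present, which mirrors treating the `media` attribute and the `@media` statement as serving the same purpose.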

Results

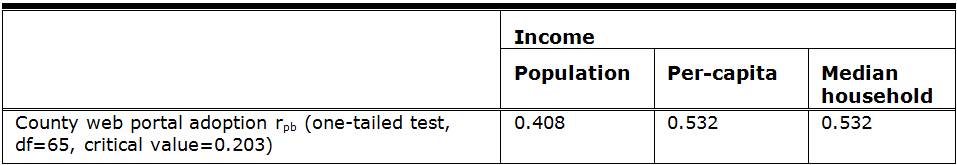

We identified 39 county web portals. As we describe in the Methods section, one was not functional during the study. Over half of the Alabama counties, 58.2%, have adopted web portals for e-government. The results support the portal adoption hypothesis: Web portal adoption is weakly correlated with population and moderately correlated with income (see Table 1).

Table 1. Correlation Between Web Portal Adoption and Population and Income

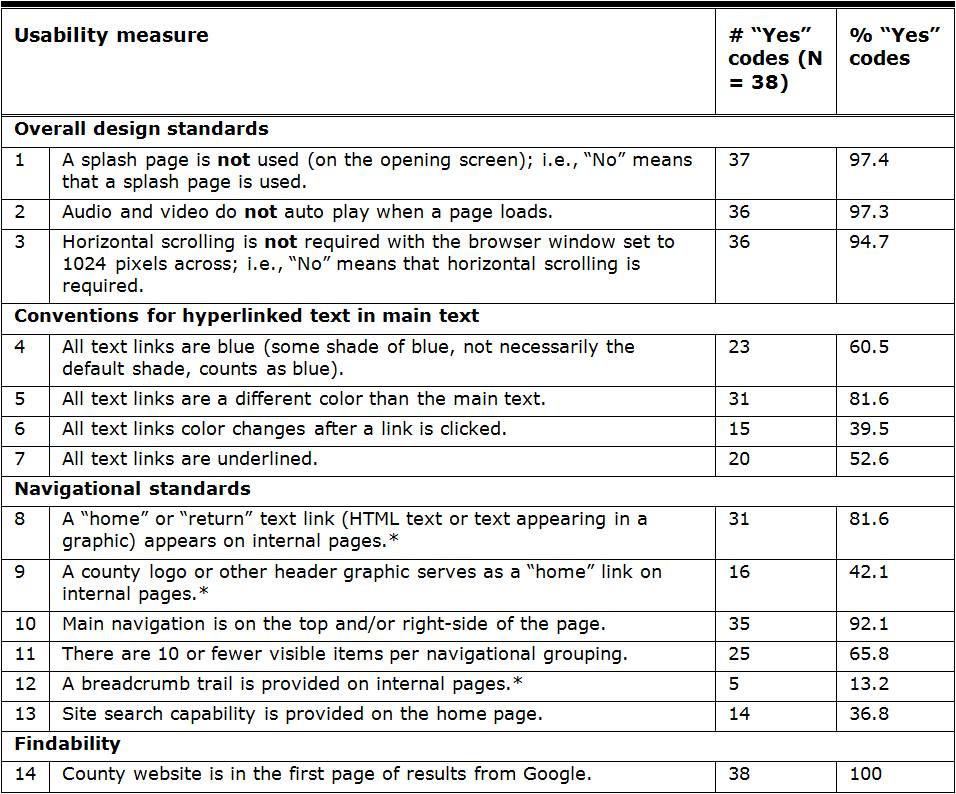

The usability hypothesis asked how well Alabama county websites adhere to basic and broadly accepted usability standards. Sites adhered to some of the standards and best practices, but many were lacking in multiple ways. Table 2 provides detailed results of the measure-by-measure adherence to usability practices.

Table 2. Usability of County Sites

* Always assessed on internal pages.

The findings in this portion of the study were at least partly in line with those in the Youngblood and Mackiewicz (2012) analysis of Alabama municipal websites. By and large, the county websites included a link labeled home at either the top of the left-hand navigation or at the left of the top navigation (81.2%). Sites without the labeled link either used a house-shaped icon or relied on the user knowing to click on the logo or header graphic. With only three exceptions, the county sites placed their navigation in the recommended locations: the left and/or top of the page. County sites also typically fit within the suggested page width of 1024 pixels (94.7%). The main county pages were also easy to locate through Google: all appeared within the first 10 search results (the first page). Most sites (81.6%) used text links that were a different color than the surrounding text. Substantially fewer had links that changed color after being visited (39.5%) or were underlined (52.6%). On average, sites met between 10 and 11 of the 14 tested usability criteria.

We expected the results to support the null hypothesis that usability would not correlate with demographic factors, and indeed neither the usability indexes (the composite usability scores) nor the individual usability variables correlated with the demographic variables. For two-tailed tests (df=36), r and rpb were less than the critical value of 0.320.
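The critical value of 0.320 can be recovered from the t distribution. A correlation r with df degrees of freedom is significant when |r| exceeds t / sqrt(t² + df), where t is the critical t value. The sketch below assumes a two-tailed test at α = .05 (the significance level is an assumption here, chosen because it reproduces the reported value) and takes t = 2.028 for df = 36 from a standard t table.

```python
import math


def critical_r(t_critical, df):
    """Smallest |r| that reaches significance, given the critical t and df = n - 2."""
    return t_critical / math.sqrt(t_critical ** 2 + df)


# Assumption: two-tailed alpha = .05; t value for df = 36 taken from a standard t table.
T_CRITICAL_DF36 = 2.028
DF = 38 - 2  # 38 functional portals, two variables per correlation

r_crit = critical_r(T_CRITICAL_DF36, DF)
```

Under these assumptions `r_crit` rounds to 0.320, so any coefficient with a smaller absolute value is not statistically significant for this sample size.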

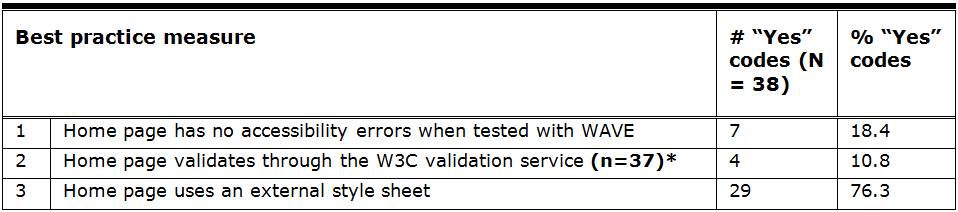

We then addressed adherence to best practice coding, including accessibility standards, valid HTML, and the use of external style sheets (see Table 3). The majority of Alabama county sites did not have valid code; only 10.8% had no validation errors. The same was true for accessibility errors when tested in WAVE; only 18.4% were error-free when we included the two mostly Flash-based sites in the analysis (n=38). Even excluding those sites (n=36), the passage rate is still low: 19.4%. For detailed accessibility results, see Table 4. However, the majority (76.3%) did have external style sheets.
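The percentages in this paragraph use different denominators (38 portals for WAVE and style sheets, 36 when the two mostly Flash-based sites are excluded, and 37 for validation because one site could not be validated). The short sketch below shows site counts that are consistent with the reported percentages. The counts are inferred from the percentages for illustration and are not figures stated in the study.

```python
def pct(count, total):
    """Percentage rounded to one decimal place, as reported in the text."""
    return round(100 * count / total, 1)


# Inferred counts (assumptions), chosen to match the reported percentages.
wave_pass_all = pct(7, 38)        # all functional portals
wave_pass_no_flash = pct(7, 36)   # excluding the two mostly Flash-based sites
valid_html = pct(4, 37)           # one site could not be validated
external_css = pct(29, 38)
```

The WAVE pass rate rises only slightly when the Flash-based sites are dropped because the numerator stays the same while the denominator shrinks by two.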

Table 3. Overall Accessibility, Validation, and Style Sheet Use of County Sites

* The W3C validation service was unable to run on one county site despite multiple attempts.

Table 4. Error-by-Error WAVE Performance of County Sites

The results supported the null hypotheses for part of the best-coding-practices hypothesis: There was no significant correlation between the demographic variables and either W3C valid code or the use of external style sheets. However, there was an unexpected negative correlation between passing WAVE’s accessibility check and per-capita income, which means that counties with lower per-capita income were more likely to pass the check (see Table 5).

Table 5. Correlation Between Passing WAVE Evaluation and Per-Capita Income

*significant

The last hypothesis asked whether the county websites were prepared to take advantage of new communication technologies such as mobile devices. For the most part, they were not, with only 15.8% being prepared (see Table 6).

Table 6. Mobile-Device Readiness of County Sites

We expected no correlation between adoption of new technologies and either county population or income. As expected, media styles did not have significant correlations with demographics.

Recommendations

A number of the county sites have coding or designs that are behind the times in terms of standards and conventions, and these recommendations address this issue. Several of the criteria above may be rudimentary, yet some of the developers need to solve these rudimentary problems before we can widely and meaningfully address more nuanced criteria across county-level sites. For instance, one county’s website has been redesigned since the time of data collection, but at the time of this writing the design still ignores basic usability principles. For further studies, we recommend building on existing studies of local government website quality to examine the following:

Once more local government sites meet rudimentary standards, we also recommend further examining the adoption and use of style sheets for mobile devices.

Conclusion

In the use of county web portals, Alabama reflects nationwide patterns. At 58.2%, portal adoption among Alabama counties is about 2 percentage points higher than the 56.3% national rate that Huang (2006) identified, and portal adoption is correlated with county population, per capita income, and median household income. Given the 6-year gap between these studies, we might have hoped for wider portal adoption. Alabama county web portals typically meet a number of usability standards, which is encouraging, but lag in a few areas. On comparable usability measures that Youngblood and Mackiewicz (2012) examined in Alabama municipal websites, the county portals typically fared a bit better than their municipal counterparts, with a few exceptions (home links, underlined text links, and search capabilities). Some areas of usability are weak on average, particularly search capabilities and link features. Breadcrumb trails are largely missing, but some sites may not be deep enough to necessitate their use. The failure to adhere to link conventions is more problematic: Link conventions help users identify links in text, return home, and identify parts of the site they have already explored, thus avoiding retreading old ground in a search for information and services.

Even more problematic are the accessibility findings. Research with users with disabilities indicates that although the standards begin to tackle problems, developers need to go beyond standards to make sites universally usable (e.g., Leporini & Paternò, 2008; Theofanos & Redish, 2003). Many of the county homepages failed to meet critical accessibility standards. Around 60% of the homepages had images missing alternative text, and 39.5% had linked images with missing alt attributes. This means that users who rely on alternative text would not be able to access the image content and would be left to wonder if the images were important, particularly in the case of images that served as hyperlinks. In some cases, the WAVE and validation results, though indicators of problems, underestimate the extent of the problems. One county’s attractive portal homepage, text and all, was an image map without alternative text. Thus, not only was the navigation inaccessible, but so was all of the content, and the page was slow to load. In addition, over a third of the sites were missing form labels (such as for search fields). Furthermore, for two of the portals, WAVE could only check the HTML shell, not the Flash-based content within it, possibly meaning that problems here are underreported.

All that said, it is important to remember that Alabama counties are far from alone in having these problems. Accessibility problems are also frequent at the municipal level (e.g., Evans-Cowley, 2006; Youngblood & Mackiewicz, 2012), state level (Fagan & Fagan, 2004; West, 2008), and federal level (Olalere & Lazar, 2011), as well as in e-commerce, including Fortune 100 companies (Loiacono, Romano, & McCoy, 2009). Counties with lower per capita income were more likely to pass a WAVE screening; the correlation is statistically significant and moderate, rather than strong. In other words, a scatterplot of the data produces points that group around a line, but not tightly. The correlation might be related to whether counties have the resources to build complex sites, but more research is warranted to explain the finding.

This study identified a range of areas for improvement in Alabama county websites; however, Alabama counties are not the only governmental organizations facing these issues. State and local governments need to take usability and accessibility into account when allocating funds for developing and maintaining a website. Having identified problems that need to be addressed, the next step is to delve into why these problems exist and how they might best be solved. The following are some questions this study raises:

These issues are perhaps best addressed through a combination of surveys, interviews, and focus groups. Our findings also argue for usability and accessibility practitioners reaching out to form partnerships with local government web developers, a partnership that would benefit not only the field but also the public.