In today’s digital economy, the demand for enhanced user experience is a key driver of user-centered product innovations. To be competitive in a crowded global market, companies must deeply understand users’ needs so that they can create products that resonate with their target markets. One effective way to align product design with users’ needs is by applying the UX-Driven Innovation (UXDI) framework, which employs a scientific approach to creating value through product innovation (Djamasbi & Strong, 2019).

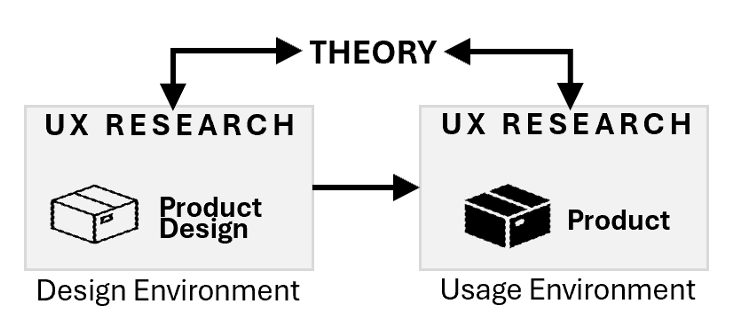

According to the UXDI framework, a product’s life cycle spans two distinct environments. The first is the design environment in which product development begins. In this environment, the design process is informed by business strategy and shaped by factors such as organizational culture, management mindset, and resource availability. The second environment is the usage environment in which the product is launched and used by its intended target market in the real world (Figure 1).

Figure 1: UXDI product innovation.

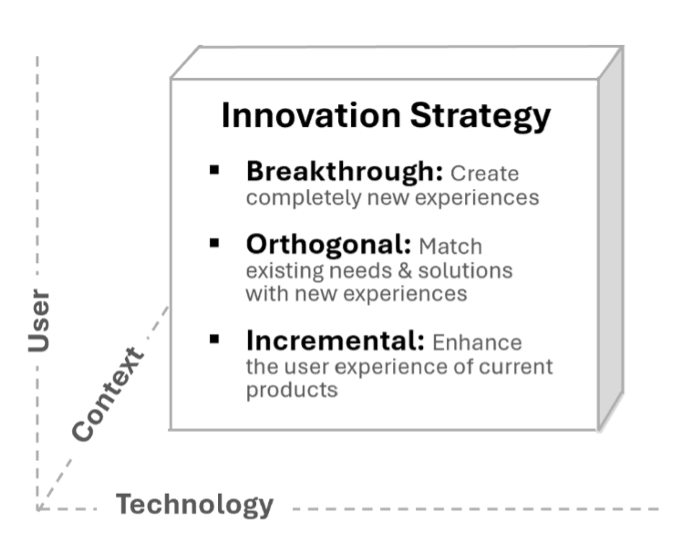

UXDI’s approach to value creation relies heavily on behavioral theories, which provide a scientific framework for exploring the UXDI product design space. This design space is structured around three key dimensions essential to human-centered product design: users, technology, and context.

The user dimension refers to the target market for the product, whereas the context dimension relates to the problem domain or specific situation in which the product will be used. The technology dimension refers to the available technologies that can enable product innovation. Together, these three dimensions help define a problem from the users’ perspective within a specific context, enabling the exploration of technologies—or combinations of technologies—to most effectively address the problem in a way that is perceived as valuable by the intended users (Djamasbi & Strong, 2019).

To clarify how and to what degree product innovation is aligned with short- and long-term business goals, the UXDI design space frames value creation through three key innovation strategies. Incremental innovation refers to significant improvements in user experience design in existing products, such as developing a conversational user interface to enhance information retrieval for a web-based solution (Persons et al., 2021). Orthogonal innovation involves matching existing needs and solutions in new ways to create novel experiences, such as using optical character recognition technology to convert static nutrition fact labels into dynamic feedback icons (thumbs up/thumbs down) that flag healthy choices (Jain et al., 2019; Jain et al., 2020). Finally, breakthrough innovation refers to the development of entirely new experiences, such as using eye movement sensors and AI to detect a user’s high cognitive load and provide real-time feedback to mitigate the negative effects of that load on performance (Shojaeizadeh et al., 2023).

Behavioral theories, which explain user behavior and reactions in specific contexts, can aid in exploring the UXDI design space for product development. Leveraging behavioral theories helps reduce uncertainty during development and minimize the risk of unexpected challenges, making the development process more cost-effective. Moreover, by providing frameworks for predicting user responses to technological solutions (such as the technology acceptance model, the task-technology fit model, and the information systems success model), behavioral theories increase the likelihood of product success, ultimately improving return on investment (Djamasbi & Strong, 2019).

Figure 2: UXDI design space.

UX research within the UXDI framework is integrated throughout the entire product life cycle. A key objective of this research is to understand and explain how people perceive and interact with products. Traditionally, this objective has been achieved using qualitative research methods, such as observation and interviews, or quantitative methods, such as surveys and experiments, to measure perceptions and performance. However, the rise of sensor-based technologies enables UX researchers to move beyond traditional methods and measure experiences objectively through physiological measures, including eye movements, heart rate variability, and facial expressions. The use of physiological measures in UX research not only creates exciting opportunities to evaluate design but also allows for the development of innovative bio-responsive products and services that leverage physiological data to create smarter, more personalized experiences (Shojaeizadeh et al., 2019; Fehrenbacher & Djamasbi, 2017).

In this article, I discuss the value of capturing and analyzing eye movement data in UX research for product development. Because most individuals rely heavily on vision to process information from their environment, eye tracking serves as a powerful tool for capturing users’ perspectives objectively (Djamasbi, 2014). The increasing availability of high-quality, consumer-grade eye-tracking devices presents a significant opportunity to incorporate eye tracking into UX research for product evaluation. These devices allow for the cost-effective collection of eye movement data at scale, making it feasible for companies to integrate eye tracking into routine user testing and product development processes without incurring the substantial costs traditionally associated with such technologies. Additionally, as eye-tracking technologies become more affordable and widely available in consumer products, they create greater opportunities to leverage eye movement data for creating novel and intuitive experiences. This, in turn, should prompt advances in UX theory that deepen our understanding of how to quantify visual behavior and how to apply that knowledge to create value through innovation.

In the following sections, I explain how tracking users’ eye movements can amplify insights gained from traditional UX research and support the development of smart, bio-responsive products. To begin with, I provide a brief overview of how our visual system works and how eye trackers can reveal our information-processing behavior. Next, I present a case study that illustrates how eye tracking can offer researchers a direct and more comprehensive view of a user’s perspective. Then, I explain how subtle shifts in a user’s cognitive processing, detectable through eye movements, can provide valuable input for creating innovative, AI-powered, bio-responsive solutions. I also explain that harnessing and maximizing the benefits of eye-tracking research in product innovation requires the careful development of targeted educational and training programs that integrate scientific eye-tracking in UX research with industry innovation practices. I conclude the article by highlighting the importance of industry-academic collaboration in developing these programs to create a highly competitive workforce capable of shaping the next generation of AI-powered, user-centered product innovations that can detect and address user needs in real time.

The Science of Seeing: How Eye Tracking Reveals Human Cognition

Eye trackers record what we focus on. Because what we focus on is often what we think about (Just & Carpenter, 1980), eye tracking provides valuable insights into our perception and cognition (Djamasbi, 2014).

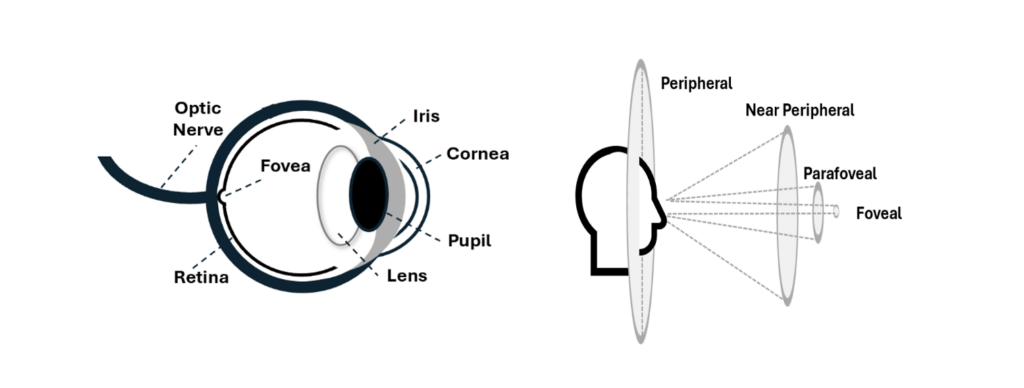

The process of seeing begins when light reflects off an object and enters our eyes. The amount of light entering our eyes is regulated by the constriction and dilation of the pupil, which is continuously adjusted by the autonomic nervous system. This light first hits the retina, a light-sensitive layer at the back of the eye, where two types of receptors—rods and cones—play key roles in how we perceive our environment.

Rods function in low-light conditions, such as in a dark room or at dusk, and are specialized for black-and-white vision. Cones, on the other hand, help us perceive color and work best in bright light like daylight or a well-lit room. The retina contains far more rods (about 120 million) than cones (about 7 million) (Duchowski, 2017). Most of the cones are concentrated in the fovea, a small, central area of the retina. Due to this high concentration, the fovea allows for sharp, detailed vision (Figure 3a).

Figure 3: The anatomy of vision: (a) the structure of the eye, and (b) the cone of vision.

However, the fovea covers only a small part of our visual field: about 2 degrees, roughly the size of a thumbnail held at arm’s length. As we move away from this central point, our vision becomes less sharp. For example, at 5 degrees from the center, our acuity drops to about 50%. Our useful visual field extends to about 30 degrees; beyond that, the peripheral retina is primarily responsible for detecting motion (Duchowski, 2007; Solso, 1996) (Figure 3b).
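The degree values above are measures of visual angle, which depends on both an object’s size and its distance from the eye. As a rough check of the thumbnail comparison, the standard relation θ = 2·arctan(size / (2 · distance)) can be computed directly. The sketch below assumes a thumbnail about 2 cm wide held about 60 cm away; both numbers are illustrative approximations, not values from the cited sources.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Angle (in degrees) subtended at the eye by an object of width size_cm
    viewed from distance_cm: theta = 2 * atan(size / (2 * distance))."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse relation: width (in cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


if __name__ == "__main__":
    # Assumed, approximate values: a ~2 cm thumbnail at ~60 cm (arm's length).
    print(f"Thumbnail: {visual_angle_deg(2.0, 60.0):.1f} degrees")
    # Width of a 2-degree foveal region on a screen viewed from 60 cm.
    print(f"2-degree region at 60 cm: {size_for_angle_cm(2.0, 60.0):.1f} cm")
```

With these assumed values the thumbnail subtends roughly 1.9 degrees, in line with the approximately 2-degree foveal region. The same conversion is commonly used to express on-screen distances in degrees of visual angle.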

To compensate for the limited area of sharp vision provided by the fovea, we constantly move our eyes in rapid jumps known as saccades. These jumps are small when we are reading and larger when we are scanning a room. During saccades, we do not process visual information. Instead, we process visual data when our eyes pause to focus on an object. These relatively stable periods, called fixations, allow us to place an image on the fovea, ensuring clear and detailed vision.

The raw gaze data captured by eye-tracking sensors is translated into key eye movement categories, such as fixations, saccades, and pupillary responses, which provide valuable insights into how we process information. For example, fixations reveal the location, frequency, and duration of our focus on an object, indicating what attracts our attention and to what degree. Saccades offer similar insights by showing how often we shift our focus, how long these shifts last, and how far our eyes travel to refocus attention. The involuntary constriction and dilation of the pupil, known as pupillary responses, reflect our level of involvement in activities like reading or searching, providing further insights into our information-processing behavior.
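The article does not specify how its studies converted raw gaze samples into fixations and saccades. One widely used approach is velocity-threshold identification (I-VT): consecutive samples moving slower than a threshold are grouped into fixations, and faster movements are treated as saccades. The minimal sketch below assumes gaze positions already expressed in degrees of visual angle, a 30 degrees-per-second velocity threshold, and a 100 ms minimum fixation duration; these parameters are illustrative defaults, not values taken from the studies discussed here. It then derives fixation count, mean fixation duration, and the number and size of shifts in focus between fixations.

```python
import math
from dataclasses import dataclass


@dataclass
class GazeSample:
    t_ms: float   # timestamp in milliseconds (time-ordered)
    x_deg: float  # horizontal gaze position in degrees of visual angle
    y_deg: float  # vertical gaze position in degrees of visual angle


@dataclass
class Fixation:
    start_ms: float
    end_ms: float
    x_deg: float  # centroid of the grouped samples
    y_deg: float

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


def ivt_fixations(samples, velocity_threshold=30.0, min_duration_ms=100.0):
    """Group a time-ordered list of GazeSample into fixations (I-VT).

    Consecutive sample pairs whose velocity (degrees/second) is below
    velocity_threshold are merged into one fixation; a faster pair ends the
    current fixation (it is treated as part of a saccade). Fixations shorter
    than min_duration_ms are discarded.
    """
    fixations, group = [], []

    def close_group():
        if group and group[-1].t_ms - group[0].t_ms >= min_duration_ms:
            fixations.append(Fixation(
                group[0].t_ms, group[-1].t_ms,
                sum(s.x_deg for s in group) / len(group),
                sum(s.y_deg for s in group) / len(group)))
        group.clear()

    for prev, cur in zip(samples, samples[1:]):
        dt_s = (cur.t_ms - prev.t_ms) / 1000.0
        if dt_s <= 0:
            continue  # skip duplicate or out-of-order timestamps
        speed = math.hypot(cur.x_deg - prev.x_deg, cur.y_deg - prev.y_deg) / dt_s
        if speed < velocity_threshold:
            if not group:
                group.append(prev)
            group.append(cur)
        else:
            close_group()
    close_group()
    return fixations


def summarize(fixations):
    """Fixation count, mean fixation duration, and shifts in focus.

    A shift in focus is approximated here as the move between two
    consecutive fixations; its size is the distance between their centroids.
    """
    shifts = [math.hypot(b.x_deg - a.x_deg, b.y_deg - a.y_deg)
              for a, b in zip(fixations, fixations[1:])]
    n = len(fixations)
    return {
        "fixation_count": n,
        "mean_fixation_ms": sum(f.duration_ms for f in fixations) / n if n else 0.0,
        "shifts_in_focus": len(shifts),
        "mean_shift_deg": sum(shifts) / len(shifts) if shifts else 0.0,
    }
```

Commercial trackers ship their own, more elaborate filters (noise reduction, gap filling, merging of nearby fixations), so this sketch is meant only to show the basic logic.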

Improving Product Design with Eye Tracking

User experience refers to an individual’s subjective experience when interacting with a product to achieve a goal or complete a task (Djamasbi & Strong, 2019). As such, a user’s experience may differ from what designers originally envisioned. Eye tracking, which allows us to observe how users attend to and process visual information, offers a valuable way to see a product from the user’s perspective and assess whether our design concepts effectively achieve their intended goals (Djamasbi, 2014).

To demonstrate the value of eye tracking in product design, I will discuss a case study in which eye tracking was used to enhance UX research at various stages of developing a complex, web-based medical decision aid (DA).

Developing the Neuro ICU Decision Aid

Adapted from a paper-based version, a neuro ICU digital DA was designed for short-term use to help surrogate decision-makers prepare for a critical clinician-family meeting. In these meetings, the surrogate decision-makers would decide whether to continue life-sustaining interventions for their non-responsive loved ones with severe brain injuries (Barry & Edgman-Levitan, 2012; Muehlschlegel et al., 2020; Muehlschlegel et al., 2022). The DA provided information on two major treatment options for patients with severe brain injuries who are kept alive artificially in neurological intensive care units (neuro ICUs). One option outlined invasive medical interventions necessary to sustain the patient’s life, including survival probabilities with various levels of disability. The other focused on palliative care, aiming to minimize suffering while allowing the patient to live out the remainder of their natural life. Because the DA was designed to assist individuals—often without medical knowledge—in making informed decisions about their loved one’s treatment, a key goal of the UX research was to enhance user engagement with the DA.

To enhance engagement with the DA, it was crucial to guide users in reviewing its complex medical information in a way that maximized comprehension. Achieving this goal required a strategically designed navigation system. Two prototypes were developed, each containing the same content but using different navigation styles: one with a top navigation bar and the other with a left navigation bar. These navigation bars provided users with a clear overview of the structure of the content collection (Djamasbi et al., 2019).

Fourteen participants, each randomly assigned to one of the two prototypes, were recruited to review the DA while their eye movements were tracked. Afterward, each participant completed a survey to assess their perceived engagement with the DA, followed by a brief interview in which they provided feedback on their experience. While self-reported measures showed slightly higher engagement with the left navigation bar, the differences between the two prototypes were not statistically significant. Similarly, an analysis of the feedback from the exit interviews revealed no major differences in engagement between the two prototypes.
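The article does not state which statistical tests were used. For small between-subjects samples like this one, an exact permutation test is one assumption-light way to ask whether a difference in group means (for example, in survey engagement scores) is larger than random assignment alone would produce. The sketch below is a generic illustration with hypothetical inputs, not a reconstruction of the study’s analysis.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_test(group_a, group_b):
    """Two-sided exact permutation test for a difference in means.

    Enumerates every way to relabel the pooled observations into groups of
    the original sizes and returns (observed |difference|, p-value), where the
    p-value is the share of relabelings at least as extreme as the observed one.
    Exhaustive enumeration is practical only for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n, n_a = len(pooled), len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = total = 0
    for chosen in combinations(range(n), n_a):
        chosen_set = set(chosen)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(n) if i not in chosen_set]
        # Small tolerance so float rounding does not drop ties with the observed split.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return observed, extreme / total
```

For example, two hypothetical groups of seven would give C(14, 7) = 3,432 relabelings, which is small enough to enumerate exhaustively.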

However, the analysis of eye movement data revealed a different story, showing that the prototype with the left navigation bar was significantly more effective in engaging users with the DA. For instance, when comparing attention to the two navigation bars, the left navigation bar attracted significantly more fixations. Additionally, the dispersion of fixation patterns indicated that, as participants progressed through the content, those using the left navigation bar showed a more organized approach to navigating the links compared to those using the top navigation bar. Specifically, participants using the left navigation bar focused their fixations on links to upcoming content, rather than revisiting already-viewed material. In contrast, participants using the top navigation bar had more scattered fixations across various topics (Norouzi Nia et al., 2021). These differences in viewing patterns on the two navigation bars indicated that the left navigation bar was more successful in providing a clear overview of the content structure.
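Comparisons like these rest on mapping each fixation to an area of interest (AOI), such as an individual link in a navigation bar. The sketch below is a hypothetical illustration. It assumes rectangular AOIs in screen pixels, navigation links listed in the same order as the pages they open, and participants reading pages in that order. Under those assumptions, a fixation on a link later than the current page counts as looking ahead, and any other link fixation counts as attention to current or already-viewed content. The published analysis (Norouzi Nia et al., 2021) may define these measures differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AOI:
    """Rectangular area of interest in screen pixels (origin at top-left)."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def hit_index(aois, x, y):
    """Index of the first AOI containing (x, y), or None if none does."""
    for i, aoi in enumerate(aois):
        if aoi.contains(x, y):
            return i
    return None


def nav_link_profile(fixations, nav_links, current_page):
    """Count fixations on navigation links, split by where each link points.

    fixations: iterable of (x, y) fixation centroids in pixels.
    nav_links: AOIs ordered like the pages they link to (hypothetical layout).
    current_page: 0-based index of the page being read.
    """
    counts = {"nav_total": 0, "upcoming": 0, "current_or_viewed": 0}
    for x, y in fixations:
        i = hit_index(nav_links, x, y)
        if i is None:
            continue  # fixation fell outside the navigation bar
        counts["nav_total"] += 1
        key = "upcoming" if i > current_page else "current_or_viewed"
        counts[key] += 1
    return counts
```

Computing these counts per page and per participant yields the inputs for a between-group comparison of the two navigation designs.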

Furthermore, participants reviewing the prototype with the left navigation design showed significantly higher engagement with the DA content, as evidenced by significantly longer fixations on pages (Sankar et al., 2025). Variations in pupil size, used as a measure of changes in information processing strategies (Fehrenbacher & Djamasbi, 2017), were also significantly lower for participants using the left navigation bar, indicating deeper involvement with the content (Sankar et al., 2025). These results collectively show that eye tracking revealed significant differences in user engagement with the DA that traditional methods could not detect.
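The article does not say how variation in pupil size was quantified. A simple, assumed option is the standard deviation of each participant’s valid pupil-diameter samples. This can optionally be divided by the mean (the coefficient of variation) to reduce differences in resting pupil size between people. The sketch treats missing values and implausibly small readings, which many trackers produce during blinks, as invalid; the 1 mm cutoff is an illustrative assumption.

```python
import math
from statistics import mean, stdev


def pupil_variability(diameters_mm, min_valid_mm=1.0):
    """Summarize variation in pupil diameter for one participant.

    Samples that are NaN or below min_valid_mm (assumed blink/dropout
    artifacts) are discarded before computing statistics. Returns the sample
    standard deviation (mm) and the coefficient of variation (sd / mean).
    """
    valid = [d for d in diameters_mm if not math.isnan(d) and d >= min_valid_mm]
    if len(valid) < 2:
        raise ValueError("need at least two valid pupil samples")
    sd = stdev(valid)
    return {"sd_mm": sd, "cv": sd / mean(valid)}
```

Because the pupil also reacts strongly to changes in luminance, comparisons like this are only meaningful when lighting and page brightness are kept comparable across conditions.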

The next stage of development focused on designing the content to improve engagement with the DA. Because important information is typically conveyed through written text, the DA relied on text-based communication to present complex medical details across 18 pages. Making life-or-death decisions for a loved one is both cognitively complex and emotionally taxing, placing a heavy burden on an individual’s cognitive resources. When cognitive resources are exhausted, information processing becomes more challenging. Given the volume of content in the DA and the emotionally taxing nature of the information it provided, it was essential to minimize the effort required to review it.

To achieve this design goal, the DA underwent four iterative test-and-revise cycles. The first two iterations focused on improving the flow and visual design of information to make the DA easier to follow. Seven clinicians, experienced in explaining treatment options to patients and their families, reviewed the DA and provided suggestions for improvement. Their feedback helped shape how the information was chunked and organized, making it more accessible and easier for surrogate decision-makers to understand. Additionally, five surrogate decision-makers, who had previously made the difficult choice of selecting a treatment option for their loved ones, were interviewed while reviewing the DA. This process allowed us to understand how they perceived the content, what questions arose during their review, and whether they were able to find the answers they needed. The feedback gathered was used to refine the DA’s visual design, making it more intuitive and easier to process.

After revising the DA based on feedback from the first two iterations, eye tracking was utilized in the third iteration to gain deeper insights into how surrogate decision-makers processed the updated DA. Three family members of neuro ICU patients were invited to review the DA while their eye movements were tracked. Following the review, participants were shown a video of their eye movements, which was intended to encourage them to discuss their thoughts and feelings. This technique, known as retrospective think-aloud, is a powerful method for gathering valuable insights to improve products.

The retrospective think-aloud sessions in the third iteration highlighted the need to further reduce the cognitive effort required to review the DA to improve engagement. To address this, the text-heavy DA was redesigned with clearer visual hierarchies to guide the viewers’ attention in a way that ensured the most important content stood out or was noticed first. This was achieved through the strategic use of various design techniques such as font size, weight, color, contrast, and spacing to emphasize critical information.

The information gathered during the retrospective think-aloud sessions provided another important insight for improving engagement with the DA. Although participants acknowledged that the DA delivered critical information, they felt it lacked human warmth and empathy. Feedback from participants indicated that adding warmth and empathy would make the DA more engaging by creating a welcoming experience and enhancing trust in its use, ultimately improving its usability (Zhang et al., 2023). In response, a set of videos was designed and produced to accompany the DA’s textual content. These videos featured a neuro ICU doctor explaining the treatment options outlined in the DA, as well as several surrogate decision-makers sharing their experiences in choosing treatment options on behalf of their loved ones.

The revised visual hierarchy of the textual content, along with the addition of videos, was tested using eye tracking in the fourth iteration. Four new family members of neuro ICU patients were invited to review the DA. As in the third iteration, participants’ eye movements were recorded while they reviewed the DA, and qualitative feedback about their experience was collected during retrospective think-aloud sessions.

The analysis of participants’ eye movement patterns revealed that the DA’s revised visual design was processed more thoroughly compared to the third iteration. Verbal feedback from the retrospective think-aloud sessions indicated that using the DA was more satisfying than before, suggesting that the inclusion of videos successfully created a more welcoming experience. Together, these results demonstrated that the revisions were effective in enhancing user engagement with the DA.

Creating AI-Powered Bio-Responsive Solutions with Eye Tracking

Creating bio-responsive solutions through eye tracking requires a comprehensive UX research program that focuses on addressing a fundamental question: Can eye movements reliably serve as biomarkers for user experience? A crucial first step to answer this question is to explore the extent to which eye movements can provide deeper insights into existing behavioral theories relevant to user-centered design. This exploration typically requires developing new investigative methods that not only advance current theories but also create a foundation for proposing new ones (Djamasbi & Strong, 2019). The expansion of theoretical frameworks for product innovation then contributes to practice by creating more opportunities for exploring value creation through bio-responsive solutions in the UXDI design space (Figure 2).

To date, eye-tracking UX research aimed at this goal has shown promising results. In this section, I discuss two examples of such research that highlight the progress and impact of this approach in developing breakthrough AI-powered bio-responsive solutions.

Developing a Bio-Responsive Solution for Cognitive Strain

The first example explores UX research aimed at determining whether eye movements can serve as a reliable biomarker for cognitive load in decision-making contexts. This knowledge is crucial for developing bio-responsive solutions to enhance decision quality and performance.

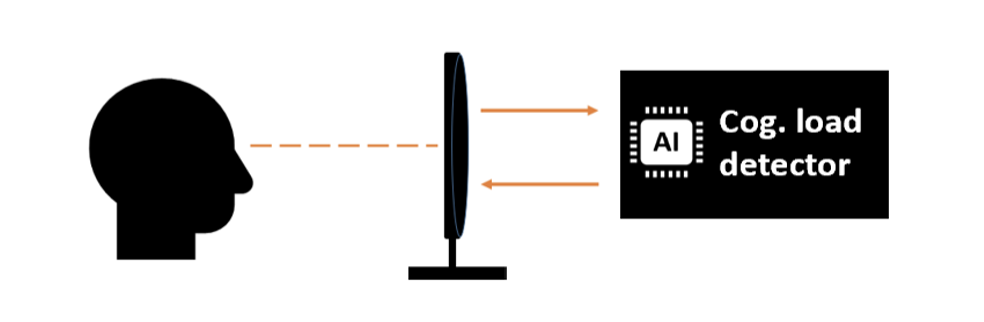

Judgment and decision-making theories suggest that high cognitive load impairs our ability to process information efficiently. Because eye trackers can capture our information-processing behavior, UX research has focused on quantifying eye movement under varying levels of cognitive load (Shojaeizadeh & Djamasbi, 2018; Shojaeizadeh et al., 2017a; Shojaeizadeh et al., 2017b; Shojaeizadeh et al., 2019; Fehrenbacher & Djamasbi, 2017; Sankar et al., 2025). The results show that high cognitive load affects visual information processing behavior, and that gaze- and pupillary-based eye movement metrics can indicate when mental resources are depleted due to high cognitive loads. These findings not only validate judgment and decision-making theories at a deeper cognitive level but also extend them by introducing new methods for detecting cognitive load through real-time eye movement monitoring. This real-time detection creates new opportunities for developing AI-driven, bio-responsive solutions that can enhance decision-making performance in information-rich environments.
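Real-time detection implies processing the gaze stream as it arrives rather than after a session ends. The cited studies use their own metrics and models, which are not reproduced here. As a deliberately simple stand-in, the sketch below flags possible high load when the mean pupil diameter over a sliding window rises a fixed proportion above a per-user resting baseline. This reflects the general finding that pupils dilate under higher cognitive load. The class name, the window length (60 samples), and the 10% margin are hypothetical choices made for illustration.

```python
from collections import deque
from statistics import mean


class PupilLoadMonitor:
    """Hypothetical streaming heuristic for elevated cognitive load.

    Flags possible high load when the mean pupil diameter over the most
    recent `window` samples exceeds a per-user resting baseline by more
    than `margin` (a relative fraction). Parameters are illustrative.
    """

    def __init__(self, baseline_mm: float, window: int = 60, margin: float = 0.10):
        if baseline_mm <= 0:
            raise ValueError("baseline must be positive")
        self.baseline_mm = baseline_mm
        self.margin = margin
        self.samples = deque(maxlen=window)

    def update(self, diameter_mm: float) -> bool:
        """Add one valid pupil sample; return True if load looks elevated."""
        self.samples.append(diameter_mm)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough data yet for a stable estimate
        relative_change = (mean(self.samples) - self.baseline_mm) / self.baseline_mm
        return relative_change > self.margin
```

A deployed system would more likely combine several gaze and pupil features in a trained classifier and correct for luminance, but its streaming structure (update per sample, decide per window) would likely look similar.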

According to judgment and decision-making theories, decision-making in information-rich environments is essentially a resource allocation task. When we effectively manage our mental resources to process relevant information, we tend to make better decisions. However, when our mental resources are overwhelmed, our ability to process information—and, consequently, the quality of our decisions—suffers.

Figure 4: AI-powered bio-responsive decision support tool.

This conceptualization of decision-making behavior suggests that one of the most effective ways to enhance performance when cognitive capabilities are compromised is by helping individuals manage their mental resources more efficiently. A bio-responsive tool, capable of detecting high cognitive load through eye movements, can mitigate the negative effects of information overload on decision quality by guiding users to focus their cognitive efforts on the most crucial aspects of the task at hand (Shojaeizadeh et al., 2023).

For example, imagine your team is facing a challenging situation, and you are responsible for making a critical decision within the next few hours. To make an informed choice, you need to evaluate a large volume of information, weighing various factors across several possible scenarios. However, your demanding schedule has left you mentally fatigued, making it harder to process the data effectively. In this case, a bio-responsive tool could help you conserve cognitive resources by suggesting that you concentrate on two or three of the most crucial attributes for your decision. Real-time eye-tracking data would enable a smart system to determine whether you have been focusing on the critical information, and if not, it could draw your attention to key data points, helping you prioritize what matters most.
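The decision logic in this scenario can be sketched in a few lines. The function below is hypothetical. It assumes fixation time has already been summed per decision attribute for the current time window, that the two or three critical attributes are known in advance, and that a separate detector reports whether load is elevated. The 50% share and 500 ms dwell thresholds are illustrative and are not taken from Shojaeizadeh et al. (2023).

```python
def attention_nudge(dwell_ms, critical, high_load, min_share=0.5, min_dwell_ms=500):
    """Decide which critical attributes a hypothetical bio-responsive tool
    should highlight.

    dwell_ms: mapping from attribute name to total fixation time (ms) in the
              current time window.
    critical: names of the two or three attributes judged most important.
    high_load: output of a cognitive-load detector for the same window.
    Returns a sorted list of critical attributes to highlight (empty = no nudge).
    """
    if not high_load:
        return []  # user is coping; do not interrupt
    total = sum(dwell_ms.values())
    on_critical = sum(dwell_ms.get(name, 0) for name in critical)
    share = on_critical / total if total else 0.0
    if share >= min_share:
        return []  # attention is already concentrated on what matters
    # Point only to critical attributes that received little attention.
    return sorted(name for name in critical if dwell_ms.get(name, 0) < min_dwell_ms)
```

Returning an empty list when load is normal keeps the tool silent unless both conditions hold, which limits unnecessary interruptions.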

The ability to detect and respond to cognitive load in real time using eye movements represents a breakthrough innovation in the UXDI design space (Figure 2). Situational factors such as increased workload, stress, fatigue, or insufficient sleep can significantly impair information processing, even for seasoned decision-makers. The real-time detection of cognitive load enables smart bio-responsive tools to adjust to users’ information-processing needs. They help decision-makers consistently deliver high-quality decisions, regardless of whether they are facing situational challenges that could negatively affect their cognitive resources. The applicability of smart bio-responsive systems extends beyond organizational settings. They can also assist in personal decision-making, such as making financial choices (trading stocks or developing retirement portfolios) and managing health and wellness (setting fitness or weight management goals).

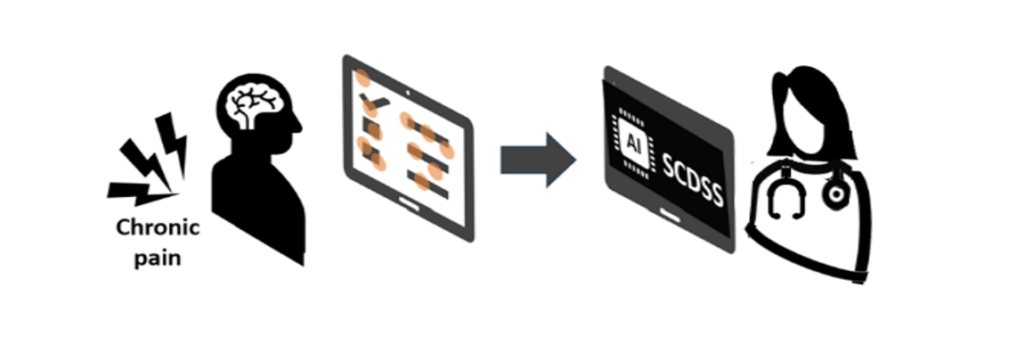

Developing a Smart Clinical Decision Support System for Chronic Pain Treatment

The second example of UX research aimed at breakthrough AI-powered, bio-responsive, user-centered innovations focuses on establishing eye movements as biomarkers of the chronic pain experience. The theoretical foundation for this line of UX research is grounded in pain literature, which suggests that individuals with chronic pain attend to pain-related information differently than those without chronic pain. While studying the impact of pain on attention has a long history, using eye-tracking technology to examine this phenomenon is relatively new, and the results have been mixed (Chan et al., 2020). Pain studies often compare how individuals with and without chronic pain respond to pain-related stimuli by examining how they visually process simple pairs of neutral and pain-related stimuli, such as the words “house” and “aching,” during brief exposure times (for example, 500 ms). UX studies suggest that the mixed results in eye-tracking pain research may stem from visual stimuli not being perceived as relevant to an individual’s pain experience, making the stimuli insufficiently engaging to trigger the disruptive effect of pain on attention. Additionally, short exposure times may not offer enough opportunity to capture the attention nuances that could reveal expected differences in viewing behavior (Alrefaei et al., 2023).

Figure 5: AI-powered bio-responsive clinical decision support system for chronic pain.

Building on this argument, UX studies have proposed a new stimuli-task paradigm to address the limitations of current pain research methods. This approach aims to resolve the issue of stimuli not being perceived as relevant to one’s pain experience by presenting participants with stimuli that encourage them to reflect on their own pain. To overcome the exposure time problem, the stimuli are presented to participants without any time limit (Alrefaei et al., 2023; Alrefaei et al., 2022). The visual stimuli in these UX studies comprise a set of pain-related questionnaires that ask participants to assess how pain interferes with daily activities, such as household chores, work around the home, or social participation.

The findings from these studies show significant differences in how individuals with and without chronic pain process visual information in the questionnaires. For example, people with chronic pain process information more efficiently than those without pain when reading the questions. They spend significantly less time reviewing the questions, using significantly shorter fixations and significantly fewer shifts in focus. However, when selecting an answer, this behavior changes. People with chronic pain take significantly longer to review the options, using significantly longer fixations and significantly more frequent shifts in focus compared to their pain-free counterparts.
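To compare reading the question with selecting an answer, each fixation first needs to be assigned to one of those two phases. The following minimal sketch assumes that fixations have already been mapped to a "question" or "options" area of interest and are listed in viewing order. Here a shift in focus is simplified to a move between two consecutive fixations within the same phase, which may differ from the definitions used by Alrefaei et al.

```python
from statistics import mean


def phase_metrics(fixations):
    """Per-phase viewing metrics for one questionnaire item.

    fixations: ordered list of (aoi, duration_ms) pairs, where aoi is
               "question", "options", or another label (ignored).
    Returns total viewing time, fixation count, mean fixation duration, and
    shifts in focus (consecutive fixation pairs within the same phase).
    """
    labels = [aoi for aoi, _ in fixations]
    result = {}
    for phase in ("question", "options"):
        durations = [d for aoi, d in fixations if aoi == phase]
        result[phase] = {
            "total_ms": sum(durations),
            "fixation_count": len(durations),
            "mean_fixation_ms": mean(durations) if durations else 0.0,
            "shifts": sum(1 for a, b in zip(labels, labels[1:]) if a == b == phase),
        }
    return result
```

Aggregating these per-item values per participant allows the two phases to be compared between people with and without chronic pain.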

Furthermore, the patterns of fixation and saccades while reviewing and responding to pain-related surveys can reliably predict how an individual would report the intensity of their pain over the past week. In other words, an individual’s eye movement patterns while answering questions about how often pain interferes with their daily activities can accurately predict their pain intensity rating on an 11-point Likert scale, a commonly used pain assessment tool in clinical settings. Motivated by these findings, UX studies are now exploring whether other visual tasks, such as reading a short pain-related text passage, yield similar results (Djamasbi et al., 2024).
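The prediction model behind these results is not described in this article. To make the idea concrete, the sketch below fits an ordinary least-squares linear regression, a generic stand-in rather than the studies’ method. It maps per-participant eye movement features to a pain intensity rating assumed to run from 0 to 10, then clips predictions to that scale. The features (for example, mean fixation duration and number of shifts in focus while answering) are hypothetical choices. The code is pure Python for self-containment; in practice a statistics library and cross-validation on new participants would typically be used.

```python
def fit_linear(X, y):
    """Ordinary least squares with an intercept.

    Solves the normal equations (A^T A) w = A^T y, where A is X with a leading
    column of ones, by Gauss-Jordan elimination with partial pivoting.
    Returns [intercept, coef_1, ..., coef_k].
    """
    A = [[1.0] + [float(v) for v in row] for row in X]
    k = len(A[0])
    # Augmented system [A^T A | A^T y].
    M = [[sum(r[i] * r[j] for r in A) for j in range(k)]
         + [sum(r[i] * t for r, t in zip(A, y))]
         for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(M[row][col]))
        if abs(M[pivot][col]) < 1e-12:
            raise ValueError("features are collinear or there are too few samples")
        M[col], M[pivot] = M[pivot], M[col]
        for row in range(k):
            if row != col:
                factor = M[row][col] / M[col][col]
                M[row] = [a - factor * b for a, b in zip(M[row], M[col])]
    return [M[i][k] / M[i][i] for i in range(k)]


def predict_pain(weights, features):
    """Linear prediction clipped to an assumed 0-10 rating scale."""
    raw = weights[0] + sum(w * f for w, f in zip(weights[1:], features))
    return min(10.0, max(0.0, raw))
```

Clipping keeps predictions on the rating scale clinicians already use, so model output and self-reports can be compared directly.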

These studies validate and extend pain theories by providing a robust methodology for capturing attentional bias through eye movements. In doing so, they contribute to practice by highlighting the potential for developing bio-responsive AI tools that can reliably assess chronic pain. The treatment of chronic pain begins with its assessment, which currently relies primarily on patients’ self-reports. While these reports are essential for understanding a patient’s pain experience from their perspective, they offer a limited view of the pain experience. Self-reported ratings require individuals to summarize their chronic pain experience—a dynamic and complex phenomenon comprising sensory, emotional, cognitive, and social components—by selecting a number from a limited set on a Likert scale. This simplification can result in considerable variability and inconsistencies in self-reports (Alrefaei et al., 2024) as it fails to capture the complexity and dynamic nature of the pain experience.

Eye movement patterns can provide a more comprehensive understanding of a person’s pain experience, helping clinicians develop early and targeted treatments that can prevent or minimize potential negative health outcomes (Alrefaei et al., 2024). For instance, chronic pain can trigger two very different reactions: some individuals may become hyper-focused on their pain and others may avoid thinking about it altogether. Both reactions can lead to significant negative health outcomes if left unaddressed. These behavioral patterns can be detected through sustained visual attention to pain-related stimuli (Alrefaei et al., 2023). By identifying these behavioral patterns through monitoring eye movements over time, specialized AI-powered bio-responsive tools can notify clinicians of emerging issues, enabling early intervention and the prevention of further health complications. Advancements in UX research—particularly tracking chronic pain through eye movements over time—offer a new approach to precision medicine in chronic pain management. This approach tailors treatments based on the real-time detection of a patient’s psychological and physiological states, using objective eye movement data to optimize care.
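One way such monitoring could work is to summarize each session as the share of fixation time spent on pain-related content and to watch for sustained departures from a reference range. The sketch below is purely illustrative: the bias index, the 0.35 and 0.65 cutoffs, and the three-session rule are hypothetical choices, not validated clinical thresholds.

```python
def attention_bias_index(pain_dwell_ms: float, neutral_dwell_ms: float) -> float:
    """Share of fixation time on pain-related content (0.5 = balanced)."""
    total = pain_dwell_ms + neutral_dwell_ms
    if total <= 0:
        raise ValueError("no fixation time recorded on either content type")
    return pain_dwell_ms / total


def flag_trend(session_indices, low=0.35, high=0.65, consecutive=3):
    """Flag a sustained pattern across the most recent sessions.

    Returns "possible hypervigilance" if the last `consecutive` indices are all
    above `high`, "possible avoidance" if they are all below `low`, else None.
    """
    recent = session_indices[-consecutive:]
    if len(recent) < consecutive:
        return None  # not enough sessions yet to call a trend
    if all(v > high for v in recent):
        return "possible hypervigilance"
    if all(v < low for v in recent):
        return "possible avoidance"
    return None
```

Requiring several consecutive sessions before flagging is one simple way to separate a sustained pattern from a single unusual session.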

Advancing Product Innovation Through UX Eye-Tracking Research and Education

Advancements in sensor-based technologies, such as eye tracking, have a significant impact on UX research for product innovation. These technologies make it possible to capture product experiences directly from the user’s perspective in real time. This not only enables usability testing and the generation of actionable design insights for improvement but also opens a new line of UX research focused on developing innovative, user-centered solutions that leverage eye movements as biomarkers of user experience.

Effective integration of eye-tracking research with industry innovation practices to create value in user experience design requires carefully crafted educational and training programs that develop a competitive workforce capable of shaping the future of user-centered innovations. This workforce will be capable not only of using eye tracking for usability testing but also of conducting eye-tracking research to develop AI-powered, bio-responsive tools that respond to user needs in real time.

Such programs must emphasize the rigor of creating scientific knowledge as well as the business skills needed to translate that knowledge into viable, innovative, user-centered solutions. Like any organizational activity, UX research for product innovation comes with a cost that must be justified by its value. Unlike academic research, which derives its value from contributing to knowledge, UX research for product innovation derives its value from contributing to practice and presenting its product innovation ideas in a way that makes business sense (Djamasbi & Strong, 2019). As such, business schools could play a key role in developing these educational programs for UX research in product innovation.

Collaboration between academia and industry is essential for offering workforce training programs. While universities are ideal platforms for providing targeted UX education and training programs for product innovation, establishing new niche programs in universities requires strong industry support to champion and advocate for their importance.

One way to gain industry support for establishing educational and training programs at universities is by raising awareness of the potential of eye-tracking UX research and its value in product innovation. Despite the substantial body of academic work on UX eye-tracking research, many academic articles remain confined to academia and fail to resonate with industry practitioners. This may be because academic research typically focuses on contributing to knowledge, often understating its practical implications for product innovation. Translating academic knowledge into practice—such as publishing the practical implications of academic research in outlets favored by practitioners—could help raise industry awareness of the potential of UX eye-tracking research in product innovation.

Conclusion

The rapidly advancing field of eye-tracking technology presents a transformative opportunity for UX research and product innovation. By establishing specialized educational programs that blend scientific rigor with industry innovation practices, we can equip the next generation of UX professionals to harness the full potential of this technology. Collaboration between academia and industry will be essential to connect traditional scientific eye-tracking research with the creation of value through innovative user experiences. Such collaboration, in turn, helps ensure that eye-tracking research not only contributes to UX knowledge but also drives innovation by delivering tangible value for businesses and users alike.

References

Alrefaei, D., Djamasbi, S., & Strong, D. (2023). Chronic pain and eye movements: A NeuroIS approach to designing smart clinical decision support systems. AIS Transactions on Human-Computer Interaction, 15(3), 268–291. https://doi.org/10.17705/1thci.00191

Alrefaei, D., Djamasbi, S., & Strong, D. (2024). UX approach to designing a clinical decision support system for pain. Proceedings of the Sixth New England Chapter of AIS (NEAIS) Conference. Worcester, Massachusetts.

Alrefaei, D., Sankar, G., Norouzi Nia, J., Djamasbi, S., & Strong, D. (2022). Examining the impact of chronic pain on information processing behavior: An exploratory eye-tracking study. In D. D. Schmorrow, & C. M. Fidopiastis (Eds.), Lecture notes in computer science: Vol. 13310. Augmented cognition (pp. 3–19). HCII 2022. Springer, Cham. https://doi.org/10.1007/978-3-031-05457-0_1

Barry, M. J., & Edgman-Levitan, S. (2012). Shared decision making—The pinnacle of patient-centered care. New England Journal of Medicine, 366(9), 780–781. https://doi.org/10.1056/NEJMp1109283

Chan, F. H., Suen, H., Jackson, T., Vlaeyen, J. W., & Barry, T. J. (2020). Pain-related attentional processes: A systematic review of eye-tracking research. Clinical Psychology Review, 80, 1–20. https://doi.org/10.1016/j.cpr.2020.101866

Djamasbi, S. (2014). Eye tracking and web experience. AIS Transactions on Human-Computer Interaction, 6(2), 37–54.

Djamasbi, S., Norouzi Nia, J., Alrefaei, D., Strong, D., & Paffenroth, R. (2024). System for detecting health experience from eye movement. US Patent App. 18/584,731. https://patents.google.com/patent/US20240324922A1/en

Djamasbi, S., & Strong, D. (2019). User Experience-driven innovation in smart and connected worlds. AIS Transactions on Human-Computer Interaction, 11(4), 215–231. https://doi.org/10.17705/1thci.00121

Djamasbi, S., Tulu, B., Norouzi Nia, J., Aberdale, A., Lee, C., & Muehlschlegel, S. (2019). Using eye tracking to assess the navigation efficacy of a medical proxy decision tool. In D. D. Schmorrow, & C. M. Fidopiastis (Eds.), Lecture notes in computer science: Vol. 11580. Augmented cognition (pp. 143–152). HCII 2019. Springer, Cham. https://doi.org/10.1007/978-3-030-22419-6_11

Duchowski, A. (2007). Eye tracking methodology: Theory and practice. Springer, Berlin Heidelberg.

Fehrenbacher, D., & Djamasbi, S. (2017). Information systems and task demand: An exploratory pupillometry study of computerized decision making. Decision Support Systems, 97, 1–11.

Jain, P., & Djamasbi, S. (2019). Transforming User Experience of nutrition facts label – An exploratory service innovation study. In F. H. Nah, & K. Siau (Eds.), Lecture notes in computer science, Vol. 11588. HCI in business, government and organizations. eCommerce and Consumer Behavior (pp. 225–237). HCII 2019. Springer, Cham.

Jain, P., Djamasbi, S., & Hall-Phillips, A. (2020). The impact of feedback design on cognitive effort, usability, and technology use. AMCIS 2020 Proceedings, 19. https://aisel.aisnet.org/amcis2020/sig_hci/sig_hci/19

Just, M. A., & Carpenter, P. A. (1980). A theory of reading: From eye fixations to comprehension. Psychological Review, 87(4), 329–354.

Muehlschlegel, S., Goostrey, K., Flahive, J., Zhang, Q., Pach, J. J., & Hwang, D. Y. (2022). Pilot randomized clinical trial of a goals-of-care decision aid for surrogates of patients with severe acute brain injury. Neurology, 99(14), e1446. https://doi.org/10.1212/WNL.0000000000200937

Muehlschlegel, S., Hwang, D. Y., Flahive, J., Quinn, T., Lee, C., Moskowitz, J., Goostrey, K., Jones, K., Pach, J. J., Knies, A. K., Shutter, L., Goldberg, R., & Mazor, K. M. (2020). Goals-of-care decision aid for critically ill patients with TBI. Neurology, 95(2), e179. https://doi.org/10.1212/WNL.0000000000009770

Norouzi Nia, J., Varzgani, F., Djamasbi, S., Tulu, B., Lee, C., & Muehlschlegel, S. (2021). Visual hierarchy and communication effectiveness in medical decision tools for surrogate-decision-makers of critically ill traumatic brain injury patients. In D. D. Schmorrow, & C. M. Fidopiastis (Eds.), Lecture notes in computer science, Vol. 12776. Augmented cognition (pp. 210–220). HCII 2021. Springer, Cham. https://doi.org/10.1007/978-3-030-78114-9_15

Persons, B., Jain, P., Chagnon, C., & Djamasbi, S. (2021). Designing the Empathetic Research IoT Network (ERIN) chatbot for mental health resources. In F. F.-H. Nah, & K. Siau (Eds.), Lecture notes in computer science, Vol. 12783. HCI in business, government and organizations (pp. 619–629). HCII 2021. Springer, Cham. https://doi.org/10.1007/978-3-030-77750-0_41

Sankar, G. (2025). A NeuroIS-based UX measurement model of cognitive engagement [Manuscript in preparation]. Worcester Polytechnic Institute.

Sankar, G., Djamasbi, S., Li, Z., Xiao, J., & Buchler, N. (2023). Systematic literature review on the user evaluation of teleoperation interfaces for professional service robots. In F. Nah, & K. Siau (Eds.), Lecture notes in computer science, Vol. 14039. HCI in business, government and organizations (pp. 66–85). HCII 2023. Springer, Cham. https://doi.org/10.1007/978-3-031-36049-7_6

Sankar, G., Djamasbi, S., Tulu, B., & Muehlschlegel, S. (2025). Measuring cognitive engagement with eye-tracking: An exploratory study, applications of augmented cognition. In Lecture notes in artificial intelligence (LNAI 15778), Vol. 13. HCII 2025. Springer, Cham.

Shojaeizadeh, M., & Djamasbi, S. (2018). Eye movements and reading behavior of younger and older users: An exploratory eye-tracking study. In J. Zhou, & G. Salvendy (Eds.), Lecture notes in computer science, Vol. 10926. Human aspects of IT for the aged population. Acceptance, communication and participation (pp. 377–391). ITAP 2018. Springer, Cham.

Shojaeizadeh, M., Djamasbi, S., Chen, P., & Rochford, J. (2017a). Task condition and pupillometry. Americas Conference on Information Systems (AMCIS), Boston, MA.

Shojaeizadeh, M., Djamasbi, S., Cheng, P., & Rochford, J. (2017b). Text simplification and pupillometry: An exploratory study, international conference on universal access in Human-Computer Interaction. In D. D. Schmorrow, & C. M. Fidopiastis (Eds.), Lecture notes in computer science: Vol. 10285. Augmented cognition, enhancing cognition and behavior in complex human environments. Springer International Publishing.

Shojaeizadeh, M., Djamasbi, S., Paffenroth, R., & Trapp, A. (2019). Detecting task demand via an eye tracking machine learning system. Decision Support Systems, 116, 91–101. https://doi.org/10.1016/j.dss.2018.10.012

Shojaeizadeh, M., Djamasbi, S., Paffenroth, R., & Trapp, A. (2023). Eye-tracking system for detection of cognitive load. US Patent 11,666,258 B2. https://patents.google.com/patent/US11666258B2/en

Solso, R. L. (1996). Cognition and the visual arts. MIT Press.

UserZoom. (2022). The state of UX 2022: Bridging the gap between UX insights and business impact. The UX Insights System. https://www.usertesting.com/sites/default/files/2023-08/The%20State%20of%20UX%202022.pdf

Zhang, L., Tulu, B., Djamasbi, S., Sankar, G., & Muehlschlegel, S. (2023). Investigating user satisfaction: An adaptation of IS success model for short-term use. Hawaii International Conference on System Sciences 2023 (HICSS-56), 6. https://aisel.aisnet.org/hicss-56/hc/process/6