
Abstract

There is increasing global interest in developing inclusive technologies that contribute to the social welfare of people in emerging economies. Egypt, a country facing socio-economic challenges, has been investing in a growing ICT infrastructure and in human capital. The current ICT workforce is technically oriented owing to the growing interest in global outsourcing. However, there is a need for a Computer Science (CS) workforce that is willing to engage with bottom-of-the-pyramid users as a potential market. CS students should develop interdisciplinary skills that enable them to empathize with users who have diverse backgrounds, perceptions, and levels of digital literacy. Human-Computer Interaction (HCI) education programs could provide the skills local CS students need to fill this workforce gap. As in many Arab and African countries, Egyptian higher education programs and industry have been slow to recognize the value of HCI.

In this article, I address how introducing HCI education to students in an Egyptian engineering institute can benefit industry. I discuss a series of action research cycles focusing on the learning design of a student-centered introductory HCI short course that successfully sensitized students to the role of end-users in technology design. The learning design was planned to circumvent the challenging fact that HCI was underrated by CS students. Formative assessment results and my reflections, as the instructor, are discussed as well.

Keywords

HCI Education, CS Education, Emerging Economies, Global South, Egypt, HCI4D

Introduction

Information and Communications Technology (ICT) is a cornerstone in addressing the world's complex and difficult challenges in order to achieve the sustainable development goals (Wu, Guo, Huang, Liu, & Xiang, 2018). Computer Science (CS) students will increasingly find themselves working on socially relevant problems in areas facing natural and economic disasters, in marginalized communities, and with vulnerable user groups (Fisher, Cameron, Clegg, & August, 2018; Patterson, 2005). Human-Computer Interaction (HCI) education has the potential to equip CS students with methods and techniques that help them design sensibly with and for diverse user groups, creating digital technologies that could improve these users' economic and social welfare. The global HCI community has been concerned with the future of HCI education in a technology landscape that is growing and changing rapidly. The idea of an HCI living curriculum was proposed to connect people and resources and to foster a culture of co-creation, co-development, and collaboration among the educators who maintain the curriculum resources (Churchill, Bowser, & Preece, 2016). As digital technologies cross international borders and increasingly shape the lives of people in emerging economies, it is essential to include in the living curriculum the perspectives of non-Western HCI educators and designers working in global contexts.

HCI education is challenged globally by the lack of interdisciplinary programs that provide rich training to students in a rapidly growing and changing field (Churchill et al., 2016). This challenge is more prominent in Arab and African countries, to which Egypt belongs geographically and culturally, and where HCI is still in its infancy. In both contexts, strong boundaries exist between scholars in the arts and humanities and those in science and engineering, leaving HCI courses rather homeless (Alabdulqader, Abokhodair, & Lazem, 2017; Lazem & Dray, 2018). Furthermore, educators' efforts to champion HCI are hampered by a number of challenges, including the lack of a localized HCI curriculum that addresses the particularities of their context, such as low literacy and limited access to technology. Educators also deal with overcrowded classrooms and a lack of adequate professional training opportunities, and they teach from educational resources that are not written in their native language and do not speak to the specificities of their non-Western cultures (Lazem & Dray, 2018).

Changing the status quo of HCI education at the grassroots level involves raising awareness of its relevance to society and building curricula for local HCI teachers (Stachowiak, 2013). In the past few years, two communities were created in African and Arab countries to serve these two goals by organizing events that strengthen local HCI research and practice; the events also address challenges pertinent to their cultures (Alabdulqader, Abokhodair, & Lazem, 2017; Bidwell, 2016). Changing local HCI education programs would further require a cultural change, brought about by creating solutions to the challenges educators face in their own settings, which goes beyond merely devising a curriculum based on recognized standards and assessments (Fullan, 2006). Adopting reflective practice is a key enabler for such a change, as it lets educators develop learning about best practices “in the context” (Fullan, 2006).

Egypt, a country facing socio-economic challenges, has been investing in a growing ICT infrastructure and in higher education programs; however, there is a need for an HCI workforce that is willing to engage with bottom-of-the-pyramid users as a potential market. Egyptian higher education programs and industry have been slow to recognize the value of HCI. Moreover, the current workforce is technically oriented owing to the growing interest in global outsourcing. Efforts to institutionalize HCI are still fragmented and limited to individual educators who champion HCI education at the grassroots level in their institutes. A cultural change is needed in current higher education programs so that they become multidisciplinary, value student participation and hands-on experience over textbook memorization, and foster a judgment-free environment that encourages creative expression.

This research reports the findings of an action research project conducted by the author to raise awareness of HCI among undergraduates taking computing courses and to develop her own teaching practice. Action research is a term coined by Kurt Lewin to describe “a small scale intervention in the functioning of the real world and a close examination of the effects of such an intervention” (Cohen & Manion, 1994, p. 186). It is a practical methodology concerned with solving problems and changing practices “within every day, natural contexts rather than within controlled settings” (Cousin, 2009, p. 150). While action research applies to a wide range of activities, there is a strong tradition of teachers using it to develop their classroom practice. Kurt Lewin's model of action research consists of a spiral of steps. For teaching professionals, the first step is identifying a general idea or concern held by the teacher. This is followed by a fact-finding step, in which the literature is surveyed to learn how others have addressed the issue and to help frame the research project; this can be supplemented by discussions with critical teaching colleagues who provide insights and advice. Fact-finding is followed by a planning step, which could include applying a new pedagogical approach or using a new technology. The plan is executed in the first action step, during which data such as students' feedback and the teacher-researcher's reflections on the planned approach are collected. In the evaluative step, the data are analyzed to assess the effectiveness of the implemented intervention. Findings from the evaluation can then be used to amend the plan and start another research cycle with a second action step, and so forth.

It is worth mentioning that design thinking is implicit in the historical roots of action research, as manifested in action research's pragmatic orientation toward creating desired social practices or systems (Romme, 2004). The outlined action research methodology parallels the design thinking stages (empathize, define, ideate, prototype, and test). The teacher's empathy with students motivates designing an intervention to change the status quo of classroom practice. The fact-finding step includes the activities necessary to define the problem. The planning step comprises the ideation and prototyping of a design solution or plan, which is then tested in the action step. Like design thinking, action research stresses the value of iteration and typically proceeds in repeated cycles that revise and improve the plan based on feedback from the evaluative step.

As an HCI instructor, I was concerned that the existing teaching practices in my institute downplay HCI courses, as these are considered less technical than other computing courses, a view that the students consequently adopted. I wanted to make a change that would encourage students to learn about HCI. Over four action research cycles, I have explored different learning designs for short, two-week introductory training courses to balance the technical content that appeals to the students with the depth of HCI knowledge necessary to give them a proper grasp of the subject. Despite being offered without credit, the courses have attracted steadily increasing interest from students.

This article extends work presented at the Association for Computing Machinery (ACM) 7th Annual Symposium on Computing for Development, held in Nairobi, Kenya (Lazem, 2016). The conference paper presented a project-based learning design in which the students appropriated and redesigned chosen technologies to suit the Egyptian context. The reflections presented in the paper indicated that the depth of the students' implementations of HCI methods varied because the flexible learning design allowed them to choose the extent to which end-users were involved in their redesign activities. This article extends the previous work by reporting a follow-up action research cycle in which I used a more systematic and structured learning design to teach HCI methods. This work contributes to the ongoing discussion about devising a living HCI curriculum by bringing in perspectives from global contexts where HCI is in its infancy (St-Cyr, MacDonald, Churchill, Preece, & Bowser, 2018).

Related Works

At the International HCI and UX Conference in Indonesia, Eunice Sari and Bimlesh Wadhwa (2015) presented a paper briefly discussing the differences between HCI education in developed and so-called developing countries in the Asia-Pacific region. In the so-called developing countries, HCI is still a supplementary course. HCI education there is less advanced than in developed countries because it does not explore new learning methods and is limited to teaching basic concepts. African HCI educators face further challenges, including overcrowded classrooms and discipline-restricted departmental requirements on content and grades that do not suit the practical nature of HCI education.

Furthermore, social norms have challenged efforts to involve and empower people from marginalized communities through participatory approaches. A cross-cultural study of students from the UK, India, Namibia, Mexico, and China showed that culture and cognitive attitudes affect the performance of HCI students in analytical tasks, such as heuristic evaluation, and in intuitive design tasks, such as creating personas (Abdelnour-Nocera, Clemmensen, & Guimaraes, 2017). This, in turn, suggests the need for a localized HCI curriculum that accounts for cultural sensitivities. As highlighted by Lazem and Dray (2018), localization is one of the challenges facing HCI education in Africa.

There are also challenges in training local Egyptian students in participatory approaches within an international team (Giglitto, Lazem, & Preston, 2018). Despite the use of a student-centered pedagogy, the students perceived themselves as having less agency than the other, international stakeholders, whose legacy suggested that they had held more power in the past. Moreover, some of the students failed to empathize with the local rural community because of the cultural distance between that community and the students' urban backgrounds.

The reluctance of CS students to enroll in HCI courses is not a developing-world phenomenon but rather a challenge that Western HCI educators also had to overcome at some point, by advocating the relevance and importance of the topic to their departments. Roberto Polillo (2009) refers to this as the double front faced by educators, who compete with more standard subjects for teaching space and compete for the attention of students, who are often dissatisfied with the fuzziness of the subject and its lack of hard rules compared to other engineering subjects. Based on 10 years of practice, Polillo proposed a teaching approach that prioritized the design experience over teaching abstract HCI concepts. The approach included assigning quick projects that address real problems chosen by students, developing interactive prototypes, coaching the students, and refining the prototypes to reveal interaction problems.

Johan Aberg (2010) explained that CS students struggled with the extra reading requirements and the fuzzy nature of the studied material compared to the facts taught in other core courses. Furthermore, students perceived HCI knowledge as common sense and poorly connected to other courses, so they could not foresee its potential benefits for their careers. Aberg also described how these misperceptions could be mitigated by designing a new course that offered projects motivated by timely research problems, demanded working prototypes, and enabled students to use technologies they considered interesting.

Muzafar Khan (2016) provided suggestions for teaching HCI to undergraduates in Pakistan. Like educators in the developed world, he recommended designing the content around issues that matter to students. He also recommended increasing the number of course credit hours so that students could practice during lab time, and elaborating on the importance and depth of the topic, because students expect the content to focus only on interface design.

Adopting a project-based, hands-on learning approach and motivating the students through projects related to social challenges were key to encouraging Egyptian engineering students to learn about HCI (Lazem, 2016). Lazem further recommended using resources such as magazine articles and short videos to explain the role of HCI in the design of modern digital technologies.

Methods

As the course instructor, I used an action research methodology to develop my practice of teaching introductory HCI courses. The decision to design a short introductory course was motivated by the fact that the department where I taught HCI offered HCI courses under the humanities, which CS students perceived as less interesting and less worthy of their time. The HCI courses carried fewer credit hours than regular CS courses and had no lab time. Furthermore, the final exam accounted for 60% of the students' final grades, leaving 40% for the year's work and two mid-term exams. The grades assigned to project assignments did not reflect the amount of work the students were required to put into these projects. This was a challenge for me, given that best practices for HCI education recommend that students practice the concepts through project work. Another fundamental challenge I faced was that the students did not appreciate users' involvement in technology design and preferred to make design decisions on their own. For all these reasons, the goal of this action research project was to answer the research question: how might an engaging and motivating learning environment be created to help students develop an understanding and appreciation of users' role in design through a proper application of HCI methods?

The HCI education literature inspired me to follow the recommendations of other HCI educators who faced similar challenges. Examples include emphasizing working prototypes (Aberg, 2010), coaching the students (Polillo, 2009), providing peer evaluation of students' designs (Ciolfi & Cooke, 2006), and using teamwork and project-based approaches that rely on real societal problems (Aberg, 2010; Khan, 2016; Polillo, 2009). I therefore focused on designing a student-centered, open, reflective, active learning environment, which I improved over three action research cycles. The evaluation of the third cycle revealed that, besides learning about HCI, the students were drawn to my courses by the teamwork, the novelty of the hands-on experience and technology prototypes, and the fact that the course projects addressed social challenges. However, the emphasis on creating an engaging environment that appealed to students came at the expense of teaching HCI methods in depth. In the fourth cycle, presented in this article, I changed the learning design to provide more time and mentorship for educating the students about HCI methods.

Recruitment

The course announcement was sent to the three departments of the Alexandria Faculty of Engineering where students study computer programming: Computer and Systems Engineering (CSE), Electrical Engineering (EE), and the Computer and Communication special program (CCP). An online survey was used to filter the applications based on the students' grade point average (GPA) and their previous experience in other projects. In the institution, GPA reflects the students' exam performance more than their teamwork skills and hands-on experience. Therefore, the online survey asked the students to describe their contributions to a project of their choice and to state their preferences among project tasks (e.g., information gathering, exploring design ideas, learning new devices and tools, or programming). The students' answers to these questions, together with their GPA, formed the basis for selecting them to join the course. When two students had similar GPAs, preference was given to the student with more hands-on skills. The students were also chosen based on their motivation to join the school, as expressed in their answers to questions related to the school's work-life balance theme. Of 104 applicants, 35 students (13 females and 22 males) from the third, fourth, and fifth (final) years were selected and attended the school. I assigned the students to mixed-ability, mixed-gender teams. Participation in the school was free of charge. The students received no course credit but were awarded a training certificate upon completing the school activities.

Learning Design

In earlier action research cycles, the course theme featured certain technologies (e.g., low-cost education or health technologies), and the students were asked to redesign them for new user groups. In the presented course, the students were asked to investigate work-life balance in the lives of different Egyptian user groups: college students, academic researchers, and farmers. The students were tasked with proposing working prototypes based on an understanding of each group's lifestyle. As the instructor, I did not predefine the nature of the technology, so that students could focus on understanding their users' work-life balance, or lack thereof, before designing technical solutions.

The course took place in a research institute during the two weeks of the academic winter break. All the lectures were scheduled in the first week of the course. Lectures lasted one hour on average, and the rest of the day was dedicated to hands-on work and readings. The students were given introductory material (Rogers, Sharp, & Preece, 2007) and supplementary readings about technology and work-life balance (Bødker, 2016; Fleck, Cox, & Robison, 2015; Gibson & Hanson, 2013; Skatova et al., 2016). Data gathering and analysis activities were conducted according to structured assignments. The second week was dedicated to the technical implementation of technology prototypes and was mostly led by the students. My role was limited to providing feedback and helping them prepare for their final presentations and demos. On the last day, the students presented their projects at an open-house event attended by CS faculty and the dean of the Faculty of Engineering.

The course had three core assignments described as follows.

Pitch Your Work-Life Balance Idea

The pre-course survey asked each student to propose a technology idea that would help a user group of their choice achieve work-life balance. On the first day of the school, the students were asked to pitch their ideas (30-second pitches) in groups of three and vote for the best pitch, which then competed against the other winning pitches to select the three best ideas. A class discussion about the winning ideas followed. The students thought their original ideas were neither applicable nor creative, as one student expressed in the feedback: “It was helpful in breaking the ice, that’s all. In different circumstances, it might have been stimulating as one can hear good ideas, but in my opinion, no idea was stimulating that day” (Student 11).

This was rather surprising to me because, in my previous experience with similar cohorts, the students tended to judge ideas by their technical sophistication rather than their creativity or usefulness. We had a follow-up discussion to solicit the students' opinions about what distinguishes a good idea and where inspiration might come from. The students suggested that meeting users and gathering more data about their activities could mitigate the drawbacks they had observed in the winning ideas. The discussion was successful, as it highlighted the value of understanding end-users and the motivation for following a user-centered approach.

Data Gathering

After the data gathering lecture, the students were asked to work in pairs and interview each other, and then work in teams to prepare interview questions for their intended users. The interview questions were presented to the whole cohort on the second day. The students and I provided feedback. All teams were asked to conduct interviews (and online questionnaires where applicable) with potential users. The exercise took roughly six hours over two days.

Data Analysis

After gathering interview data, the students were guided through making sense of the collected data and brainstorming design ideas. The data analysis and ideation assignment was adapted from IDEO’s Human-Centered Design ToolKit (https://www.ideo.com/post/design-kit). The exercise took roughly four hours. Every team shared stories and recordings of their interviews, took notes of interesting insights, voted on the most interesting ones, and categorized them into themes. Each team then chose a number of themes and developed opportunity areas by generating multiple “How might we” questions and brainstorming solutions for selected questions. Finally, every team chose two solutions to prototype and implement.

Field Work and Research Ethics

As the course involved working with human participants, a short introduction was given on obtaining the necessary ethical approval of the research design and the users' consent before data gathering activities. Instructions were given to guide the students on how to introduce themselves and the purpose of the research to their participants. At least one student from each team was asked to complete a freely offered Human Subject Protection training (https://about.citiprogram.org/en/series/human-subjects-research-hsr/).

Evaluating Students’ Learning

Because the short course was mainly intended to sensitize the students to the subject, and because its learning design differed fundamentally from the learning styles of the other computing courses, it seemed reasonable to use formative assessment to evaluate whether and how the students learned rather than what they learned. Formative assessment is defined as “all those activities undertaken by teachers and/or by their students [that] provide information to be used as feedback to modify the teaching and learning activities in which they are engaged” (Black & Wiliam, 1998, p. 7). In addition to my personal observations, I relied on two online surveys to synthesize how the students learned: a peer-review survey and a post-course feedback survey. After the first week, midway through the course, the teams presented their project prototypes in a demo session, and the students optionally completed a peer-review survey of the presented demos. Sixteen students responded to this survey. Upon completing the course, the students could optionally answer another online survey to reflect on the learning design and suggest improvements for future offerings. Twenty students responded to the post-course survey.

Summary of Students’ Projects

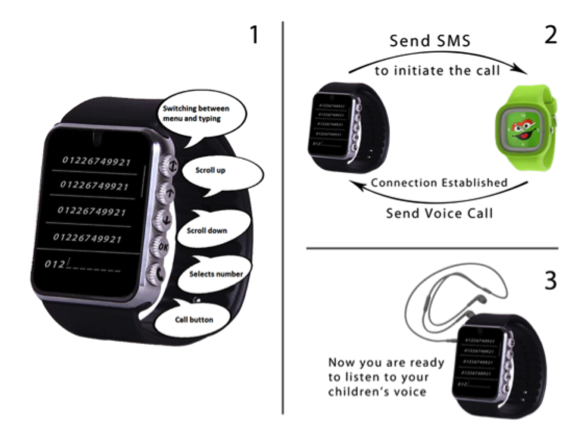

Table 1 summarizes the students' projects. As Table 2 shows, all the projects involved end-users to a similar extent. The projects were diverse in the functionality and form of the technology and reflected the students' understanding of their participants' lifestyles and the kind of work-life balance they needed. A notable example is Wesal (Figure 1), an Arabic word for connection: a system of two wearable wristwatches that aimed to help low-literate farmers connect with their children during the long periods (up to 20 days a month) the farmers spend away from home. The design was inspired by domestic media space concepts (Judge, Neustaedter, & Kurtz, 2010), in the sense that a father could connect to his child's watch at any time and listen to the child's activities to feel part of the child's life. Even though the produced prototype was limited to the connection circuits, the team paid attention to form factors and the aesthetics of the design. The child's watch was colorful, while the father's watch featured multiple function buttons to ease interaction for a low-literate person.

Figure 1. Wesal Design: two wearable devices, one for the father and one for the child. The child’s watch is colorful and analogue with a simple embedded microphone. The father’s watch is digital with simple buttons for low-literate users.

Table 1. Students’ Projects

| User Group | Project | Prototype | Team Composition* |
|---|---|---|---|
| Students | Lecture Miner | An audio-filtering circuit that converts a lecturer's speech to text and filters keywords to prime an Internet search. The results are offered through a personalized mobile application that provides supplementary educational material relevant to a student's career path. | 2 CSE, 7 EE, 3 CCP; 4 females, 8 males |
| | Brain Crucible | A mobile application that recognizes stressed students using a brain-computer interface and provides recommendations to alleviate their stress. | |
| Academic Researchers | Research Hub | A website that helps post-graduate researchers connect with established local researchers who share their interests, to learn about their current projects and open opportunities. | 1 CSE, 6 EE, 4 CCP; 4 females, 7 males |
| | Alfred the Butler | A telepresence robot that performs remote audio and video streaming to save academics the cost of travelling to conferences and to help them complete administrative tasks remotely. | |
| Farmers | Wesal (Arabic for connection) | A wearable device that facilitates farmers' communication with their children during the long periods they spend away from home. | 0 CSE, 7 EE, 5 CCP; 5 females, 7 males |
| | Hamada (Arabic male name) | A device that uses sensors to measure humidity, temperature, and soil moisture and provides expert recommendations, saving the time the farmers spend travelling for farm visits. | |

*Computer and Systems Engineering Dept. (CSE), Electrical Engineering Dept. (EE), Computer and Communication special program (CCP). Each team prototyped two solutions, so the composition shown applies to both projects of that user group.

Table 2. Users’ Involvement in Students’ Projects

| User Group | Projects | Users' Involvement in the Design |
|---|---|---|
| Students | Lecture Miner, Brain Crucible | Online questionnaire answered by 35 participants and semi-structured interviews with 5 participants |
| Academic Researchers | Research Hub, Alfred the Butler | Semi-structured interviews with 5 participants |
| Farmers | Wesal, Hamada | Semi-structured interviews with 5 participants |

Results

The following sections present the results for the peer-review survey and the post-course survey. The students’ responses to the peer-review survey indicated a shift in their focus from technological details to design features and their suitability for the intended users. The post-course survey showed an overall satisfaction with the course and revealed the difficulties the students had in the data gathering and analysis phases.

Peer-Review Survey

The peer-review comments the students provided on the first demos were categorized as Technical-Oriented or Design-Oriented (the students were not instructed to write specifically about these categories). The first category covered comments that discussed technical implementation details, while the second covered comments on form factors, usage scenarios, user experience, and technology adoption. Two independent raters categorized the students' comments. Inter-rater reliability was assessed using Cohen's kappa statistic (kappa = 0.5, 95% CI = 0.12), which corresponds to moderate agreement under Cohen's interpretation (McHugh, 2012). Table 3 shows the number of peer-review comments in each category, as agreed upon by the two raters. Design-Oriented comments outnumbered Technical-Oriented ones, which is consistent with the first week's focus on understanding the users and the design process. While inconclusive, this suggests that the students were progressing in understanding their users and discussing design alternatives with respect to various use cases. Wesal (Figure 1) received the most design comments, which discussed its form factors, possible scenarios for parent-child interaction using the system, and concerns such as the children's privacy.

(Note: The students preferred to give their feedback in English, translating it themselves from Arabic.)

The children need to have some control on the device, they could be not able to contact [connect] with their parent at the same time that their parents asked to contact them. – Student feedback on the Wesal Design
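The kappa value reported above measures how far the two raters agreed beyond the agreement their individual labelling frequencies would produce by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the chance agreement. The Python sketch below illustrates that formula for two raters, assuming each rater assigns exactly one category per comment. The function name `cohens_kappa` and the four example labels are hypothetical; they are not the study's data or analysis script, and the sketch computes only the point estimate, not a confidence interval.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who each label the same items once.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and
    p_e is the agreement expected by chance from each rater's label frequencies.
    """
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items that both raters put in the same category.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each category, multiply the two raters' marginal
    # proportions, then sum over categories.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1.0:
        # Both raters used a single identical category for every item, so kappa
        # is undefined (0/0); returning 1.0 here is a convention of this sketch.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical labels for four comments (not the study's data).
    rater_1 = ["technical", "design", "design", "technical"]
    rater_2 = ["technical", "design", "technical", "technical"]
    print(cohens_kappa(rater_1, rater_2))  # 0.5
```

With these hypothetical labels the raters agree on three of four comments (p_o = 0.75) and chance agreement is p_e = 0.5, giving kappa = 0.5, the same moderate band as the value reported above.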

Table 3. Peer-Review Survey Responses After the Teams’ First Demo

| Project | # of Technical-Oriented Students' Comments | # of Design-Oriented Students' Comments |
|---|---|---|
| Lecture Miner | 4 | 15 |
| Brain Crucible | 5 | 11 |
| Research Hub | 4 | 12 |
| Alfred the Butler | 6 | 8 |
| Wesal | 4 | 21 |
| Hamada | 9 | 11 |
| Total | 32 | 78 |

Note: The table shows the number of comments classified as technical-oriented or design-oriented by the two raters.
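As a quick arithmetic check on Table 3, the sketch below recomputes the column totals and each project's share of design-oriented comments from the counts in the table. It is an illustrative tally only; the names `TABLE_3` and `design_share` are hypothetical and not part of the study's analysis.

```python
# Comment counts transcribed from Table 3: (technical-oriented, design-oriented).
TABLE_3 = {
    "Lecture Miner": (4, 15),
    "Brain Crucible": (5, 11),
    "Research Hub": (4, 12),
    "Alfred the Butler": (6, 8),
    "Wesal": (4, 21),
    "Hamada": (9, 11),
}


def design_share(technical: int, design: int) -> float:
    """Fraction of a project's peer-review comments that were design-oriented."""
    return design / (technical + design)


# Column sums; these should reproduce the Total row of Table 3.
total_technical = sum(t for t, _ in TABLE_3.values())
total_design = sum(d for _, d in TABLE_3.values())

for project, (technical, design) in TABLE_3.items():
    print(f"{project:<18} {design_share(technical, design):.0%}")
print(f"{'Total':<18} {design_share(total_technical, total_design):.0%}")
```

Summing the columns reproduces the reported totals (32 and 78), so about 71% (78 of 110) of the comments were design-oriented. Design-oriented comments outnumbered technical ones for every project, with the narrowest margins for Hamada (11 vs. 9) and Alfred the Butler (8 vs. 6).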

Post-Course Survey

The post-course survey showed that the students found the school intellectually stimulating (85%), well coordinated (95%), and well taught (90%), and that they felt supported with useful resources (70%). The students also agreed that they were required to work to a high standard (80%), learned new ideas and skills (95%), applied knowledge to practice (85%), and were satisfied with the final outcomes (90%). Regarding teamwork, 70% of the students were satisfied; selecting the students and forming the teams was a challenge in such a demanding short course.

In their feedback on the pitching activity, 85% of the students found it helpful (40%) or very helpful (45%) as an ice-breaking activity that helped them learn about each other’s backgrounds and interpretations of the work-life balance problem, as illustrated by the following comments:

As it help[s] us to understand the target of the school and help[s] us to contribute with others. (Student 17)

It was good knowing how everyone saw work-life balance, their backgrounds and ideas. (Student 10)

Additionally, 90% of the students found the data gathering assignment helpful (20%) or very helpful (70%), particularly in revising their assumptions about their users as they expressed in the following comments:

We had a sample of user group[s] that [were] not our “everyday friends,” so before the interviews we had many assumptions about them we discovered later that they were totally wrong. (Student 12)

Our project was difficult to tackle, because we were addressing people who were part of the problem, so the data gathering was a reference to us and it gave us great credibility and we offered our results as open source so that anyone can check them. (Student 1)

Some students described difficulties with the assignment because there was no single right way to do it. This was unusual for some of them and meant they had to hold team discussions and iterate on the questions.

It was hard getting interview questions. There was not a systematic way to do it. (Student 10)

… we had got [sic] a little confusion as the interviewees was [were] not similar . . .but we overcome [overcame] this confusion by filtering idea[s]. (Student 7)

The comment below highlights another difficulty some students in this cohort faced when they were asked, unusually in their view, to focus on understanding the problem rather than looking for a solution that fit their own definition of it. Though not a majority, those students had a difficult time putting their ideas aside, which was somewhat stressful for their teams.

As it is a research school, I think this activity was the corner stone. Yes, people didn’t fully understand the process and often jumped to conclusions and products when they were not supposed to, but for those with open minds and a longer attention span, it was really great. It should be stressed upon being neutral and just in this activity, maybe even a little test in the application for the school or the HR interview -if available- to see if the applicant has this mindset already or [is] a hopeless case of enforcing their ideas and so, but that is just my opinion. (Student 11)

As for the data analysis and ideation phase, almost all the students had difficulty getting this exercise right the first time. The first insights they wrote contained suggestions for solutions rather than insights from the interviews; hence, they had to rewrite them after consulting with me. Categorizing the insights into abstract themes was also challenging for them. Deciding on and writing a good theme was an ambiguous task, which was somewhat surprising because abstraction is one of the computational thinking skills they had presumably mastered. Hence, the students’ concern about the amount of time allotted to this phase was legitimate.

This was the peak of the school in my opinion. It should have been given more time, attention, and supervision because of the aforementioned reasons about people skipping the data gathering phase. (Student 11)

This assignment took half a day in the scheduled syllabus and required close mentoring to go through most of the insights from the data with the students. It is worth noting that I was dividing my time among all the groups, so some groups received guidance rather late in the process. I recommend allocating more time to this exercise so that teams can iterate on the data and hold discussions.

In the post-course survey, 90% of the students thought writing and discussing insights was helpful (25%) or very helpful (65%) because it helped them organize the data, form a shared understanding, and focus on the salient issues in the data.

The responses were many and no matter how we tried to focus, we always got lost, the idea of electing the insight was brilliant and saved time and effort and it proved it was worthy [sic] its salt because based on these insights, we got ideas, we prototype[d] them and got pretty positive reviews. (Student 1)

We took so muc[h] time but it taught me a lot. It was the same idea as we listen[ed] to answer not to understand. Putting our judgments aside was the hardest part. (Student 2)

…ability to summarize an activity you made to clear points, to ignore the “not useful” details and focusing on big points was a great skill to learn from such activity[.] Was it helpful for the design process? Yes of course, it made us focus our thought[s] on clear points rather than a big open area of problems. (Student 12)

Furthermore, they appreciated the fact that this was a team exercise; as one student put it, “when two people share the interview they look at it from diff[erent] perspectives.” (Student 6)

As for the ideation part, in the post-course survey, 80% of the students thought it was helpful (15%) or very helpful (65%) as illustrated by the following comments:

Breaking down the insights and trying to build ideas by simply asking “how might we” which is a childish (in a good way) innocent question and it abstracts all the complexities and obstacles that would get in the middle of the process, it was something we were familiar to [with] and we got a lot of “How might we’s. […] For me, it was an easy, yet organized way, to start the journey of brainstorming. (Student 1)

This question was like the ideas generator, it helped us a lot[. W]e were able to schedul[e] the ideas and determine which one [was] the mo[st] important. (Student 7)

Other students reported difficulties and felt they needed more time and mentorship to build consensus in their teams or to iterate on the generated questions.

It went through many iterations, it was hard but [led] to a refined idea. (Student 4)

It is helpful in general, or so I think, but I don’t think it was helpful. Not having a mentor who has experienced this phase before made us divide into more than a group, some thought of general insights that they extracted from our data, some thought of ideas for the project, and some thought of their own problems. This phase is critical and needs guidance. (Student 11)

I think that [the] thought style was very nice, but it was required of us to do it in a very short time and I think this is not correct because creativity is because we know new and different ideas to ask but need time. (Student 20)

Some students thought that the ideation phase was unnecessary and that the organized insights were enough to start a discussion and exchange ideas based on a shared understanding of the problem. However, in my opinion, those students did not find it easy to produce plenty of “how might we” questions and ideas. They were self-conscious about the quality and practicality of what they said or wrote, which prevented them from achieving the primary goal of the task: generating plenty of questions to be judged later by the team. This suggests that a different type of scaffolding and/or ideation method should have been used.

I don’t feel we really got the point of this one. [Es]pecially that the produced ideas were so far away from the questions. (Student 6)

I think this phase didn’t have a great impact, after we agreed on [a] problem, I think discussion will be better, because I will hear others ideas to solve this problem and will lead me to generate an improved idea based on their thinking. (Student 5)

Maybe it helped a little but I think the insights were enough to start the idea generation phase. (Student 8)

In the post-course survey, the students were asked about their perceptions of the end-user’s role in the technology design and/or software development process, and about the extent to which the school had contributed to that perception. The students indicated that input from end-users helped frame the problem, corrected the designer’s assumptions, and provided feedback throughout the project, as shown in the following comments:

They gave the designer the frame he is going to work inside, the Do’s and Don’t[s.] Do’s he must take care of. [T]hey work as eye openers for some aspects he may overlook, and help him figure out what design is good for them. (Student 12)

They give us the path we need to flow to reach the prospect(skyline) we designed our product for rather than working without [a] guide line for our work. (Student 15)

Further, the end-users provide helpful feedback throughout the project: “[…] Their input is valued in the technology [design] process as they know what their problems are […] Their input is valued during the software development phase, because they can tailor the UI and UX to what fits them most.” (Student 4)

Overall, 95% of the students agreed that the school has contributed to their views about end-users. They cited the lectures, interviews, and ideation activities as important in forming these views.

… I thought HCI was more about UX and how users interact with things already built. I learned how the companies that don’t have revolutionary tech still succeed. I used to assume a lot about the user-base. Most start-ups do skip the entire process entirely but that’s due to limited resources, so I never encountered that before. For me that was the real takeaway from the winter school. (Student 14)

Some students found one activity particularly useful, in which they were asked to identify the differences between good and bad designs using the website Bad Human Factors Designs (http://www.baddesigns.com/index.html), as highlighted in this comment:

I remember when we had a task to find some bad designs & put hands on their defects so that we can reach the principles of good design, [t]his task helped a lot to determine how great the impact the end user has on the product design. (Student 8)

The students highlighted support for teamwork, a longer school duration, and particularly a longer technical implementation period as areas for improvement. Extending the school depends mainly on the resources available to the instructor. However, students often need less guidance during technical implementation than during design activities, since they were selected based on their technical skills. The students could therefore be given one extra week to polish their projects with online guidance, which would allow teams to work under less time pressure.

Discussion

The presented learning design challenged the students to start their investigations from an unfamiliar point: They were given just a description of a user group and had very little knowledge about the type of technology that could be used for their project. They had to learn to trust the design process. Compared to earlier versions of this course, the design challenge offered a more systematic HCI learning experience. As the students’ instructor, I found it rewarding to see the diverse technology prototypes and the students’ growing ability to use innovative techniques to produce a working prototype, which turned out to be a key motivator for this cohort. Nevertheless, there are some lessons to be learned.

Using interviews to gather data about end users was a straightforward exercise for the majority of the students. The process was also an eye-opener for the students, prompting them to revise the assumptions they had made about the end users and to consider how technology can affect their users’ lives. The majority of students found making sense of the collected data and the ideation phase rather challenging, so sufficient time should be dedicated for groups to do multiple analysis iterations. Peer feedback could also help, as teams could exchange experiences and review each other’s data. It is also worth noting that this is a challenging learning style for students who are used to being on the receiving end during lectures. Hence, instructors should explore ways to make this type of activity fun and engaging.

Furthermore, it is natural to expect that some students will not be enthusiastic about user-related tasks and some of them may lack the analytical skills needed to excel in these tasks. The pragmatic learning goal for these students could be that they develop some sensitivity towards the importance of involving the users in the design process.

It is also important to note that action research does not provide a strong basis for generalization because it aims at developing and strengthening the researcher’s practice in a certain context (Arnold, 2015). Sharing reflective action research can help disseminate lessons learned by instructors and motivate other educators to adopt them.

Recommendations

The following recommendations can be taken from this action research:

- Explore ways of offering HCI introductory courses using a blended learning model (Garrison & Kanuka, 2004) that mixes online and face-to-face learning, where instructors and students who have taught or taken these courses could create lecture videos and provide tips for new HCI champions and students, while local educators lead the hands-on learning experience.

- Re-design the data analysis and ideation exercises.

- Invite students from different disciplines (e.g., psychology, sociology, anthropology) to join the course to create a diverse and rich learning experience. The course should be designed to encourage knowledge exchange and integration among students as opposed to solely assigning user research tasks to students from humanities backgrounds.

- Offer the course materials and a summary of students’ feedback as a resource for other HCI champions.

Conclusion

I propose in this article that strengthening HCI education programs would help build a workforce that is adept at understanding the need to design inclusive technologies. This is especially true in the Egyptian context, where interdisciplinary education and research programs are in their early stages.

In this article, I introduced a design for a condensed introductory course to champion HCI education in engineering programs. The pedagogical approach was appreciated by the students and, as shown in their survey responses, successfully contributed to sensitizing them to the users’ role in the user-centered design approach. The students particularly struggled with data analysis activities that required abstracting and categorizing collected qualitative data, which suggests that more time and scaffolding should be dedicated to these tasks. Producing a working prototype is essential for this cohort of students even in a short course; hence, future HCI assignments should be designed with this in mind.

Tips for Practitioners

The following tips are what I learned while working on this action research project:

- Create a good rapport with the students and encourage them to discuss any concerns regarding the differences between HCI and other computing courses with you.

- Dedicate time every day for students to reflect on the previous day’s activities. Students will learn when they think about what they do in addition to completing the hands-on aspects of a project.

- Support the teams that lack cohesion by joining some of their activities as a team member.

- Encourage hands-on exercises, such as interviewing and prototyping, because they are very valuable for fostering engineering students’ interest in HCI.

Acknowledgements

I thank all the students who participated in my HCI introductory courses. I would also like to thank the City of Scientific Research and Technology Applications and DELL EMC for sponsoring the course presented here.

References

Abdelnour-Nocera, J., Clemmensen, T., & Guimaraes, T. G. (2017). Learning HCI across institutions, disciplines and countries: A field study of cognitive styles in analytical and creative tasks. In R. Bernhaupt, G. Dalvi, A. Joshi, D. K. Balkrishan, J. O’Neill, & M. Winckler (Eds.), Human-Computer Interaction — INTERACT 2017: 16th IFIP TC 13 International Conference, Mumbai, India, September 25-29, 2017, Proceedings, Part IV (pp. 198–217). Cham: Springer International Publishing. https://doi.org/10.1007/978-3-319-68059-0_13

Aberg, J. (2010). Challenges with teaching HCI early to computer students. In Proceedings of the fifteenth annual conference on Innovation and technology in computer science education – ITiCSE ’10 (pp. 3–7). Bilkent, Ankara, Turkey: ACM. https://doi.org/10.1145/1822090.1822094

Alabdulqader, E., Abokhodair, N., & Lazem, S. (2017). Human-computer interaction across the Arab world. In Proceedings of the 2017 CHI Conference Extended Abstracts on Human Factors in Computing Systems – CHI EA ’17 (pp. 1356–1359). Denver, CO, USA: ACM. https://doi.org/10.1145/3027063.3049280

Arnold, L. (2015). Action research for higher education practitioners: A practical guide. Retrieved from https://lydiaarnold.files.wordpress.com/2015/02/action-research-introductory-resource.pdf

Bidwell, N. J. (2016). Decolonising HCI and interaction design discourse. XRDS: Crossroads, The ACM Magazine for Students, 22(4), 22–27. https://doi.org/10.1145/2930884

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

Bødker, S. (2016). Rethinking technology on the boundaries of life and work. Personal and Ubiquitous Computing, 20(4), 533–544. https://doi.org/10.1007/s00779-016-0933-9

Churchill, E. F., Bowser, A., & Preece, J. (2016). The future of HCI education: A flexible, global, living curriculum. Interactions, 23(2), 70–73. https://doi.org/10.1145/2888574

Ciolfi, L., & Cooke, M. (2006). HCI for interaction designers: Communicating “the bigger picture”. Inventivity: Teaching Theory, Design and Innovation in HCI, Proceedings of HCIEd, 1, 47–51. Retrieved from https://pdfs.semanticscholar.org/1eb3/a1b3c9871d0756f88625a6570829b55d4130.pdf

Cohen, L., & Manion, L. (1994). Research methods in education (4th ed.). London, UK: Routledge.

Cousin, G. (2009). Researching learning in higher education: An introduction to contemporary methods and approaches. New York, NY: Routledge.

Fisher, D. H., Cameron, J., Clegg, T., & August, S. (2018). Integrating social good into CS education. In Proceedings of the 49th ACM Technical Symposium on Computer Science Education (pp. 130–131). New York, NY, USA: ACM. https://doi.org/10.1145/3159450.3159622

Fleck, R., Cox, A. L., & Robison, R. A. V. (2015). Balancing boundaries: Using multiple devices to manage work-life balance. In Proceedings of the ACM CHI’15 Conference on Human Factors in Computing Systems (pp. 3985–3988). Seoul, Republic of Korea: ACM. https://doi.org/10.1145/2702123.2702386

Fullan, M. (2006). Change theory as a force for school improvement. In J. M. Burger, C. F. Webber, & P. Klinck (Eds.), Intelligent leadership: Constructs for thinking education leaders (Vol. 6). Dordrecht: Springer. https://doi.org/10.1007/978-1-4020-6022-9_3

Garrison, D. R., & Kanuka, H. (2004). Blended learning: Uncovering its transformative potential in higher education. The Internet and Higher Education, 7(2), 95–105. https://doi.org/10.1016/j.iheduc.2004.02.001

Gibson, L., & Hanson, V. L. (2013). Digital motherhood: How does technology help new mothers? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (pp. 313–322). Paris, France: ACM. https://doi.org/10.1145/2470654.2470700

Giglitto, D., Lazem, S., & Preston, A. (2018). In the eye of the student: An intangible cultural heritage experience, with a human-computer interaction twist. In Conference on Human Factors in Computing Systems – Proceedings (Paper No. 290). Montreal, QC, Canada: ACM. https://doi.org/10.1145/3173574.3173864

Judge, T. K., Neustaedter, C., & Kurtz, A. F. (2010). The family window: The design and evaluation of a domestic media space. In Proceedings of the 28th international conference on Human factors in computing systems (pp. 2361–2370). Atlanta, GA, USA: ACM. https://doi.org/10.1145/1753326.1753682

Khan, M. (2016). Teaching human computer interaction at undergraduate level in Pakistan. International Journal of Computer Science and Information Security (IJCSIS), 14(5), 278–280.

Lazem, S. (2016). A case study for sensitising Egyptian engineering students to user-experience in technology design. In Proceedings of the 7th Annual Symposium on Computing for Development (pp. 12:1–12:10). New York, NY, USA: ACM. https://doi.org/10.1145/3001913.3001916

Lazem, S., & Dray, S. (2018). Baraza! Human-computer interaction education in Africa. Interactions, 25(2), 74–77. https://doi.org/10.1145/3178562

McHugh, M. L. (2012). Interrater reliability: The kappa statistic. Biochemia Medica, 22(3), 276–282.

Patterson, D. A. (2005). Rescuing our families, our neighbors, and ourselves. Communications of the ACM, 48(11), 29–31. https://doi.org/10.1145/1096000.1096026

Polillo, R. (2009). Teaching HCI to undergraduate computing students: The quest for the golden rules. Retrieved from https://www.slideshare.net/rpolillo/teaching-hci-to-undergraduate-computing-students-the-quest-for-the-golden-rules

Rogers, Y., Sharp, H., & Preece, J. (2007). Interaction design: Beyond human-computer interaction (2nd ed.). Chichester, UK: John Wiley & Sons. Retrieved from http://www.id-book.com/

Romme, A. G. L. (2004). Action research, emancipation and design thinking. Journal of Community and Applied Social Psychology, 14(6), 411–510. https://doi.org/10.1002/casp.794

Sari, E., & Wadhwa, B. (2015). Understanding HCI education across Asia-Pacific. Proceedings of the International HCI and UX Conference in Indonesia on – CHIuXiD ’15 (pp. 65–68). Bandung, Indonesia: ACM. https://doi.org/10.1145/2742032.2742042

Skatova, A., Bedwell, B., Shipp, V., Huang, Y., Young, A., Rodden, T., & Bertenshaw, E. (2016). The role of ICT in office work breaks. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems – CHI ’16 (pp. 3049–3060). San Jose, CA, USA: ACM. https://doi.org/10.1145/2858036.2858443

St-Cyr, O., MacDonald, C. M., Churchill, E. F., Preece, J. J., & Bowser, A. (2018). Developing a community of practice to support global HCI Education. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems – CHI ’18 (Paper No. W25). Montreal, QC, Canada: ACM. https://doi.org/10.1145/3170427.3170616

Stachowiak, S. (2013). Pathways for change: 10 theories to inform advocacy and policy change efforts. The ORS Impact (October), 1–30.

Wu, J., Guo, S., Huang, H., Liu, W., & Xiang, Y. (2018). Information and communications technologies for sustainable development goals: State-of-the-art, needs and perspectives. IEEE Communications Surveys & Tutorials, 20(3), 2389–2406. https://doi.org/10.1109/COMST.2018.2812301

[:]