[:en]

Abstract

When developing a new product, it is common for designers to feel that they do not have enough information about users’ needs. This is especially true at the front end of a new product design process. An important component of this process is testing and validating potential design concepts. The aim of this study was to explore the validity of augmented reality (AR) and tangible augmented reality (TAR) as tools for evaluating the usability of a product. For this study, 70 college students were recruited to perform a usability evaluation of a space heater product and equivalent AR and TAR representations of it. The results indicate that overall TAR can be a reliable method for evaluating the usability of a fully realized product, especially for products with physical interface controls. However, TAR was not found to be reliable with respect to Ease of Use. AR was not found to be as reliable as TAR, either overall or with respect to any specific aspect of usability that was measured. Applications, limitations, and areas for further study are also discussed in this article.

Keywords

Tangible Augmented Reality, Augmented Reality, usability assessment, product design evaluation

Introduction

When developing a new product, it is common for designers to feel that they do not have enough information about users’ needs (Bruseberg & McDonagh-Philip, 2002). This is especially true at the front end of a new product design process (Moultrie, Clarkson, & Probert, 2007) when many different ideas for a product are considered. Gathering this information is strongly linked to product success, but the best way to collect it and which components of it will be most useful are not currently well defined (Creusen, 2011).

The general understanding is that the more realistic the product representation is the better a user will be able to evaluate the design concept. The use of detailed physical prototypes is often recommended for this, particularly for evaluating subjective attributes such as aesthetics and emotional appeal, ergonomics and usability, product integrity, or craftsmanship (Srinivasan, Lovejoy, & Beach, 1997). The main drawback with these types of prototypes is that they are both time consuming and expensive to make, meaning that they are only available later in the process or after major design decisions have been made. Even with rapid prototyping techniques, it is not feasible to construct a detailed model of every design permutation that might be considered.

The dilemma for a product designer is that one of the most useful times for input is during the early stages of concept development, but the best representations of a design are not available until the end. This is important because design defects generally become more time consuming and costly to fix the later they are identified (McGrath, Anthony, & Shapiro, 1992). An earlier understanding of how likely a design is to meet user needs can allow good concepts to be generated and selected more quickly, leading to fewer dead ends, fewer design iterations, and possibly fewer design defects.

Designers may use many different tools to solicit input from users about a design concept. It is not unusual to use abstract representations of products, such as a sketch or storyboard, to solicit user input. Based on this feedback, the designer may make modifications or adjustments to the design. However, when a user looks at one of these early representations and tells the designer that “I like this feature” or “I don’t like this feature,” there is no way to be sure that the user will have the same opinion of this feature when it is actually realized in the final product. Design defects become more costly to fix the later they are discovered, so early evaluation of a design is important to identify them. If a designer has a way to reliably validate that the design decisions made to generate a particular concept will likely meet user needs and preferences once the concept is realized, it can provide more confidence in moving forward with decisions made during the natural process of design iteration.

Mixed reality prototyping technologies such as augmented reality (AR) and tangible augmented reality (TAR) offer the potential for simulating and evaluating product concepts in more flexible and cost-effective ways. In order to collect detailed data about a new product, the traditional standard would be to use appearance models (which are as detailed as a real product in terms of materials, look and feel, weight, and other physical details) or to use a functional prototype. Either type of model involves significant time and cost to produce. This limits their utility in exploring novel designs because they are produced late in the development process. By this point most major design decisions will have been made and are difficult to change. It is both quicker and cheaper to produce a digital representation of a product that could be used to test design ideas much earlier as well as testing many more design iterations than would be possible with physically based prototypes. These possible benefits can only be realized provided that evaluating a product represented in AR or TAR is able to produce data that is equivalent to what could be obtained from a fully functional version (i.e., equivalent to a product that would actually be found in the marketplace) of the design. If this is not true of these digital prototyping methods, then designers that use them risk basing design decisions on flawed conclusions.

Usability testing is one of the most widely used and important methods for evaluating product design (Lewis, 2006). Usability is the effectiveness, efficiency, and satisfaction with which specified users can achieve specified goals in a particular environment (International Organization for Standardization, 1998). Because constructing highly detailed models of early concepts is not feasible, designers can utilize other types of concept representations such as sketches, storyboards, or 3D renderings.

An increasingly available tool for designers used to visualize and communicate a product concept is augmented reality. AR refers to a view of the real or physical world in which certain elements of the environment are computer generated. These virtual elements could be a modification of a current element in the real world or could be an entirely new element. Early applications allowed text to be overlaid onto a physical object in a real environment. The augmented text was provided through a head mounted display (HMD). The state of the physical object was detected and appropriate text displayed to the user to inform them how to interact with it (Starner et al., 1997).

AR has been applied in product development as a tool to enable interactive modification of design concepts with users (Santos, Graf, Fleisch, & Stork, 2003). Using a tracking system, HMD, and a PC-based graphical station, designers can visually present to users design options using 3D models. The user can comment on elements as they look around, and the designer can immediately modify elements of the design that is being viewed.

AR in product development often combines visualization with physical objects (Billinghurst, Kato, & Myojin, 2009) that can allow users to experience an augmented view and manipulate the augmented view by manipulating physical elements (Lee & Kim, 2009). The physical elements are typically unique markers that are printed on cards and placed in front of a user. When detected by an AR device, the markers are replaced in the view with a 3D virtual object. Users are able to place cards in specific locations to see how the product would look if it was physically there. The cards can also be held, turned, and rotated to view how a product would look from any angle. These kinds of AR systems can allow views of virtual interfaces, like a touchscreen or keyboard, to be overlaid on physical surfaces and allow real time interaction (Shen, Ong, & Nee, 2010). This can provide users with instant feedback and a natural kind of interaction with a virtual product/device (Lee, Nelles, Billinghurst, & Kim, 2004).

TAR works similarly but combines an augmented, mixed-reality view with a physical component. The physical part is generally a low fidelity representation of the product that is similar in shape, size, and so on. In this case, markers are attached to components of the model. AR software detects the markers and overlays an augmented 3D view of the matching component onto the model. This provides a view of what the product would actually look like overlaid on the model wherever it is placed in an environment. Instead of simply holding a single marker card, a user can pick up the physical model and directly interact with it while looking at the augmented view.

Most studies discuss techniques in which augmented reality can be used in product development to visualize concepts, such as in an educational setting (Estrada, Urbina, & Ocaña, 2018). Very few have evaluated AR as a tool for assessing or testing a design (Faust et al., 2018). AR and TAR represent potentially useful tools for evaluating design concepts, particularly earlier in the design process before appearance models or functional prototypes are available. These tools can also provide the ability to demonstrate a product with less time and expense than is involved in developing a high-fidelity physical model or prototype.

The purpose of this study is to explore the validity of AR and TAR as tools for evaluating the usability of a product. To do this, both an AR and a TAR representation based on an existing product were built. Usability evaluations of each of the representations were performed and compared to usability evaluations of the actual product. A prior investigation (Choi & Mittal, 2015) indicated that the usability of an AR representation of a product can be similar to the real thing. The product in this study is different, but similar findings are expected. Because the visible TAR representation has a similar level of fidelity to that of AR, we expect to find that usability evaluations of both an AR representation of a product and a TAR representation of a product will not be significantly different from usability evaluations of the product itself.

Methods

The following sub-sections outline the details of the study design, the participants, the test product, procedure, evaluation, and analysis of the methods.

Study Design

This study compared the usability evaluations of three representations of an existing product: AR, TAR, and the product itself. A within-subjects design was used where each participant performed a usability evaluation of all three representations. The representations were provided to each participant in a randomized order.
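The randomized presentation order described above can be sketched in a few lines. This is only an illustration under stated assumptions: the article does not say how the order was generated, so the function name `presentation_order`, the seed value, and the use of a simple shuffle (rather than, say, a counterbalanced Latin square) are all hypothetical.

```python
import random

# The three representations each participant evaluated.
REPRESENTATIONS = ["actual product", "AR", "TAR"]

def presentation_order(participant_id, seed=2024):
    """Return a shuffled copy of the three representations for one participant.

    The seed and the per-participant offset are illustrative assumptions;
    they only make the sketch reproducible.
    """
    rng = random.Random(seed * 1000 + participant_id)
    order = REPRESENTATIONS[:]
    rng.shuffle(order)
    return order

# One order per participant (70 participants in the study).
orders = {pid: presentation_order(pid) for pid in range(1, 71)}
```

Every participant still sees all three representations; only the sequence differs, which is what a within-subjects design with randomized order requires.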

Participants

For the study, 70 college students (32 male, 38 female) aged 18 or over (mean = 22.04, std. dev. = 3.54) participated. Each participant was recruited via word of mouth and participated on a voluntary basis with no compensation. The study was approved by the Georgia Tech IRB, and all participants provided informed consent.

Test Product

A Sunbeam heater (model SFH5264MW shown in Figure 1) was the product used. It was chosen because it is a relatively simple product without a large number of extra features and because it has straightforward physical controls. The controls consisted of two physical knobs, one above the other. The bottom knob had four discrete settings (clockwise from left to right): power off, fan only, low fan speed, and high fan speed. The top knob allowed continuous adjustment for the built-in thermostat, from lowest at the left to highest when turned clockwise to the right.

Figure 1. The Sunbeam model SFH5264MW heater.

To generate the AR and TAR representations, a 3D model of the heater was first created in SolidWorks. The 3D model was used to set up the AR representation of the heater (Figure 2) and the TAR representation of the heater (Figure 3). For the AR representation, the model was utilized within Vuforia, a cross platform AR application. The Vuforia app was run on an Android tablet that was mounted on a desk and pointed at a marker card. When viewed through the Vuforia app, the pattern on the marker was detected and replaced in the view with the 3D model of the heater. The controls were set up within the app so they were active and functional via the tablet’s touchscreen. When the power knob was turned on, a fan sound was played: low pitch and soft for the low setting and higher pitch and louder for the higher setting. The temperature adjustment could also be turned, and the virtual knobs visually showed the current position of each knob. The knobs were turned with a clockwise swipe motion over the knob itself (like putting a finger on top of the knob and rotating it).

For the TAR representation, markers were placed on the front of a physical model that approximated the size, shape, and control setup of the heater. The Vuforia application superimposed the digital 3D model over the physical model. All of the controls worked similarly to the AR case; however, they were not controlled through the touchscreen but by physically touching and turning the knobs on the model. The knobs themselves were set up so the feel was similar to the actual heater. The temperature control was a continuous adjustment and rotated smoothly, while the power/fan speed control clicked into place for each of the discrete settings. All interactions and current positions of the knobs were displayed through the tablet’s view.

Figure 2. Marker card enabling an AR representation of the Sunbeam heater.

Figure 3. Physical model and markers enabling a TAR representation of the Sunbeam heater.

Procedure

Each participant completed a series of tasks with the actual product and each of its two representations. The tasks included adjusting each of the controls/settings on the heater:

- Turn the Mode Control to “High-heat.”

- Turn the Mode Control to “Low-heat.”

- Turn the Mode Control to “Fan-only.”

- Turn the Thermostat Control to the lowest setting.

- Turn the Thermostat Control to a medium setting.

- Turn the Thermostat Control to the highest setting.

- Turn the Mode Control to “Off.”

The order of tasks was randomized for each participant and each representation of the heater. The final task was always to turn off the power.
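A minimal sketch of the task ordering rule described above: the six adjustment tasks are shuffled and the power-off task is always appended last. The function name `task_sequence` and the seed are hypothetical; the article does not describe the tool used to randomize tasks.

```python
import random

# The six tasks whose order was randomized.
SHUFFLED_TASKS = [
    "Turn the Mode Control to High-heat.",
    "Turn the Mode Control to Low-heat.",
    "Turn the Mode Control to Fan-only.",
    "Turn the Thermostat Control to the lowest setting.",
    "Turn the Thermostat Control to a medium setting.",
    "Turn the Thermostat Control to the highest setting.",
]
# The final task was always to turn off the power.
FINAL_TASK = "Turn the Mode Control to Off."

def task_sequence(rng):
    """Return one randomized task list that always ends with the power-off task."""
    tasks = SHUFFLED_TASKS[:]
    rng.shuffle(tasks)
    return tasks + [FINAL_TASK]

# A fresh sequence would be drawn for each participant and each representation.
sequence = task_sequence(random.Random(7))  # seed chosen only for reproducibility
```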

Evaluation

After completing the tasks with the heater or one of its representations, participants completed the USE questionnaire (Lund, 2001). The USE questionnaire is a 30-item survey that uses a 7-point Likert-type scale. Participants were instructed to mark the level on the scale that best matched their opinion of each of the statements. Valid responses range from “strongly disagree” (1) to “strongly agree” (7). Participants answered each question and were not provided with a “not applicable” (NA) option.

The questionnaire is made up of four sets of questions related to different aspects of usability: Usefulness (possible range of scores from 8 to 56), Ease of Use (possible range of scores from 11 to 77), Ease of Learning (possible range of scores from 4 to 28), and Satisfaction (possible range of scores from 7 to 49). A usability score is generated by summing the values of each survey item. Scores for each of these aspects of usability were also created by summing the individual items for the related questions.

The items on the USE questionnaire are the following:

Usefulness

- It helps me be more effective.

- It helps me be more productive.

- It is useful.

- It gives me more control over the activities in my life.

- It makes the things I want to accomplish easier to get done.

- It saves me time when I use it.

- It meets my needs.

- It does everything I would expect it to do.

Ease of Use

- It is easy to use.

- It is simple to use.

- It is user friendly.

- It requires the fewest steps possible to accomplish what I want to do with it.

- It is flexible.

- Using it is effortless.

- I can use it without written instructions.

- I don’t notice any inconsistencies as I use it.

- Both occasional and regular users would like it.

- I can recover from mistakes quickly and easily.

- I can use it successfully every time.

Ease of Learning

- I learned to use it quickly.

- I easily remember how to use it.

- It is easy to learn to use it.

- I quickly became skillful with it.

Satisfaction

- I am satisfied with it.

- I would recommend it to a friend.

- It is fun to use.

- It works the way I want it to work.

- It is wonderful.

- I feel I need to have it.

- It is pleasant to use.
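The scoring scheme described above (summing item responses per subscale and overall) can be sketched as follows. The function name `score_use` and the assumption that responses arrive as a flat list in questionnaire order are illustrative; only the item counts and the 1 to 7 response range come from the text.

```python
# Number of items per subscale, in the order the items are listed.
SUBSCALES = {
    "Usefulness": 8,
    "Ease of Use": 11,
    "Ease of Learning": 4,
    "Satisfaction": 7,
}

def score_use(responses):
    """Sum 30 Likert responses (1-7) into subscale scores and a total score."""
    expected = sum(SUBSCALES.values())
    if len(responses) != expected:
        raise ValueError(f"expected {expected} responses, got {len(responses)}")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("each response must be between 1 and 7")
    scores = {}
    start = 0
    for name, n_items in SUBSCALES.items():
        scores[name] = sum(responses[start:start + n_items])
        start += n_items
    scores["Total"] = sum(responses)
    return scores

lowest = score_use([1] * 30)   # every item answered "strongly disagree"
highest = score_use([7] * 30)  # every item answered "strongly agree"
```

The two extreme cases reproduce the possible ranges quoted in the text, for example 11 to 77 for Ease of Use and 30 to 210 for the total score.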

Analysis

The Kruskal-Wallis test was used to evaluate differences in the responses to the USE questionnaire between the three representations (AR, TAR, and the actual product). This non-parametric test was chosen due to the ordinal nature of the data. Similarly, the Mann-Whitney test was used to test pairwise differences among the three groups.
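For readers unfamiliar with the Kruskal-Wallis statistic, the following is a textbook sketch of the H statistic with the usual correction for tied ranks. It is not the authors' analysis code (the article does not name the software used), and the function names are hypothetical. With three groups the statistic is referred to a chi-square distribution with 2 degrees of freedom, whose upper tail probability is exactly exp(-H/2).

```python
import math

def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(pos, end + 1):
            ranks[order[k]] = mean_rank
        pos = end + 1
    return ranks

def kruskal_wallis_h(*groups):
    """Return the tie-corrected Kruskal-Wallis H statistic for the groups."""
    pooled = [x for g in groups for x in g]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)
    # Tie correction: divide by 1 - sum(t^3 - t) / (N^3 - N).
    counts = {}
    for x in pooled:
        counts[x] = counts.get(x, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    correction = 1 - tie_term / (n_total ** 3 - n_total)
    if correction == 0:
        raise ValueError("all observations are identical; H is undefined")
    return h / correction

def p_value_three_groups(h):
    """Chi-square upper tail for df = 2, which reduces to exp(-h / 2)."""
    return math.exp(-h / 2)

h_no_ties = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
h_with_ties = kruskal_wallis_h([1, 1], [2, 2])
```

As a rough check on the reporting, `p_value_three_groups(16.69)` is about .0002, which agrees with the p-value reported for the Satisfaction scores in the Results.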

Results

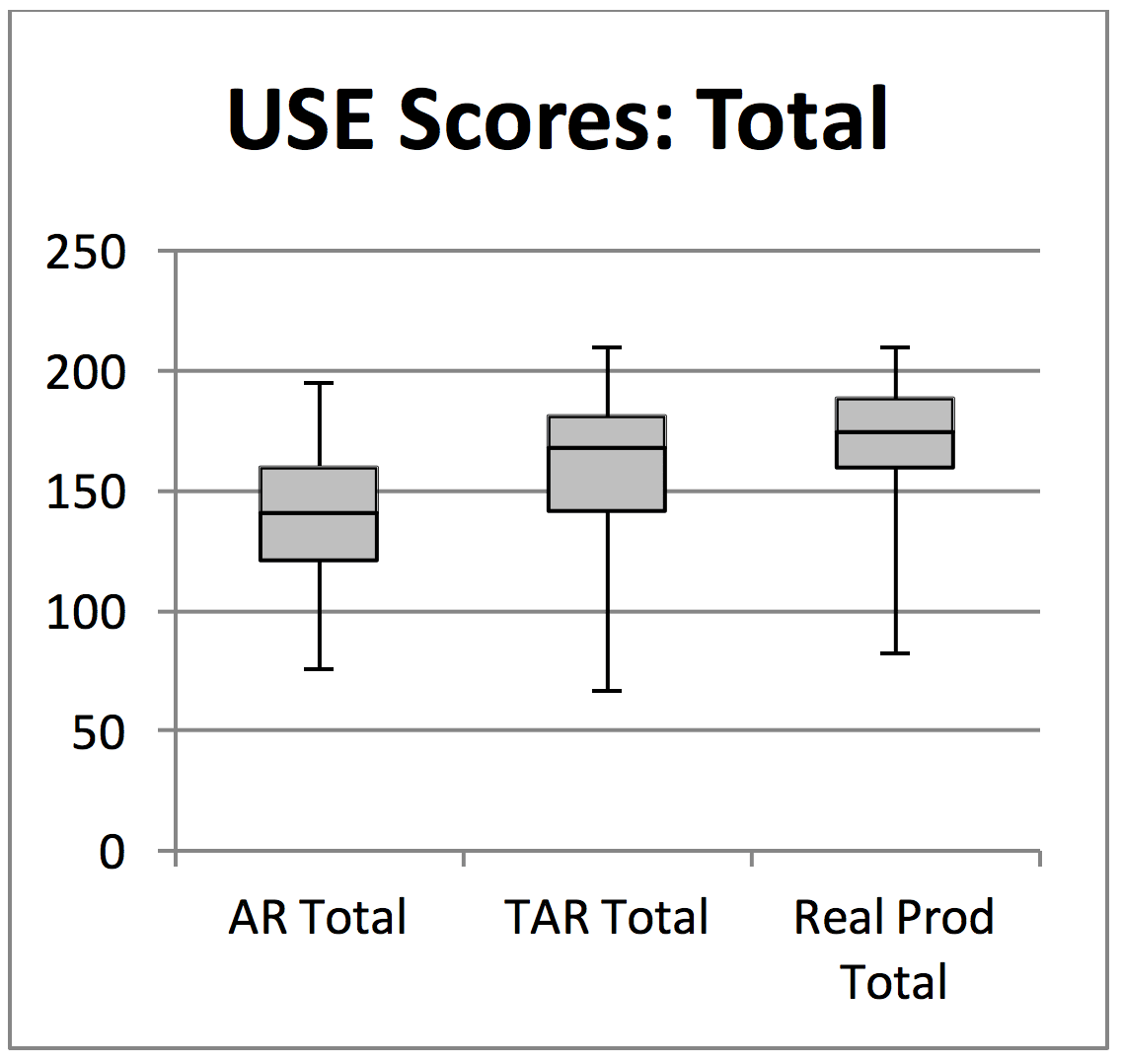

The item scores for the USE questionnaire were summed to provide an overall measure of usability. The possible scores for overall usability range from a lowest possible score of 30 to a highest possible score of 210. Table 1 shows the descriptive statistics from each of the user usability evaluations. Figure 4 shows a box plot of the Total USE scores.

Table 1. Descriptive Statistics for Total USE Scores for the Three Product Representations

| Product | Mean | Median | Std. deviation | Std. error | 95% CI for mean: lower bound | 95% CI for mean: upper bound |
| --- | --- | --- | --- | --- | --- | --- |
| Actual product | 169.79 | 174.5 | 27.14 | 3.24 | 163.31 | 176.26 |
| TAR representation | 160.9 | 167.5 | 29.23 | 3.49 | 153.93 | 167.87 |
| AR representation | 141.7 | 141 | 26.76 | 3.20 | 135.29 | 148.05 |

Figure 4. Box plot of total USE questionnaire scores.
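The standard error and confidence interval columns in Table 1 follow from the standard deviation and the sample size. The sketch below recomputes them for the actual product as a sanity check. The t critical value of 1.995 (two-sided 95%, 69 degrees of freedom) is an assumption, since the article does not state how the intervals were computed, and because the published mean and standard deviation are rounded, agreement is only expected to within rounding.

```python
import math

N_PARTICIPANTS = 70
T_CRIT_95 = 1.995  # assumed two-sided 95% t critical value for df = 69

def standard_error(sd, n=N_PARTICIPANTS):
    """Standard error of the mean: sd / sqrt(n)."""
    return sd / math.sqrt(n)

def confidence_interval(mean, sd, n=N_PARTICIPANTS, t_crit=T_CRIT_95):
    """Return (lower, upper) bounds of the confidence interval for the mean."""
    half_width = t_crit * standard_error(sd, n)
    return mean - half_width, mean + half_width

# Actual product row of Table 1: mean 169.79, std. deviation 27.14.
se_actual = standard_error(27.14)
ci_lower, ci_upper = confidence_interval(169.79, 27.14)
```

Under these assumptions the recomputed values land within a few hundredths of the tabulated standard error (3.24) and bounds (163.31 and 176.26).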

A Kruskal-Wallis test was conducted to evaluate differences among the three groups (the actual product, the AR representation of the product, and the TAR representation of the product) for the total USE questionnaire scores. The test, which was corrected for tied ranks, was significant, H-stat (df = 2, N = 70) = 36.08, p < .001. Follow-up comparisons using Mann-Whitney tests were conducted to evaluate pairwise differences among the three groups, controlling for Type I error across tests by using the Bonferroni approach. The results of these tests showed that the AR representation (M = 141.7, SD = 26.76) was rated significantly lower (p < .001) than the actual product (M = 169.79, SD = 27.14) for the overall total USE score. No significant differences were found between the total USE scores for the TAR representation and the actual product. These results support the hypothesis that usability evaluations of a TAR representation of a product will be similar to evaluations of the product itself. The results did not support the hypothesis that usability evaluations of an AR representation would also be similar.

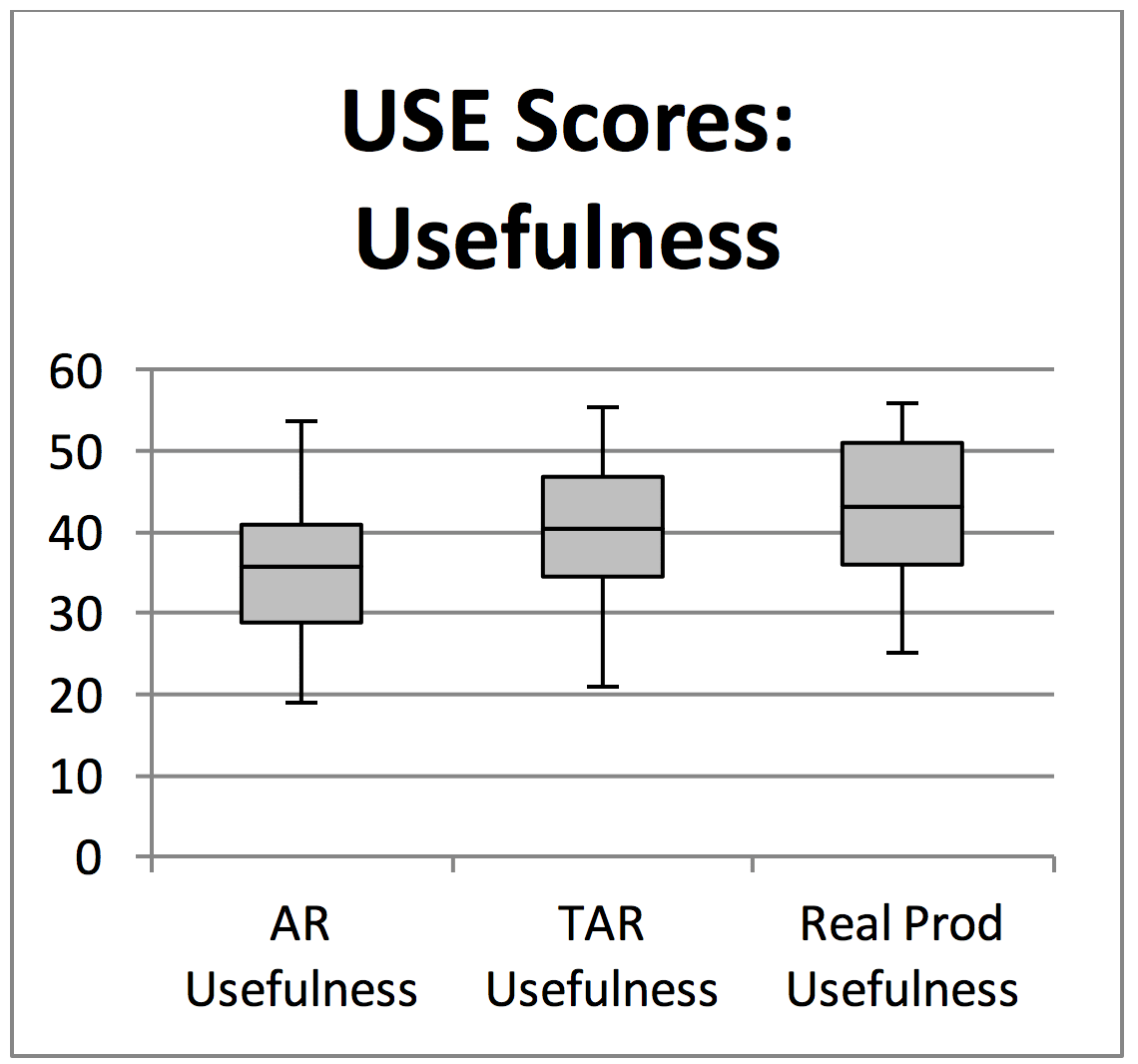

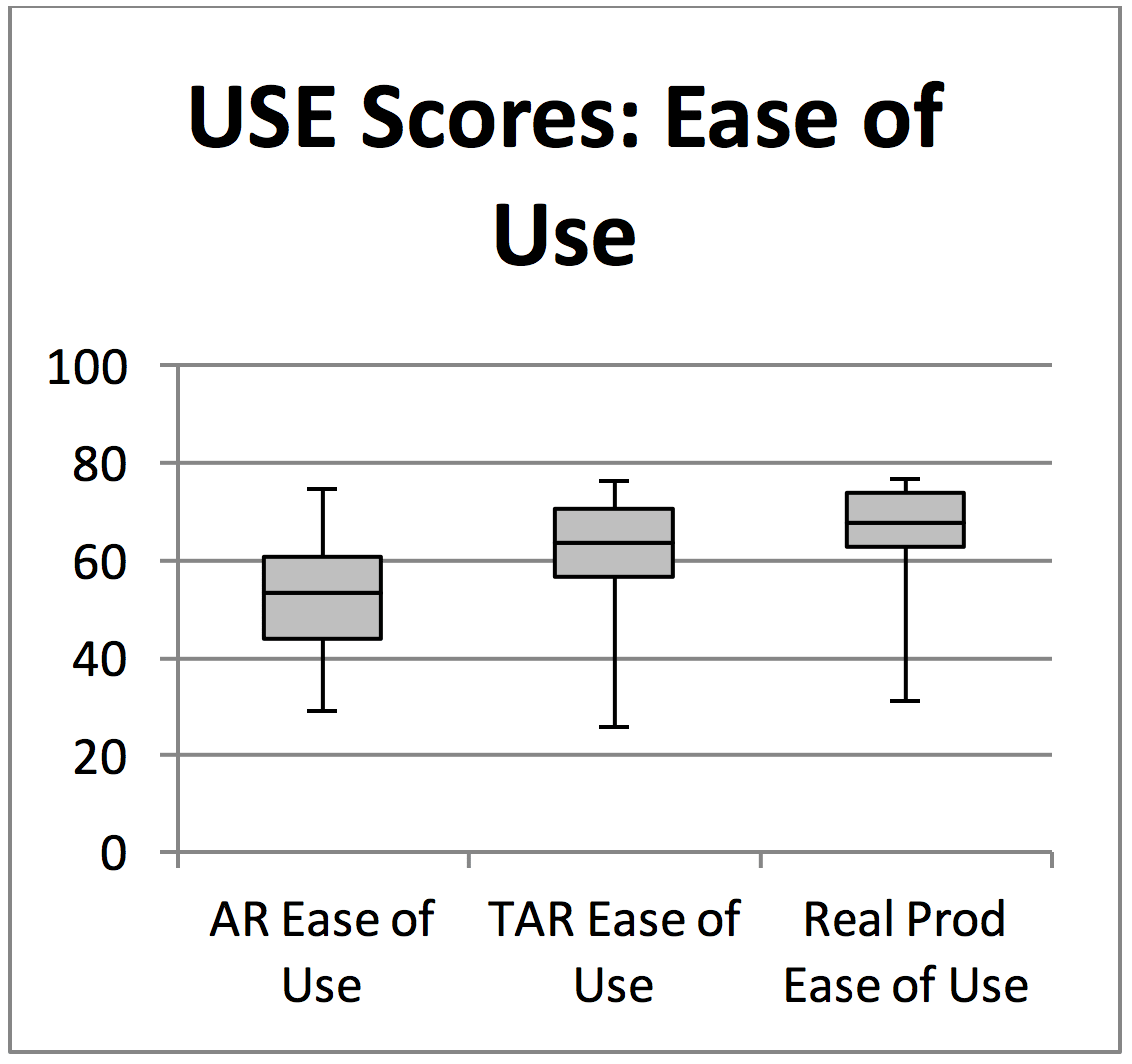

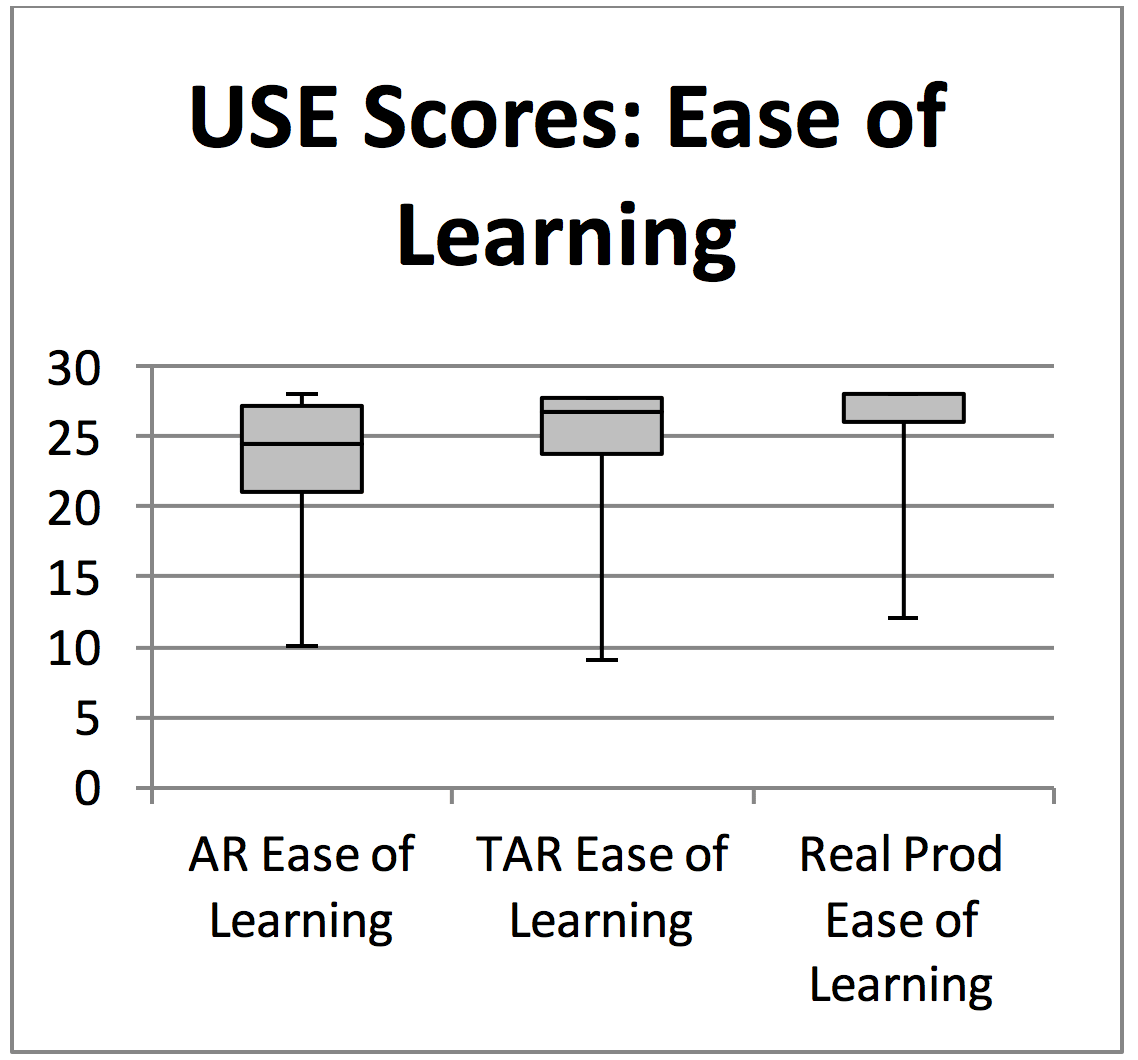

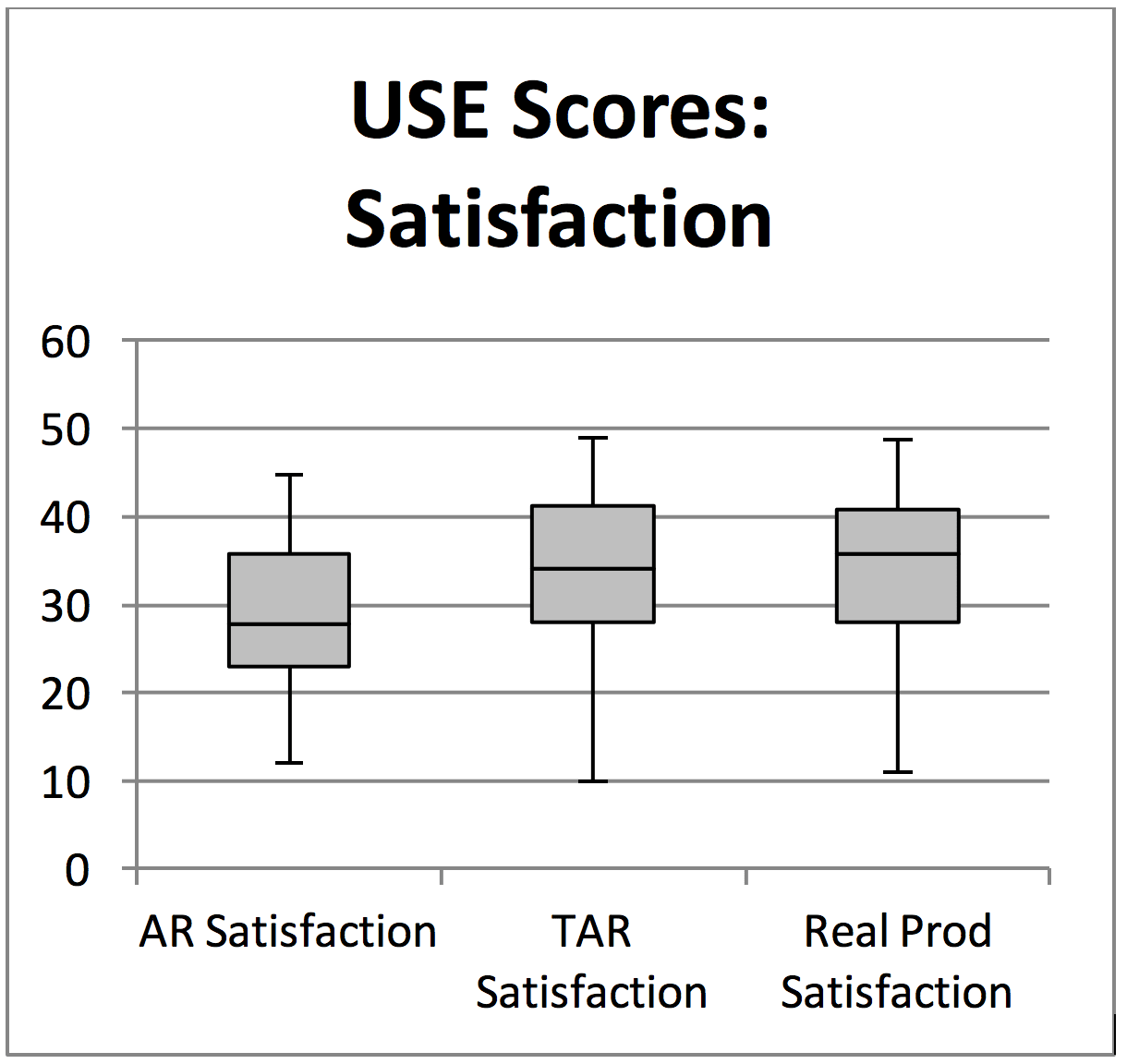

Tables 2–5 show the descriptive statistics for each group of scores. Figures 5–8 show standard box plots of the data. A Kruskal-Wallis test was conducted to evaluate differences between the product representations related to each of the four individual aspects of usability covered by the USE questionnaire. All tests were corrected for tied ranks.

Table 2. Descriptive Statistics for Usefulness Scores for the Three Product Representations

| Product | Mean | Median | Std. deviation | Std. error | 95% CI for mean: lower bound | 95% CI for mean: upper bound |
| --- | --- | --- | --- | --- | --- | --- |
| Actual product | 42.76 | 43 | 8.56 | 1.02 | 40.71 | 44.80 |
| TAR representation | 40.31 | 41 | 8.87 | 1.06 | 38.20 | 42.49 |
| AR representation | 35.06 | 36 | 7.79 | 0.93 | 33.20 | 36.92 |

Figure 5. Box plot of USE questionnaire scores related to Usefulness.

Table 3. Descriptive Statistics for Ease of Use Scores for the Three Product Representations

| Product | Mean | Median | Std. deviation | Std. error | 95% CI for mean: lower bound | 95% CI for mean: upper bound |
| --- | --- | --- | --- | --- | --- | --- |
| Actual product | 66.1 | 68 | 9.98 | 1.19 | 63.72 | 68.48 |
| TAR representation | 61.41 | 64 | 11.22 | 1.34 | 58.80 | 64.15 |
| AR representation | 53.86 | 54 | 11.53 | 1.38 | 51.11 | 56.61 |

Figure 6. Box plot of USE questionnaire scores related to Ease of Use.

Table 4. Descriptive Statistics for Ease of Learning Scores for the Three Product Representations

| Product | Mean | Median | Std. deviation | Std. error | 95% CI for mean: lower bound | 95% CI for mean: upper bound |
| --- | --- | --- | --- | --- | --- | --- |
| Actual product | 26.13 | 28 | 3.29 | 0.39 | 25.34 | 26.91 |
| TAR representation | 25.17 | 27 | 3.93 | 0.47 | 24.24 | 26.11 |
| AR representation | 23.71 | 24.5 | 4.22 | 0.50 | 22.71 | 24.72 |

Figure 7. Box plot of USE questionnaire scores related to Ease of Learning.

Table 5. Descriptive Statistics for Satisfaction Scores for the Three Product Representations

| Product | Mean | Median | Std. deviation | Std. error | 95% CI for mean: lower bound | 95% CI for mean: upper bound |
| --- | --- | --- | --- | --- | --- | --- |
| Actual product | 34.8 | 36 | 8.87 | 1.06 | 32.69 | 36.91 |
| TAR representation | 33.94 | 34 | 9.22 | 1.10 | 31.74 | 36.14 |
| AR representation | 29.04 | 28 | 8.57 | 1.02 | 26.99 | 31.09 |

Figure 8. Box plot of USE questionnaire scores related to Satisfaction.

For the Usefulness scores, the Kruskal-Wallis test was significant, H-stat (df = 2, N = 70) = 26.06, p < .001. Follow-up comparisons using Mann-Whitney tests were conducted to evaluate pairwise differences among the three groups, controlling for Type I error across tests by using the Bonferroni approach. The results of these tests showed that the AR representation (M = 35.06, SD = 7.79) was rated significantly lower (p < .001) than the actual product (M = 42.76, SD = 8.56) for the USE score related to Usefulness. No significant differences were found between the Usefulness scores for the TAR representation and the actual product.

For the Ease of Use scores, the Kruskal-Wallis test was significant, H-stat (df = 2, N = 70) = 41.25, p < .001. Follow-up comparisons using Mann-Whitney tests were conducted to evaluate pairwise differences among the three groups, controlling for Type I error across tests by using the Bonferroni approach. The results of these tests showed that the AR representation (M = 53.86, SD = 11.53) was rated significantly lower (p < .001) than the actual product (M = 66.1, SD = 9.98). The tests also showed that the TAR representation (M = 61.41, SD = 11.22) was rated significantly lower (p = .003) than the actual product (M = 66.1, SD = 9.98) for the USE score related to Ease of Use.

For the Ease of Learning scores, the Kruskal-Wallis test was significant, H-stat (df = 2, N = 70) = 17.18, p < .001. Follow-up comparisons using Mann-Whitney tests were conducted to evaluate pairwise differences among the three groups, controlling for Type I error across tests by using the Bonferroni approach. The results of these tests showed that the AR representation (M = 23.71, SD = 4.22) was rated significantly lower (p < .001) than the actual product (M = 26.13, SD = 3.29) for the USE score related to Ease of Learning. No significant differences were found between the Ease of Learning scores for the TAR representation and the actual product.

For the Satisfaction scores, the Kruskal-Wallis test was significant, H-stat (df = 2, N = 70) = 16.69, p = .0002. Follow-up comparisons using Mann-Whitney tests were conducted to evaluate pairwise differences among the three groups, controlling for Type I error across tests by using the Bonferroni approach. The results of these tests showed that the AR representation (M = 29.04, SD = 8.57) was rated significantly lower (p < .001) than the actual product (M = 34.8, SD = 8.87) for the USE score related to Satisfaction. No significant differences were found between the Satisfaction scores for the TAR representation and the actual product.

As with the overall usability scores, these results support the hypothesis that usability evaluations of a TAR representation of a product will be similar to evaluations of the product itself with respect to Usefulness, Ease of Learning, and Satisfaction. The TAR evaluations were not similar to those of the actual product with respect to Ease of Use. The results did not support the hypothesis that evaluations of an AR representation would also be similar to those of the actual product with respect to any of the aspects of usability measured by the USE questionnaire.
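The Bonferroni approach used for the follow-up comparisons can be illustrated with a short calculation. This sketch assumes a family-wise alpha of .05 and that the reported pairwise p-values are unadjusted; neither is stated in the article, so both are labeled assumptions here, and the function name `bonferroni_alpha` is hypothetical.

```python
def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison significance threshold under the Bonferroni correction."""
    return family_alpha / n_comparisons

# Three pairwise comparisons: AR vs. actual, TAR vs. actual, AR vs. TAR.
per_test_alpha = bonferroni_alpha(0.05, 3)  # 0.05 is an assumed family-wise alpha

# Reported p-value for TAR vs. the actual product on Ease of Use.
tar_ease_of_use_p = 0.003
tar_ease_of_use_significant = tar_ease_of_use_p < per_test_alpha
```

Under these assumptions the per-comparison threshold is roughly .0167, so the Ease of Use difference between TAR and the actual product (p = .003) remains significant after correction.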

Discussion

This study set out to investigate whether tangible augmented reality is a tool that can be used to reliably assess the usability of a design. The results show that overall a TAR representation of a product can generate evaluations that are statistically similar to those of the product itself with relation to most of the main measures of usability.

The data in this study showed that usability evaluations of an AR representation were significantly different in all respects and did not match those of the actual product. In this case, it indicates that AR by itself is not a good tool for evaluating the usability of a design concept. This is in contrast to a similar study that compared usability evaluations of a digital music player with an AR representation of itself (Choi & Mittal, 2015). That study found that the AR evaluations were not significantly different from those of the product. One of the key differences is that the product in the earlier study did not feature any physical controls: It was controlled via touchscreen only. Because of this, the method of interaction with the AR representation was exactly the same as with the product.

The physical nature of the heater’s controls in this study meant that the AR interaction was different. Functionally, it was the same; however, the tactile nature of the control did not translate well to AR. The ability to turn a physical control on the TAR representation was the only functional difference from the AR representation. This can indicate that AR may not be a suitable or reliable tool to perform usability evaluation of a product with physical controls. If a product concept includes physical interface elements, then a TAR representation may be required to accurately represent it.

Generally, the TAR representation of the product produced statistically similar evaluations compared to the actual, functional product. In the case tested here, TAR could be a suitable tool for accurately assessing most aspects of the usability of the product concept. While evaluations between TAR and the functional product were similar for Usefulness, Ease of Learning, and Satisfaction, significant differences were found with respect to the Ease of Use component of usability. Further study would be required to understand why TAR was different only in this respect. This difference may be partly due to the novelty of the technology. While applications of AR-based technologies are becoming increasingly common, these technologies are still relatively new. The use of a tablet to render the augmented view may also be a factor. It is not as natural as regular vision because the product can only be viewed through the tablet screen and with a narrower than normal field of view. Mixed reality glasses (such as Google Glass and others) might help resolve some of this; however, these have other limitations (low resolution, restricted field of view, tracking challenges, etc.) as well as being a more novel technology than a tablet screen. Finally, TAR may simply not be a suitable substitute for actual product interaction when measuring Ease of Use.

Conclusions and Further Study

This study contains some important limitations. All of the participants were young college students. Due to the environment, most of the students were design, architecture, or engineering students who are generally familiar and comfortable with technology such as AR. Their experience in these fields also means that many have training in evaluating designs through more abstract media (such as CAD, technical drawings, sketches, etc.). The same results may not be found from non-college participants of the same age who lack one or more of these attributes. Additionally, the same results may not be found among participants of other ages and demographics.

In this study, the similarity of the usability evaluations appeared to gradually degrade as the level of realism was reduced. The augmented 3D view of the AR and TAR models was the same, but the TAR model had the additional element of tactile feedback not present with the AR representation. Though an earlier study showed that evaluations similar to an actual product could be obtained from AR, the same kind of performance was not seen in this study. The difference may be related to the product interface. The heater evaluated in this study required manipulating physical knobs to operate, whereas the product in the earlier study was fully touchscreen based (so the basic way of interaction was not different).

Even though in this study the TAR representation was a close match to the actual product in most respects, it was not a good indicator of Ease of Use. Further investigation would be useful to better understand which product attributes might make an accurate evaluation more likely when represented in AR vs. TAR. Though the evaluations of the AR representation and the actual product were significantly different in this study, it would be interesting to investigate the practical impact in the design development process. The difference does not necessarily mean that AR is not a useful design tool because there is value in the refinement that comes from the process of iteration and testing. This is especially true if it is more accurate than alternative tools, makes the process easier, and leads to more or better ideas explored within the same allotted time. However, if the primary goal is to accurately assess how a product is likely to perform when fully realized, TAR may be a more appropriate tool than AR.

Tips for Usability Practitioners

Based on the findings of this study, practitioners may find the following points useful to consider when utilizing TAR and AR in product evaluations or the design of further research:

- Tangible augmented reality may provide a valid method for accurately assessing the usability of a product design with physical interface elements with respect to Usefulness, Ease of Learning, and Satisfaction.

- Tangible augmented reality may not be suitable for evaluating Ease of Use.

- Augmented reality may not be suitable for assessing the usability of designs that include physical interface elements.

- Further investigation may be required to understand if tangible augmented reality can provide an accurate product design usability assessment tool with non-college student user groups.

References

Billinghurst, M., Kato, H., & Myojin, S. (2009). Advanced interaction techniques for augmented reality applications. Paper presented at the Virtual and Mixed Reality, Berlin Heidelberg.

Bruseberg, A., & McDonagh-Philip, D. (2002). Focus groups to support the industrial/product designer: A review based on current literature and designers’ feedback. Applied Ergonomics, 33(1), 27–38.

Choi, Y. M., & Mittal, S. (2015). Exploring benefits of using augmented reality for usability testing. Paper presented at the 20th International Conference on Engineering Design (ICED 15) Vol 4: Design for X, Design to X, Milan, Italy, 27–30.

Creusen, M. E. (2011). Research opportunities related to consumer response to product design. Journal of Product Innovation Management, 28, 405–408.

Estrada, O. E. S., Urbina, M. G., & Ocaña, R. (2018). Augmented reality for evaluating low environmental impact 3D concepts in industrial design. In G. R., & M. H. Hernández (Eds.) Augmented reality for enhanced learning environments (pp. 222–245). Hershey, PA, USA: IGI Global. doi:10.4018/978-1-5225-5243-7

Faust, F. G., Catecati, T., de Souza Sierra, I., Araujo, F. S., Ramírez, A. R. G., Nickel, E. M., & Ferreira, M. G. G. (2018). Mixed prototypes for the evaluation of usability and user experience: simulating an interactive electronic device. Virtual Reality, 23(2), 1–15.

International Organization for Standardization. (1998). Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs)-Part 11, Guidance on Usability (ISO Standard No. 9241-11).

Lee, G. A., & Kim, G. J. (2009). Immersive authoring of tangible augmented reality content: A user study. Journal of Visual Languages & Computing, 20(2), 61–79.

Lee, G. A., Nelles, C., Billinghurst, M., & Kim, G. J. (2004). Immersive authoring of tangible augmented reality applications. Paper presented at the 3rd IEEE/ACM international Symposium on Mixed and Augmented Reality.

Lewis, J. R. (2006). Usability testing. In G. Salvendy (Ed.), Handbook of Human Factors and Ergonomics (pp. 1275–1316). Hoboken, NJ, USA: John Wiley & Sons, Inc.

Lund, A. M. (2001). Measuring usability with the USE questionnaire. Usability and the User Experience, 8(2), 8.

McGrath, M. E., Anthony, M. T., & Shapiro, A. R. (1992). Product development: Success through product and cycle-time excellence (1st Ed.). Oxford: Butterworth-Heinemann.

Moultrie, J., Clarkson, J., & Probert, D. (2007). Development of a design audit tool for SMEs. Journal of Product Innovation Management, 24(4), 335–368.

Santos, P., Graf, H., Fleisch, T., & Stork, A. (2003). 3D interactive augmented reality in early stages of product design. Paper presented at the HCI International 2003, 10th Conference on Human-Computer Interaction.

Shen, Y., Ong, S. K., & Nee, A. Y. C. (2010). Augmented reality for collaborative product design and development. Design Studies, 31(2), 118–145.

Srinivasan, V., Lovejoy, W. S., & Beach, D. (1997). Sharing user experiences in the product innovation process: Participatory design needs participatory communication. Creativity and Innovation Management, 16, 35–45.

Starner, T., Mann, S., Rhodes, B., Levine, J., Healey, J., Kirsch, D., . . . Pentland, A. (1997). Augmented reality through wearable computing. Presence: Teleoperators and Virtual Environments, 6(4), 386–398.

[:]