Abstract

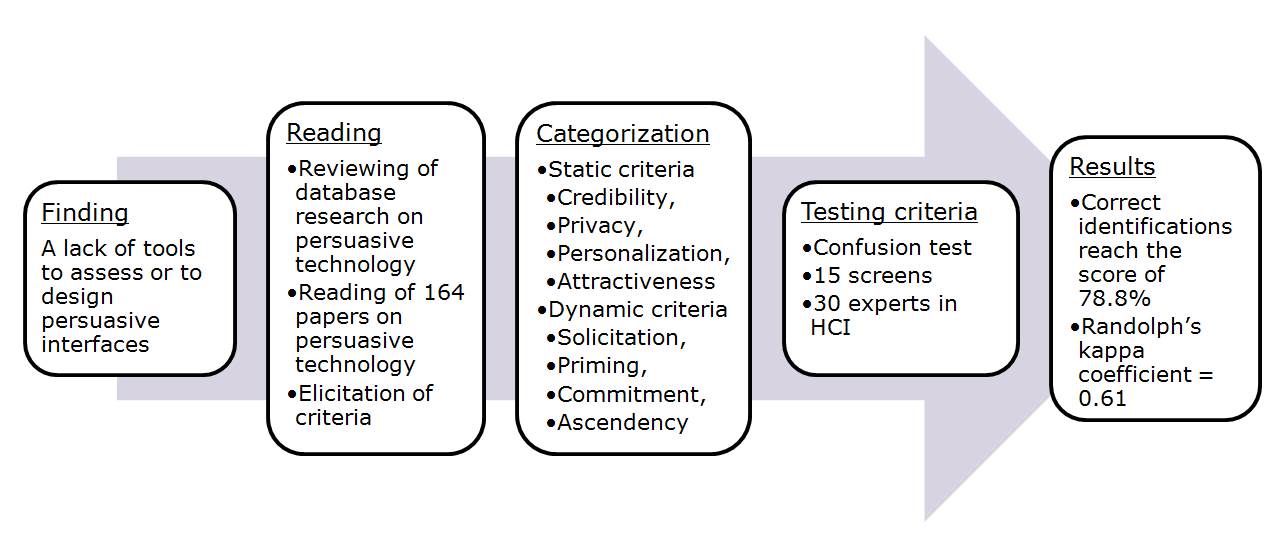

This study presents an attempt to organize and validate a set of guidelines to assess the persuasive characteristics of interfaces (web, software, etc.). Persuasive aspects of interfaces are a fast-growing topic of interest; numerous website and application designers have understood the importance of using interfaces to persuade and even to change users’ attitudes and behaviors. However, research has so far been limited by a lack of tools to measure interface persuasion. This paper provides a criteria-based approach to identify and assess the persuasive power of interfaces.

We selected 164 publications in the field of persuasive technology and used them to define eight criteria: credibility, privacy, personalization, attractiveness, solicitation, priming, commitment, and ascendency. Thirty experts in human-computer interaction (HCI) were asked to use the guidelines to identify and classify the persuasive elements of 15 interfaces. The average percentage of correct identification was 78.8%, with a Randolph’s kappa coefficient of 0.61. These results confirm that the criteria for interactive persuasion, in their current form, can be considered valid, reliable, and usable. This paper also discusses some inherent limitations of this method and identifies potential refinements of some definitions. Finally, it demonstrates how a checklist can be used to inspect the persuasiveness of interfaces.
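Randolph’s kappa is a free-marginal multirater agreement statistic. It compares the observed agreement P_o (the proportion of agreeing rater pairs, averaged over items) with the chance agreement 1/k that holds when each of k categories is equally likely: κ = (P_o − 1/k) / (1 − 1/k). The sketch below implements that textbook formula as an illustration only; the function name, the input layout, and the example counts are hypothetical, and this section does not state which categories entered the authors’ calculation.

```python
from typing import Sequence


def randolph_kappa(counts: Sequence[Sequence[int]], k: int) -> float:
    """Randolph's free-marginal multirater kappa (illustrative sketch).

    counts[i][j] is the number of raters who assigned item i to category j.
    Every item must be rated by the same number of raters n (n >= 2).
    k is the number of categories available to the raters.
    """
    if not counts:
        raise ValueError("need at least one item")
    n = sum(counts[0])
    if n < 2 or any(sum(row) != n for row in counts):
        raise ValueError("each item needs the same number of raters (>= 2)")
    N = len(counts)
    # Observed agreement: proportion of agreeing rater pairs, averaged over items.
    p_obs = sum(c * (c - 1) for row in counts for c in row) / (N * n * (n - 1))
    # Chance agreement with free marginals: every category equally likely.
    p_exp = 1.0 / k
    return (p_obs - p_exp) / (1.0 - p_exp)


# Hypothetical example: three raters, two items, eight criteria.
example = [
    [3, 0, 0, 0, 0, 0, 0, 0],  # all three raters chose the same criterion
    [2, 1, 0, 0, 0, 0, 0, 0],  # two raters agree, one differs
]
print(round(randolph_kappa(example, k=8), 3))  # 0.619
```

Because the expected agreement is fixed at 1/k rather than estimated from the raters’ marginal distributions, this statistic suits designs where raters are not forced to use each category a set number of times.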

Tips for Usability Practitioners

Our approach is to define guidelines to measure and assess the persuasive dimensions of user experience. The following tips are recommended for those who are studying persuasive systems:

- In addition to accessibility, usability, and emotional criteria, HCI experts can provide efficient and effective guidance for gauging how persuasive interfaces could be. UX designers should use a set of persuasive guidelines in interface evaluation and design to improve the psychosocial aspects of interfaces.

- HCI experts identified the set of eight criteria well. This result encourages the use of the credibility, privacy, personalization, attractiveness, solicitation, priming, commitment, and ascendency criteria to understand how persuasive elements work in interfaces. Persuasive elements in interface design can be an important factor when developing or evaluating a study that relies on that technology.

- In addition to criteria such as clarity, consistency, brevity, and adaptability, persuasive guidelines should also be included in the training of UX designers.

Introduction

Usability can be assessed through widely known inspection methods such as walk-throughs and heuristic evaluations. Historically, while these methods were being developed, a set of usability assessment metrics and criteria was defined, which resulted in the international standard for HCI and usability in 1998 (i.e., ISO 9241). These criteria were developed to facilitate the use of technical systems; to make interaction more useful, efficient, simple, pleasant, etc.; and to make life easier for users.

Our research seeks to define and validate a checklist of criteria to assess the persuasive dimensions of user interfaces. Ergonomics and HCI have produced numerous recommendations to measure the ergonomic quality of products and services, but knowledge and criteria to design and evaluate persuasive interactions are lacking.

The spread of new technologies and interactive media provides new opportunities to influence users. Technology now offers unprecedented means to change users’ attitudes or behaviors because it is an everyday presence for a large portion of the world’s population and can be accessed easily and quickly. This accessibility has created a new type of relationship between users and technology, in which the user’s experience can be rooted in a more emotional context. Through persuasion, technological media can take users by the hand and lead them to perform a target behavior.

Several definitions of persuasive interfaces can be found in the literature. For the purpose of this study, we define a persuasive interface as a combination of static qualities and steps that guide a user through a process to change his or her attitude or behavior. Many industries and companies that have an online presence, such as social networks, e-commerce, e-health, or e-learning companies, invest in and produce persuasive interfaces. For instance, Consolvo, Everitt, Smith, and Landay (2006) and Adams et al. (2009) observed a significant increase in healthcare companies’ efforts to encourage safe behavior and healthier lifestyles. While this effort provides a way to foster better health habits, which has the potential to cut healthcare costs, it also raises ethical issues, such as the manipulation of health behaviors.

With a large variety of websites, software, mobile phones, and other applications, experts need access to effective tools to evaluate the persuasiveness of an online interface. These tools can be found in the multidisciplinary field of ergonomics. Ergonomics has a tradition of producing standards, checklists, guidelines, norms, and recommendations that can be used to evaluate the persuasiveness of interfaces.

To present and validate a checklist to assess persuasion in interfaces, we start by describing the theoretical basis of our set of guidelines. Then, we classify persuasive criteria into eight categories. Our set of criteria aims to define and gather all aspects of persuasion present in interfaces. We then describe an experiment in which 30 usability experts performed an evaluation task on persuasive human-computer interfaces. The validation of the checklist is based on a criteria categorization method: the more often the experts assign the same criteria, the better the quality of the guidelines. From this point of view, our main question is whether the validation shows that participants can tell the eight criteria apart and whether they find that the eight criteria capture important aspects of the persuasiveness of interfaces. Finally, we analyze and discuss the results of the experiment and provide some recommendations for the use of this set of guidelines.

Conceptual Framework

The following sections discuss the theoretical background for the concept and design of this study, the design of the set of guidelines used for this study, and the selection of guideline criteria for the checklist.

Theoretical Background

Fogg opened the way for the field of persuasive technology, for which he coined the term “captology,” an abbreviation for “computers as persuasive technologies” (2003, p. 5). The concept of persuasion can cover a range of meanings, but Fogg defines it as “an attempt to shape, reinforce, or change behaviors, feelings, or thoughts about an issue, object, or action.” Fogg presents persuasive technology as a tool to achieve a change in the user’s behavior or attitude, as a medium that creates an experience between the user and the product, and as a social actor. The social actor role concerns the technology’s use of strategies for social influence and compliance. In short, persuasive technology is a medium to influence and persuade people through HCI. Indeed, technology becomes persuasive when people attribute to it qualities and attributes that may increase its social influence in order to change users’ behavior. This notion lies at the crossroads of ergonomics, social psychology, organizational management, and (obviously) user interface design.

We chose to use and adapt some traditional persuasion techniques from studies in fields such as advertising, politics, and health (Chaiken, Liberman, & Eagly, 1989; Greenwald & Banaji, 1995; McGuire, 1969; Petty & Cacioppo, 1981). We adapted these techniques to match the capabilities and specificities of modern technological systems (Oinas-Kukkonen, 2010).

The last decade has seen growing interest in technological persuasion, as shown by Tørning and Oinas-Kukkonen (2009), who investigated persuasive systems produced between 2006 and 2008. The large body of research on this topic attests to the importance of the field. One of the recommendations for future research is to create methods for better measuring the success of persuasive systems (Lockton, Harrison, & Stanton, 2010; Oinas-Kukkonen & Harjumaa, 2009; Tørning & Oinas-Kukkonen, 2009). Just as Oinas-Kukkonen and Harjumaa (2009) defined four persuasive dimensions (social support, system credibility support, primary task support, and dialogue support), we consider that designers and ergonomists need a set of guidelines to evaluate persuasion.

In this paper, we present a checklist that serves as a set of guidelines. The goal of our checklist is to develop ergonomic practices and to integrate persuasive evaluations into software design processes using concepts such as reduction, credibility, legitimacy, tunneling, tailoring, personalization, self-monitoring, simulation, rehearsal, monitoring, social learning, open persuasion, praise, rewards, reminders, suggestion, similarity, attractiveness, liking, social role, and addictive characteristics, all of which originate from different research (Fogg, 2003; Némery, Brangier, & Kopp, 2009; Oinas-Kukkonen & Harjumaa, 2008).

Designing the Set of Guidelines

A lack of means to assess or design persuasive interfaces is apparent (Tørning & Oinas-Kukkonen, 2009). Some of the criteria gathered in earlier studies (Oinas-Kukkonen & Harjumaa, 2009) were not validated with experimental methods demonstrating their relevance and efficiency.

Another criticism that can be leveled against existing criteria is that they do not consider time as a structural element of social influence. Persuasion is always linked to a temporal process that includes a beginning, followed by modifications leading to behavioral change. This process always unfolds over several hours, sometimes several months. A set of criteria should therefore take time into account in order to capture forms of lasting influence, such as addiction or dependence. Temporality emerged as a necessary condition for changing user behavior. In order to produce lasting changes in attitude and behavior, it was observed that accepting an effortless initial request predisposes a user to accept a subsequent request that asks for a greater effort; this social influence is always temporally structured. For example, an interface displays an invitation to play a short video game. If you choose to play the game, you are gradually presented with seemingly innocuous requests designed to covertly persuade you, eventually, to give personal information or to purchase options.

Our checklist is based on an analysis of 164 documents linked to technological persuasion (for details of the collected documents, see Némery, 2012). These documents helped us identify the main components of persuasion mediated by tools, organized those components, and offered a classification framework for our guidelines. We developed a total of eight persuasive criteria and 23 sub-criteria. The set of guidelines differentiates static aspects from dynamic aspects. The assumption is that user interfaces need a set of necessary qualities and properties. These elements are conducive to the establishment of a commitment loop that will lead a subject from behavior A (or attitude A) to behavior B (or attitude B).

New devices offer more and more interactive and innovative interfaces that use new user interface features and technologies. The potential persuasive impact of these dynamic interfaces is much greater than a static information display where no interaction is possible. Given a few enabling conditions, users are able to dynamically interact with a device in a short time, which facilitates the desired change in users’ behaviors or attitudes towards a product, service, or informational interface. Interface properties are necessary but not sufficient to change behaviors and attitudes. Change is also a social construct that could be planned while taking into account user specificities.

To better understand the process of persuasion, consider a human-to-human relationship. For instance, you would not lend money to strangers or to someone you do not see as trustworthy. Along the same lines, people will not buy something on a website that lacks critical elements conveying quality and security during the interaction. If websites are not deemed trustworthy, people generally do not buy products from them.

A computer is a social actor (Fogg, 2003). Through repeated interactions, a user becomes more and more emotionally involved, and computers can feel like living entities with human-like qualities. Through formal and polite language in the interface (advice, rewards, motivating messages, or praise), computers can serve as a social support entity or as a teammate (Nass, Fogg, & Moon, 1996). Depending on the context, a computer can become a coach, a cooperative partner, or a kind of symbiotic assistant.

Selection of Criteria and Construction of the Checklist

Research to develop the list of criteria (Figure 1) is based on a review of 164 articles on captology and persuasive design. We also searched databases (PsycINFO, Springer-Kluwer, and ScienceDirect) and conference proceedings (CHI, HCII, Persuasive conference) to develop the criteria. These resources provide a rich collection of examples of persuasive technology. The publication rate in this area has increased over the past ten years.

Figure 1. Construction of the checklist.

To select the publications for our research, we used the following indicators of relevance:

- We selected papers that included research that dealt with a user’s change of attitude or behavior through technology. We avoided articles that did not gather sufficient empirical evidence in their methodology.

- We selected papers that focused on persuasive interfaces, i.e., computer-human interactions that combine qualities and planned interactions to guide a user through a process that changes his or her attitude or behavior. Papers that did not focus directly on these topics or were deemed less relevant to our area of study were excluded.

Two ergonomists selected or rejected articles based on reading the titles and abstracts of those articles. For an article to be accepted for the resource list, at least one of the two ergonomists needed to select the article. In total, 164 articles were selected. The general conclusions that emerged from this set of publications were the following:

- The publication rate in this area has clearly increased over the past ten years. From 1994 to 2005, persuasive technology was just emerging (around five papers each year presented recommendations on persuasive interfaces); after 2005, the number of publications increased until 2009, when 42 papers on persuasive technologies were published.

- The selected publications were interdisciplinary and drew on related disciplines such as sociology, psychology, computer science, marketing, and communication science.

- The selected publications cover the following fields (percentages give the share of selected papers in each field): Health (28.22%), Education (23.31%), Service (18.40%), Business (9.82%), Ecology (9.20%), Marketing (6.75%), and Leisure (4.29%).

- The following technologies are the main technology resources written about in the selected publications: websites (31.90%), games (26.38%), software (16.56%), mobile apps (9.20%), ambient systems (4.29%), and others (11.67%).

Previous research defined the initial versions of the checklist (Némery, Brangier, & Kopp, 2009, 2010, 2011). After iterative classification and organization, we designed the checklist to include eight elements (Figure 2). After this checklist was finalized, a second checklist was drawn up with the following requirements:

- Simplification: Each element must be understood by ergonomists, HCI specialists, computer engineers, and interaction designers.

- Consistency: All criteria extracted from the 164 articles must be reflected in the checklist (Figure 2). This consistency gives relevance to the checklist because all items must be present in one form or another.

- Exclusivity: Only one criterion per persuasive item must be applied. Ambiguity between criteria should be avoided as much as possible. A persuasive item must be relevant to the criteria, and vice versa.

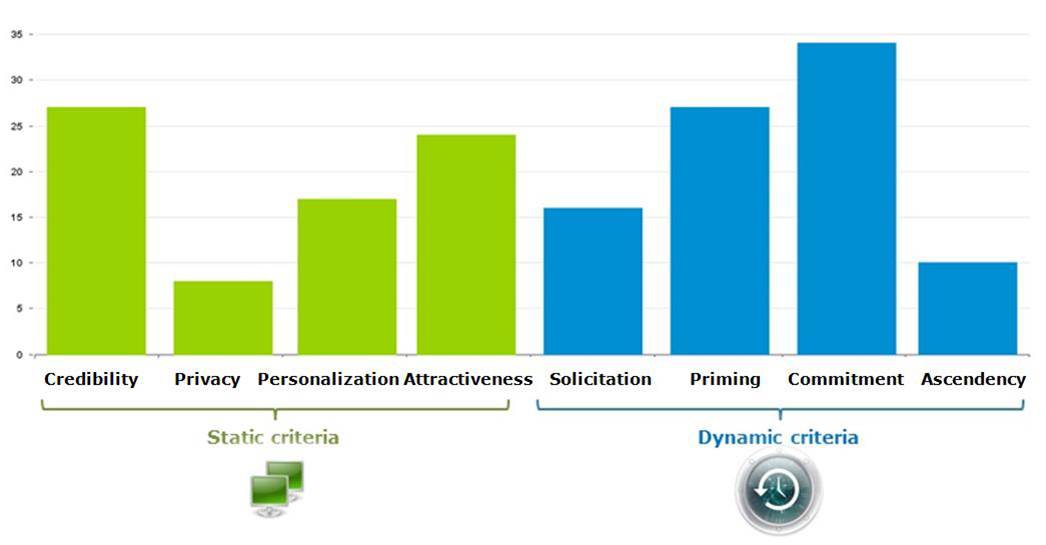

Figure 2. Distribution of papers (n = 164, published from 1994 to 2011) dealing with the eight criteria developed in the checklist for persuasive interfaces.

A Set of Guidelines for Persuasive Technology

A classification of the 164 papers shows that 53% of papers dealt with the dynamic aspect of the computer’s influence and 47% were more related to static dimensions of persuasive interfaces (Figure 2).

Static Criteria

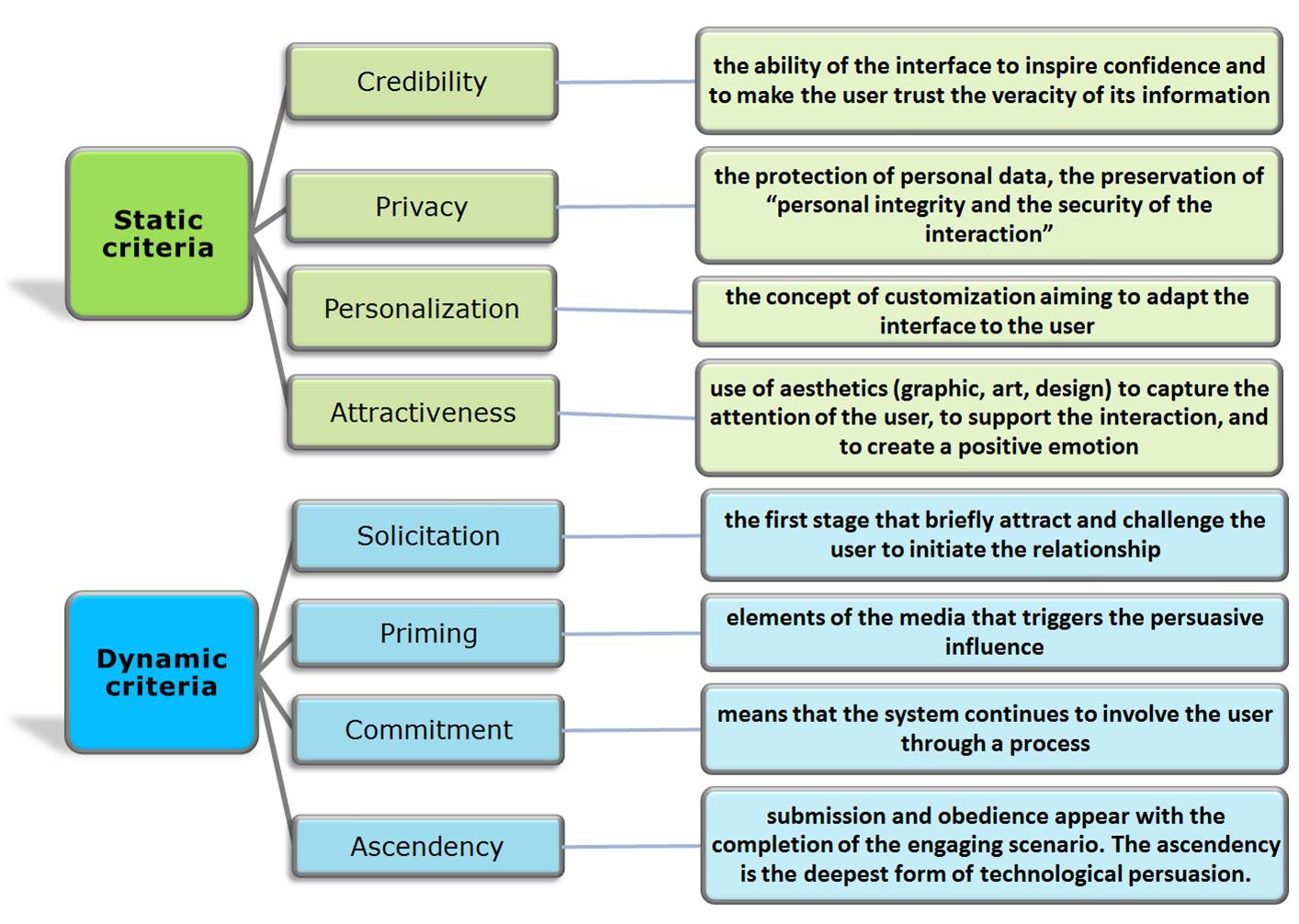

Static criteria are all the surface elements that are necessary to help launch a dynamic process of user engagement. That is to say, interfaces need certain prerequisites to foster acceptance of an engaging process. These criteria are based on the content of the influence. Largely unaffected by time or by the sequence of interactions, they are designed to assess the content of the interaction. Static criteria contrast with dynamic criteria, which incorporate strong temporal components. We identified four static criteria that foster user acceptance and confidence: credibility, privacy, personalization, and attractiveness (Figure 3).

Figure 3. General architecture of the eight interactive persuasive criteria.

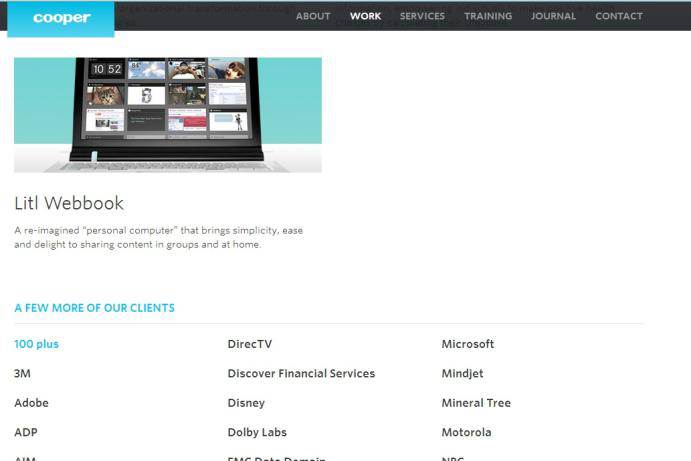

Credibility is the first general criterion (see Figure 4 for an example). It is the ability of the interface to inspire confidence and to make the user trust the veracity of its information. Credibility is also based on a company’s or an industry’s reputation. All the people or institutions that provide the components that make up an industry’s or company’s systems (e.g., the different types of technical systems) must be recognized as being honest, competent, fair, and objective. Credibility comprises three components: trustworthiness, expertise, and legitimacy. Much has been written about the credibility and trust of web media (e.g., Bart, Venkatesh, Fareena, & Urban, 2005; Bergeron & Rajaobelina, 2009; Huang, 2009).

Figure 4. Example of credibility criterion: This website provides a list of famous clients to inspire trustworthiness and legitimacy in their field (source: www.cooper.com, screenshot captured on 03/15/2013).

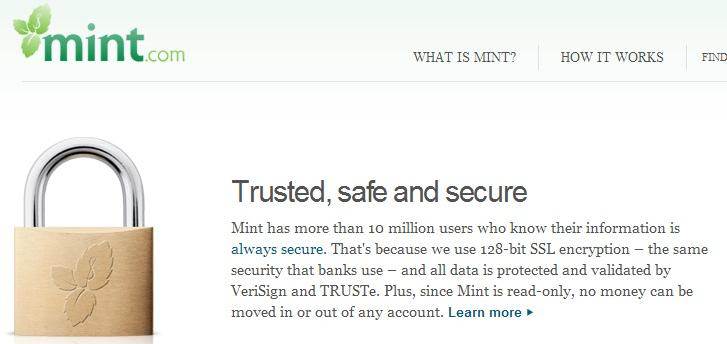

Privacy means the protection of personal data, the preservation of personal integrity, and the security of the interaction (Figure 5). It covers all aspects of privacy involved in interactions. This criterion also aims to ensure protection against loss, destruction, or inadvertent disclosure of data (Liu, Marchewka, Lu, & Yu, 2005). Privacy concerns the expression of perceived safety, the perception of rights, and the protection of the confidentiality of information. For instance, organizations such as Alcoholics Anonymous and Weight Watchers are successful because they are very rigorous about privacy (Khaled, Barr, Noble, & Biddle, 2006).

Figure 5. Example of privacy criterion: This financial website helps users manage their budget. Mint provides a lot of reassuring information about security and associated entities (source: www.mint.com, screenshot captured on 03/15/2013).

Personalization refers to the ability of a user to adapt the interface to his or her preferences (Peppers & Rogers, 1998; Figure 6). Personalization includes all actions aimed at customizing a greeting, a promotion, or a context to get closer to the user. Personalization may include individualization and group membership. It requires a prior analysis of the activity. Its power depends on the quality of the data from the user and on the degree of personalization; a high degree implies that the interface gradually learns the characteristics of the user and modifies its interaction with the user towards a higher level of personalization. Personalization offers greater potential returns than standardized and impersonal products (Pine, 1993). As customers, users want services tailored to their needs, and e-commerce has produced many strategies to customize interactions. Old business practices promoted a homogeneous market with standardized products; this model is no longer adequate and does not fit each user’s needs. The proliferation of interface products pushes designers to mass-produce customizable interfaces. Such interfaces provide personalized information and synthesized solutions that match the choices of respective communities, not necessarily of individuals. All these elements combined lead to a customized user experience.

Figure 6. Example of personalization criterion: The user is called by his or her first name throughout the interaction, which creates a sense of familiarity (source: www.facebook.com, screenshot captured on 03/15/2013).

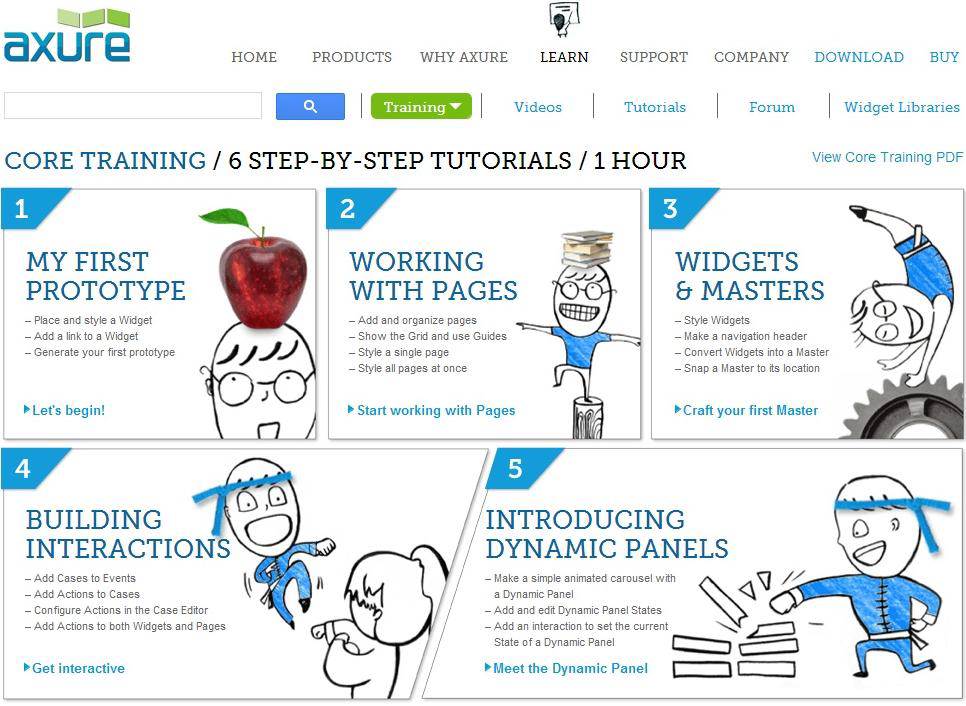

Attractiveness is the use of aesthetics (graphics, art, design) to capture the attention of the user, to support the interaction, and to create a positive emotion (Figure 7). Animations, colors, menus, drawings, and videos are designed to catch and maintain the interest of the user. The presentation of these persuasive interactive elements must consider the cognitive and perceptual characteristics of the user. Persuasive design could motivate users towards specific actions (Redström, 2006). Attractiveness has three components: emotional appeal, call to action, and tunneling design. Tunneling design is a kind of attractive interaction that tries to lead the user through a predefined succession of actions to produce a desired result.

Figure 7. Example of attractiveness criterion: The drawings are used to create a visual identity and unique experience that could leverage positive emotions (source: www.axure.com, screenshot captured on 03/15/2013).

Dynamic Criteria

To encourage users to change their behavior, it is important to take the temporal aspect into account. Design is an engaging and interactive activity that requires segmenting and planning persuasive processes. Here, dynamics means a way to involve the user in an interactive process that progressively engages him or her with the interface. There are four dynamic criteria: solicitation, priming, commitment, and ascendency.

Solicitation refers to the first stage that aims to briefly attract and challenge the user to initiate a relationship (Figure 8). We can distinguish three elements for this “invitation” stage: allusion, suggestion, and enticement. The invitation sets up the beginning of the relationship and the dialogue between the user and electronic media. When this approach is used often by electronic media, the probability of initiating the desired action by the user increases. The interface attempts, by words, graphics, or any form of dialogue, to suggest a behavior followed by action. Solicitation represents the ability to induce an action by the user with minimal influence from the interface. Here, the interface suggests ideas or actions that the user could initiate or could have (Dhamija, Tygar, & Hearst, 2006).

Figure 8. Example of solicitation criterion: Several advertisements are displayed with a short message that tries to catch a user’s attention with an attractive or free offer (source: www.awwwards.com, screenshot captured on 03/15/2013).

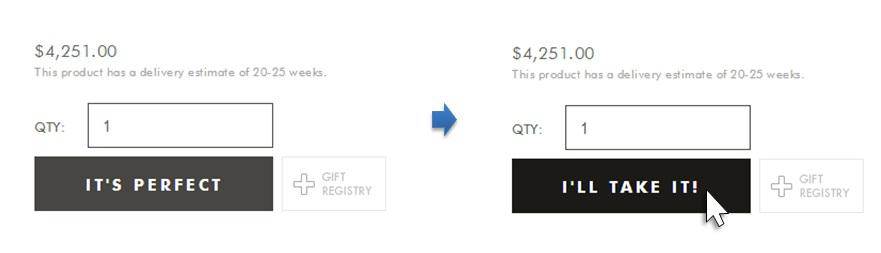

Priming refers to elements of the media that trigger the persuasive influence (Figure 9). These elements may take the form of guiding the user’s first steps. Following requests from the interface, the user’s attention is captured, and users are encouraged to perform the first engaging action. With priming, the first action is carried out without coercion or awareness. Users are caught in a process that gradually draws them in (Yang, 2005).

Figure 9. Example of priming criterion: The first action expected in an e-commerce website is to make the user add an item to the cart. In this interface, the message “Add to cart” on the button is replaced by “It’s perfect” as an invitation and incitement to click. In addition, the message is reinforced on mouseover, when it is replaced by “I’ll take it” to strengthen the intention (source: http://www.thefutureperfect.com, screenshot captured on 03/15/2013).

Commitment means that the interface continues to involve the user through a process (Figure 10). This process uses action sequences or predetermined interactions to gradually involve the user. The successful completion of these queries and actions is followed by praise, encouragement, reward, and continued interaction, which induces more intensive and regular behavior from the user (Weiksner, Fogg, & Liu, 2008).

Figure 10. Example of commitment criterion: To use this app, the person must follow a wizard that asks for more personal information through a tunneling process. The idea is not to lose the user’s attention and also to ask the user to interest friends in the app by (a) making the user’s activity public and (b) asking the user to invite friends (source: iPhone App: Path, screenshot captured on 03/15/2013).

Ascendency indicates that the engaging scenario has been completed (Figure 11). When users enter the ascendency stage, they have fully accepted the logic and goals of the electronic media. The “grip” or hold that the technology has on a user’s attention is the deepest form of technological persuasion. At this stage, user involvement is complete, and the user runs the risk of addiction or at least of over-consumption of the electronic media. In these interactions, the user behaves in ways that generate pleasure and may relieve negative feelings.

At the interface level, the influence is apparent through various elements: irrepressible interaction, tension release, and consequences beyond the interaction with the media. Immersion is often mentioned in the field of video gaming. Because these games were first devoted to flight simulation, they were realistic and caught the user’s full attention. In the end, the player becomes a part of the environment due to the repetition and regularity of interaction (Baranowski, Buday, Thomson, & Baranowski, 2008). Emotional involvement in the story of the game can create a risk of dependence and identification. This concerns not only desktop applications but also GPS devices and smartphones. Users who develop an emotional attachment to their device cannot imagine living without it (Nass, Fogg, & Moon, 1996).

Figure 11. Example of ascendency criterion: The game challenges users throughout their progression and offers them different types of rewards like public badges to show success or new items to accomplish more exploits. Statistics are also displayed to encourage daily use. At this stage, there is a risk of addiction or overconsumption (source: Video game—Starcraft 2, screenshot captured on 3/15/2013).

To sum up, this framework presents eight persuasion criteria divided into two dimensions (static and dynamic aspects of the interface) that seek to improve the evaluation of persuasive elements in interfaces. These criteria complement the traditional ergonomic criteria that focus on the user’s ability to be effective, efficient, and satisfied; persuasion is generally outside the scope of such inspections. The criteria can be summarized as follows:

- Credibility: Give users enough information to enable them to identify the source of information as reliable, relevant, expert, and trustworthy.

- Privacy: Do not persuade users to do something that publicly exposes their private life and which they would not consent to do.

- Personalization: Consider a user as a person and consequently develop a personal relationship.

- Attractiveness: Capture the attention of a user to elicit emotion and induce favorable action.

- Solicitation: Initiate the relationship through initial temptations.

- Priming: Help users to do what the system wants them to do.

- Commitment: Involve and engage users so that they adhere to the objectives of the system.

- Ascendency: Provide incentives to get a user totally involved with the system.
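As a sketch of how the checklist might be carried into an inspection tool, the eight criteria and their static/dynamic split can be encoded as a small data structure. The class and function names are hypothetical, the guideline strings paraphrase the one-sentence summaries above, and sub-criteria are omitted because not all of them are listed in this section.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Dimension(Enum):
    STATIC = "static"
    DYNAMIC = "dynamic"


@dataclass(frozen=True)
class Criterion:
    name: str
    dimension: Dimension
    guideline: str


# The eight criteria, ordered as in the framework (static first, then dynamic).
CRITERIA = (
    Criterion("Credibility", Dimension.STATIC, "Can users identify the source as reliable, relevant, expert, and trustworthy?"),
    Criterion("Privacy", Dimension.STATIC, "Does the interface avoid pushing users to expose private matters without consent?"),
    Criterion("Personalization", Dimension.STATIC, "Is the user treated as a person in a personal relationship?"),
    Criterion("Attractiveness", Dimension.STATIC, "Does the design capture attention, elicit emotion, and induce favorable action?"),
    Criterion("Solicitation", Dimension.DYNAMIC, "Is the relationship initiated through initial temptations?"),
    Criterion("Priming", Dimension.DYNAMIC, "Is the user helped to do what the system wants?"),
    Criterion("Commitment", Dimension.DYNAMIC, "Is the user involved and engaged with the system's objectives?"),
    Criterion("Ascendency", Dimension.DYNAMIC, "Do incentives get the user totally involved with the system?"),
)


def checklist(dimension: Dimension) -> list[str]:
    """Return unchecked inspection prompts for one dimension, in framework order."""
    return [f"[ ] {c.name}: {c.guideline}" for c in CRITERIA if c.dimension is dimension]


if __name__ == "__main__":
    for dim in Dimension:
        print(dim.value.upper())
        for line in checklist(dim):
            print("  " + line)
```

Keeping the dimension on each criterion, rather than in two separate lists, lets an inspector filter or report by static and dynamic aspects without duplicating the criteria.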

Interface designers and ergonomic analysts must be careful to ensure that their interfaces do not contain intrusive aspects that would affect the ethical handling of certain domestic or professional interactions, particularly as these factors affect the attitudes of users. Because they apply knowledge about how humans function at the psychosocial level, our criteria are based on a normative approach to what should or should not be persuasive. It is now necessary to validate these guidelines to demonstrate their relevance and consistency.

Method to Validate the Set of Guidelines for Persuasive Interfaces

The following sections discuss the internal validation process, the study participants, the study materials and equipment, the tasks and procedures used in the study, and the data collection and analysis methods.

Internal Validation

On the basis of the compilation of recommendations presented in the previous section, we compiled a list of persuasive criteria to serve as a support for the classification and identification of persuasive elements related to interfaces (examples shown in Figures 3 through 12).

Following Bastien (1996), Bastien and Scapin (1992), Bach (2004), and Bach and Scapin (2003, 2010), who validated ergonomic criteria on the pragmatic aspects of interfaces, we used the same method to validate the criteria of persuasion. The validation method consists of a test in which HCI experts (the study participants) use the proposed criteria to identify persuasive elements in interfaces. If the HCI experts match each element with the right criterion, the checklist is relevant. Conversely, if experts misidentify elements and/or criteria, the checklist is irrelevant. Correct identification is calculated for each screen interface, and participant scores are broken down into static vs. dynamic criteria. A correct identification (or good assignment) occurs when the criterion chosen by the participant matches the one identified by the specialist. A high percentage of correct identifications reflects the quality of the set of guidelines; a high percentage of mismatches indicates that the set is not efficient.
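A minimal sketch of the correct-identification score, assuming each participant response is recorded as an (expected, assigned) pair of criterion names, where the expected criterion comes from the specialists’ reference classification. The `DIMENSION` table and the function name are hypothetical; rates are broken down by the dimension of the expected criterion, mirroring the static vs. dynamic breakdown described above.

```python
from collections import defaultdict

# Static/dynamic split of the eight criteria, as described in the text.
DIMENSION = {
    "credibility": "static", "privacy": "static",
    "personalization": "static", "attractiveness": "static",
    "solicitation": "dynamic", "priming": "dynamic",
    "commitment": "dynamic", "ascendency": "dynamic",
}


def correct_identification(pairs):
    """Return (overall % correct, {dimension: % correct}).

    pairs: iterable of (expected, assigned) criterion names; assigned may be
    None when the participant could not classify the element, which counts
    as not correct.
    """
    hits = total = 0
    by_dim = defaultdict(lambda: [0, 0])  # dimension -> [hits, total]
    for expected, assigned in pairs:
        hit = assigned == expected
        dim = DIMENSION[expected]
        hits += hit
        total += 1
        by_dim[dim][0] += hit
        by_dim[dim][1] += 1
    if total == 0:
        raise ValueError("no responses to score")
    per_dim = {dim: 100.0 * h / n for dim, (h, n) in by_dim.items()}
    return 100.0 * hits / total, per_dim


# Hypothetical responses from one participant.
responses = [
    ("credibility", "credibility"),
    ("privacy", "credibility"),  # misassigned
    ("priming", "priming"),
    ("commitment", "commitment"),
]
overall, per_dim = correct_identification(responses)
print(overall, per_dim)  # 75.0 {'static': 50.0, 'dynamic': 100.0}
```

Scoring against the expected criterion (not the assigned one) means an element the participant misplaced still counts against the dimension it truly belongs to.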

The assignment task can also reveal possible confusion between two or more criteria. An overall score is calculated by comparing participants’ responses with the expected responses. This score can include the proportion of correct identifications or the proportion of incorrect identifications, the latter signaling that one criterion may be confused with another. In other words, the test participants’ task is to find and then classify elements. Several outcomes can occur during this process. Participants can find an element that was defined as persuasive (hit, correct) or fail to find it (unidentified). They can also find an element that they consider persuasive but that is not persuasive according to the expected criteria (false). Once an element has been identified, correctly or not, as persuasive, it can be classified correctly, incorrectly (false), or not at all when the participant does not know how to classify it (unidentified). Consequently, our hypothesis is that the higher the number of correct identifications (hits), the more conclusive the internal validation. Conversely, the higher the number of misallocations (false), the less effective the internal validation and the lower the quality of the guidelines.
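The hit, false, and unidentified outcomes described above can be tallied per participant with a short routine. This is a sketch under assumptions: the reference key maps hypothetical element identifiers to the expected criterion, and a response of `None` stands for an element the participant flagged but could not classify. Misclassifications are also counted as (expected, assigned) pairs, which exposes confusions between criteria.

```python
from collections import Counter


def score_participant(key, responses):
    """Score one participant against the specialists' reference key.

    key: {element_id: expected criterion}
    responses: {element_id: criterion chosen by the participant, or None
                if the element was flagged but left unclassified}
    Returns (outcome counts, Counter of (expected, assigned) confusions).
    """
    outcomes = Counter(hit=0, false=0, unidentified=0)
    confusions = Counter()
    for element, expected in key.items():
        assigned = responses.get(element)
        if assigned is None:
            # Either not found at all, or found but not classified.
            outcomes["unidentified"] += 1
        elif assigned == expected:
            outcomes["hit"] += 1
        else:
            outcomes["false"] += 1
            confusions[(expected, assigned)] += 1
    # Elements flagged as persuasive that are absent from the reference key.
    outcomes["false"] += sum(1 for element in responses if element not in key)
    return outcomes, confusions


# Hypothetical key and answers.
key = {"e1": "credibility", "e2": "priming", "e3": "privacy"}
answers = {"e1": "credibility", "e2": "commitment", "e4": "attractiveness"}
outcomes, confusions = score_participant(key, answers)
print(dict(outcomes))    # {'hit': 1, 'false': 2, 'unidentified': 1}
print(dict(confusions))  # {('priming', 'commitment'): 1}
```

Summing the `confusions` counters over all participants would give a criterion-by-criterion confusion table.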

Participants

Thirty people participated in the study, all experts in the HCI field: ergonomists, engineers, professors, researchers, project managers, R&D staff, and interaction designers. All were experienced in user interface evaluation and/or design, with an average of 13 years of experience (SD=7.9). Twelve of the experts came from academia and eighteen were employed in industry, but all had a similar background in evaluation and in computer use. Ten women and twenty men took part in the study.

Materials and Equipment

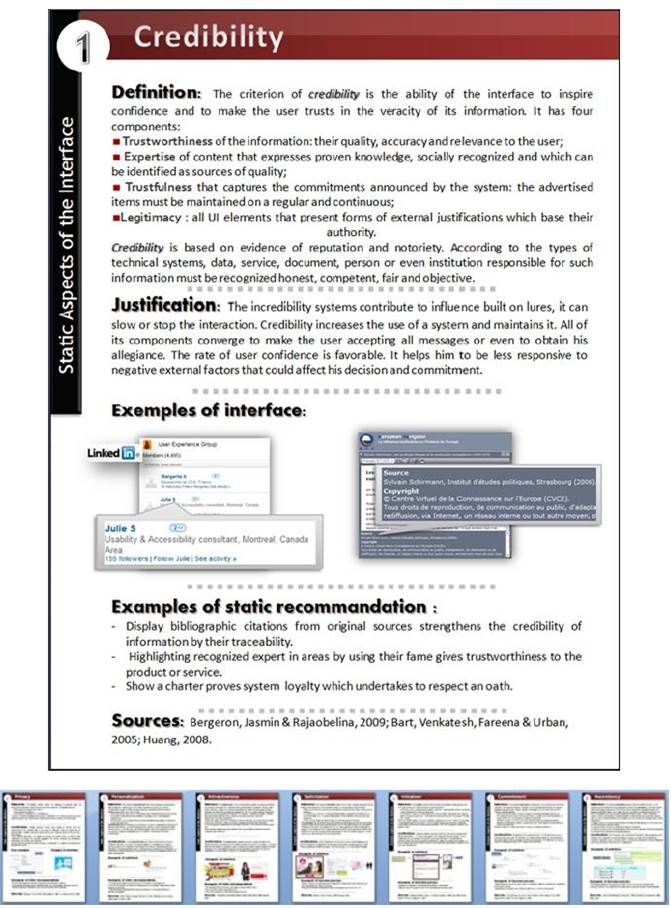

The eight criteria were summarized as a set of eight paper cards (Figure 12). Each criterion card provided a detailed definition, a rationale based on references, and examples drawn from various devices and fields.

Two experts (the authors) examined and selected the interfaces used in this study. They found 84 persuasive items, 43 static and 41 dynamic: 12 forms of credibility, 10 forms of privacy, 13 forms of personalization, 8 forms of attractiveness, 22 forms of solicitation, 8 forms of priming, 8 forms of commitment, and 3 forms of ascendency. On average, there were 10.5 elements per criterion (SD=5.5) and 5.6 elements per interface (SD=2.7). These 15 screen interfaces were retained because both authors fully agreed on the criteria assigned to their elements; screen interfaces on which they disagreed were discarded.
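The descriptive statistics above follow directly from the per-criterion counts, as the short Python sketch below shows. We assume the static criteria are credibility, privacy, personalization, and attractiveness, which is consistent with the reported 43 static items. The sample standard deviation comes out at about 5.55, in line with the reported SD of 5.5 given rounding; the per-interface SD cannot be recomputed here because the text does not list per-interface counts.

```python
from statistics import mean, stdev

# Number of persuasive items per criterion across the 15 interfaces (from the text).
items = {
    "credibility": 12, "privacy": 10, "personalization": 13, "attractiveness": 8,
    "solicitation": 22, "priming": 8, "commitment": 8, "ascendency": 3,
}
# Assumed static/dynamic split (consistent with the 43/41 totals).
STATIC = ("credibility", "privacy", "personalization", "attractiveness")
N_INTERFACES = 15

total = sum(items.values())                      # all persuasive items
static_total = sum(items[c] for c in STATIC)     # items under static criteria
dynamic_total = total - static_total             # items under dynamic criteria
per_criterion_mean = mean(items.values())        # average items per criterion
per_criterion_sd = stdev(items.values())         # sample standard deviation
per_interface_mean = total / N_INTERFACES        # average items per interface

print(total, static_total, dynamic_total, per_criterion_mean,
      round(per_criterion_sd, 2), round(per_interface_mean, 1))
```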

Figure 12. Credibility card and the seven other cards (small). Download the French version of the cards used during the experiment at http://www.univ-metz.fr/ufr/sha/2lp-etic/Criteres_Persuasion_Interactive-2.pdf.

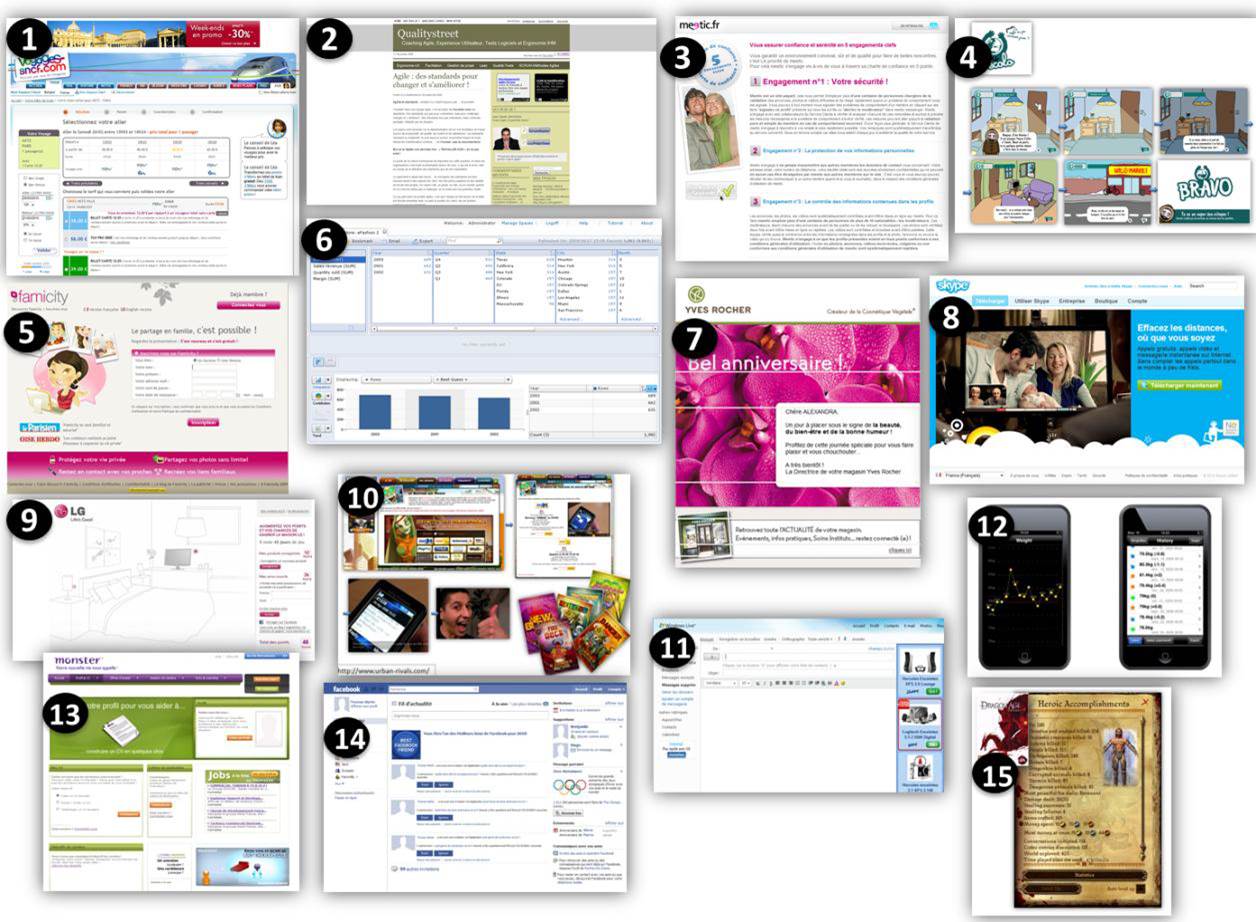

We chose fifteen interfaces that contained persuasive elements for the study (Table 1 and Figure 13): twelve websites and three software applications. Various areas were represented: business (3), video games (3), social networks (3), e-commerce (2), transport (1), communication tools (1), health (1), and ecology (1). Six interfaces were unfamiliar to the participants, and nine were familiar.

Table 1. General Characteristics of the Tested Interfaces and Percentage of Correct Criterion Identification, Screen by Screen

Figure 13. Set of 15 interfaces.

Task and Procedure

The experimental task was an identification task: participants identified persuasive criteria from examples, and we measured classification accuracy. Each participant was tested individually. The instructions began with an introduction to the experimental procedure and information about the gathered data and the criteria. We asked participants to comment comprehensively on their perceptions of the criteria. At the beginning of the session, we asked participants about their background, years of experience, and expertise in heuristic evaluation.

In the first phase (familiarization), we asked participants to read the criteria documents carefully (Figure 12) and to pay particular attention to the screenshot examples, which they would see only during this phase. The objective was for participants to become familiar with the criteria and their content. The first instruction was, “I will present you with a list of eight criteria to assess interactive persuasion. I will ask you to read this list in full; it differentiates static criteria and dynamic criteria of interfaces. I would like you to look carefully at the examples because I won’t display them again during the identification phase. Do not hesitate to ask me any questions.”

In the second phase (identification test), participants had to work from the criteria and their definitions alone: we gave them another version of the checklist without the screenshot examples. Bastien (1996) emphasized this point of the method, arguing that participants should rely on the criteria, which are conceptual, rather than on the examples, which are anecdotal. The presence of the screenshot examples could have influenced criteria identification during this phase, and the goal was not to test recognition ability but to check participants’ understanding of the criteria. In addition, with only two or three examples per card, it was not possible to cover every possible case of a single criterion. The 30 experts reviewed the 15 screen interfaces; in each interface, they had to identify persuasive elements and match them with the set of guidelines. They could freely consult the criteria documents.

In a third phase (confirmation), the interfaces were shown again so that participants could confirm or revise their initial assignment of criteria to items.

At the end of the session, the researchers conducted a semi-structured interview to obtain qualitative data on the structure and content of the document containing the experimental criteria. The questions were open-ended to encourage participants to speak freely and to criticize the document.

To sum up, participants evaluated 15 interfaces with the checklist for persuasive interfaces. For each screen interface, they had to find persuasive elements and classify them according to the set of guidelines. The interfaces were presented in a random order for each participant, and no task had a time limit.
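A random presentation order per participant can be made reproducible by deriving each participant's order from a fixed seed. The paper does not say how the randomization was carried out, so this is only a sketch: the function `presentation_order` and the base seed value are hypothetical choices.

```python
import random
from typing import List


def presentation_order(participant_id: int, n_interfaces: int = 15,
                       base_seed: int = 2012) -> List[int]:
    """Return a reproducible random order of interface numbers 1..n_interfaces.

    Each participant gets an independent shuffle derived from base_seed and
    participant_id (both hypothetical), so rerunning the script gives the
    same order for the same participant.
    """
    rng = random.Random(f"{base_seed}-{participant_id}")
    order = list(range(1, n_interfaces + 1))
    rng.shuffle(order)
    return order


for pid in range(1, 4):
    print(pid, presentation_order(pid))
```

Seeding a private `random.Random` instance per participant, rather than the global generator, keeps each order independent of how many other participants were processed before.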

Data Collection and Analysis

Two types of data were collected. First, we recorded how much time participants spent reading the criteria. Second, we counted the number of persuasive criteria correctly identified by the participants, separately for dynamic and static criteria. We also calculated a correct-identification score per interface and per participant. An identification was considered correct if the answer matched the expected criterion (the theoretical answer) and false otherwise. The quality of each criterion’s definition was thus evaluated through this assignment task. Proportions of correct identifications and of false identifications (i.e., confusions) were analyzed as discriminative indicators of the relevance of the checklist.
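The per-interface, per-participant, and static vs. dynamic scores can be computed from a flat list of responses. The sketch below is one possible implementation under stated assumptions: each record is one reference item as seen by one participant, an unidentified item is encoded as `None` and counts as not correct, and elements that participants flagged outside the reference set are left out of the denominator. The record layout, the function name, and the toy data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Iterable, Optional, Tuple

# Assumed static criteria; the other four criteria are treated as dynamic.
STATIC = {"credibility", "privacy", "personalization", "attractiveness"}

# (participant, interface, expected criterion, assigned criterion or None)
Record = Tuple[Hashable, Hashable, str, Optional[str]]


def identification_rates(records: Iterable[Record]) -> Dict[str, Dict[Hashable, float]]:
    """Proportion of correct identifications per interface, per participant,
    and per dimension (static vs. dynamic)."""
    tallies = {key: defaultdict(lambda: [0, 0])
               for key in ("interface", "participant", "dimension")}
    for participant, interface, expected, assigned in records:
        hit = int(assigned == expected)
        dimension = "static" if expected in STATIC else "dynamic"
        for key, group in (("interface", interface), ("participant", participant),
                           ("dimension", dimension)):
            tallies[key][group][0] += hit  # correct identifications
            tallies[key][group][1] += 1    # reference items seen
    return {key: {group: hits / seen for group, (hits, seen) in groups.items()}
            for key, groups in tallies.items()}


# Hypothetical toy data, not taken from the study.
toy_records = [
    ("P1", 7, "solicitation", "solicitation"),
    ("P1", 7, "credibility", "privacy"),
    ("P2", 7, "solicitation", "solicitation"),
    ("P2", 15, "commitment", None),
]
print(identification_rates(toy_records))
```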

Results and Analysis

The following sections present the overall scores, details about the criteria matrix and Kappa score, and the quality of the criteria definitions.

Overall Scores

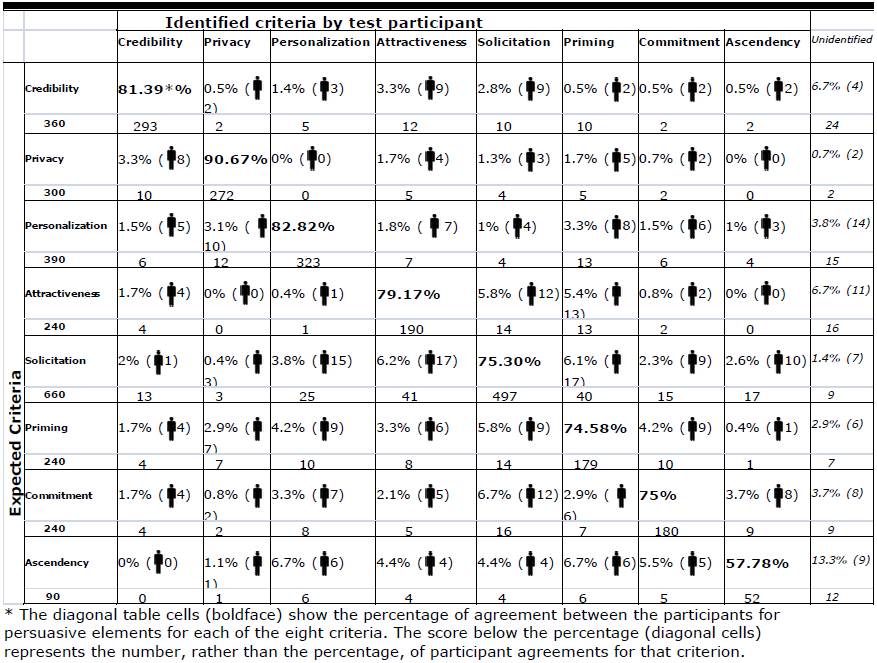

The average time to read the criteria document was 11 minutes 45 seconds (SD=4.2). The average session time was 1 hour 6 minutes (SD=13). The overall scores of the criteria confusion matrix show a high level of agreement among participants in identifying persuasive elements in interfaces. Table 2 shows each criterion’s proportion of correct identification.

Correct identifications reached 78.8% on average. This result is encouraging, considering the short time participants took to learn new criteria and that most of them lacked prior knowledge of persuasive technology theory. Taking 50% as the acceptance threshold for the quality of a definition (Bach, 2004), all the criteria reached a good to very good level of acceptance. Overall, static criteria were better understood than dynamic criteria. One possible explanation is that static elements are easier to identify on a static user interface than dynamic elements are. Ascendency, however, appears to be difficult to assess. This can be explained by the fact that ascendency does not depend solely on the interface itself, but also on behavioral patterns that the user acquires over time and that cannot readily be observed in the context of this experiment.

We calculated a percentage of correct identification per screenshot (see Table 1). Most screenshots performed very well (between 65% and 85%); however, two screenshots stand out: screens 7 and 15.

- Screen 7 is a birthday promotional offer received by email. Users readily associate advertising with persuasive attempts, and this awareness is especially strong when the delivery medium is email. The score of 92.5% shows that participants had little difficulty recognizing this format as persuasive.

- Screen 15 is the dashboard screen of a role-playing video game. Its statistics show that the user played a very large number of hours in just a few days. Participants found its persuasive elements less easily (52.22%), which suggests that they do not readily link video games with persuasion: this medium is associated with fun and a degree of freedom, even though the risk of dependency is well known.

Criteria Matrix: Agreement and Disagreement of Persuasive Elements

Table 2 reports the criteria matrix for the classification of interface elements. Each row of the matrix corresponds to an expected criterion category. For example, the 15 interfaces include a total of 12 items relating to credibility. These 12 items were presented to 30 experts, giving a total of 360 items that should be assigned to the criterion of credibility. In reality, participants identified 293 items correctly, committed 53 errors (2 x privacy, 5 x personalization, 12 x attractiveness, 10 x solicitation, 10 x priming, 2 x commitment, 2 x ascendency), and left 14 items unassigned because they could not categorize them. The same row also shows how many participants made mistakes (the statistics are placed after the icon; for instance, two participants classified two items expected under credibility as privacy). The following is a detailed description of the data in the table:

- The first column gives the expected answers, that is, the number of items per criterion as determined by the researchers. The bottom numbers in this column represent the maximum possible number of correct identifications.

- The diagonal cells (boldface) show, for each of the eight criteria, the percentage of agreement between participants’ assignments and the expected criterion.

- The score below the percentage (diagonal cells) represents the number, rather than the percentage, of participant agreements for that criterion.

- All other percentages in the table represent disagreement, that is, items that participants assigned to a criterion other than the expected one.

- The numbers in brackets presented in conjunction with the icons represent the number of participants who contributed to the disagreement score.

- The right column (Unidentified) contains items that participants could not assign to any criterion.
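The credibility example shows how a row of the matrix is built: the expected total is the number of reference items times the number of experts, and it splits into correct identifications, misassignments to other criteria, and unassigned items. The helper below (a hypothetical name) reproduces that arithmetic from the figures given in the text; the resulting 81.4% is our own computation from 293/360, not a value quoted from Table 2.

```python
def row_summary(n_items: int, n_raters: int, correct: int, unidentified: int):
    """Summarize one row of the criteria matrix.

    Returns the maximum possible number of correct identifications, the number
    of misassignments to other criteria, and the percentage of correct identification.
    """
    expected = n_items * n_raters                  # e.g. 12 credibility items x 30 experts
    misassigned = expected - correct - unidentified
    return expected, misassigned, 100 * correct / expected


# Credibility row, using the figures reported in the text.
expected, misassigned, pct = row_summary(n_items=12, n_raters=30, correct=293, unidentified=14)
print(expected, misassigned, f"{pct:.1f}%")        # 360 53 81.4%
```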

The criteria matrix reveals that most criteria (except ascendency; see The Quality of Criteria Definition section) are found in many interfaces. The 30 experts correctly identified the elements of persuasion and categorized them accurately. Inter-rater judgments were fairly homogeneous: the same features of persuasive interfaces were named consistently.

Table 2. Criteria Matrix: Agreement and Disagreement of Persuasive Elements

Kappa Score

Kappa is a statistical coefficient for assessing the reliability of agreement among a fixed number of raters who assign categorical ratings to a number of items. We used Randolph’s (2005) kappa rather than Cohen’s kappa, which is designed for two raters, or Fleiss’ kappa, because it gives a more accurate measure of the agreement among our 30 experts. Specifically, we used the free-marginal version of kappa, which is recommended when raters are not restricted in the number of cases they can assign to each category, as is often the case in agreement studies.

Randolph’s kappa is considered a good statistical measure of inter-rater agreement; in our study it reached 0.61. According to Landis and Koch (1977), kappa values from 0.61 to 0.80 indicate substantial agreement, so a score above 0.60 can be taken as a sign of strong agreement for a classification system.

Finally, note that the kappa value measures agreement among the 30 participants, independently of the two experts who determined which interfaces contained which persuasive elements.
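Randolph’s free-marginal kappa compares the observed agreement P_o, the proportion of agreeing rater pairs averaged over items, with a chance agreement of 1/k, where k is the number of categories: kappa = (P_o - 1/k) / (1 - 1/k). The Python sketch below implements that formula together with the Landis and Koch (1977) labels. The function names and the toy data are ours; the paper does not say how its 0.61 was computed, in particular whether the Unidentified column counted as a category, which changes k.

```python
from typing import Optional, Sequence


def free_marginal_kappa(table: Sequence[Sequence[int]], k: Optional[int] = None) -> float:
    """Randolph's (2005) free-marginal multirater kappa.

    table: one row per rated item; row[j] is the number of raters who placed the
           item in category j. Every row must sum to the same rater count n.
    k:     number of categories available to raters (defaults to the row length).
    """
    k = k if k is not None else len(table[0])
    n = sum(table[0])
    if n < 2 or k < 2 or any(sum(row) != n for row in table):
        raise ValueError("need k >= 2 categories and the same n >= 2 raters per item")
    n_items = len(table)
    # Observed agreement: share of agreeing rater pairs, averaged over items.
    p_observed = sum(c * (c - 1) for row in table for c in row) / (n_items * n * (n - 1))
    # Chance agreement when raters may use every category freely.
    p_chance = 1 / k
    return (p_observed - p_chance) / (1 - p_chance)


def landis_koch_label(kappa: float) -> str:
    """Benchmark labels from Landis and Koch (1977)."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
                         (0.80, "substantial"), (1.00, "almost perfect")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical toy data: 2 items, 3 raters, 3 categories.
toy_kappa = free_marginal_kappa([[3, 0, 0], [2, 1, 0]])
print(round(toy_kappa, 2), landis_koch_label(0.61))  # 0.5 substantial
```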

The Quality of Criteria Definition

Table 2 also ranks the criteria by their average frequency of misidentification and their proportion of correct identification. From this point of view, seven criteria can be considered well defined: privacy, personalization, credibility, attractiveness, solicitation, priming, and commitment. Only one criterion requires a better definition: ascendency. These results are particularly interesting given the short time participants spent in the learning phase (M = 11 min 45 s; SD=4.2) and their unfamiliarity with social psychology.

All criteria showed some slight confusion, but misclassification rates were low, usually below 5%. Some rates were slightly higher, and for the ascendency criterion the rate reached 13%. Ascendency is the criterion that caused the most confusion and needs the most adjustment. Its score reflects both confusion with other criteria (personalization, 6.7%; priming, 6.7%; commitment, 5.5%) and a lack of attribution: experts often preferred to leave forms of ascendency unidentified (13.3%). Other criteria also show some confusion, due to the high number of persuasive elements. Note also that the static criteria, which are directly identifiable on the screens, cause less confusion than the dynamic criteria, which involve a temporal aspect of persuasion.
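Because ascendency has only 3 reference items, its row of the matrix rests on 90 assignments (3 items x 30 experts), so each percentage corresponds to a handful of responses. The sketch below converts the reported percentages back to approximate counts, assuming, as in the credibility example, one assignment per expert and item; the counts are inferred from the rounded percentages, not read from Table 2.

```python
# Ascendency: 3 reference items, each judged by 30 experts (assumed one assignment each).
N_ITEMS, N_RATERS = 3, 30
opportunities = N_ITEMS * N_RATERS  # possible assignments in the ascendency row

# Percentages reported in the text for ascendency items.
reported_pct = {"personalization": 6.7, "priming": 6.7, "commitment": 5.5, "unidentified": 13.3}

# Convert each percentage back to the nearest whole number of assignments.
implied_counts = {name: round(pct / 100 * opportunities) for name, pct in reported_pct.items()}

# One assignment is worth 1/90 of the row, in percentage points.
step = 100 / opportunities

print(implied_counts)   # {'personalization': 6, 'priming': 6, 'commitment': 5, 'unidentified': 12}
print(round(step, 2))   # 1.11
```

With so few items, a single expert's choice moves the ascendency percentages by more than one point, which is worth keeping in mind when comparing it with criteria such as solicitation (22 items).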

Discussion and Conclusion

Computer ergonomics has produced a number of guidelines to measure the ergonomic quality of products, technologies, and services. We seek to establish a tool focused on the persuasive dimensions of interfaces and their effects: a checklist that is sound, reliable, useful, relevant, and easy to use.

While many criteria appear in the literature, it is important to structure them within a set of guidelines or some other form. Too many of the suggested criteria lack supporting information on their potential use and application by HCI experts. The fundamental advantage of this checklist is its strong internal validation: the criteria for interactive persuasion, in their current form, can be considered valid, reliable, and usable. The criteria enable the identification and classification of the elements of interfaces that are sources of social influence. We designed an experimental test to assess the relevance of our checklist by measuring confusion scores; the lower the confusion score, the better the set of guidelines. In addition to improving the rate of elements found, the checklist also covers a larger number of elements, regardless of the type of software, website, game, or mobile device evaluated.

Let us summarize our main findings from the successive phases of refinement of the criteria and from the experiment. Firstly, the criteria were based on the selection and classification of 164 publications published between 1994 and 2011, covering many sectors (health, services, business, ecology, education, leisure, and marketing) and all types of technologies (websites, video games, phones, software, and ambient systems). Secondly, the content analysis of these publications identified two dimensions of persuasion (static and dynamic) and eight criteria, which we have defined, explained, and exemplified. The criteria for interactive persuasion emphasize the social and emotional dimensions of interfaces and, as such, complement the usual inspection criteria (clarity, consistency, homogeneity, compatibility, usability, etc.). Thirdly, an internal validation experiment tested whether the criteria can be effectively identified in a realistic setting. In an allocation task, 30 HCI specialists correctly assigned 78.8% of the 84 forms of persuasion found in a series of 15 interfaces (12 forms of credibility, 10 forms of privacy, 13 forms of personalization, 8 forms of attractiveness, 22 forms of solicitation, 8 forms of priming, 8 forms of commitment, and 3 forms of ascendency). These accuracy measures are slightly higher than those found by Bastien (1996) and Bach (2004) when studying the quality of ergonomic inspection criteria.

Fourthly, the ascendency criterion obtains a good score, but a lower one than the other criteria. This is not surprising, as ascendency is the last step of the engagement scenario. In many cases, ascendency is not visible on the interface, because the result of the persuasive influence shows in the user rather than in the interface. Ascendency is a hard criterion to allocate because it also has a strong temporal component.

The influence of technology is a long-term process that depends not only on the interface but also on the user’s personal characteristics. With regard to ascendency, the problem is to identify effects that go beyond interactions with the media: the user persists in an attitude or behavior even outside his or her interaction with technology. Identifying ascendency therefore requires making assumptions about the links between interface elements and opportunities for acquiring and engaging the user. These diagnoses are not obvious to ergonomists and interaction designers, and some of them did not easily grasp the underlying psychosocial notions. Ascendency accordingly obtains the lowest correct-assignment score. It is the most difficult dynamic criterion because it marks the final step of the engagement scenario, the one in which the expected change in behavior or attitude is obtained. In most persuasive processes, however, this result is not directly observable through the interface, but only in the subject’s real environment, and sometimes long after the interactions with the media. This criterion could perhaps be improved by combining it with a more clinical analysis, but the objective of this checklist is to fit into the practice of HCI experts.

Criteria allow rapid identification of the most important problems, and they can also identify a wide variety of problems. That said, the experimental task implies that the identified criteria are correlated with scenarios, which may be a drawback of our method: it presupposes a relatively generic model of the participant. However, the diversity of people and contexts of use (the tested interfaces came from games, mobile phones, business software, social networks, e-commerce, health, and transport) contradicts this narrow conception of the human. Consequently, the support provided by persuasive criteria could certainly be improved further by complementary methods. It seems important that specialists’ inspection methods refine ways to integrate these persuasive criteria into assessment practices (Mahatody, Sagar, & Kolski, 2010).

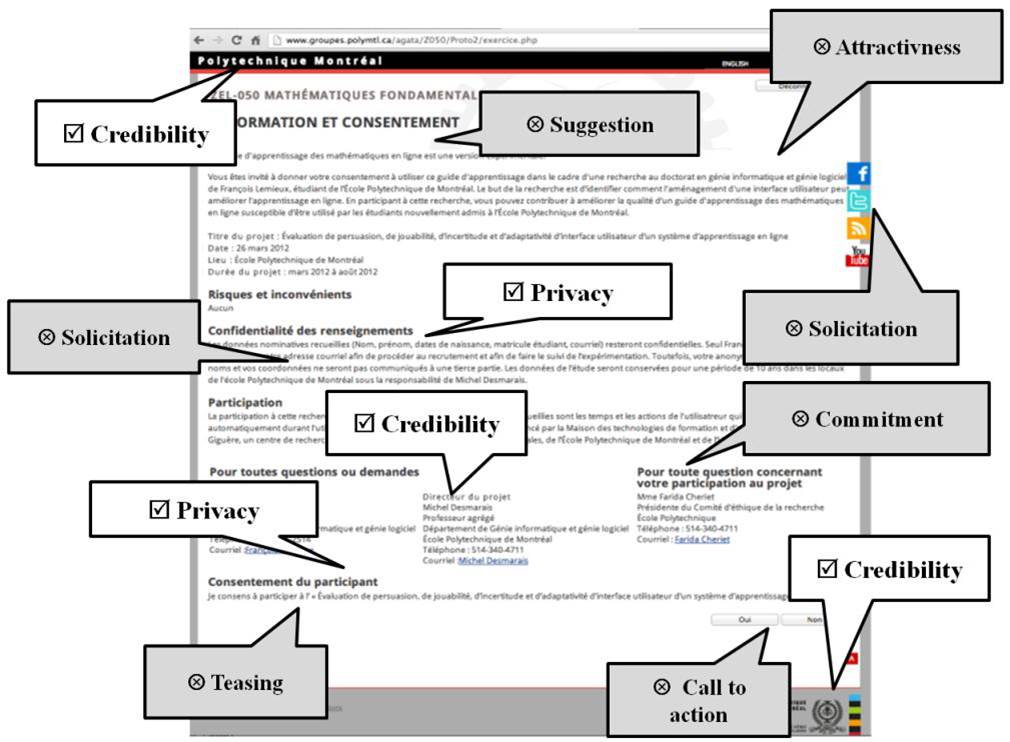

As with other guidelines, our set of persuasive guidelines could be used in several ways. An interface could, for example, receive a presence/absence score per criterion (0 if the criterion is not present, 1 if the interface matches the criterion) or a score on a scale (e.g., 0 if the criterion is not present, 5 if the interface fully matches the criterion). A general score could then be calculated for several interfaces in order to compare them. The checklist could also help designers detect the strengths and weaknesses of their interface across the eight criteria. For example, Brangier and Desmarais (2013, 2014) attempted to design more motivating and engaging e-learning applications using persuasive guidelines. They used our set of guidelines to assess the persuasiveness of an existing e-learning application intended for self-regulated learning of middle school mathematics (Figure 14). The inspection revealed factors that could explain the low engagement observed in the usage data recorded while the application was in use.
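The scoring options just described can be sketched as a small aggregation function. This is an illustration under our own assumptions rather than a scoring rule defined by the checklist: criteria are weighted equally, the total is normalized by the maximum possible score so that 0/1 and 0-5 ratings can be compared, and the lowest-rated criteria are reported as weaknesses. The function name and the ratings of the two interfaces are hypothetical.

```python
from typing import Dict, List, Tuple

CRITERIA = ("credibility", "privacy", "personalization", "attractiveness",
            "solicitation", "priming", "commitment", "ascendency")


def checklist_score(ratings: Dict[str, int], scale_max: int = 1) -> Tuple[int, float, List[str]]:
    """Aggregate per-criterion ratings for one interface.

    ratings:   criterion -> score from 0 to scale_max (scale_max=1 for
               presence/absence, scale_max=5 for the 0-5 scale).
    Returns the raw total, the total normalized to [0, 1], and the lowest-rated criteria.
    """
    missing = set(CRITERIA) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for criterion in CRITERIA:
        if not 0 <= ratings[criterion] <= scale_max:
            raise ValueError(f"{criterion} score out of range 0..{scale_max}")
    total = sum(ratings[c] for c in CRITERIA)
    normalized = total / (scale_max * len(CRITERIA))   # equal weights (assumption)
    lowest = min(ratings[c] for c in CRITERIA)
    weakest = [c for c in CRITERIA if ratings[c] == lowest]
    return total, normalized, weakest


# Hypothetical ratings for two interfaces on the 0-5 scale.
interface_a = dict(zip(CRITERIA, [4, 3, 2, 5, 4, 3, 1, 0]))
interface_b = dict(zip(CRITERIA, [3, 3, 3, 3, 2, 2, 2, 1]))
for name, ratings in (("A", interface_a), ("B", interface_b)):
    print(name, checklist_score(ratings, scale_max=5))
```

Equal weighting is the simplest choice; a team that considers some criteria more important for its product could replace the plain sum with a weighted one.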

By becoming a social actor, technology not only shapes information but also reshapes our behaviors, perceptions, and attitudes toward desired goals, often without the user’s awareness. Persuasion is thus permeating the field of HCI and renewing its concepts, methods, and tools for interaction analysis. This led us to develop a new set of criteria for the design and evaluation of persuasive interactions, which aims to give user experience designers more knowledge for developing persuasive interfaces while respecting ethical standards toward users.

Figure 14. Example of a screen inspected with the set of guidelines for persuasiveness in interfaces. (Source: http://www.polymtl.ca/, Brangier and Desmarais 2013, 2014).

References

- Adams, M.A., Marshall, S.J., Dillon, L., Caparosa, S., Ramirez, E., Phillips, J., & Norman, G.J. (2009). A theory-based framework for evaluating exergames as persuasive technology. Proceedings of the 4th International Conference on Persuasive Technology. New York, NY: ACM.

- Bach, C. (2004). Élaboration et validation de Critères Ergonomiques pour les Interactions Homme-Environnements Virtuels. Doctoral dissertation, Université Paul Verlaine, Metz.

- Bach, C., & Scapin, D. L. (2003). Adaptation of ergonomic criteria to human-virtual environments interactions. In Interact’03 (pp. 880-883). Zürich, Switzerland: IOS Press.

- Bach, C., & Scapin, D.L. (2010). Comparing inspections and user testing for the evaluation of virtual environments. International Journal of Human-Computer Interaction, 26(8), 786-824.

- Baranowski, T., Buday, R., Thompson, D., & Baranowski, J. (2008). Playing for real: Video games and stories for health-related behavior change. American Journal of Preventive Medicine, 34(1), 74-82.

- Bart, Y., Shankar, V., Sultan, F., & Urban, G.L. (2005). Are the drivers and role of online trust the same for all web sites and consumers? A large-scale exploratory empirical study. Journal of Marketing, 69, 133-152.

- Bastien, J. M. C. (1996). Les critères ergonomiques: Un pas vers une aide méthodologique à l’évaluation des systèmes interactifs. Doctoral dissertation, Université René Descartes, Paris.

- Bastien, J. M. C., & Scapin, D. L. (1992). A validation of ergonomic criteria for the evaluation of human-computer interfaces. International Journal of Human-Computer Interaction, 4(2), 183-196.

- Bergeron, J., & Rajaobelina, L. (2009). Antecedents and consequences of buyer-seller relationship quality in the banking sector. The International Journal of Bank Marketing, 27(5), 359-380.

- Brangier, E., & Desmarais, M. (2013). The design and evaluation of the persuasiveness of e-learning interfaces. International Journal of Conceptual Structures and Smart Applications (Special issue on Persuasive Technology in Learning and Teaching), 1(2), 38-47.

- Brangier, E., & Desmarais, M. (2014). Heuristic inspection to assess persuasiveness: A case study of a mathematics e-learning program. In A. Marcus (Ed.), DUXU 2014, Part I, LNCS 8517 (pp. 425-436). Springer International Publishing.

- Chaiken, S., Liberman, A., & Eagly, A.H. (1995). Heuristic and systematic processing within and beyond the persuasion context. In J.S. Uleman & J.A. Bargh (Eds.), Attitude Strength: Antecedents and Consequences (pp. 387-412). NJ: Erlbaum.

- Consolvo, S., Everitt, K., Smith, I., & Landay, J.A. (2006). Design requirements for technologies that encourage physical activity. Proceedings of the ACM Conference on Human Factors in Computing Systems, CHI 2006 (pp. 457-466). New York, NY: ACM.

- Dhamija, R., Tygar, J.D., & Hearst, M. (2006). Why phishing works. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI 2006 (pp. 581-590). New York, NY: ACM.

- Fogg, B. J. (2003). Persuasive technology: Using computers to change what we think and do. San Francisco: Morgan Kaufmann.

- Greenwald, A.G., & Banaji, M.R. (1995). Implicit social cognition: Attitudes, self-esteem and stereotypes. Psychological Review, 102, 4-27.

- Huang, X. (2009). A review of credibilistic portfolio selection. Fuzzy Optimization and Decision Making, 8(3), 263-281.

- Khaled, R., Barr, P., Noble, J., & Biddle, R. (2006). Investigating social software as persuasive technology. Proceedings of the First International Conference on Persuasive Technology for Human Well-Being, Persuasive06. Berlin Heidelberg: Springer.

- Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33, 159–174.

- Liu, C., Marchewka, J., Lu, J., & Yu, C. (2005). Beyond concern: A privacy-trust-behavioral intention model of electronic commerce. Information and Management, 42, 289-304.

- Lockton, D., Harrison, D., & Stanton, N. (2010). The design with intent method: A design tool for influencing user behaviour. Applied Ergonomics, 41(3), 382-392.

- Mahatody, T., Sagar, M., & Kolski, C. (2010). State of the art on the cognitive walkthrough method, its variants and evolutions. International Journal of Human-Computer Interaction, 26(8), 741-785.

- McGuire, W.J. (1969). Attitude and attitude change. In G. Lindzey & E. Aronson (Eds.), Handbook of social psychology (2nd ed., pp. 136-314). Reading, MA: Addison-Wesley.

- Nass, C., Fogg, B.J., & Moon, Y. (1996). Can computers be teammates? International Journal of Human-Computer Studies, 45(6), 669-678.

- Némery, A. (2012). Élaboration, validation et application de la grille de critères de persuasion interactive. Doctoral dissertation, Université de Lorraine, Metz. http://tel.archives-ouvertes.fr/tel-00735714.

- Némery, A., Brangier, E., & Kopp, S. (2009). How cognitive ergonomics can deal with the problem of persuasive interfaces: Is a criteria-based approach possible? In L. Norros, H. Koskinen, L. Salo, & P. Savioja (Eds.), Designing beyond the product: Understanding activity and user experience in ubiquitous environments (pp. 61-64). ECCE’2009, European Conference on Cognitive Ergonomics. New York, NY: ACM.

- Némery, A., Brangier, E., & Kopp, S. (2010). Proposition d’une grille de critères d’analyses ergonomiques des formes de persuasion interactive. In B. David, M. Noirhomme, & A. Tricot (Eds.), Proceedings of IHM 2010, International Conference Proceedings Series (pp. 153-156). New York, NY: ACM.

- Némery, A., Brangier, E., & Kopp, S. (2011). First validation of persuasive criteria for designing and evaluating the social influence of user interfaces: Justification of a guideline. In A. Marcus (Ed.), Design, User Experience, and Usability (pp. 616-624), LNCS 6770.

- Oinas-Kukkonen, H. (2010). Behavior change support systems: Research agenda and future directions. Lecture Notes in Computer Science, Persuasive 2010, 6137, 4-14.

- Oinas-Kukkonen, H., & Harjumaa, M. (2009). Persuasive systems design: Key issues, process model, and system features. Communications of the Association for Information Systems, 24(1), 485-500.

- Peppers, D., & Rogers, M. (1998). Better business one customer at a time. The Journal for Quality and Participation, 21(2), 30-37.

- Petty, R.E., & Cacioppo, J.T. (1981). Personal involvement as a determinant of argument-based persuasion. Journal of Personality and Social Psychology, 41, 847-855.

- Pine II, B.J. (1993). Mass Customization. Boston: Harvard Business School Press.

- Randolph, J. J. (2005, October 14-15). Free-marginal multirater kappa: An alternative to Fleiss’ fixed-marginal multirater kappa. Paper presented at the Joensuu University Learning and Instruction Symposium 2005, Joensuu, Finland.

- Redström, J. (2006). Persuasive design: Fringes and foundations. In Persuasive 2006, LNCS 3962 (pp. 112-122). Berlin Heidelberg: Springer.

- Tørning, K., & Oinas-Kukkonen, H. (2009). Persuasive system design: State of art and future directions. ACM International Conference Proceeding Series, Proceedings of the Fourth International Conference on Persuasive Technology, Claremont, CA, USA. New York, NY: ACM.

- Weiksner, G. M., Fogg, B. J., & Liu, X. (2008). Six patterns for persuasion in online social networks. In Proceedings of the 3rd international conference on Persuasive Technology. Persuasive ’08. Berlin Heidelberg: Springer.

- Yang, K.C.C. (2005). The influence of humanlike navigation interface on users’ responses to Internet advertising. Telematics and Informatics, 23, 38-55.