
Abstract

Human-centered design (HCD) has developed an impressive number of methods for gaining a better understanding of users throughout the design process. The dominant orientation in HCD research has been to develop and validate individual methods. However, there has been a growing amount of criticism of this orientation, as companies and designers seldom design services or products as entirely separate projects, let alone use single methods for doing so.

Our longitudinal case study, based on interviews, meeting observations, and company documentation, was conducted at a company with high HCD maturity. The study shows that instead of conducting new user research or testing for each project, designers draw information from previous studies and other user insight sources in the company. HCD work is mostly accomplished through a combination of methods and other information sources on the users. The accumulation of user knowledge gained during past projects and years of employment is notably high among designers and product managers, within projects, and in the company as a whole. In addition, knowledge based on user research and HCD methods does not replace other sources such as customer insight from marketing but, rather, complements them. The chosen approach of studying method mixes in an organization provided useful insights into the user information sources available there.

Keywords

Human-centered design, user insight, user knowledge, user involvement, methods, method-mixes, product design

Introduction

The founding concern in human-centered design (HCD) research was that neither a designer’s introspection nor a marketing and management view of the customers could be trusted to result in adequate technologies for the final users (see, e.g., Norman, 1988; Norman & Draper, 1986). To remedy this, HCD guidelines have long embraced replacing such sources of user knowledge with an orientation to final users throughout the design process, including researching them in context and testing the solutions thoroughly (ISO 9241-11, 1998). To achieve this, hundreds of methods and tens of methodologies in user research, design, and testing have been developed in what is soon to be four decades of HCD research (see, e.g., Beyer & Holzblatt, 1998; Hackos & Redish, 1998; Hollingsed & Novick, 2007; Nielsen, 1993; Norman & Draper, 1986; Pitkänen & Pitkäranta, 2012).

The traditional orientation of HCD research towards methods has been an orientation towards the development and validation of single methods either in lab settings or in real-life projects. The validation has not proven easy, as the same methods have produced somewhat different results in different settings and projects (Gray & Salzman, 1998; Hornbæk, 2010; Woolrych, Hornbæk, Frøkjær, & Cockton, 2011). At the same time, it is generally known that practitioners combine different HCD methods in real-world development projects. This is a common point of departure in practitioner guidebooks (e.g., Hall, 2013; Hyysalo, 2010; Kuniavsky, 2003; Sharon, 2012), but there is surprisingly little academic research seeking to establish what kinds of method mixes are used and which combinations may be sufficient or even optimal in different projects (Jia, Larusdottir, & Cajander, 2012; Johnson et al., 2014a; Johnson et al., 2014b; Mäkinen, Hyysalo, & Johnson, 2018; van Turnhout et al., 2014).

The research on practitioners’ “method mixes,” however, raises another topic that is pertinent to how we should think of the real-world use of HCD methods and which has further bearing on the potential expansion of studies into mixed method validation. This is that companies, and the designers within them, seldom design new releases, services, or products as entirely separate projects, and do not tend to repeat user studies or user tests if they believe there is information from previous studies or other sources of user insight (Hyysalo, 2010; Johnson et al., 2014a; Johnson, 2013; Kotro, 2005; Pollock & Williams, 2008). This, in turn, affects the adequacy of method mixes and calls for broadening the HCD method research agenda and making it better address real-world practitioner concerns.

Methods Research in HCD

The development, research, and validation of human-centered methods have continued since the early 1980s. Various studies have reported method usage in different HCD studies or compared diverse methods of usability evaluation (Duh, Tan, & Chen, 2006; Gray & Salzman, 1998; Nielsen & Phillips, 1993). A classic example of these studies is the investigation by Nielsen and Phillips from 1993, which compared two user interfaces using heuristic evaluation, GOMS (goals, operators, methods, and selection rules) analysis, and user testing. They then compared the findings from each method and performed a cost–benefit analysis to assess the performance of each method (Nielsen & Phillips, 1993). As a more recent example from the design side of HCD, the study by Hare et al. from 2018 compared two cases showing the benefits of traditional and generative (arts-based) methods for addressing both tacit and latent user needs. Across such studies, the dominant orientation has been either to validate single existing methods through design projects and evaluations or to create and validate new methods. Different methods and studies have been compared to each other in order to discover whether they result in similar outcomes or whether one method appears more effective than another.

Over the three decades of HCD research this orientation has changed only slightly as the applied fields have broadened. Lately, with digitalization, new digital tools have been added to HCD studies. Examples include the development of the UXblackbox for user-triggered usability testing (J. Pitkänen & Pitkäranta, 2012) and the data analysis application for usability studies that is based on inspections of sequential data analysis (SDA) or exploratory SDA (ESDA; H. Pitkänen, 2017). Despite these new digital applications, the dominant approach in HCD studies has been to report method usage in single studies or product and service development cases (as in Choi & Li, 2016; Dorrington, Wilkinson, Tasker, & Walters, 2016; Fuge & Agogino, 2014) or to develop current methodologies (as in Kujala, Miron-Shatz, & Jokinen, 2019; Lewis & Sauro, 2017; Linek, 2017).

This orientation to methods has been the target of critique, particularly during the last decade. On the one hand, the results and validity of evaluation methods have been questioned, suggesting that the reliability of HCD methods writ large may not be as superior as one might believe from the claims of HCD researchers. On the other hand, there are rising doubts about the ecological validity (Cole, 1996; Kuutti, 1996) of single method development and testing.

This latter concern is more urgent for us in this paper as it is common knowledge that practitioners mix different methods in their work (Johnson et al., 2014a; Solano, Collazos, Rusu, & Fardoun, 2016; van Turnhout et al., 2014; Woolrych et al., 2011). Numerous studies have presented combinations of methods for HCD (as in Keinonen, Jääskö, & Mattelmäki, 2008). The combined HCD methods can be selected either systematically (van Turnhout et al., 2014) or in a looser manner, by convenience or by preference (Keinonen et al., 2008). Especially in the social sciences (but also in HCI research), mixed methods research reports combinations of methods in different projects (Tashakkori & Teddlie, 2010; Teddlie & Tashakkori, 2008). Usually these studies have combined qualitative and quantitative methods (Arhippainen, Pakanen, & Hickey, 2013; R. B. Johnson, Onwuegbuzie, & Turner, 2007; Livingstone & Bloomfield, 2010), but they have also described test setups similar to those in HCI research (Leech, Dellinger, Brannagan, & Tanaka, 2010).

Yet many of the recent mixed methods studies leave previous information sources out. For example, Livingstone and Bloomfield (2010) discussed the mixed-methods approach, but they did not connect the information gathered with mixed methods to already existing information. Van Turnhout et al. (2014) stated that the combinations should be validated and that these studies still lack integration with previously gathered knowledge, which often happens in real-life settings. Indeed, many guidebooks to user research (ours included) regularly take for granted that HCD research in real projects is always conducted against the backdrop of what the design team or company already knows in relation to the design challenge at hand (e.g., Hall, 2013; Hyysalo, 2010; Sharon, 2012). Yet treating method-mixing and pre-existing knowledge as taken-for-granted practitioner skillsets and rules of thumb does little to further understand and improve these aspects of HCD method use.

Woolrych et al. (2011) proposed that HCD research focuses on two levels—the detailed level and the strategic level—and claimed that the mixing of methods adopted in practice is ignored. Johnson et al. (2014a) focused on method mixes, comparing five company cases that they had examined during a research program that had lasted 15 years. They argued that it is essential to investigate the currently applied method combinations in order to discover synergies and gaps between them. The research avenues presented by Woolrych et al. (2011) have had surprisingly few followers. The meta-level reviews should be conducted within cases as well as across them.

Additionally, Johnson et al. (2014a) noted that it is rare that companies pay attention to their users for the first time through (the mix of) HCD methods, and Johnson (2013) further observed that companies do not tend to repeat studies but to accumulate insight across product or service releases. Thus, the information gained through user studies is inspected in relation to existing knowledge. Therefore, in the majority of product design and development cases, a considerable amount of information about the users already exists inside the company and is naturally utilized during the development process.

To sum up, HCD research has focused on methods research, emphasizing user research and involvement per project. The fact that, in reality, companies mix methods has been amply recognized by practitioners and guidebooks and has begun to be noticed in some research cases. The reality that companies often have already cumulated user knowledge from previous projects and thus may have no need to repeat the user studies in every project has not been properly acknowledged and investigated in HCD research.

Aims of this Study

To gain a better sense of how HCD methods are used in real R&D organizations, we examined findings from an in-depth longitudinal case study of an award-winning company (awards include Red Dot, iF, and several others) with high HCD maturity. We used an analysis of method mixes at company, division, project, and individual practitioner scales to clarify the methods used to obtain user insight. We followed the method mix analysis of Johnson et al. (2014a), which distinguishes what formal HCD methods are used, whether there are informal ways of knowing the users and customers, and how these relate to pre-existing stocks of information on users at the company. The analysis thus allows examining how both combinations of methods and pre-existing pools of information are used.

The objective of the present study is thus to examine the following:

- If and how different HCD methods are combined in R&D practice.

- If and how existing information on users and markets is combined into new information.

The next section presents the case study and its methodology, followed by an inspection of the method mixes used in the case company. The discussion draws cross-cutting insights and makes suggestions for future work.

Methods: The Research Process

The research was conducted in an industrial company (referred to here as CompanyIM) during 2014–2018. CompanyIM offers industrial solutions for a specific technology for different markets and purposes. Its products vary from simple machines to large-scale systems that incorporate automated machines and management software. Additionally, CompanyIM offers services, including training and consultation. It has approximately 650 employees and offices in 13 countries, and it exports to 70 countries with a turnover of over €110M/year. The company has a strong background in innovation, design, and user-centeredness, having won several innovation and design awards. Based on the Human-Centeredness Scale (Earthy, 1998), which includes 12 dimensions (each containing 1 to 4 evaluation points) to assess the maturity of a company’s human-centeredness on five levels, the company would be mostly on the second-highest level, D, and partly on level C of the model, while also implementing some aspects of the highest level, E. The company is therefore well established in design and user-centeredness.

Participants and Procedure

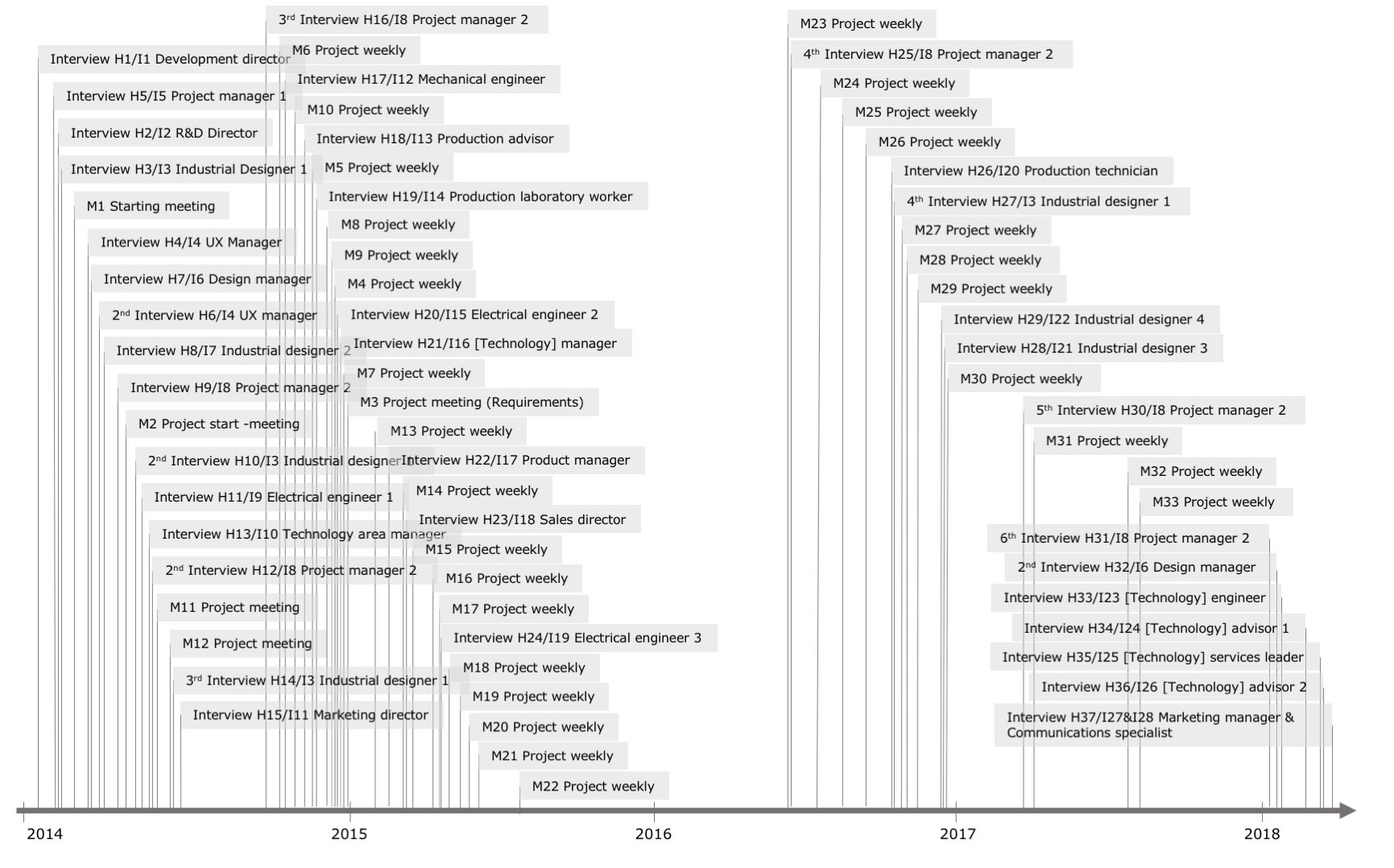

The main research methods used to study CompanyIM included semi-structured interviews and ethnographic meeting observations. The outline for the interviews can be found in Appendix A. One product development project was the focal point of the broader study and was followed in detail (the project is referred to by the name ProjectND). The goal of ProjectND was to design and develop a portable, wireless machine for industrial use (the company’s previous machines were wired). The interviewees were selected by choosing representatives from all the relevant parts of the organization, by interviewing the participants of ProjectND, and by using a “snowball” sampling method in which the interviewees were asked to nominate who else would be the best interviewees on the topics investigated (Freeman, 1966; Goodman, 1961; Welch, 1975). The interviewees were mainly R&D personnel (engineers, designers, project managers); in addition, Sales and Marketing personnel (product manager, sales representatives, marketing manager, and communications specialist), Technology area personnel (team leader and experts), and directors were interviewed. The research data consist of 37 interviews, 33 observed project meetings, and approximately 250 pages of gathered documents. A total of 28 persons were interviewed; one interview included two interviewees, and 4 interviewees were interviewed more than once (2 to 6 times). The interviews covered, among other things, the interviewees’ work profiles, the company’s projects and how they are organized, the interviewees’ method use, and sources of user insight. The data are further described in Table 1, and a longitudinal timeline of the research activities is presented in Figure 1.

Table 1. Research Data

| Data type | Amount |
| --- | --- |
| Interviews · Main focus on R&D and also a focus on Sales and Marketing · Lengths vary from 25 min to 2 h · Voice recorded and transcribed; field notes | 37 interviews · 28 interviewees · 4 interviewees were interviewed more than once (2 to 6 times); one interview had two interviewees |
| Observed meetings · Mostly weekly project meetings · Some larger project meetings · An initial meeting when starting this study · Voice recorded and transcribed; pictures; some video; field notes | 33 meetings, duration between 18 and 89 minutes, average duration 38 minutes |
| Documentation · Organizational charts · Project documentation templates · User study “guidelines” · Project documentation (requirements, specifications, concepts) | 33 documents involving approx. 250 pages |

Analysis

In the analysis we followed grounded theory to identify the empirical themes in the transcriptions of interviews and observations. Open coding using ATLAS.ti resulted in 65 thematic codes (Glaser & Strauss, 1967; Strauss & Corbin, 1990). As is typical of longitudinal studies, our ensuing axial coding focused on documenting how the process evolved and what cross-cutting themes were found across the open codes. The analysis then proceeded to writing a narrative analysis of the case project, as well as of the company in general, and cross-comparing different information sources such as documents and interviews. The later interviews included theoretical sampling to deepen the study regarding theoretically interesting key themes about the sources of user and customer information, the methods the interviewees had used, and other methods used inside the company. For the present analysis of method use in CompanyIM and ProjectND, the methods and procedures related to customers, usability, and human-centered design were systematically compared across the occupational groups, projects, and company level.
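As an illustrative aside, the systematic comparison of method mixes across occupational groups can be thought of as a comparison of sets. The sketch below is not the procedure used in this study, which relied on qualitative coding; it is a minimal, hypothetical example. The role names and method labels are simplified paraphrases of the summary of method mixes given later in the paper, and the function names (`shared_methods`, `unique_methods`, `pairwise_overlap`) are assumptions introduced only for illustration.

```python
from itertools import combinations

# Hypothetical, simplified encoding of four method mixes. The labels are
# paraphrased for illustration and are not the study's coding scheme.
method_mixes = {
    "Product manager": {"own experience", "customer contacts", "earlier projects"},
    "Project manager": {"customer visits", "user testing", "interviews",
                        "internal discussions", "earlier projects"},
    "Industrial designer 1": {"user testing", "field trips", "internal discussions",
                              "earlier projects", "apprentice method"},
    "Industrial designer 3": {"user testing", "field trips",
                              "earlier study reports", "internet search"},
}


def shared_methods(mixes):
    """Return the methods that appear in every mix."""
    return set.intersection(*mixes.values())


def unique_methods(mixes):
    """Map each role to the methods that no other role uses."""
    result = {}
    for role, methods in mixes.items():
        others = set().union(*(m for r, m in mixes.items() if r != role))
        result[role] = methods - others
    return result


def pairwise_overlap(mixes):
    """Jaccard similarity (shared / combined methods) for each pair of roles."""
    return {
        (a, b): len(mixes[a] & mixes[b]) / len(mixes[a] | mixes[b])
        for a, b in combinations(mixes, 2)
    }


if __name__ == "__main__":
    print("Shared by all:", shared_methods(method_mixes))
    for role, methods in unique_methods(method_mixes).items():
        print(role, "only:", sorted(methods))
    for pair, score in pairwise_overlap(method_mixes).items():
        print(pair, round(score, 2))
```

A tabulation of this kind can only complement the narrative analysis: it shows which sources are shared or distinctive, but not how practitioners weigh or combine them.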

Figure 1. Timeline visualizing the data gathering across the research period.

Results: The Recognized Method Mixes

The results present different method mixes that were formulated while conducting research at CompanyIM. In reporting the method mixes, we have utilized the grouping format of Johnson et al. (2014a). The first ones include a broader focus: the company level and the case project level. After this, we move to the marketing method mix and from there to some individual practitioners’ method mixes.

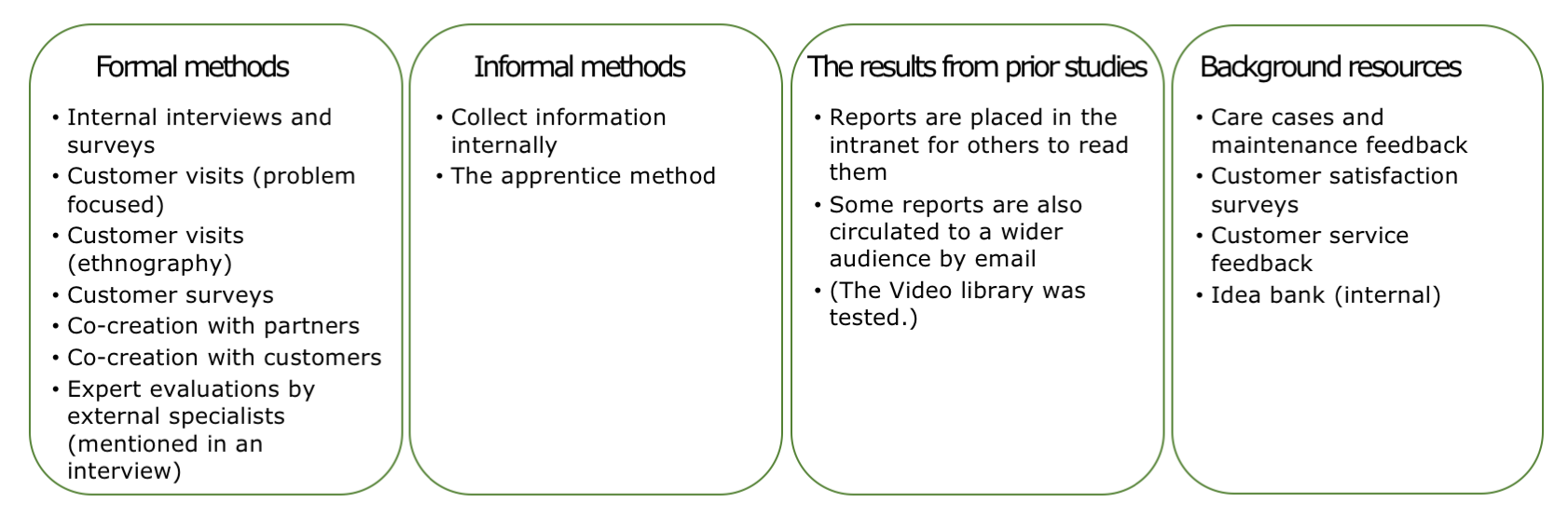

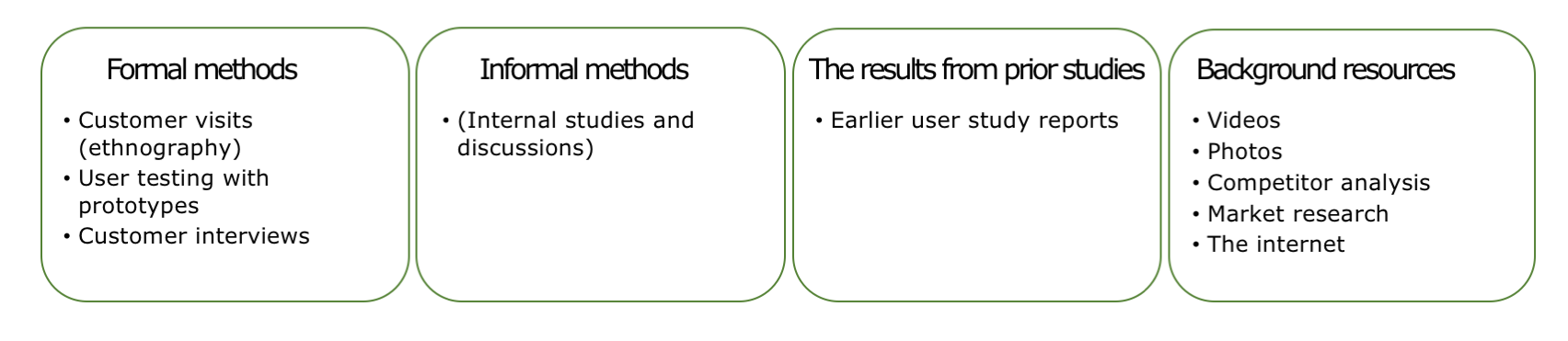

The Official, Company-Wide Method Mix

When the fieldwork started, CompanyIM had just finished an internal development project with the aim, among other aims, of introducing and formalizing new ways for R&D professionals to gather user insights[1]. Thus, the first method mix is based on the official company-wide method map created during the development project, summarized in Figure 2. Let us examine examples more closely. During the project, the company created a formal procedure for customer visits[2]. It consisted of several phases, including interviews, observations, video recordings, and data analysis workshops. The studies were conducted at three organizational levels. They also piloted a video library that would enable searching and viewing the videos later but realized that creating and maintaining it was too time-consuming and, due to the infrequent use, it was considered unfeasible as a regular activity[3].

CompanyIM also has a unique resource and source for user information: It has workers who were formerly employed by its customers and who still, in their current positions, make daily use of the machines that the company and its competitors manufacture[4]. We call these people internal users. To describe them better without losing the anonymization of the company, we can create a comparison: If the company were a piano manufacturer, these experts would be former professional pianists and piano tuners. Thus, they can provide accurate and noteworthy input for the product development projects.

Figure 2. The official company-wide method-mix[5].

As can be seen, the range of methods the company uses is quite comprehensive, and the company has a good tradition of and focus on user-centered design. It has methods with varying levels of user involvement (from ethnographic site visits to co-creation), both formal and informal methods, and it has clearly focused on developing a usage of HCD methods that fits its organization and ways of working.

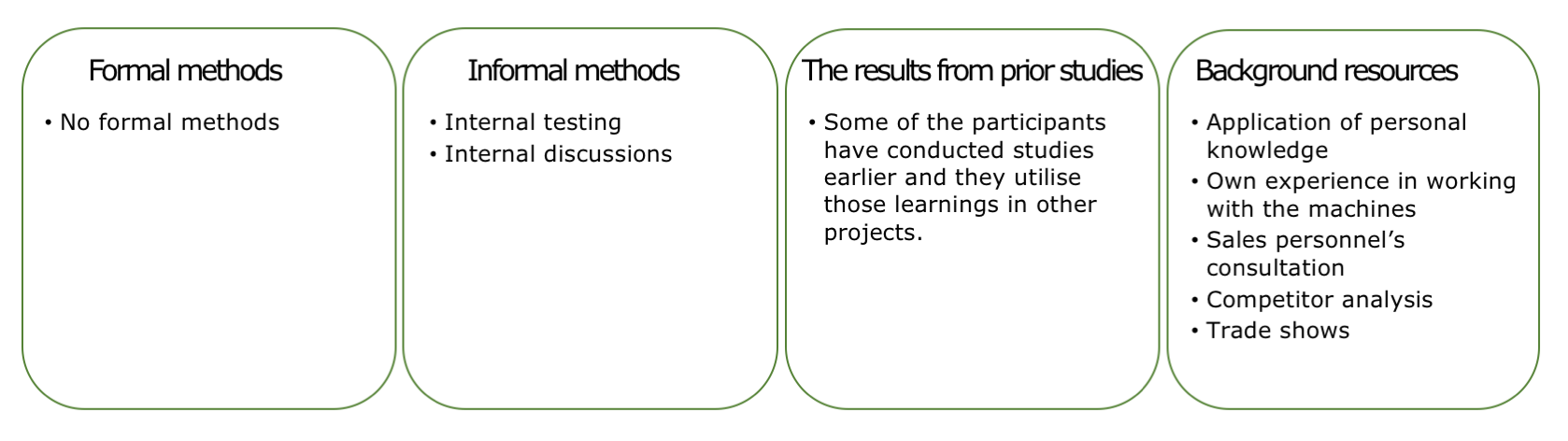

ProjectND’s Method Mix

ProjectND was an exceptional project from the point of view of user studies as the top management had decided that the project should not involve external stakeholders for reasons of exceptionally high trade secrecy[6]. User testing and studies with external users were prohibited. Nonetheless, the project needed to obtain user input in order to be able to develop a successful product. Therefore, the ProjectND method mix, presented in Figure 3, shows that the more informal methods and methods that utilize existing information were employed. More formal user tests were only conducted in the very last phases of the project, after the product had been presented at a trade show[7]. The project relied heavily on the internal users’ insights.

Figure 3. ProjectND’s method-mix.[8]

The method mix highlights the importance of user insights gathered in earlier projects, as well as from personal knowledge and experiences. These insights, especially the combination of earlier created user research data in the development of new products, have often been neglected in academic research. Although the users are not involved directly, information about them is applied actively. This information has cumulated during the previous projects and user studies. The sources for user information are diverse and information is readily available.

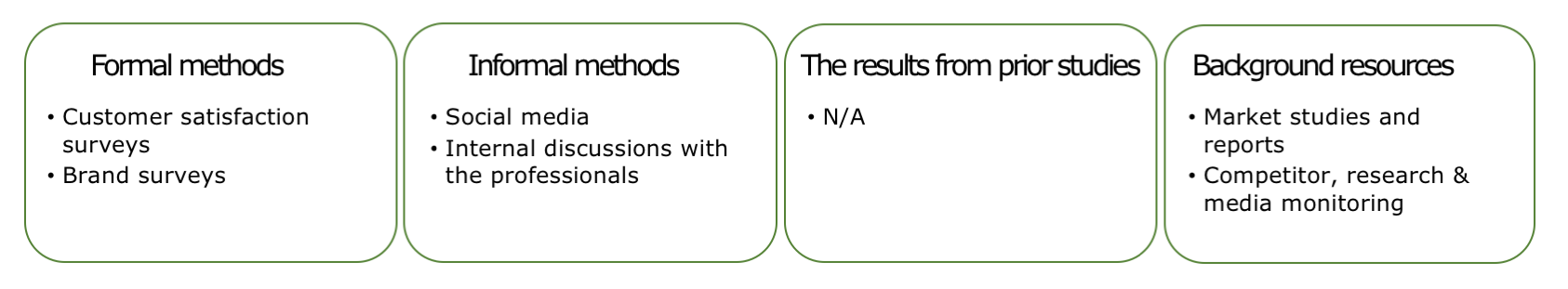

The Marketing Method Mix

The marketing department has an important role in the interface of CompanyIM with its customers and users. Its staff also have contacts that are initiated from outside the company and have insights about the users and customers that others in the company do not have, and they are the purchasers of the external market reports[9]. The methods that marketing applies differ somewhat from those of R&D. In particular, the use of social media to gain customer and user understanding is unique to marketing at CompanyIM, and the methods marketing uses give access to completely novel information. Marketing also relies on formal market studies and customer satisfaction surveys (Figure 4). A broader customer satisfaction survey is done once every three years, and a more compact one is done yearly. In addition, they have conducted brand surveys internally and externally. These are done for all their main markets[10]. For ProjectND, marketing relied mainly on internal sources, competitor information, and market studies.

Figure 4. The marketing method-mix.[11]

The information that marketing can provide is often downplayed in HCD research. Their methods differ from the methods that R&D traditionally applies. However, when considering how all the valuable knowledge of the users and customers adds up, they too have a significant role. This is particularly true in cases such as ProjectND in which cross-comparing different information sources was among the key techniques used to overcome the constraints set on new user studies following a strict secrecy policy. Similar cross-comparisons and the qualitatively different nature of user insight and the complementarity of the marketing and R&D departments’ user studies have also been observed before in other companies with a good track record in HCD such as the Finnish sports equipment manufacturer Suunto (Kotro, 2005).

Next, we move to presenting the method mixes of individual employees of the company in order to provide a deeper sense of how practitioners within projects create and maintain their understanding of users.

The Product Manager’s Method Mix

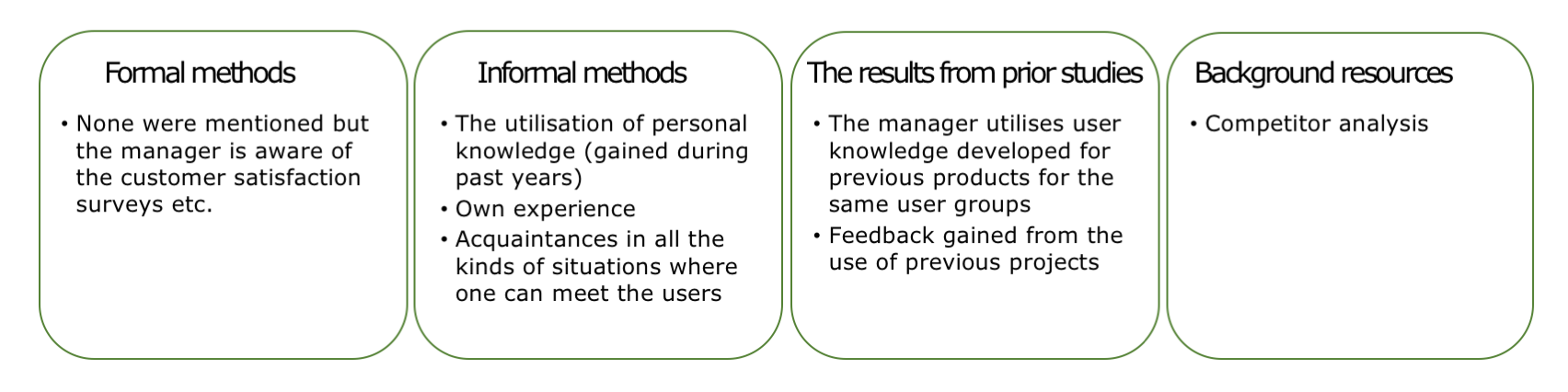

The product manager is from the sales organization. He knows the customers profoundly as he has also worked on the user side of the industry[12]. His main information sources are his daily contacts with the customers and other people outside the company. In addition, he knows the competitors’ products well. He has a very good understanding of the customers and users, and he is one of the internal sources that others often mentioned as a person to ask about use- and user-related subjects[13]. In development projects, the product manager has the primary knowledge of the customers and users, and he has the main responsibility for filling in the market needs and other information in the project documentation[14]. He was also the product manager for ProjectND. Figure 5 presents the general method mix for the product manager (not only for ProjectND). Most of these methods, however, are ones that cumulate information and are not specific to a certain project.

Figure 5. The product manager’s method-mix.[15]

In sum, the product manager relies mostly on his personal knowledge and contacts as he has his own experience in the industry and as he is constantly in touch with the customers and users. He also has a long history in the company and has gained knowledge during the previous projects, in their development phase as well as during the use phase (with knowledge gained through customer feedback). This highlights the importance of already existing information as a source for HCD research and the connection of Sales and R&D for shared knowledge.

The Project Manager’s Method Mix

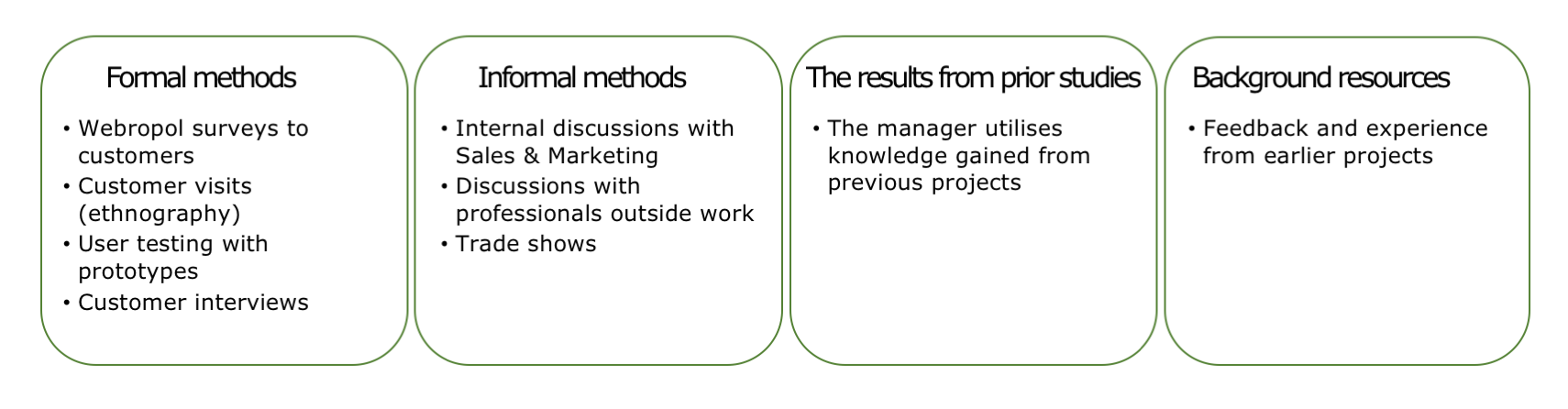

The project manager is from the R&D organization and has a technical background[16]. He usually has one ongoing development project, as well as maintenance work from preceding projects[17]. In general terms, the project manager has relevant work experience and earlier user knowledge that is applied to the ongoing project.

The project manager for ProjectND (Figure 6) had participated in formal customer visits, as well as user tests at customer sites. He had also led development projects before the case project and the information gained from those was applied in the case project as well. This information included the feedback and maintenance cases from the projects after they had been on the market. He also relied on the internal information sources, such as the internal professionals and sales people.

Figure 6. The project manager’s method-mix.[18]

The project manager also relies heavily on previous knowledge, which has been gained over several years within the company. However, compared to the product manager, the project manager has more formal methods in his methods palette.

The Industrial Designers’ Method Mixes

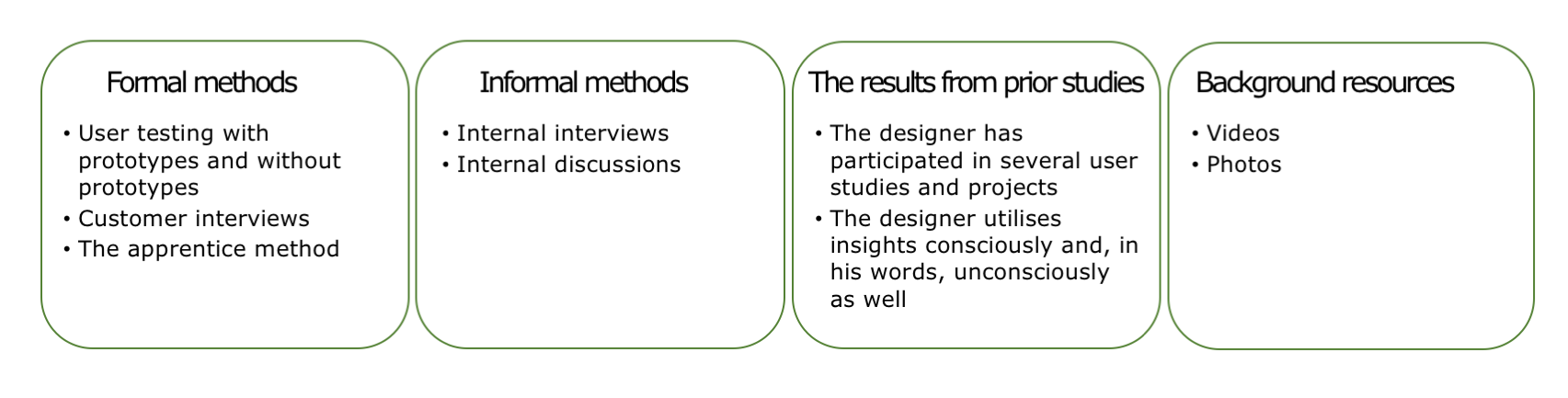

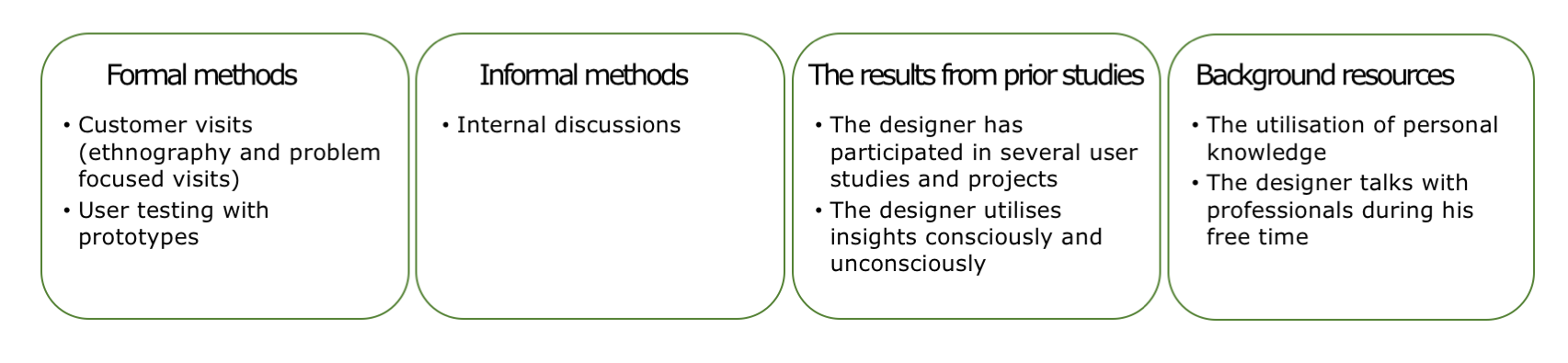

Next, we inspect the method mixes of two industrial designers. The first one of them participated in ProjectND, being the main designer, and had participated in earlier projects as well[19]. The second one only did small tasks for ProjectND but had participated in several other projects[20]. Their method mixes are quite similar to each other, the main difference being that the first one (Figure 7) had applied the apprentice method (i.e., he had worked for a few days in one of their customer sites, familiarizing himself with the working conditions and the ways in which the machines were really used)[21]. The second designer (Figure 8) had not employed the apprentice method, but he had friends who worked in the industry, and he talked with them during his free time[22].

Figure 7. The industrial designer 1’s method-mix.[23]

This method mix includes some common designer methods, such as user testing and interviews. However, the less common apprentice method is visible here. When looking at the utilization of cumulative information, the designer noted himself that he uses earlier information consciously and unconsciously.

Figure 8. The industrial designer 2’s method-mix.[24]

Here (in Figure 8), different types of site visits and user testing are emphasized. Also, it should be noted that both of these designers rely on knowledge that has been gathered earlier. For a comparison to these two industrial designers, who had been working together for some time already, the method mix of a third industrial designer is presented in Figure 9. This designer had just recently started his career at CompanyIM and thereby provides a different viewpoint on methods usage, one without the effects of the substantial cumulative knowledge building that characterizes all the other professionals. In addition to having a shorter history at the case company, this designer was also from another cultural background, which added an interesting nuance. In his method mix, the earlier materials are emphasized, as well as other external studies and earlier study reports. This designer did not mention internal discussions or tests explicitly[25]. However, internal tests are a part of the company’s product development process and, thus, they cannot be avoided. This oversight was more likely due to his short experience within the company.

Figure 9. Industrial designer 3’s (the new designer) method-mix.[26]

In Figure 9, the significant difference is that, despite all the earlier user study reports being available for research, the new designer was the only one who mentioned looking at reports from earlier studies. It appears to be too time-consuming and tedious to browse through the old reports with a new project in mind so the employees put the task aside if they can. However, they still benefited from the information as they had participated in the earlier studies themselves and thus had first-hand knowledge about the findings and insights. Therefore, they usually relied on their memory regarding the results and did not dig into the reports.

Conclusions

This paper has investigated in detail the usage of HCD methods in a company with a long and successful history of using HCD. The analysis of the method mixes of the company and its key individual employee profiles has been used to clarify how information about past and present users has been brought to bear on design and R&D activities. As such, this is an important empirical addition to the relatively scant existing research on HCD regarding how methods are used in reality in companies with a high level of user-centered maturity (Johnson, 2013; Righi & James, 2007; Wilkie, 2010).

To sum up the results, all of the studied people were using a method mix to orient to users by using information that had cumulated during earlier projects, and this also applied at project and company levels. As these method mixes demonstrate, method usage and sources vary considerably within a company and among its employees in different positions. Many of these user information sources can even be used without explicitly involving or studying the users in the particular project. Because the project workers have worked on similar products and have encountered the users before, they have already gathered significant information about the users, and this knowledge is actively utilized, especially in the conception and development phases of product development. In addition, the complementarities between insights provided by different departments or parts of the organization have been shown to add significantly to the user and customer understanding. In spite of its limitations as a study of a single company, this study adds to our understanding of the ways practitioners in larger companies combine methods with user insight and cumulated knowledge. (Table 2 summarizes the differences in the method mixes analyzed.)

Table 2. Summary of the Main Differences in the Analyzed Method Mixes

| Organization or person | Main characteristics of methods use |
| --- | --- |
| Company-wide | A comprehensive range of methods with different levels of user involvement and intensity. Wide usage of these different methods across projects. |
| ProjectND | No user involvement was allowed, so the focus was on background resources, internal testing, and insights gained during earlier studies (such as interviews, usability studies, and ethnographic field trips). |
| Marketing | Mainly surveys, market reports, and use of the internet and social media sources. Also, internal discussions were used. |
| Product manager (Sales) | Focus on own experience and contacts to users and related professionals. Knows the market and company’s customers well. |
| Project manager (R&D) | Has participated in customer visits, tests, and interviews. Also utilizes internal discussions and knowledge from earlier projects. |
| Industrial designer 1 | Versatile methods for user understanding, including user testing and ethnographic field trips; internal discussions and tests. Utilizes knowledge from previous studies that he took part in conducting. Has applied the apprentice method. |
| Industrial designer 2 | Versatile methods for user understanding, including user testing and ethnographic field trips; internal discussions and tests. Utilizes knowledge from previous studies that he took part in conducting. |
| Industrial designer 3 (new designer) | Versatile methods for user understanding, including user testing and ethnographic field trips. Reads earlier study reports; searches the internet. |

Taken together, these findings underscore how thoroughgoing the usage of cumulative user information is in a mature HCD company. The analysis of method mixes reveals that all of the sections and employees rely on information that has cumulated during past projects. The information gained from earlier studies and projects is relied on often, although the actual reports were no longer seen as useful by most of the employees who had been involved in these previous studies and remembered the main findings. The newly hired designer was the only exception: he still needed to acquire the cumulative information and was, thus, the only one examining the existing reports in detail.

These findings are at odds with the way HCD processes are presented in standards and with how method development and validation have proceeded for decades within academic HCD research, both of which presume that projects should always involve new user research and the use of validated methods therein (Beyer & Holzblatt, 1998; Hackos & Redish, 1998; Helander, Landauer, & Prabhu, 1997; Hollingsed & Novick, 2007; ISO 9241-11, 1998; Nielsen, 1993; Nielsen & Phillips, 1993). We conjecture that HCD research’s neglect of cumulative user information stems from the days when HCD was novel and very few companies had long histories of practicing it. This situation has fortunately changed in four decades, which may mean that HCD research should by now reorient itself regarding the role of user research and design methods in such mature HCD settings (cf. Johnson, 2013; see also the handbooks by Hall, 2013, and Sharon, 2012). Gathering new information for each development project is not appropriate for organizations with high HCD maturity, as these organizations have already cumulated information, and gathering new information for each project is neither feasible nor necessary. The practice of experienced designers and project managers in CompanyIM of drawing on previous studies from memory is effective in deriving value from previous projects and research when it again becomes relevant, even though it risks losing the details and intricacy of the findings.

The secondary contribution of our analysis concerns the dominant focus of HCD research on the creation and validation of single methods (as in Gray & Salzman, 1998; Nielsen & Phillips, 1993), which has been critiqued during the past decade (e.g., Hornbaek, 2010; Woolrych et al., 2011). We join this criticism by showing that HCD work has mostly been accomplished through a combination of methods and other information sources on users in all studied scopes: company R&D, projects, and the various practitioners.

Our tertiary contribution concerns the sources and nature of user information in mature HCD companies. Contrary to what much HCD research has rhetorically implied in making the case for its uniqueness and usefulness, knowledge based on user research and HCD methods does not replace other sources of customer insight; rather, the sources are complementary and qualify each other. In the studied company, three parts of the organization have important knowledge about the users and customers: R&D, Sales, and Marketing. The marketing department has often been downplayed or even excluded from HCD research, although it has valuable information and some unique sources of information that can be seen as complementary to the user knowledge gathered in R&D (Earthy, 1998; Fuge & Agogino, 2014; Righi & James, 2007). Especially through social media, marketing has access to new kinds of insights into the potential users, and this should be taken more seriously in HCD. In the studied case, the information from sales was brought to product development through discussions and official documents. Knowledge based on market information can become an important addition when combined with the information gathered through HCD research methods.

Finally, regarding research methodology, the analysis of method mixes supports discovering what kind of information the organization already has and what information is still missing; such existing information is often left out of HCD research. Therefore, studying method mixes in an organization provides useful insights into understanding the complete picture of the user information sources in an organization.

It has proven useful to inspect the method mixes of all of the above, and these should be studied further in the future. Thus, we recommend further research into the practices of the industry to discover the method mixes used in different companies as well as the practices used in combining the existing cumulated information with new information gathered through user studies. In addition, as the format for the method mixes presented in this paper is quite limited, the mapping of the methods and information sources in real-life cases should be inspected further (see, e.g., Mäkinen et al., 2018). Finally, we recommend identifying how the information is actually transferred in an organization when it relies on cumulative knowledge. What kinds of formats are used to store and communicate the knowledge of the users within the organization during and between projects?

Tips for Usability Practitioners

Following the results of this study, we make the following recommendations for practitioners working with development projects:

- When starting a project, discuss what is already known about the users and usage based on earlier projects.

- Before planning new user tests, investigate what insights are readily available from previous studies.

- During project work, combine the insights from different departments, including Sales and Marketing. Maintain a constant dialogue between all relevant parties.

- When user studies are conducted, have designers participate so that user information is transferred to them directly, including insights that never reach the reports.

- During the induction of new employees working with product development, make sure that the findings from earlier user research are also transferred to them, for example, via reports.

- To get more out of method mixes, plan them to cover different levels of participation and required resources. Information about company-level method palettes should be conveyed to all projects with example method mixes, potentially aided by company-specific software that helps select appropriate methods for each project.

It should be noted that we do not recommend abandoning user research, but rather taking advantage of previously gained insights and using the opportunities that method mixing offers. In addition, these recommendations do not apply to usability tests that are conducted to validate newly developed features, as the company cannot have existing information that could cover the need for validating new features.

Acknowledgements

We would like to thank the case company and its employees for their time and contribution to this study.

References

Arhippainen, L., Pakanen, M., & Hickey, S. (2013). Mixed UX methods can help to achieve triumphs. In E. L.-C. Law, E. T. Hvannberg, A. P. O. S. Vermeeren, G. Cockton, & T. Jokela (Eds.), Proceedings of CHI 2013 Workshop “Made for Sharing: HCI Stories for Transfer, Triumph and Tragedy” (pp. 83–88). University of Leicester. Retrieved from https://www.cs.le.ac.uk/events/HCI-3T/HCI-3T-Complete-Proceedings.pdf

Beyer, H., & Holzblatt, K. (1998). Contextual design, defining customer-centered systems. Morgan Kaufmann Publishers Inc.

Choi, Y. M., & Li, J. (2016). Usability evaluation of a new text input method for smart TVs. Journal of Usability Studies, 11(3), 110–123. Retrieved from http://dl.acm.org/citation.cfm?id=2993219.2993222

Cole, M. (1996). Cultural psychology: A once and future discipline. Harvard University Press.

Dorrington, P., Wilkinson, C., Tasker, L., & Walters, A. (2016). User-centered design method for the design of assistive switch devices to improve user experience, accessibility, and independence. Journal of Usability Studies, 11(2), 66–82. Retrieved from http://dl.acm.org/citation.cfm?id=2993215.2993218

Duh, H. B.-L., Tan, G. C. B., & Chen, V. H. (2006). Usability evaluation for mobile device: A comparison of laboratory and field tests. In Proceedings of the 8th Conference on Human-computer Interaction with Mobile Devices and Services (pp. 181–186). ACM. https://doi.org/10.1145/1152215.1152254

Earthy, J. (1998). Usability maturity model: Human centredness scale. Retrieved September 18, 2018, from http://www.idemployee.id.tue.nl/g.w.m.rauterberg/lecturenotes/USability-Maturity-Model[1].PDF

Freeman, L. C. (1966). Survey sampling. Social Forces, 45(1), 132–133. Retrieved from http://dx.doi.org/10.1093/sf/45.1.132-a

Fuge, M., & Agogino, A. (2014). User research methods for development engineering: A study of method usage with IDEO’s HCD Connect. Retrieved from http://dx.doi.org/10.1115/DETC2014-35321

Glaser, B., & Strauss, A. (1967). The discovery of grounded theory: Strategies for qualitative research. Aldine Publishing Company.

Goodman, L. A. (1961). Snowball sampling. The Annals of Mathematical Statistics, 32(1), 148–170. Retrieved from https://www.jstor.org/stable/2237615?seq=1

Gray, W. D., & Salzman, M. C. (1998). Damaged merchandise? A review of experiments that compare usability evaluation methods. Human-Computer Interaction, 13(3), 203–261. https://doi.org/10.1207/s15327051hci1303_2

Hackos, J., & Redish, J. (1998). User and task analysis for interface design. John Wiley & Sons.

Hall, E. (2013). Just Enough Research. A Book Apart.

Hare, J., Beverley, K., Begum, T., Andrews, C., Whicher, A., & Ruff, A. (2018). Uncovering human needs through visual research methods: Two commercial case studies. The Electronic Journal of Business Research Methods, 16(2), 67–79. Retrieved from http://www.ejbrm.com/

Helander, M., Landauer, T. K., & Prabhu, P. V. (1997). Handbook of human-computer interaction (2nd ed.). North-Holland.

Hollingsed, T., & Novick, D. G. (2007). Usability inspection methods after 15 years of research and practice. Proceedings of the 25th Annual ACM International Conference on Design of Communication – SIGDOC ’07, 249. https://doi.org/10.1145/1297144.1297200

Hornbaek, K. (2010). Dogmas in the assessment of usability evaluation methods. Behaviour and Information Technology, 29(1), 97–111. https://doi.org/10.1080/01449290801939400

Hyysalo, S. (2010). Health technology development and use. Routledge.

ISO 9241-11. (1998). Ergonomic requirements for office work with visual display terminals (VDTs). Part 11: Guidance on Usability.

Jia, Y., Larusdottir, M. K., & Cajander, Å. (2012). The usage of usability techniques in scrum projects. In M. Winckler, P. Forbrig, & R. Bernhaupt (Eds.), Human-Centered Software Engineering (pp. 331–341). Springer Berlin Heidelberg.

Johnson, M. (2013). How social media changes user-centred design – Cumulative and strategic user involvement with respect to developer–user social distance. Aalto University.

Johnson, M., Hyysalo, S., Mäkinen, S., Helminen, P., Savolainen, K., & Hakkarainen, L. (2014a). From recipes to meals … and dietary regimes: Method mixes as key emerging topic in human-centred design. In NordiCHI ’14 Proceedings of the 8th Nordic Conference on Human-Computer Interaction: Fun, Fast, Foundational (pp. 343–352). ACM.

Johnson, M., Mozaffar, H., Campagnolo, G. M., Hyysalo, S., Pollock, N., & Williams, R. (2014b). The managed prosumer: Evolving knowledge strategies in the design of information infrastructures. Information, Communication & Society, 17(7), 795–813. https://doi.org/10.1080/1369118X.2013.830635

Johnson, R. B., Onwuegbuzie, A. J., & Turner, L. A. (2007). Toward a definition of mixed methods research. The Journal of Mixed Methods Research, 1(2), 112–133. https://doi.org/10.1080/17439760.2016.1262619

Keinonen, T., Jääskö, V., & Mattelmäki, T. (2008). Three in one user study for focused collaboration. International Journal of Design, 2(1), 1–10.

Kotro, T. (2005). Hobbyist knowing in product development. Desirable objects and passion for sports in Suunto Corporation. University of Art and Design Helsinki.

Kujala, S., Miron-Shatz, T., & Jokinen, J. J. (2019). The cross-sequential approach: A short-term method for studying long-term user experience. Journal of Usability Studies, 14(2), 105–116. Retrieved from https://uxpajournal.org/cross-sequential-studying-long-term-user-experience/

Kuniavsky, M. (2003). Observing the user experience: A practitioner’s guide to user research. Morgan Kaufmann.

Kuutti, K. (1996). Activity theory as a potential framework for human/computer interaction. In B. Nardi (Ed.), Context and consciousness: Activity theory and human-computer interaction (pp. 45–67). The MIT Press.

Leech, N. L., Dellinger, A. B., Brannagan, K. B., & Tanaka, H. (2010). Evaluating mixed research studies: A mixed methods approach. Journal of Mixed Methods Research, 4(1), 17–31. https://doi.org/10.1177/1558689809345262

Lewis, J. R., & Sauro, J. (2017). Can I leave this one out? The effect of dropping an item from the SUS. Journal of Usability Studies, 13(1), 38–46. Retrieved from http://dl.acm.org/citation.cfm?id=3173069.3173073

Linek, S. B. (2017). Order effects in usability questionnaires. Journal of Usability Studies, 12(4), 164–182. Retrieved from http://dl.acm.org/citation.cfm?id=3190867.3190869

Livingstone, D., & Bloomfield, P. R. (2010). Mixed-methods and mixed-worlds: Engaging globally distributed user groups for extended evaluation and studies. In A. Peachey, J. Gillen, D. Livingstone, & S. Smith-Robbins (Eds.), Researching learning in virtual worlds (pp. 159–176). Springer London. https://doi.org/10.1007/978-1-84996-047-2_9

Mäkinen, S., Hyysalo, S., & Johnson, M. (2018). Ecologies of user knowledge: Linking user insight in organisations to specific projects. Journal of Technology Analysis and Strategic Management, 31(3), 340–355. https://doi.org/10.1080/09537325.2018.1502874

Nielsen, J. (1993). Usability engineering. Academic Press.

Nielsen, J., & Phillips, V. L. (1993). Estimating the relative usability of two interfaces: Heuristic, formal, and empirical methods compared. Proceedings of the INTERACT’93 and CHI’93 Conference on Human Factors in Computing Systems, 214–221. https://doi.org/10.1145/169059.169173

Norman, D. (1988). The psychology of everyday things. Basic Books.

Norman, D. A., & Draper, S. W. (1986). User centered system design; New perspectives on human-computer interaction. L. Erlbaum Associates Inc.

Pitkänen, H. (2017). Exploratory sequential data analysis of user interaction in contemporary BIM applications. Aalto University.

Pitkänen, J., & Pitkäranta, M. (2012). Usability testing in real context of use: The user-triggered usability testing. 7th Nordic Conference on Human-Computer Interaction: Making Sense Through Design, 797–798. https://doi.org/10.1145/2399016.2399153

Pollock, N., & Williams, R. (2008). Software and organisations. The biography of the enterprise-wide system or how SAP conquered the world. Routledge.

Righi, C., & James, J. (2007). User-centered design stories: Real-world ucd case studies. Morgan Kaufmann Publishers Inc.

Sharon, T. (2012). It’s our research: Getting stakeholder buy-in for user experience research projects. Morgan Kaufmann.

Solano, A., Collazos, C. A., Rusu, C., & Fardoun, H. M. (2016). Combinations of methods for collaborative evaluation of the usability of interactive software systems. Advances in Human-Computer Interaction, 2016, Article ID 4089520, 157–163.

Strauss, A., & Corbin, J. (1990). Basics of qualitative research: Grounded theory procedures and techniques. Sage Publications, Inc.

Tashakkori, A., & Teddlie, C. (2010). SAGE handbook of mixed methods in social & behavioral research (2nd ed.). SAGE Publications. Retrieved from https://books.google.fi/books?id=iQohAQAAQBAJ

Teddlie, C., & Tashakkori, A. (2008). Foundations of mixed methods research: Integrating quantitative and qualitative approaches in the social and behavioral sciences. SAGE Publications. Retrieved from https://books.google.fi/books?id=AfcgAQAAQBAJ

van Turnhout, K., Bakker, R., Bennis, A., Craenmehr, S., Holwerda, R., Jacobs, M., … Lenior, D. (2014). Design patterns for mixed-method research in HCI. Proceedings of the 8th Nordic Conference on Human-Computer Interaction Fun, Fast, Foundational – NordiCHI ’14, 361–370. https://doi.org/10.1145/2639189.2639220

Welch, S. (1975). Sampling by referral in a dispersed population. The Public Opinion Quarterly, 39(2), 237–245. Retrieved from http://www.jstor.org/stable/2748151

Wilkie, A. (2010). User assemblages in design: An ethnographic study. Doctoral thesis, Goldsmiths, University of London.

Woolrych, A., Hornbæk, K., Frøkjær, E., & Cockton, G. (2011). Ingredients and meals rather than recipes: A proposal for research that does not treat usability evaluation methods as indivisible wholes. International Journal of Human-Computer Interaction, 27(10), 940–970. https://doi.org/10.1080/10447318.2011.555314

Footnotes

[1] The most important sources for the main results are presented in footnotes. The code list can be found in Appendix B. The meeting and interview codes (M and H) can also be found in Figure 1; D codes refer to documents. Sources for this argument: M1, H1, H2, H4, H6, H7, H8, H9, H12, H13, H23, D2, D3, D6, D8, D9

[2] M1, H1, H6, H7, H8, H12

[3] H4, H6, H7, H8, H12

[4] M1, M6, M10, H2, H3, H4, H6, H7, H8, H29, H35, H36, D7, D12, D13, D19, D20, D24, D25

[5] M1, H1, H32, D3, D6, D8, D9

[6] H13, H22, H25

[7] H25, H27, H30

[8] H3, H9, H11, H12, H13, H16, H17, H18, H20, H21, H22, H24, H25, H26, H30, H31, H33

[9] H37

[10] H37

[11] H37

[12] H22

[13] H11, H18, H25, H37

[14] M10, H1, H9, H15, H21, H23, H35, H37

[15] H22

[16] H1, H2, H4, H9

[17] H1, H2, H5, H9, H12

[18] H9, H12, H16, H25, H30, H31

[19] H3

[20] H8

[21] H14

[22] H8

[23] H3, H10, H14, H27

[24] H8

[25] H28

[26] H28

Appendix A: Interview Themes

The following interview themes cover the contents of the basic interviews. The goal was to get a picture of the interviewees’ basic work habits, their relation to user information (what to call the users and what methods to use), and the company’s project practices. Some additional themes were covered in a few interviews, especially in those that addressed the studied project or the designers’ work practices in more detail.

- Warm-up, confidentiality issues, and the background for the interview

- The interviewee’s background
  - Job description
  - Work history
  - Most common co-workers
  - A typical working week

- Different project types: defining the user and customer
  - Defining and differentiating different types of product development projects
  - Differences between projects
  - Defining the user and the customer
  - Defining other terms used for the user

- Single case/project
  - The selection of a project in which the interviewee has participated lately
  - The goal/target of the project
  - The trigger for the project
  - Project participants
  - Planned users and customers for the product
  - How user information was gained during the project (methods, who collected it, who analyzed it, at which phases of the project)
  - The stages when user and customer information was especially used
  - How the information was stored
  - Whether the information was returned to during the project

- Methods
  - The different methods used to gain user and customer knowledge at the case company (the interviewer lists these on paper to be discussed further)
  - The interviewee’s own methods and other methods used at the company (the interviewer adds these to the previous list)
  - Other methods used in the company that are still missing from the list, if such exist (the interviewer adds these to the previous list)
  - Whether employees are taught or guided somehow to collect user insights
  - Going through the formed list with the interviewee and adding any missing methods or practices

- Discussion about a large development project that targeted developing the ways of getting user insights at the company
  - Discussion about the project and the interviewee’s role in it
  - What should still be developed in the area of gaining better user understanding

- Suggestions of other people to interview and knowledgeable people

- Additional comments the interviewee would like to add

Appendix B: The Code List for Information Sources

| Meeting code | Meeting type | Interview code | Interviewee | Document code | Document type |
| --- | --- | --- | --- | --- | --- |
| M1 | Starting meeting | H1 | Development director | D1 | ProjectND documentation |
| M2 | Project kick-off meeting | H2 | R&D director | D2 | Development project materials |
| M3 | Project meeting | H3 | Industrial designer 1 | D3 | Development project materials |
| M4 | Project weekly | H4 | UX manager | D4 | Project documentation template |
| M5 | Project weekly | H5 | Project manager 1 | D5 | CompanyIM materials |
| M6 | Project weekly | H6 | UX manager (2nd interview) | D6 | Development project materials |
| M7 | Project weekly | H7 | Design manager | D7 | CompanyIM materials |
| M8 | Project weekly | H8 | Industrial designer 2 | D8 | Development project materials |
| M9 | Project weekly | H9 | Project manager 2 | D9 | Development project materials |
| M10 | Project weekly | H10 | Industrial designer 1 (2nd interview) | D10 | CompanyIM materials |
| M11 | Project meeting | H11 | Electrical engineer 1 | D11 | CompanyIM materials |
| M12 | Project meeting | H12 | Project manager 2 (2nd interview) | D12 | CompanyIM materials |
| M13 | Project weekly | H13 | Technology area manager | D13 | ProjectND related materials |
| M14 | Project weekly | H14 | Industrial designer 1 (3rd interview) | D14 | ProjectND documentation |
| M15 | Project weekly | H15 | Marketing director | D15 | ProjectND related materials |
| M16 | Project weekly | H16 | Project manager 2 (3rd interview) | D16 | ProjectND related materials |
| M17 | Project weekly | H17 | Mechanical engineer | D17 | ProjectND related materials |
| M18 | Project weekly | H18 | Production advisor | D18 | ProjectND documentation |
| M19 | Project weekly | H19 | Production laboratory worker | D19 | ProjectND related materials |
| M20 | Project weekly | H20 | Electrical engineer 2 | D20 | ProjectND related materials |
| M21 | Project weekly | H21 | [Technology] manager | D21 | ProjectND documentation |
| M22 | Project weekly | H22 | Product manager | D22 | ProjectND related materials |
| M23 | Project weekly | H23 | Sales director | D23 | ProjectND documentation |
| M24 | Project weekly | H24 | Electrical engineer 3 | D24 | ProjectND related materials |
| M25 | Project weekly | H25 | Project manager 2 (4th interview) | D25 | ProjectND related materials |
| M26 | Project weekly | H26 | Production technician | D26 | ProjectND related materials |
| M27 | Project weekly | H27 | Industrial designer 1 (4th interview) | D27 | ProjectND related materials |
| M28 | Project weekly | H28 | Industrial designer 3 | D28 | ProjectND documentation |
| M29 | Project weekly | H29 | Industrial designer 4 | D29 | ProjectND documentation |
| M30 | Project weekly | H30 | Project manager 2 (5th interview) | D30 | ProjectND documentation |
| M31 | Project weekly | H31 | Project manager 2 (6th interview) | D31 | ProjectND related materials |
| M32 | Project weekly | H32 | Design manager (2nd interview) | D32 | ProjectND documentation |
| M33 | Project weekly | H33 | [Technology] engineer | D33 | ProjectND related materials |
|  |  | H34 | [Technology] advisor 1 | D34 | CompanyIM materials |
|  |  | H35 | [Technology] services leader | D35 | ProjectND documentation |
|  |  | H36 | [Technology] advisor 2 |  |  |
|  |  | H37 | Marketing manager & Communications specialist |  |  |

[:]