Abstract

The increasing prevalence of chronic heart failure requires new and more cost-efficient methods of chronic heart failure treatment. In the search for comprehensive and more cost-effective disease management, the use of self-monitoring devices has become increasingly popular. However, the usability of these wireless self-trackers needs to be evaluated if they are to be successfully implemented within a healthcare system. The aim of this study is to evaluate the usability of six self-tracking devices for use in telerehabilitation of heart failure patients. The devices were evaluated by 22 healthy volunteers who used them for 48 hours. Based on structured interviews, the volunteers rated the devices for their user-friendliness, design, comfort, and motivation. Also, the devices’ step count precision was assessed using a treadmill walking test. Of the six devices subject to user tests, the Fitbit One, Fitbit Charge HR, Garmin Vivofit 2, and Beddit Sleep Tracker received significantly higher average scores than the Jawbone UP3 and Jawbone UP24, based on the structured interviews, p < 0.001. The Fitbit One and the Garmin Vivofit 2 received the lowest error percentage rates during the walking tests. The results indicate that in terms of overall usability for telerehabilitation purposes, the most suitable self-tracking devices would be the Fitbit One, Garmin Vivofit 2, and the Fitbit Charge HR.

Keywords

usability, activity monitor, self-tracking, telerehabilitation, physical activity, step count, fitness, heart failure

Introduction

Unhealthy lifestyle and physical inactivity are major contributors to the rise in the prevalence of chronic cardiovascular disease (Hootman, 2009). The increasing prevalence of chronic heart failure (HF) has led to a demand for new and more cost-efficient methods of designing chronic HF treatment (Thorup et al., 2016). The current treatments mainly consist of medication, physical rehabilitation, and encouraging healthy lifestyle changes such as a low-salt diet and reducing smoking (Evenson, Goto, & Furberg, 2015). To facilitate this treatment, many patients participate in rehabilitation programs, during which they attend physical activities and lectures on healthy lifestyle and self-motivation. In recent years, a new approach within rehabilitation allows the caregiver and the patient to be physically separated and communicate through technology, a process known as telerehabilitation. Telerehabilitation has been shown to increase patients’ quality of life as compared to traditional rehabilitation (Ong et al., 2016). However, studies of telerehabilitation report conflicting results on mortality and on rates of rehospitalization of HF patients (Boyne et al., 2012; Chaudhry et al., 2010; Inglis, 2015). One possible explanation for this may be the lack of tailored telerehabilitation programs that could fit the needs of the individual patients (Piotrowicz et al., 2016).

As part of telerehabilitation regimes, some programs utilize self-tracking by means of mobile apps or commercially available self-monitoring devices such as wearable activity trackers (Albert et al., 2016). Self-monitoring devices are becoming increasingly popular, which is reflected by the rapid growth in commercial sales (from 9.2 billion USD in 2014 to an estimated 30.2 billion USD in 2018). There are now several types and brands of self-tracking devices available on the market (Salah, MacIntosh, & Rajakulendran, 2014). However, the majority of these devices are still aimed at fitness/lifestyle-related consumers. Most self-tracking devices have not been approved as medical devices and do not meet the usual standards for the kind of monitoring equipment normally utilized in clinical contexts. Even though most self-trackers have been designed for the consumer market rather than as rehabilitative or medical devices, they have considerable potential for use in heart failure management.

This study grew out of the Future Patient telerehabilitation research project with a focus on HF. The project, based on a participatory design, focuses on multiparametric individualized monitoring to detect worsening of symptoms, avoid hospitalization, and create better self-management of disease (Clemensen, Rothmann, Smith, Caffery, & Danbjorg, 2016; Kushniruk & Nøhr, 2016).

Related Research: Current Usability Evaluations of Self-Trackers for Clinical Use

Self-Tracking Technology and Usability

Self-tracking as related to healthcare is the act of systematically recording information that may be of clinical relevance about one’s own health. Many devices have self-tracking capabilities, including pedometers, smartphones, sleep trackers, and online health logs (Albert et al., 2016). A self-tracker is defined as a device specifically used to enable these recordings.

Usability of Self-Tracking for Monitoring Purposes

A central part of heart failure treatment is self-care and regular symptom monitoring. Self-trackers such as smartphones, tablets, or activity trackers may be used to facilitate symptom monitoring by integration into patients’ daily living (Creber, Hickey, & Maurer, 2016). Furthermore, many self-trackers provide an open online application program interface (API) as a convenient way to transmit and access data. The API often allows for the online monitoring, recording, and analysis of physical activity (Honko et al., 2016; Washington, Banna, & Gibson, 2014). Some of the current studies of the usability of clinically applied self-tracking devices focus on their validity and reliability within specific parameters/environments (Andalibi, Honko, Christophe, & Viik, 2015). There has been a wealth of validation on different parameters, such as number of stairs climbed, steps walked, walking/running distance, resting/active pulse, calories burned, and sleep quality (Shameer et al., 2017).

As such, self-monitoring devices provide new opportunities to monitor changes in patients’ daily lives and physical activity, usually with no or minimal disturbance to the users. The newer generation of self-trackers is also capable of providing tailored motivational feedback about activities and sleep patterns, prompting the user with motivational text messages or goal-setting. Some even include competitive features such as leader boards, badges, or ways to facilitate competitive networks (Mercer, Giangregorio, et al., 2016).

Further testing of usability and validation is still required within disease specific populations, such as HF, before we can assess the full potential of self-tracking devices for use in clinical contexts (Lee & Finkelstein, 2015; Mercer, Li, Giangregorio, Burns, & Grindrod, 2016).

Usability of Self-Trackers to Promote Self-Awareness

Some self-trackers may lack extensive validation of the different monitoring parameters. However, self-tracking devices may still be used to motivate individuals to promote healthy behaviors (Shameer et al., 2017). Previous studies of self-trackers have been used to promote self-awareness and encourage positive changes in health behavior (Lyons, Lewis, Mayrsohn, & Rowland, 2014; Patel, Asch, & Volpp, 2015; Wortley, An, & Nigg, 2017). A popular example of this effort towards positive impact are the pedometers used to promote physical activity in various chronic diseases, including HF (Piette et al., 2016; Thorup et al., 2016). Behavioral change in HF is a process that unfolds over longer periods of time and may take a lifetime of maintenance (Paul & Sneed, 2004; Prochaska & Velicer, 1997). To accommodate these kinds of behavioral changes, the devices need to be validated for long-term use, be comfortable, user-friendly, and motivate the users to monitor their physical activity and maintain a healthy lifestyle.

When introducing a new health technology, the usability of the technologies is determined by many factors. One model that describes the acceptance of new technologies is the technology acceptance model (TAM; Davis, 1989). Notably the users’ perceived usefulness and perceived ease of use are central to this model and how people come to use new technologies. The widespread availability and low cost of self-trackers make them ideal for implementation in chronic cardiovascular disease management. Utilizing the health-monitoring data generated by patients may then facilitate the design of new and better telerehabilitation strategies (Hickey & Freedson, 2016; Tully, McBride, Heron, & Hunter, 2014). However, more research is still required to evaluate the usability and validity of self-tracking devices in HF patients (Alharbi, Straiton, & Gallagher, 2017).

The aim of this study is to evaluate the usability of self-tracking devices for use in telerehabilitation of HF patients within the framework of the Future Patient research project. As opposed to previous studies, this study includes both a qualitative evaluation of the devices based on comfort, user-friendliness, and motivation of physical activity, and a quantitative assessment based on the step count precision of current devices.

Methods

The usability of the self-tracking devices has been explored through a triangulation of data collection techniques: structured interview, user comments, and treadmill testing.

Study Participants

The participants in the study were 22 healthy volunteers (11M, 11F) aged 21–49 years (Mean = 27, SD = 7.25). The volunteers were recruited from around the campus of Aalborg University. Participants were included if they were >18 years old, capable of understanding Danish, and able to provide signed written and informed consent. The informed consent form also included statements where participants declared that they did not suffer from previous neurologic, musculoskeletal, or mental illnesses, had no use of walking aids, and were not pregnant.

Ethical Considerations

The study was submitted for review by the local Ethics Committee. However, due to the harmless nature of the study, no review by the Ethics Committee was necessary. Nonetheless, the study was performed according to the Helsinki Declaration, and all participants signed informed consent forms.

Selection of Devices

Selection of the devices for this study was based on an initial search performed in August 2015, where a total of 82 commercially available self-trackers were identified. The devices evaluated in this study were selected based on the following criteria:

- European Conformity (CE) marked self-trackers designed for daily use;

- Capable of measuring pulse, sleep, and/or steps;

- Data from the devices must be freely obtainable through an online application program interface (API); and

- The self-tracking devices must be commercially available and obtainable by the research team.

On this basis, the following self-trackers were selected for testing in this study: Garmin Vivofit 2, Fitbit One, Fitbit Charge HR, Jawbone UP3, Jawbone UP24, and Beddit Sleep Tracker. All self-trackers were updated to the most recently available firmware on September 11, 2015, and were not updated further during the study.

Study Setup

After enrollment, researchers followed the same study procedure for all participants:

- The baseline data (gender, age, weight, etc.) for each participant was collected.

- Each participant received the first three self-tracking devices: Jawbone UP24, Garmin Vivofit 2, and Fitbit One. The devices were set up according to each participant’s height, weight, and date of birth.

- Each participant was instructed in how to use and wear the self-trackers. For 48 hours, participants were asked to follow their usual daily routines and use the self-tracking devices as described according to the manufacturer’s instructions.

- After the 48-hour period, each participant returned to the laboratory at Aalborg University for

- a structured interview focusing on the usability of the individual trackers, and

- a treadmill exercise, where each participant walked on the treadmill for three sessions. The aim of this exercise was to evaluate the precision of the individual devices during each of the three walking speeds:

- 2 km/h walking session of 1, 2, and 3 minutes;

- 3.5 km/h walking session of 1, 2, and 3 minutes;

- 5 km/h walking session of 1, 2, and 3 minutes.

- Steps 2 through 4 were repeated for the next three self-tracking devices: Jawbone UP3, Fitbit Charge HR, and Beddit Sleep Tracker.

Structured Interview and Content

The development of the structured interview guide was inspired by the technology acceptance model (TAM; Holden & Karsh, 2010). This ensured that participants rated the perceived usefulness of the technology rather than the device itself. The structured interview evaluated 3 to 5 areas of usability: user-friendliness, satisfaction with the design for both male and female participants, comfort related to self-trackers, and motivation. Participants were asked to rate a series of statements using one of six options on a Likert scale: completely disagree, disagree, somewhat disagree, somewhat agree, agree, and completely agree. To quantify the results from the interview, the Likert scale was converted to a 0 to 5 scale, where 0 was the most negative answer and 5 was the most positive. The mean scores of each area were compared using a two-way ANOVA followed by Tukey’s honest significant difference (HSD) test.
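The conversion from Likert responses to a 0 to 5 score can be illustrated with a minimal Python sketch. The names `LIKERT_OPTIONS`, `LIKERT_SCORE`, and `area_mean` are hypothetical, and the example responses are invented for illustration; they are not study data.

```python
from statistics import mean

# Six response options from the interview guide, ordered from most
# negative to most positive.
LIKERT_OPTIONS = [
    "completely disagree",
    "disagree",
    "somewhat disagree",
    "somewhat agree",
    "agree",
    "completely agree",
]

# Map each option to its 0-5 score by its position in the list.
LIKERT_SCORE = {label: score for score, label in enumerate(LIKERT_OPTIONS)}


def area_mean(responses):
    """Return the mean 0-5 score for the Likert responses in one usability area."""
    return mean(LIKERT_SCORE[r.strip().lower()] for r in responses)


# Hypothetical responses to one statement, for illustration only.
example = ["agree", "completely agree", "somewhat disagree", "agree"]
print(area_mean(example))
```

A mean computed this way treats the six options as equally spaced, which is the assumption implied by converting the scale to integers.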

As part of the structured interview, participants were encouraged to provide additional comments or observations about their use of the trackers.

Treadmill Exercise and Measurement of Step Count Precision

The self-trackers’ ability to measure steps was investigated by measuring step count precision. Participants were asked to walk at three different gait speeds on the treadmill: 2 km/h, 3.5 km/h, and 5 km/h. The gait speed of 5 km/h was chosen because it is the preferred gait speed for healthy adults and ideal for the self-trackers (Browning, Baker, Herron, & Kram, 2006). The 2 km/h and 3.5 km/h speeds were chosen to match the gait speeds of chronically ill and weaker patients (Harrison et al., 2013; Mercer, Giangregorio, et al., 2016). The participants walked three sessions of 1, 2, and 3 minutes at each of the three gait speeds, and the number of steps was noted, either from the self-tracker’s display or via the mobile application. To ensure that the mobile application was up to date, the self-trackers were synchronized manually.

The number of steps measured at each gait speed was averaged for each self-tracker in order to calculate the mean number of steps. The error rates were calculated as the actual number of steps measured per minute minus the mean number of steps per minute. To compare the results, we used the following equation to calculate the error percentage at each of the three gait speeds for each participant.
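The equation itself is not reproduced in this text. As a hedged illustration only, the Python sketch below assumes that the error percentage is the absolute difference between the steps per minute in a session and the mean steps per minute at that gait speed, divided by that mean and multiplied by 100. This formula, the function names, and the example counts are assumptions made for illustration, not the authors' confirmed method or data.

```python
from statistics import mean


def steps_per_minute(step_counts, minutes):
    """Convert the step count of each session to steps per minute."""
    return [count / m for count, m in zip(step_counts, minutes)]


def error_percentages(step_counts, minutes=(1, 2, 3)):
    """Assumed formula: |rate - mean rate| / mean rate * 100 for each session."""
    rates = steps_per_minute(step_counts, minutes)
    mean_rate = mean(rates)
    if mean_rate == 0:
        raise ValueError("mean step rate is zero; error percentage is undefined")
    return [abs(rate - mean_rate) / mean_rate * 100 for rate in rates]


# Hypothetical step counts for the 1-, 2-, and 3-minute sessions at one speed.
errors = error_percentages([90, 200, 330])
print(errors)
print(mean(errors))
```

Note that an error defined relative to the device's own mean describes how reproducible the readings are, not how close they are to a manually counted reference.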

Results

Generally, the participants in this study had higher levels of education, mostly above high school level, and 77% of them were married or cohabiting. More than half had a full-time job (37 hours) and exercised once per week. All the participants were very familiar with computers and smartphones, and 68% had access to a tablet device.

Usability

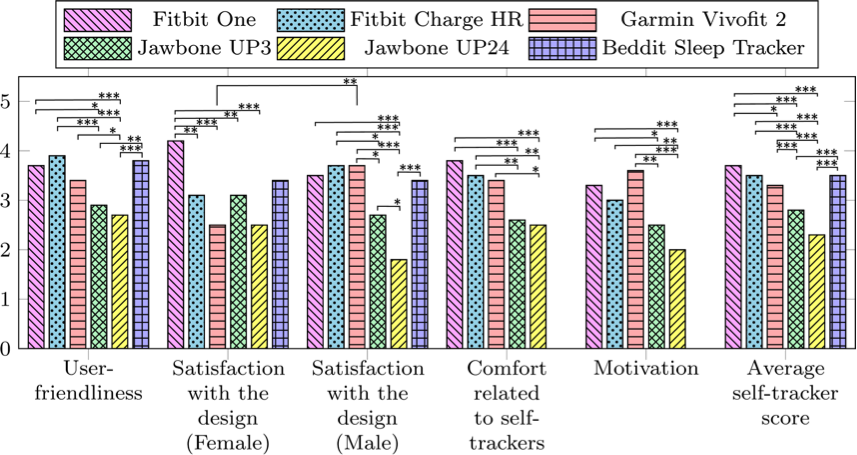

The responses to the questions from the structured interviews were scored from 0 to 5 and evaluated for each self-tracker. Figure 1 shows the mean score of each tracker within the five usability areas and an average score of each self-tracker.

Figure 1. Bar graph showing the results from the structured interviews for each self-tracker. The scores range from 0 to 5, where 0 indicates lowest degree of satisfaction and 5 indicates the highest degree of satisfaction.

The devices were evaluated on five aspects, as shown in Figure 1 along the horizontal axis. The vertical axis represents the mean scores from the Likert scales of the 22 participants’ responses. The structured interview related to the Beddit Sleep Tracker did not include “Comfort related to self-trackers” or “Motivation.” The “Average self-tracker score” bars show the average score from all other categories. Significant differences between the self-tracking devices were evaluated using a two-way ANOVA followed by Tukey’s honest significant difference (HSD) test and are illustrated as bridges in the figure. The bridges are marked according to significance level by *p < .05, **p < .01, and ***p < .001. Significant differences between female and male responses related to “Satisfaction with design” are also shown and were evaluated with the same method.

The Fitbit Charge HR, Fitbit One, Garmin Vivofit 2, and Beddit Sleep Tracker had the highest mean scores for user-friendliness. Significant differences in user-friendliness were generally found between the highest-rated trackers and the Jawbone UP3 and Jawbone UP24. The Fitbit One had the highest mean score for design (female) with significant differences to all other tracking devices except the Beddit Sleep Tracker. For the mean design score, females rated the Garmin Vivofit 2 higher compared to the males. Otherwise, no significant differences were found between female and male satisfaction with design. Male participants scored the Jawbone UP24 significantly lower than all other self-tracking devices. The Fitbit One and the Fitbit Charge HR were scored significantly higher on comfort than the Jawbone UP3 and UP24. The Fitbit One, Fitbit Charge HR, and Garmin Vivofit 2 scored highest in the motivation category, with significant differences to the Jawbone UP3 and Jawbone UP24. The Fitbit One, Fitbit Charge HR, Beddit Sleep Tracker, and the Garmin Vivofit 2 received the highest average self-tracker scores, significantly higher than the Jawbone UP3 and the Jawbone UP24, F(5, 1696) = 32.89, p < 0.001. Results from the structured interviews show that participants generally gave higher user-friendliness and motivation scores to those devices that included a display.

Usability Comment Analysis

Additional comments from the structured interviews were analyzed and grouped into three themes, subdivided into 11 corresponding sub-themes. Along with the grouping of the comments, the sentiment of each comment was also graded. To increase consistency, the comments were analyzed by three researchers independently. The designated grouping and overall sentiment of each comment were accepted if at least two researchers agreed. Examples of comments related to each self-tracker are shown in Table 1.
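The two-of-three agreement rule can be sketched as a simple majority vote. The function name `consensus` and the example labels are hypothetical, and the sketch assumes that a comment without a majority is left unresolved, which the text does not specify.

```python
from collections import Counter


def consensus(labels):
    """Return the label chosen by at least two of three raters, or None if all differ."""
    label, count = Counter(labels).most_common(1)[0]
    return label if count >= 2 else None


# Hypothetical codings of one comment by three researchers.
print(consensus(["Comfort", "Comfort", "Appearance"]))
print(consensus(["Comfort", "Appearance", "Hygiene"]))
```

The same function can be applied separately to the sub-theme and to the sentiment (positive or critical) assigned to each comment.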

Table 1. Examples of User Comments About the Individual Self-Trackers

| Trackers | Positive comments | Critical comments |
| --- | --- | --- |
| Garmin Vivofit 2 | “it functioned well” and “looks like a watch” | “could not operate display” |
| Fitbit One | “simple and discreet design” | “difficult when wearing a dress” and “wrist sleeve can be uncomfortable” |
| Fitbit Charge HR | “the best of the trackers” and “looks like a watch” | “begins to smell quickly,” “slightly uncomfortable,” and “larger display would make it prettier” |
| Jawbone UP3 | “smart without a display” | “annoying locking mechanism,” “rivets are unpleasant,” and “gave red marks” |
| Jawbone UP24 | “smart without a display” | “bothersome when typewriting” and “clumsy” |
| Beddit Sleep Tracker | “I hardly noticed the tracker” | “annoying with wires when sleeping” and “needs longer band” |

Note: N = 22 participants commenting on each of six tracking devices

Table 2 shows the evaluations of each self-tracker and the number of associated positive or critical comments within each theme and subtheme. These comments were analyzed by placing them in one of nine identified sub-themes and grading them by type of sentiment (positive or critical).

Table 2. Categorization of the Comments

| Themes | Sub-themes | Garmin Vivofit 2 | Fitbit One | Fitbit Charge HR | Jawbone UP3 | Jawbone UP24 | Beddit Sleep Tracker |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Overall impression | | ↑3 | | ↑4 | | | |
| User experience | | | | | | | |
| | Comfort | ↓1 | ↓1 | ↓1 | | ↑1 ↓3 | ↑1 ↓2 |
| | Skin irritation | | | | ↓2 | | ↓1 |
| | Appearance | ↑1 ↓1 | ↑1 ↓1 | ↑1 | ↓1 | ↓3 | |
| | Hygiene | | | ↓1 | ↓1 | | |
| | Stigma | ↑2 | | ↑1 | | | |
| | Summary | ↑3 ↓2 | ↑1 ↓2 | ↑2 ↓2 | ↓4 | ↑1 ↓6 | ↑1 ↓3 |
| Functionality | | | | | | | |
| | Improvement | ↓3 | | ↓1 | | ↓1 | ↓3 |
| | Locking / Wearing | | ↑1 ↓4 | | ↓6 | ↓1 | ↑1 |
| | Adjustable size | | | | | ↓2 | |
| | Display and menu | ↑1 ↓3 | ↓2 | ↑1 | ↓2 | ↑1 ↓3 | |
| | Summary | ↑1 ↓6 | ↑1 ↓6 | ↓6 | ↓8 | ↑1 ↓7 | ↑1 ↓3 |

Note: Categorization of the comments recorded during the structured interview, grouped into themes and sub-themes. Critical comments include a down arrow (↓) and positive comments are marked with an up arrow (↑). All categorized comments are summarized under each of the three themes.

Step Count Precision

Because the Beddit Sleep Tracker does not measure steps, only five of the six devices were evaluated in the treadmill exercise at three different walking speeds.

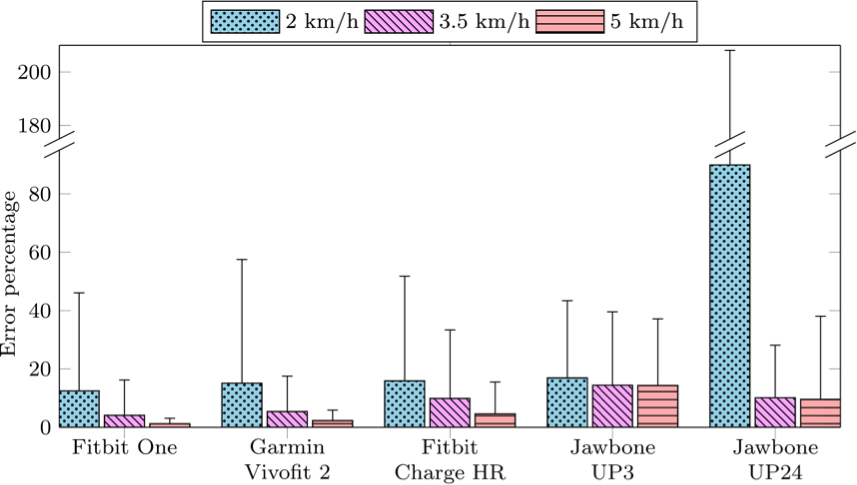

Figure 2. Bar graph illustrates the results of step count precision. The horizontal axis shows five included wireless self-trackers. The vertical axis shows the mean error percentage of the step count relative to the average step count at the designated walking speeds of 2 km/h, 3.5 km/h, and 5 km/h. Whiskers on the figure show the 95% confidence interval.

At gait speeds of 2 km/h, the self-trackers’ ability to reproduce the estimated step count is generally lower than at gait speeds above 3.5 km/h. At gait speeds of 2 km/h, the Jawbone UP24 had the lowest reproducibility. For all self-trackers except the Jawbone UP24, the percentage error was below 20% at all gait speeds. The two Jawbone self-trackers showed the highest percentage of errors at all gait speeds.

Discussion

The aim of this study has been to evaluate the usability of wireless self-tracking devices in a telerehabilitation program for HF patients.

Research into the usability of wireless self-trackers, although a new area, has received increasing interest as the use of self-trackers has now entered healthcare systems. Several studies have evaluated the capabilities of self-tracking devices for measuring heart rate, sleep, energy expenditure, and step accuracy. However, further evidence on the usability of self-tracking devices is needed for successful implementation in telerehabilitation. In the present study of six devices, four of them (the Fitbit One, Fitbit Charge HR, Beddit Sleep Tracker, and Garmin Vivofit 2) received the most positive comments from users and were generally evaluated significantly higher than the Jawbone UP3 and UP24 devices in the structured interviews.

Previous studies have investigated the usability and desirability of the Fitbit One and Jawbone UP24, and in line with the results from our present study, Pfannenstiel and Chaparro (2015) found the Fitbit One to be evaluated significantly higher than the Jawbone UP24. These results may reflect the fact that the Fitbit One was equipped with a digital display, as Pfannenstiel and Chaparro (2015) found that the most highly rated self-tracking devices were those with a digital display. However, results from our study also show that the male participants scored the Garmin Vivofit 2 significantly higher than the female participants, which may indicate a need for more customized solutions. The optimal choice of devices for telerehabilitation may certainly differ from person to person. Many self-trackers are beginning to include aesthetics and user modifications into their design, an in-line innovation that could meet the needs for more customized solutions. The Jawbone UP3 and UP24 models received the lowest average tracker score, and the comments show that the two Jawbone self-trackers were criticized for cumbersome “locking/wearing” and lack of “adjustable size.”

The walking test was set up to investigate the step count precision of five self-trackers. Results from these tests reflect how well the individual devices could reproduce their measurements during different gait speeds. In our study, all the devices showed error percentages of less than 14.3% in the 5 km/h walking test. A similar approach has been used by Ehrler, Weber, and Lovis (2016) who showed that at 5 km/h, the self-trackers’ error percentages were below 15% in older adults (Kooiman et al., 2015). However, our study also indicates that the errors generally increase with a reduction of walking speed. Previous studies have expressed concern about increased errors in estimated step count and distance travelled during use of self-trackers in slow-walking populations (Crouter, Schneider, Karabulut, & Bassett, 2003; Huang, Xu, Yu, & Shull, 2016). Even though the self-trackers used in these studies differed from those used in our study, making comparison of results difficult, these findings may point towards step counts generated by self-monitoring devices being less suited for use in telerehabilitation of extremely slow-walking populations.

One limitation of this study is the use of healthy volunteers instead of a target population of telerehabilitation patients. However, results from the usability evaluation and the walking tests correspond with previous studies on elderly and rehabilitation patients (Harrison et al., 2013; Mercer, Giangregorio, et al., 2016; Thorup et al., 2016), indicating that the usability of these devices may be dependent, at least to some extent, on the technology rather than the target population. Although evaluation of usability and desirability of self-trackers has been the focus of this and previous studies (Kim, 2014; Kooiman et al., 2015; Sousa, Leite, Lagido, & Ferreira, 2014; Tully et al., 2014), the usability of specific devices in telerehabilitation and in relation to daily use still needs further investigation (Mercer, Giangregorio, et al., 2016). This study is also limited by the fact that the usability evaluations were based on only 48 hours of use. This short period of time cannot reveal anything about potential longer-term benefits or problems with the self-tracking devices, including battery life, maintenance and cleaning of the devices, and updates of devices and apps. The motivation for long-term use is an essential element in the successful use of self-trackers in telerehabilitation. Compared to similar studies of usability of self-trackers, periods of a few days up to three months have been used (Kim, 2014; Mercer, Giangregorio, et al., 2016).

Recommendations

The need for more tailored telerehabilitation solutions has been recommended throughout the literature. Nevertheless, many solutions continue to rely on the one-size-fits-all model when applying technology in these programs. For future research, it would be useful to investigate the usability of self-tracking devices in different segments of the population (e.g., male/female, old/young). This will help to further evaluate the application of self-tracking devices in individual patient-tailored solutions.

Conclusion

The Fitbit One, Fitbit Charge HR, and Beddit Sleep Tracker received on average the highest user evaluations. The walking tests show a step count error percentage of < 14.3% at 5 km/h for all self-tracking devices, but at the slower gait speeds of 3.5 km/h and 2 km/h, the error percentages increased. The Fitbit One and Garmin Vivofit 2 performed with the lowest error percentage at all gait speeds. Based on our findings, the Fitbit One and Fitbit Charge HR are the best suited self-trackers for telerehabilitation. Further studies into the long-term usability of self-trackers in a telerehabilitation population need to be carried out.

Tips for Usability Practitioners

Here we offer some suggestions for usability practitioners on key issues to consider when selecting self-tracking technologies for use in a telerehabilitation context:

- Before selecting the self-tracking device that will be used, consider the walking speed of the population and keep in mind the estimated error percentages that are associated with the self-tracking devices you select.

- Give prospective users freedom of choice between the different kinds of self-tracking technologies. A one-size-fits-all solution does not always take into account the needs of the individual patients.

- Be thorough when explaining how to use the self-tracking device to your participants.

Acknowledgements

The authors wish to thank Aalborg University and Aage and Johanne Louis-Hansens Foundation for funding this study.

References

Albert, N. M., Dinesen, B., Spindler, H., Southard, J., Bena, J. F., Catz, S., … Nesbitt, T. S. (2016). Factors associated with telemonitoring use among patients with chronic heart failure. Journal of Telemedicine and Telecare, 23(2), 283–291. http://doi.org/10.1177/1357633X16630444

Alharbi, M., Straiton, N., & Gallagher, R. (2017). Harnessing the potential of wearable activity trackers for heart failure self-care. Current Heart Failure Reports, 14(1), 23–29. http://doi.org/10.1007/s11897-017-0318-z

Andalibi, V., Honko, H., Christophe, F., & Viik, J. (2015). Data correction for seven activity trackers based on regression models. Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, 15, 1592–1595. http://doi.org/10.1109/EMBC.2015.7318678

Boyne, J. J. J., Vrijhoef, H. J. M., Crijns, H. J. G. M., De Weerd, G., Kragten, J., & Gorgels, A. P. M. (2012). Tailored telemonitoring in patients with heart failure: Results of a multicentre randomized controlled trial. European Journal of Heart Failure, 14(7), 791–801. http://doi.org/10.1093/eurjhf/hfs058

Browning, R. C., Baker, E. A., Herron, J. A., & Kram, R. (2006). Effects of obesity and sex on the energetic cost and preferred speed of walking. Journal of Applied Physiology (Bethesda, Md. : 1985), 100(2), 390–8. http://doi.org/10.1152/japplphysiol.00767.2005

Chaudhry, S. I., Mattera, J. A., Curtis, J. P., Spertus, J. A., Herrin, J., Lin, Z., … Krumholz, H. M. (2010). Telemonitoring in patients with heart failure. New England Journal of Medicine, 363(24), 2301–2309. http://doi.org/10.1056/NEJMoa1010029

Clemensen, J., Rothmann, M. J., Smith, A. C., Caffery, L. J., & Danbjorg, D. B. (2016). Participatory design methods in telemedicine research. Journal of Telemedicine and Telecare, 23(9), 780–785. http://doi.org/10.1177/1357633X16686747

Creber, R. M. M., Hickey, K. T., & Maurer, M. S. (2016). Gerontechnologies for older patients with heart failure: What is the role of smartphones, tablets, and remote monitoring devices in improving symptom monitoring and self-care management? Current Cardiovascular Risk Reports, 10(30). http://doi.org/10.1007/s12170-016-0511-8

Crouter, S. E., Schneider, P. L., Karabulut, M., & Bassett, D. R. (2003). Validity of 10 electronic pedometers for measuring steps, distance, and energy cost. Medicine and Science in Sports and Exercise, 35(8), 1455–1460. http://doi.org/10.1249/01.MSS.0000078932.61440.A2

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319. http://doi.org/10.2307/249008

Ehrler, F., Weber, C., & Lovis, C. (2016). Positioning commercial pedometers to measure activity of older adults with slow gait: At the wrist or at the waist? Studies in Health Technology and Informatics, 221, 18–22. http://doi.org/10.3233/978-1-61499-633-0-18

Evenson, K. R., Goto, M. M., & Furberg, R. D. (2015). Systematic review of the validity and reliability of consumer-wearable activity trackers. International Journal of Behavioral Nutrition and Physical Activity, 12(1), 159. http://doi.org/10.1186/s12966-015-0314-1

Harrison, S. L., Horton, E. J., Smith, R., Sandland, C. J., Steiner, M. C., Morgan, M. D. L., & Singh, S. J. (2013). Physical activity monitoring: Addressing the difficulties of accurately detecting slow walking speeds. Heart and Lung: Journal of Acute and Critical Care, 42(5), 361–364. http://doi.org/10.1016/j.hrtlng.2013.06.004

Hickey, A. M., & Freedson, P. S. (2016). Utility of consumer physical activity trackers as an intervention tool in cardiovascular disease prevention and treatment. Progress in Cardiovascular Diseases, 58(6), 613–619. http://doi.org/10.1016/j.pcad.2016.02.006

Holden, R. J., & Karsh, B.-T. (2010). The technology acceptance model: Its past and its future in health care. Journal of Biomedical Informatics, 43(1), 159–172. http://doi.org/10.1016/j.jbi.2009.07.002

Honko, H., Andalibi, V., Aaltonen, T., Parak, J., Saaranen, M., Viik, J., & Korhonen, I. (2016). W2E-wellness warehouse engine for semantic interoperability of consumer health data. IEEE Journal of Biomedical and Health Informatics, 20(6), 1632–1639. http://doi.org/10.1109/JBHI.2015.2469718

Hootman, J. M. (2009). 2008 Physical activity guidelines for Americans: An opportunity for athletic trainers. Journal of Athletic Training, 44(1), 5–6. http://doi.org/10.4085/1062-6050-44.1.5

Huang, Y., Xu, J., Yu, B., & Shull, P. B. (2016). Validity of Fitbit, Jawbone UP, Nike+ and other wearable devices for level and stair walking. Gait and Posture, 48, 36–41. http://doi.org/10.1016/j.gaitpost.2016.04.025

Inglis, S. (2015). Telemonitoring in heart failure: Fact, fiction, and controversy. Smart Homecare Technology and TeleHealth, 3, 129. http://doi.org/10.2147/SHTT.S46741

Kim, J. (2014). Analysis of health consumers’ behavior using self-tracker for activity, sleep, and diet. Telemedicine Journal and E-Health: The Official Journal of the American Telemedicine Association, 20(6), 552–558. http://doi.org/10.1089/tmj.2013.0282

Kooiman, T. J. M., Dontje, M. L., Sprenger, S. R., Krijnen, W. P., van der Schans, C. P., & de Groot, M. (2015). Reliability and validity of ten consumer activity trackers. BMC Sports Science, Medicine and Rehabilitation, 7(1), 24. http://doi.org/10.1186/s13102-015-0018-5

Kushniruk, A., & Nøhr, C. (2016). Participatory design, user involvement and health IT evaluation. Studies in Health Technology and Informatics, 222(Evidence-Based Health Informatics), 139–151. http://doi.org/10.3233/978-1-61499-635-4-139

Lee, J., & Finkelstein, J. (2015). Consumer sleep tracking devices: A critical review. Digital Healthcare Empowering Europeans, 210, 458–460. http://doi.org/10.3233/978-1-61499-512-8-458

Lyons, E. J., Lewis, Z. H., Mayrsohn, B. G., & Rowland, J. L. (2014). Behavior change techniques implemented in electronic lifestyle activity monitors: A systematic content analysis. Journal of Medical Internet Research, 16(8), e192. http://doi.org/10.2196/jmir.3469

Mercer, K., Giangregorio, L., Schneider, E., Chilana, P., Li, M., & Grindrod, K. (2016). Acceptance of commercially available wearable activity trackers among adults aged over 50 and with chronic illness: A mixed-methods evaluation. JMIR mHealth and uHealth, 4(1), e7. http://doi.org/10.2196/mhealth.4225

Mercer, K., Li, M., Giangregorio, L., Burns, C., & Grindrod, K. (2016). Behavior change techniques present in wearable activity trackers: A critical analysis. JMIR mHealth and uHealth, 4(2), e40. http://doi.org/10.2196/mhealth.4461

Ong, M. K., Romano, P. S., Edgington, S., Aronow, H. U., Auerbach, A. D., Black, J. T., … Fonarow, G. C. (2016). Effectiveness of remote patient monitoring after discharge of hospitalized patients with heart failure: The better effectiveness after transition-heart failure (BEAT-HF) randomized clinical trial. JAMA Internal Medicine, 176(3), 310–318. http://doi.org/10.1001/jamainternmed.2015.7712

Patel, M. S., Asch, D. A., & Volpp, K. G. (2015). Wearable devices as facilitators, not drivers, of health behavior change. JAMA, 313(5), 459. http://doi.org/10.1001/jama.2014.14781

Paul, S., & Sneed, N. V. (2004). Strategies for behavior change in patients with heart failure. American Journal of Critical Care, 13(4), 305–314.

Pfannenstiel, A., & Chaparro, B. S. (2015). An investigation of the usability and desirability of health and fitness-tracking devices. In HCI International 2015 – Posters’ Extended Abstracts (Vol. 529, pp. 473–477). http://doi.org/10.1007/978-3-319-21383-5_79

Piette, J. D., List, J., Rana, G. K., Townsend, W., Striplin, D., & Heisler, M. (2016). Mobile health devices as tools for worldwide cardiovascular risk reduction and disease management. Postgraduate Medicine, 127(2), 734–763. http://doi.org/10.1080/00325481.2015.1015396

Piotrowicz, E., Piepoli, M. F., Jaarsma, T., Lambrinou, E., Coats, A. J. S., Schmid, J. P., … Ponikowski, P. P. (2016). Telerehabilitation in heart failure patients: The evidence and the pitfalls. International Journal of Cardiology, 220, 408–413. http://doi.org/10.1016/j.ijcard.2016.06.277

Prochaska, J. O., & Velicer, W. F. (1997). The transtheoretical change model of health behavior. American Journal of Health Promotion, 12(1), 38–48.

Salah, H., MacIntosh, E., & Rajakulendran, N. (2014). MaRS market insights wearable tech: Leveraging Canadian innovation to improve health. MaRS - Ontario Network of Entrepreneurs, 1–45.

Shameer, K., Badgeley, M. A., Miotto, R., Glicksberg, B. S., Morgan, J. W., & Dudley, J. T. (2017). Translational bioinformatics in the era of real-time biomedical health care and wellness data streams. Briefings in Bioinformatics, 18(1), 105–124. http://doi.org/10.1093/bib/bbv118

Sousa, C., Leite, S., Lagido, R., & Ferreira, L. (2014). Telemonitoring in heart failure: A state-of-the-art review. Portuguese Journal of Cardiology, 33(4), 229–239.

Thorup, C., Hansen, J., Grønkjær, M., Andreasen, J. J., Nielsen, G., Sørensen, E. E., & Dinesen, B. I. (2016). Cardiac patients’ walking activity determined by a step counter in cardiac telerehabilitation: Data from the intervention arm of a randomized controlled trial. Journal of Medical Internet Research, 18(4), e69. http://doi.org/10.2196/jmir.5191

Tully, M. A., McBride, C., Heron, L., & Hunter, R. F. (2014). The validation of Fitbit Zip™ physical activity monitor as a measure of free-living physical activity. BMC Research Notes, 7(1), 952. http://doi.org/10.1186/1756-0500-7-952

Washington, W. D., Banna, K. M., & Gibson, A. L. (2014). Preliminary efficacy of prize-based contingency management to increase activity levels in healthy adults. Journal of Applied Behavior Analysis, 47(2), 231–245. http://doi.org/10.1002/jaba.119

Wortley, D., An, J.-Y., & Nigg, C. (2017). Wearable technologies, health and well-being: A case review. Digital Medicine, 3(1), 11. http://doi.org/10.4103/digm.digm_13_17