Abstract

Voice assistant devices, such as Google Home™ and Amazon Echo™, are at the forefront of natural voice interaction and natural language search because they remove the graphical user interface (GUI) entirely. This user experience study is one of the first to compare information foraging with Google Home against search behaviors with a traditional computer or desktop in a learning environment. We conducted a study (N = 20) to investigate participants’ information foraging and retrieval behaviors and to measure query effectiveness, efficiency, and user satisfaction. Participants were divided into two groups. The experimental group used the Google Home smart speaker to retrieve information for predefined question sets in three categories: research, trivia, and math. The control group answered the same question sets using more traditional technologies, including computers, tablets, cell phones, and calculators.

The results show that participants using voice assistants found the correct answers roughly two times faster than participants using traditional technologies across the research, trivia, and math questions. User satisfaction scores were also higher with voice assistants than with traditional systems. Despite these results, we found that privacy concerns, limited searchable databases, and voice-recognition challenges all limit the adoption and widespread use of voice assistants. These weaknesses must be addressed before voice assistants can fully deliver on their efficiency and satisfaction advantages over traditional systems.

Keywords

human-computer interaction (HCI), voice assistant, Google Home, Amazon Echo, user satisfaction, smart speakers, voice search

Introduction

The use of voice for conversational information foraging is a natural, human mode of interaction. Emerging technologies such as voice interactive systems exploit this naturalness to eliminate the friction of a graphical user interface (GUI) (Demir et al., 2017) and respond to informational queries in a human-like voice. By replacing the GUI with a voice user interface (VUI), voice assistant devices may signal a significant change in human-computer interaction patterns, usability, and user satisfaction during information foraging and retrieval.

A voice assistant device, such as a voice-controlled smart speaker, is a speech recognition technology that provides detailed answers to the user’s informational questions (Martin, 2017). In a student-controlled learning environment, voice assistive devices give students the freedom to learn at their own pace outside the classroom (Kao & Windeatt, 2014). As voice assistant devices become more mainstream, further exploration of this niche in educational technology research has the potential to improve teaching and learning. Among other applications, these devices can help create personalized learning experiences that give students more control and ownership over their learning. They also allow students to ask questions and receive prompt answers during teaching and learning activities. The two most prominent voice assistant devices are Amazon Echo™ and Google Home™, the latter of which we included in this study.

Prior work has found that voice-prompted interaction systems can increase task completion efficiency (Demir et al., 2012). The purpose of this study was to investigate the differences between traditional and voice assistant systems for information foraging and retrieval, as well as the extent to which voice assistive systems influence the efficiency and effectiveness of retrieval in an educational setting. The results point to a new method of information retrieval for students in educational settings. The research questions for this usability study were as follows:

- To what extent do voice assistant systems influence efficiency and effectiveness in information retrieval?

- What are the differences between traditional and voice assistant systems for information seeking?

Background

Previous literature has discussed how information and communication technologies such as the World Wide Web (WWW), GUIs, and mobile devices with internet access dramatically changed information seeking and retrieval behaviors (Baeza-Yates & Ribeiro-Neto, 1999). In this study, we extend that literature to examine the impact of VUIs and voice-activated systems on those behaviors. We draw on information foraging theory, which has emerged as one of the most relevant theories for investigating how people search for information while expending the least time and energy to achieve results. This research is also grounded in usability theory, which examines the effectiveness, efficiency, and subjective satisfaction of a given experience.

This study uses information foraging theory, as described by Pirolli and Card (1999), to understand patterns of information retrieval across traditional and emergent devices. In this theory, the user seeks information by the most efficient and effective means possible. Later iterations of the theory show that an individual’s competency or literacy in a particular topic, as well as their tool literacy, increases the efficiency and effectiveness of any query. Purpose-built information retrieval tools, specialized databases, and personalized search results have improved information foraging and retrieval, leading to better usability.
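Pirolli and Card (1999) express this efficiency goal, borrowing from optimal foraging theory, as a rate of information gain. The conventional patch model is sketched here only to make the idea concrete. Its symbols are the standard ones from that model, not quantities measured in this study:

$$R = \frac{G}{T_B + T_W}$$

Here $R$ is the rate of gain of valuable information per unit cost, $G$ is the total net information gained, $T_B$ is the time spent moving between information patches (for example, between sources or queries), and $T_W$ is the time spent foraging within patches. Under this reading, an interface that shortens the time terms raises $R$ for the same $G$.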

Users search for information in ways that feel natural and familiar to them. According to Bates (1989), the Berrypicking model indicates that search queries evolve: users gather information in bits and pieces rather than in one grand set, and they apply various search techniques across various sources. Bates also suggested creating flexible databases that allow users to adapt their information-seeking behaviors.

Usability theory focuses on the extent to which a product can be used by a set of users to accomplish specific goals within a particular context of use. It has been used to evaluate technologies and GUIs through three key measures: effectiveness, efficiency, and subjective user satisfaction. In this study, we are interested in the usability of traditional technologies versus emergent voice assistant devices for information retrieval in a one-on-one setting. We examined whether these tools can be used in educational settings and what factors enable or prevent their use. Our study accounted for the design of traditional tools versus voice assistant devices, the literacies of the user, and whether users’ perceptions of and attitudes toward these technologies limit or enable their adoption.

Speech is commonly the quickest way of communicating. Some have argued that although mobile phones have improved voice assistants and voice search, many devices still require cumbersome and error-prone typing (Schalkwyk et al., 2010). It is therefore unsurprising that dedicated voice assistant devices have emerged. According to Martin (2017), voice assistant devices have revolutionized technology: in their everyday lives, people can now simply ask a device for the information they want and have it look that information up. In this way, voice assistants enable information retrieval and learning within the fabric of everyday conversation.

Although voice assistant devices are timesaving, easy, convenient, and human-friendly, they also raise concerns. Nelson and Simek (2017) discuss important privacy issues that concern many would-be users. For example, once a device is in use, it records and sends data to the cloud. It retains the user’s personal history data, including past searches. The individual cannot manage or delete this history in the way they could clear an internet browser’s history.

Despite both promise and criticism, voice assistant devices appear to be the way of the future. According to Klie (2017), almost 20% of all mobile searchers use voice search through a voice assistant, and this figure is expected to increase by 50% every year. Klie predicts that voice-activated virtual assistants will eventually be common in every home and office. It is therefore important to analyze and understand this trend and how these technologies will change people’s lives, including their effects on shopping behavior, information-seeking behavior, and other aspects of lifestyle.

Definitions of Informational and Exploratory Queries

For context, we defined the types of queries used in interactions with information retrieval (IR) systems to better understand the relative strengths and weaknesses of desktop versus voice search. Marchionini (2006) defines two categories of IR tasks: lookup tasks, subsequently referred to here as informational tasks, and exploratory tasks. Further studies of search task complexity (Byström, 2002) describe informational search as the most basic kind (Nuhu et al., 2019; White et al., 2009; Kim et al., 2009; Aula et al., 2006). By contrast, exploratory search is defined as multifaceted (Wildemuth et al., 2012; White et al., 2009) and more difficult for the user (White et al., 2009). Additional research into exploratory search behaviors indicates that users behave and adapt differently when the search goal is less precise (Kim et al., 2009; Kim et al., 2001). The same holds when the user is an expert searcher (Jenkins et al., 2003; Saito et al., 2001). During an exploratory search, the user’s tactics change: queries begin in the conceptualization process and later focus on specific topics (White et al., 2009; Marchionini, 2006). As topics narrow and the user’s knowledge increases, queries may narrow or broaden (Sutcliffe et al., 2000; Rouet, 2013; Vakkari, 1999). Although exploratory search is increasingly important (Kules et al., 2008), it is difficult for IR systems to support (White et al., 2009). However, a variety of approaches have emerged to support it, including new user interfaces (Alonso et al., 2008) and studies of user behavior (Athukorala et al., 2014; Athukorala et al., 2013; Alonso et al., 2008).

Voice versus Desktop Search Usability

Depending on the interface and the type of search being conducted, the usability of an IR system may vary tremendously and become multifaceted. As several studies point out, voice assistants are embedded in a variety of devices and interfaces (Ghosh et al., 2018; Moussawi, 2018). The complexity of these varied interfaces, modalities of interaction, and information foraging activities must therefore be considered carefully. Studies of voice search behavior (Xing et al., 2019) have shown that voice search has high usability for particular tasks such as local and contextual informational lookup (Feng et al., 2011; Guerino et al., 2020; Ji et al., 2018). Usability also depends on the device’s context. Voice search on a phone is often done on the go (Feng et al., 2011). Voice search via a smart speaker, by contrast, is mainly done at home during daily activities and within the family dynamic, where conversation may trigger a search (Brown et al., 2015). The System Usability Scale (SUS) is a familiar instrument for evaluating the usability of everyday products (Kortum et al., 2013). However, voice assistants combine sensory, physical, and functional device qualities with artificial intelligence (AI), which complicates these measures (Moussawi, 2018; Ghosh et al., 2018). Compared with voice search on mobile or smart speaker devices, desktop search tends to be more effective and efficient, although this depends on task complexity (Vityurina et al., 2020). Complexity may include the user’s own challenges, such as second-language errors in query formation (Pyae et al., 2019) and timing issues in query reformulation (Jiang et al., 2013; Awadallah et al., 2015; Sa et al., 2019). It may also include contextual factors such as background noise or privacy concerns, both in public places like a busy street (Robinson et al., 2018) and in moderately private places like the home (Pridmore et al., 2020). In the classroom, privacy, background noise, and task complexity must all be considered as part of the student user’s experience.

Voice versus Desktop Search User Satisfaction

Voice search queries include both exploratory and informational searches conducted through conversational, or natural language, search. This new way of searching presents both advantages and challenges for the user and the IR system, which many studies have attempted to define (Thomas et al., 2018; Radlinski et al., 2017; Trippas et al., 2017). According to research by several groups, context and medium affect the complexity of search tasks undertaken with desktop and voice search IR systems (Guy, 2018; Yankelovich et al., 1995). This complexity increases the cognitive load placed on the user (Murad et al., 2018) and may influence the user’s preference for, and satisfaction with, voice-based versus desktop IR systems (Demberg, 2011). Task completion (Schechtman et al., 2003), surveys, and interviews (Purington et al., 2017; Luger et al., 2016) are among the most common means of evaluating user satisfaction with IR systems. Many studies have examined user satisfaction with voice assistants in lab contexts (Purington et al., 2017; Luger et al., 2016). Others have sought to understand the impact of context on user satisfaction, focusing on the experience of users in the home while accomplishing domestic routines (Ammari et al., 2020; Mennicken et al., 2012; Bell et al., 2002; Crabtree et al., 2004). These studies also consider other actors in the home as well as technology affordances (Mennicken et al., 2012; Poole et al., 2008). Additional studies have examined how users perceive voice assistants and the conversational search experience (Sa, 2020; Porcheron et al., 2017). To understand user satisfaction with voice-activated systems in an educational setting, similar studies of context and medium must be conducted.

Smart Speakers and Voice Search in Educational Settings

Smart speakers and voice-activated assistants are moving into the homes and everyday lives of individuals and families (Brown et al., 2015; Terzopoulos et al., 2019; Ammari et al., 2019; Seo et al., 2020). They are also moving into traditional information retrieval contexts such as libraries (Williams, 2019; Lopatovska et al., 2018), K-12 classrooms (Crist, 2019; Lieberman, 2020), and college classrooms (Winkler et al., 2019; Lopatovska et al., 2018; Gose, 2016). Typically, smart speakers and voice-activated assistants act as assistants paired with the teacher (Shih et al., 2020; Gose, 2016). To some extent, they also replace staff in public areas, such as help, welcome, and reference desks in school libraries and office reception areas (Shih et al., 2020; Lopatovska et al., 2019). For the most part, studies of these educational uses focus on individual use. However, some are beginning to examine the role a smart speaker or voice-activated assistant might play in group work in the classroom (Winkler et al., 2019). Still, smart speakers remain predominantly used for local information seeking, with most queries relating to weather, addresses, event times, and other fast retrieval tasks (Ammari et al., 2019; Williams, 2019; Bentley et al., 2018). Despite this typical usage pattern, the shift to remote education during the 2020 pandemic stretched the applications of smart speakers and voice-activated assistants, at least at the K-12 level. They were used to supplement online classes, help with homework, and help both teachers and parents answer student questions (Emerling et al., 2020; Lieberman, 2020; Saíz-Manzanares et al., 2020). Although usage increased at the K-12 level, the extent to which these systems are being rolled out on college campuses or in university online classrooms is unclear.

Privacy and Personalization Challenges

A major challenge to the ubiquity of smart speakers and voice-activated assistants in educational or similar public settings is student and individual privacy (Herold, 2018; Ammari et al., 2019; Malkin et al., 2019; Richards, 2019). Multiple studies have shown that privacy is a real concern because logs of user history are saved (Lau et al., 2018; Pfeifle, 2018; Neville, 2020). The legal ramifications for device manufacturers have also made public institutions far less likely to deploy such technologies or to articulate and understand the associated privacy concerns (Pfeifle, 2018). At the same time, the promise of these technologies should not be understated, given the gains in information retrieval usability and user satisfaction discussed previously for certain types of tasks. Engineered personalization may both answer and further complicate the privacy challenge. Examples include fingerprinting (Das et al., 2014), continuous authentication (Feng et al., 2017), speech discrimination (Nozawa et al., 2020), and design solutions (Bentley et al., 2018; Kompella, 2019; Yao et al., 2019). The extent to which personalizing these devices in educational settings may alleviate privacy concerns remains to be studied.

Research Significance

Voice assistant devices have natural language understanding and allow for personalized learning that supplements and aids learning both in and out of the classroom. Users may ask questions and then narrow their queries conversationally, democratizing learning for the average user. Students seeking basic skill assistance without teacher support may use such devices to get their questions answered promptly. Despite the advantages voice assistant devices offer in next-generation human-computer interaction, they have yet to be applied in any significant way to advancing teaching and learning. This research is one of the first studies to evaluate how voice assistants can be used to advance teaching and learning. The purpose of this research is (1) to observe how students retrieve information through Google Home and (2) to compare its efficiency, effectiveness, and satisfaction rates with those of traditional methods of information retrieval, such as computers and cell phones.

Methods

This study adopted a mixed-methods approach, using quantitative and qualitative methodologies to analyze the effectiveness, efficiency, and user satisfaction of traditional and voice assistant technologies for information foraging and retrieval. We collected quantitative performance data and compiled a detailed account of each participant’s user experience.

The study laid the groundwork for a better understanding not only of the usability of each device but also of each user’s individual experience with either voice-assisted or traditional devices, such as a computer or cell phone. It also investigated the advantages and limitations of traditional versus emergent voice technologies to determine which option best accommodates student learning.

A moderated, in-person user experience case study was conducted to investigate how users interact with desktop computers, cell phones, or calculators for information retrieval and calculation tasks compared with Google Home. The research took place from March to April 2019, before the COVID-19 pandemic of 2020; therefore, there were no restrictions on face-to-face meetings at the time of data collection. The researchers had previously searched for every question in the set on both the emergent and the traditional devices to ensure that the answers were reachable for both groups. Each participant was given a task sheet and asked to find the answers to the questions. Exploratory (research) questions included “What is the chemical formula of water?” and “What branch of zoology studies insects?” Informational (trivia) questions included “How many U.S. states border the Gulf of Mexico?” and “Norway, Sweden, Finland, Denmark, and Iceland are also known as what?” The final category consisted of mathematical questions such as “What is the square root of 256?” and “What is the least common multiple (LCM) of 4 and 11?” There were three sets of 10 questions each, for a total of 30 questions. The task completion success rate (effectiveness) and task completion time (efficiency) were measured. A post-test interview session provided qualitative data on how each user felt about the device they used. The industry-standard SUS survey was given to participants after they completed all the tasks to measure their overall experience. The SUS is the most common tool for quantitatively measuring a system’s usability (Tullis & Albert, 2013).

Each usability test was conducted face-to-face on a one-on-one basis. A Google Home device for the experimental group and a desktop computer for the control group were set up before the scheduled test time. Control group participants were also allowed to use their cell phones to complete the predefined tasks. The experimental group received brief verbal instructions on using Google Home and could practice with a couple of questions and answers without a time limit. A camcorder was used to record each usability test session, and a phone stopwatch was used to time task completion. Task completion time is a common efficiency measure in UX studies.

A semi-structured interview with the participants who had used Google Home for their information retrieval tasks followed the usability testing. The interview sessions were recorded and transcribed for data analysis, and quotations from the interviews were used to interpret the themes identified.

Participant Demographics

A total of 20 college students from a state university in the Midwestern U.S. participated in the study. Recruitment purposefully targeted participants with no previous experience with voice assistant devices. Of the 20 participants, 15 were doctoral students and 5 were master’s students. The participants comprised 10 males and 10 females ranging from 23 to 52 years of age (M = 35, Mdn = 37). All gave full consent to participate. In terms of racial makeup, 7 participants identified as Asian American, 4 as African American or Black, 1 as Latin American or Hispanic, and 8 as European American or White. All participants self-reported previous experience using computers: 9 rated themselves advanced, 10 intermediate, and 1 a beginner. Of the 20 participants, 16 had no previous experience with voice assistant systems, and only 4 reported previous usage.

The participants were randomly assigned to two groups. The experimental group of 10 participants used Google Home to complete the task set, and the control group of 10 participants used a traditional device to complete the same task set. According to the literature, 5 participants are sufficient to identify major and moderate usability problems (Nielsen, 1993; Demir et al., 2012).
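The five-participant guideline is usually justified with Nielsen and Landauer’s problem-discovery model. That model assumes each participant independently uncovers a fixed proportion L of the usability problems. The sketch below assumes this model and the average L ≈ 0.31 that Nielsen has reported. Neither value was measured in this study, and the function name is illustrative.

```python
def proportion_found(n_users: int, detection_rate: float = 0.31) -> float:
    """Expected share of usability problems uncovered by n_users participants.

    Assumes the Nielsen-Landauer model: each participant independently finds
    a fraction `detection_rate` of the problems, so the share still missed
    after n participants is (1 - detection_rate) ** n.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1 - (1 - detection_rate) ** n_users


for n in (1, 5, 10):
    print(f"{n:>2} participants: {proportion_found(n):.0%} of problems expected")
```

Under these assumptions, five participants are expected to surface about 84% of the problems, and ten participants (the size of each group here) about 98%.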

Participants in the experimental group self-reported spending at least 2 hours per day seeking information on the internet, but they had never used a smart speaker before the experiment. Participants in the control group also self-reported spending at least 2 hours per day seeking information on the internet and described themselves as intermediate-level computer users. Of the 10 participants in each group, 6 in the control group and 5 in the experimental group were non-native English speakers.

Procedure

Participants were recruited through snowball sampling, in which potential participants and their acquaintances were invited to participate in the study at a Midwestern college in the U.S. Those who agreed to participate were randomly assigned to either the experimental or the control group, and a time and location convenient to them was arranged. On arrival at the test session, they were greeted and allowed to get comfortable. The purpose of the study was explained, and each participant signed a consent form and filled out a demographic questionnaire. The task sheet with 30 questions was then presented to the participant, who was asked to complete the tasks at their own pace. Participants were told that they could say aloud when they were unable to complete a question and then move on to the next one. After completing all the tasks, participants were asked several post-test questions in a semi-structured interview for qualitative feedback. They were also invited to share openly any thoughts or recommendations about the session (Barnum, 2010). The usability test sessions were recorded with a camcorder for further analysis. The task completion success rate (effectiveness), task completion time (efficiency), and each user’s personal experience were collected during the session. The SUS survey provided quantitative data on user satisfaction.

Data Analysis

After each test session, the data, including effectiveness, efficiency, and SUS scores, were recorded in an Excel™ file. The effectiveness (task completion success rate) and efficiency (task completion time) data were tabulated, and averages were calculated for both sets. Responses to the SUS, a 10-item Likert-type questionnaire, were converted into an overall SUS score. To analyze the qualitative data, notes were taken during the live sessions and during playback of the video recordings. These notes added keywords, flagged emotions that participants expressed during the sessions, and highlighted key themes that arose from the post-test questions.
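The SUS score mentioned here is normally computed with Brooke’s standard scoring rule. Each odd-numbered (positively worded) item contributes its response minus 1, and each even-numbered (negatively worded) item contributes 5 minus its response. The summed contributions are then multiplied by 2.5 to give a score from 0 to 100. The sketch below makes two assumptions: responses are recorded on the usual 1 to 5 scale, and a group’s overall score is the mean of its participants’ scores. The function names and sample responses are hypothetical, not study data.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one participant's ten SUS responses (each 1-5) on a 0-100 scale."""
    if len(responses) != 10:
        raise ValueError("the SUS has exactly 10 items")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each response must be between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items are positively worded
        else:
            total += 5 - response  # even items are negatively worded
    return total * 2.5


def overall_sus(all_responses: list[list[int]]) -> float:
    """Mean SUS score across participants."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical responses from two participants (not data from this study).
example = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],  # best possible answers -> 100.0
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],  # -> 75.0
]
print(overall_sus(example))  # 87.5
```

Reverse-scoring the even items is what makes a response of 1 on a negatively worded item count as favorably as a 5 on a positively worded one.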

Results

Efficiency Rates

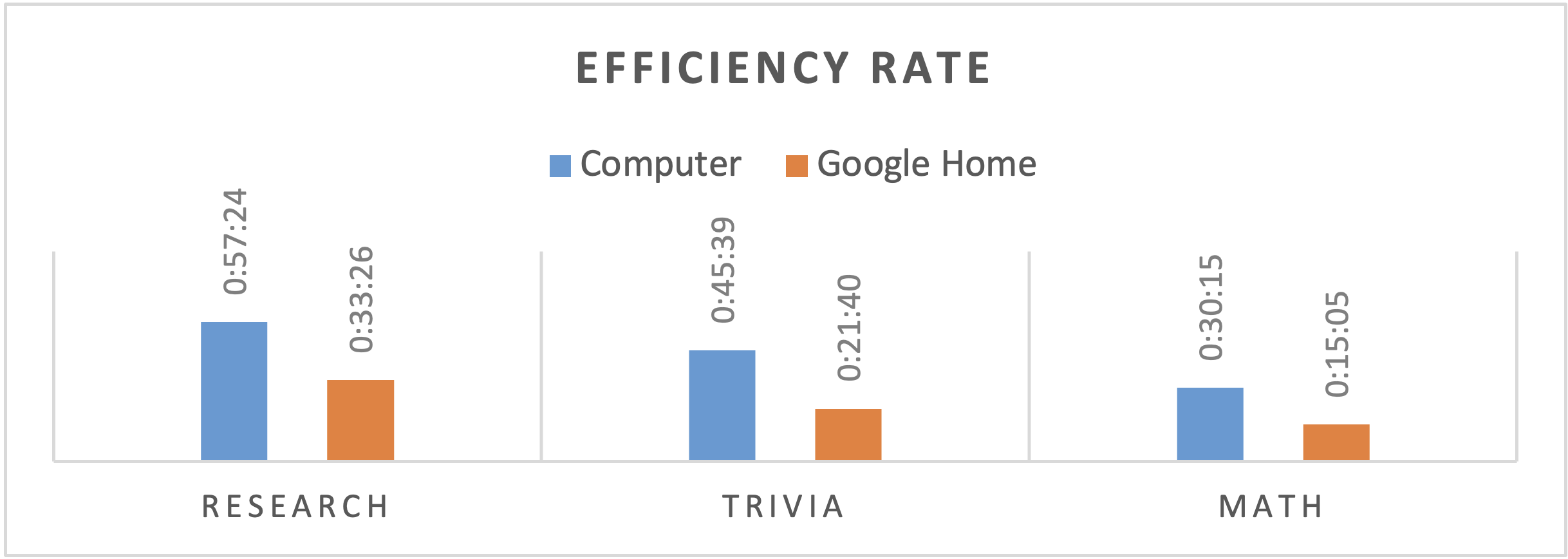

Timing began when the user read or voiced the question. Google Home users who had to repeat a question took longer as a result. Figure 1 shows the total time it took all 10 participants to complete each category, comparing the desktop computer with Google Home. The average time to complete all the questions using a desktop computer or cell phone was 44 min 43 s, whereas the average time using Google Home was 23 min 39 s.

Figure 1. Efficiency rate and total completion time for each category.

It is no surprise that information retrieval was quicker using Google Home than a desktop computer, since it is generally quicker to voice a sentence than to type it. Figure 2 shows that the average completion time for each category was shorter using Google Home.

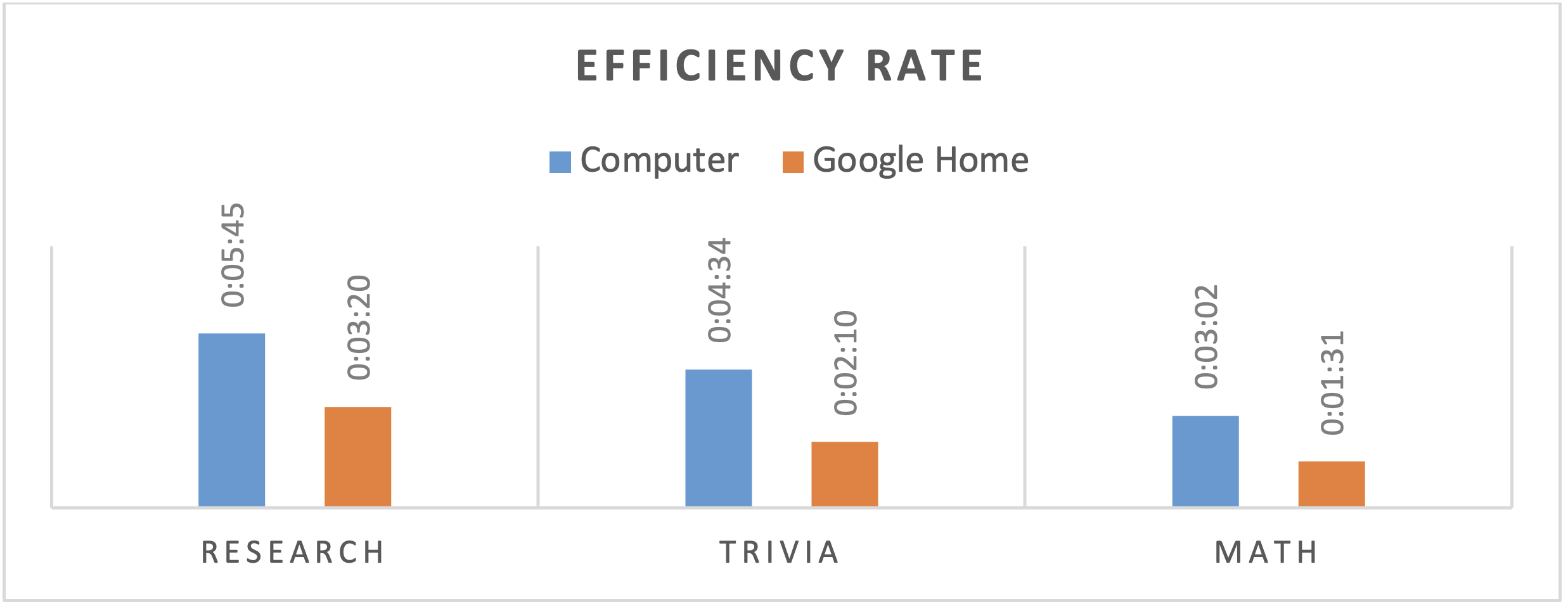

Figure 2. Efficiency rate and average completion time for each category.

Efficiency was measured as the time (in seconds) taken to complete each of the three groups of tasks. On average, the control group took 5 min 46 s to answer all 10 research questions, whereas the experimental group took 3 min 20 s to finish the same task. The difference was statistically significant (p = 0.001), indicating that Google Home users (the experimental group) completed research-related questions in significantly less time than computer users (the control group).

Regarding the trivia questions, the control group had a mean of 4 min 33 s for completing the trivia questions, whereas the experimental group had a mean of 2 min 10 s. The difference in task completion time was statistically significant (p = 0.011), indicating that Google Home users completed trivia questions in a significantly shorter amount of time compared to computer users.

For math questions, the results show that the control group answered math questions in 3 min 1 s on average, whereas it took only 1 min 30 s on average for the experimental group to finish the same task. The difference in task completion time was statistically significant (p = 0.001), indicating that Google Home users completed math questions in a significantly shorter amount of time compared to computer users.
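The speed-up implied by the mean completion times reported above can be checked directly. This small sketch converts each reported mean from minutes and seconds to seconds. It then divides the control-group mean by the experimental-group mean. The helper names are illustrative.

```python
# Mean completion times per category as reported in the text: (control, Google Home).
REPORTED_MEANS = {
    "research": ("5:46", "3:20"),
    "trivia": ("4:33", "2:10"),
    "math": ("3:01", "1:30"),
}


def to_seconds(mm_ss: str) -> int:
    """Convert a 'minutes:seconds' string to whole seconds."""
    minutes, seconds = mm_ss.split(":")
    return int(minutes) * 60 + int(seconds)


def speedups(means: dict[str, tuple[str, str]]) -> dict[str, float]:
    """Ratio of control time to Google Home time for each category."""
    return {
        category: to_seconds(control) / to_seconds(voice)
        for category, (control, voice) in means.items()
    }


for category, ratio in speedups(REPORTED_MEANS).items():
    print(f"{category}: {ratio:.2f}x")
```

With the reported means, the ratios are about 1.73 for research, 2.10 for trivia, and 2.01 for math questions.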

Effectiveness Rates

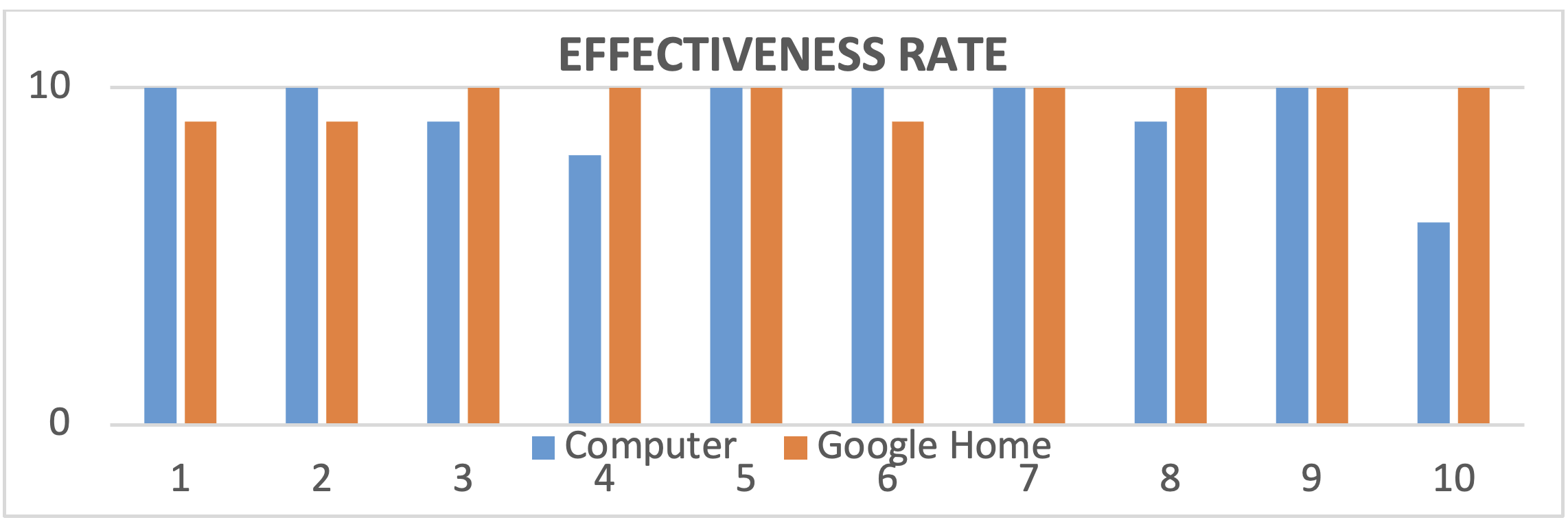

The task success rate assessed how successfully participants completed the given tasks with Google Home or with a desktop computer or cell phone. With 10 participants per condition, there were 300 questions to be completed using Google Home and 300 using a computer or cell phone. When using a desktop computer or cell phone, participants completed 87 of the 100 research questions, an 87% task success rate. Although this rate is high, it is not 100%, so there are areas of usability that need to be addressed. For example, incomplete tasks could have been caused by incorrect device operation or by devices designed without beginner users in mind. The control group also completed 89 of the 100 trivia questions (an 89% task success rate) and 92 of the 100 math questions (a 92% task success rate). In the research and trivia categories, these rates were higher than those for Google Home. Using Google Home, participants completed 82 of the 100 research questions (82%) and 88 of the 100 trivia questions (88%). Descriptively, then, users completed the research and trivia questions somewhat more effectively using a desktop computer than a voice assistant device. For the math questions, however, Google Home had the higher task success rate, with 97 of the 100 questions completed (97%) (Figure 3).

Figure 3. Effectiveness rates for mathematics questions.
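The task success rates above are simple proportions of completed questions. A minimal sketch using the reported counts (100 questions per category per group) reproduces the per-category rates. It also pools them across all 300 questions per group. The pooled figure is only a derived summary of those counts, and the names are illustrative.

```python
QUESTIONS_PER_CATEGORY = 100  # 10 participants x 10 questions

# Completed questions per category, as reported in the text.
COMPLETED = {
    "control": {"research": 87, "trivia": 89, "math": 92},
    "google_home": {"research": 82, "trivia": 88, "math": 97},
}


def success_rates(counts: dict[str, int]) -> dict[str, float]:
    """Per-category task success rate as a percentage."""
    return {cat: 100 * n / QUESTIONS_PER_CATEGORY for cat, n in counts.items()}


def pooled_rate(counts: dict[str, int]) -> float:
    """Success rate across all categories combined, as a percentage."""
    return 100 * sum(counts.values()) / (QUESTIONS_PER_CATEGORY * len(counts))


for group, counts in COMPLETED.items():
    print(group, success_rates(counts), f"pooled: {pooled_rate(counts):.1f}%")
```

Pooled over all 300 questions, the rates are about 89.3% for the control group and 89.0% for Google Home.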

An independent two-sample t-test was performed to test whether the experimental (Google Home users) and control (computer users) groups differed significantly in effectiveness. Effectiveness was measured as the number of correct answers in each category (research, trivia, and math).

According to the analysis results, the control group users had a mean of 8.7 correct answers for the research questions (out of 10 questions), whereas the experimental group of Google Home users had a mean of 8.2 correct answers. This difference is not statistically significant (p = 0.248).

As for the trivia questions, the control group had a mean of 8.9 correct answers (out of 10 questions), whereas the experimental group had a mean of 8.8 correct answers. The difference is not statistically significant (p = 0.901).

For math questions, the control group had a mean of 9.2 correct answers (out of 10 questions), whereas the experimental group had a mean of 9.7 correct answers. This difference is not statistically significant (p = 0.247).
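The sketch below shows what an independent two-sample t-test computes, using the pooled-variance (Student’s) form. The text does not state whether a pooled or a Welch variant was used, so that choice is an assumption. Per-participant scores are not reported here, so the example inputs are hypothetical. The sketch returns the t statistic and degrees of freedom. A p-value additionally requires the t distribution, which `scipy.stats.ttest_ind` provides.

```python
import math
from statistics import mean, variance


def pooled_t_test(group_a: list[float], group_b: list[float]) -> tuple[float, int]:
    """Return (t statistic, degrees of freedom) for Student's two-sample t-test."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("both groups have zero variance; t is undefined")
    t = (mean(group_a) - mean(group_b)) / standard_error
    return t, df


# Hypothetical correct-answer counts for two small groups (not study data).
control = [9, 8, 10, 9, 8]
experimental = [10, 9, 10, 10, 9]
t, df = pooled_t_test(control, experimental)
print(f"t = {t:.3f}, df = {df}")
```

With 10 participants per group, as in this study, the test would have 18 degrees of freedom.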

User Satisfaction Survey Results

User satisfaction was measured using the standard SUS survey. The overall SUS score for the traditional devices was 92.8, well above the industry average of 68. The overall SUS score for Google Home was higher still, at 96.0. These scores indicate that the experimental group using Google Home was more satisfied than the control group. On Sauro and Lewis’ (2016) curved grading scale, both systems rate at the A+ level. However, an SUS score does not explain why users were or were not satisfied (Demir, 2012); the interview questions therefore provided additional qualitative data.
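Mapping a SUS score to a letter grade is a threshold lookup. The sketch below encodes the curved grading scale as we transcribe it from Sauro and Lewis (2016). The boundaries should be checked against that source before reuse, and the function name is illustrative.

```python
# Lower bound of each grade on the Sauro-Lewis curved scale, highest first
# (our transcription; verify against the original table).
CURVED_GRADE_BOUNDS = [
    (84.1, "A+"), (80.8, "A"), (78.9, "A-"),
    (77.2, "B+"), (74.1, "B"), (72.6, "B-"),
    (71.1, "C+"), (65.0, "C"), (62.7, "C-"),
    (51.7, "D"),
]


def sus_grade(score: float) -> str:
    """Return the curved-scale letter grade for a 0-100 SUS score."""
    if not 0 <= score <= 100:
        raise ValueError("SUS scores range from 0 to 100")
    for lower_bound, grade in CURVED_GRADE_BOUNDS:
        if score >= lower_bound:
            return grade
    return "F"  # anything below the D boundary


for score in (92.8, 96.0, 68.0):
    print(score, sus_grade(score))
```

Under this transcription, both reported scores (92.8 and 96.0) fall in the A+ band, and the industry average of 68 falls in the C band.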

Interview Results

A thematic analysis was conducted on the semi-structured interview responses of the participants who used Google Home. Three major themes were identified: (1) ease of use, (2) struggles in verbal communication, and (3) privacy. All participant names given with quotes are pseudonyms.

Ease-of-Use

All participants agreed that Google Home was easy to use. They started using it within minutes, even though all but one had no previous experience with the device. The average time for participants to become familiar with Google Home was 2 min 40 s.

Kaylee: “I felt very confident with Google Home even though I am using it for the first time. It is easy and fun to use. It reads the answers perfectly, even with partially structured sentences, and I found it a great source for retrieving the answers quickly. I am inspired to answer 24 questions in less than 5 minutes, and it is very engaging and impressive. I thought such devices were produced for only entertainment purposes but found it very useful for hunting down the information in seconds.”

Kim: “Even though this is my first time using Google Home, I found it is very easy to use. Google home promptly answered my questions. As an international student, I sometimes have difficulties spelling the words in a text-based search, but it is fantastic to search verbally. I also really liked how it responds to math questions. I wish to use it during my engineering classes.”

Struggles in Verbal Communication

Nine of the 10 participants in the experimental group indicated that they did not regularly use verbal search in daily life. Four participants who were non-native English speakers said they felt somewhat hesitant about verbal information seeking. One non-native English speaker with a heavy accent had serious trouble communicating with Google Home.

Omar: “The device (drove) me crazy. First, I kept forgetting to turn it on by saying, ‘Hey Google,’ and it never responded to me. Second, it did not understand me when I am asking questions. It responded to me that she could not help me with that. Probably, my accent is the problem, or the system is designed for only native speakers. I got furious and disappointed!”

Ferzan: “I am a visual learner, so I want to discover the features of Google Home. However, I am having difficulties understanding where to start. There is no screen or written instructions. I do not know what to ask Google Home to learn more about how to use it effectively. I should get training on discovering the device. Otherwise, I am just looking at the device, and I do not know what I can do with it.”

Privacy

As the literature underscores, privacy is a major concern with Google Home. Eight participants indicated that the voice assistant system could be a threat to their privacy. They said they would hesitate to place such devices in their rooms, where the devices would always be listening to their surroundings.

Kristen: “Google Home is connected to the internet and actively remaining in silence mode. When I trigger it by saying, ‘Hey Google,’ it automatically turns on and responds to me. But it is actively listening to the room, and I am not sure whether it is posting the conversations going on in the room to somewhere I do not know.”

Nesli: “I do not want to place Google Home in my living room. I am talking to my family and having very personal discussions with my family. Google Home is designed to listen and respond verbally. I feel my privacy is violated when a device listen(s) to my family and some people (are) collecting data from my living room.”

Students’ Behaviors Using Google Home

All participants except one indicated that they did not have any previous experience with Google Home. They all indicated that they are aware of the Siri™ voice assistant on iPhones, but they do not regularly use Siri in their daily activities.

Google Home was introduced to the experimental group, and participants were instructed to activate the device by saying, “Hey Google,” followed by a question. Participants were allowed to familiarize themselves with the device without any time restrictions. They asked questions of their choice, such as “What is the population of the US?”, “Who is the prime minister of Germany?”, and “Who is the founder of Tesla motor company?” On average, participants spent 3 min 40 s getting familiar with Google Home before indicating that they wanted to start the data collection session.

For participants, the most challenging part of using Google Home was remembering to say, “Hey Google,” to put the device into listening mode. The device enters listening mode only when it hears this phrase. After answering a question, however, it remains in listening mode for three seconds so that users can ask follow-up questions.
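The listening behavior described above can be summarized as a small state model. The Python sketch below is a hypothetical simplification, not Google's implementation. It accepts an utterance as a query only if the utterance begins with the wake phrase or arrives within the three-second follow-up window reported in this study. The class and method names are invented for illustration, and matching the wake phrase against transcribed text is itself a simplifying assumption.

```python
from dataclasses import dataclass
from typing import Optional

WAKE_PHRASE = "hey google"
FOLLOW_UP_WINDOW_S = 3.0  # follow-up window reported in this study


@dataclass
class ListeningModel:
    """Toy model of wake-phrase activation plus a follow-up window."""

    last_answer_end: Optional[float] = None  # seconds; None until first answer

    def accepts(self, utterance: str, t: float) -> bool:
        """Return True if an utterance spoken at time t would be treated as a query."""
        if utterance.lower().startswith(WAKE_PHRASE):
            return True
        # Without the wake phrase, only a follow-up inside the window is accepted.
        return (
            self.last_answer_end is not None
            and t - self.last_answer_end <= FOLLOW_UP_WINDOW_S
        )

    def answered(self, t: float) -> None:
        """Record the time at which the device finished speaking an answer."""
        self.last_answer_end = t
```

For example, after `model.answered(5.0)`, an utterance without the wake phrase at t = 7.0 s is accepted, whereas the same utterance at t = 9.0 s is ignored. This mirrors participants' experience of the device not responding when they forgot to say “Hey Google.”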

Another challenge was mispronunciation. One international student with a heavy accent had problems communicating with the device. Google Home answered some of this participant’s questions with “Hi there, I am listening, how can I help today?” and “I can’t help with that yet.” The participant identified pronunciation as the problem and tried to enunciate words more clearly.

Furthermore, participants who paused for long periods between words did not have a chance to complete their questions, because Google Home assumed they had finished speaking and started the search immediately. International students who spoke slowly, in particular, preferred to read the written questions aloud at a faster pace during the session so that Google Home would capture the full question.
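The premature cut-off that slow speakers experienced is consistent with silence-based endpointing, in which a recognizer ends a query once a pause exceeds some threshold. The Python sketch below illustrates that idea under stated assumptions. Word timings are given as hypothetical (word, start, end) tuples in seconds, and the 0.7-second threshold is an arbitrary illustrative value, not a documented Google Home setting.

```python
from typing import List, Tuple


def endpoint(words: List[Tuple[str, float, float]], max_pause_s: float = 0.7) -> str:
    """Return the text captured before the first pause longer than max_pause_s.

    words: (word, start_time_s, end_time_s) tuples in spoken order.
    max_pause_s: illustrative silence threshold (an assumption).
    """
    captured = []
    prev_end = None
    for word, start, end in words:
        if prev_end is not None and start - prev_end > max_pause_s:
            break  # the long silence is treated as the end of the query
        captured.append(word)
        prev_end = end
    return " ".join(captured)
```

With this rule, a speaker who pauses for 1.2 s after “what is” is cut off after those two words, while the same question spoken with short pauses is captured in full.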

One participant preferred to ask questions with keywords rather than full sentences, for instance, “Hey Google, smallest country in Europe,” or “Hey Google, square root of 256.” This participant received correct answers more efficiently than the other participants.
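The keyword strategy described above amounts to dropping the leading interrogative phrase from a question. The Python sketch below shows one hypothetical way to do that with a regular expression. The pattern covers only simple “what/who/where is/was/are (the)” openings. It is meant to illustrate the strategy, not to model how Google Home parses queries.

```python
import re

# Hypothetical pattern: a leading "what/who/where" + "is/was/are" + optional "the".
_LEADING_QUESTION = re.compile(
    r"^(?:what|who|where)\s+(?:is|was|are)\s+(?:the\s+)?", re.IGNORECASE
)


def to_keyword_query(question: str, wake_phrase: str = "Hey Google") -> str:
    """Turn a full-sentence question into a wake phrase plus keywords."""
    core = _LEADING_QUESTION.sub("", question.strip())
    core = core.rstrip("?").strip()
    return f"{wake_phrase}, {core}"
```

Applied to “What is the smallest country in Europe?”, this yields “Hey Google, smallest country in Europe”, the form the participant used.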

Discussion

The results of this usability test support prior literature stating that voice assistant systems such as Google Home provide prompt answers to a variety of questions. This was especially true in mathematics, where asking Google Home a question and getting a response took, on average, half the time it took to type the question on a desktop computer. The results suggest that Google Home, and perhaps voice assistant devices in general, are well suited to information retrieval, especially for delivering answers quickly. These devices accommodate everyday personal inquiries and can also assist with personalized learning.

However, the post-test data suggest that voice assistant devices may not be the best choice for in-depth information seeking or for investigating a topic thoroughly. These results support White et al. (2009), who stated that exploratory search is difficult with information retrieval (IR) systems. Another drawback of Google Home is that users have little control over the sources from which information is retrieved. Google Home sometimes retrieves information from an online source that differs from the sites found using a desktop computer or cell phone. As a result, the two sets of information, and therefore the answers, may differ. This could be one reason why effectiveness differed between the two devices. Although Google Home was good for quick answers, participants said they could not do in-depth research with it. They also disliked that they could not follow mathematical processes visually, because they were told only the answer.

In this study, the question set consisted primarily of informational tasks, which the Google smart speaker supported well. Even for this type of task, traditional means of information retrieval achieved slightly higher task success rates on the research and trivia questions. Exploratory tasks, moreover, require a level of expertise in using voice assistants that not every user has.

Voice assistant devices are innovative devices that people increasingly turn to when seeking information. Most participants said they could retrieve information promptly, even when they used partial sentences instead of full questions. Most were first-time Google Home users and were surprised at how easy it was to use. Participants also suggested that the device would benefit English as a Second Language (ESL) students who can ask a question verbally but have difficulty writing in English. As participants stated, it increases engagement, supports personalized learning, and works well for personal use or in one-on-one situations. However, the device is not without limitations: participants mentioned that foreign accents and unclear enunciation might not be easily understood.

Overall, privacy was the concern participants emphasized most about voice assistant systems, and it ties into their sense of safety and security when using these devices. This supports previous studies finding that privacy is a leading concern with voice assistant systems (Lau et al., 2018; Pfeifle, 2018; Neville, 2020). Participants expressed a low level of trust in voice assistant systems because the device is always listening to its environment and responds when prompted with “Hey, Google.” Participants regarded this feature of Google Home as a vulnerability. In their view, the device might capture all communications in the environment and might broadcast or submit voice recordings elsewhere without users’ consent. Before wider rollout on campuses and in educational settings, user satisfaction must be better understood to determine how these technologies are best used, and privacy concerns must be addressed.

Voice assistant systems search websites to find the best answer to users’ questions. However, the device’s search capacity is quite limited, and its response is often “I cannot help with that yet” or “I am still learning.” This limited search capacity prevents users from searching multiple sources, and the VUI limits their ability to see a list of available sources. Furthermore, the lack of visual cues in a voice assistant system is a challenge for visual learners. Participants sometimes wanted answers repeated to make sure they had heard them correctly.

Desktop computers and cell phones have been around for many years, so students are familiar with these devices. All but one of the participants rated their computer skills as intermediate or advanced, so it can be assumed that they would not face many problems when retrieving information. The participants who used a computer to answer the task questions said that it let them choose between multiple sites, giving them access to more detailed results on a specific topic. There is no limit on the amount of information a person can find and read on the web. However, participants also noted limitations of desktop computers, chief among them that information retrieval can be time-consuming. Reading through information to find specifics takes time, especially when the necessary details are embedded in pages of text.

These promises and challenges attract and repel K-12 and higher education academic institutions alike in their consideration of smart speakers for inclusion in the classroom, library, and other departments with academic functions. If, as Rowlands et al. (2008) claim, the researcher of the future uses these newer forms of search on smart speakers and smartphones, then it makes sense to adopt and adapt to their usage, especially Google Home, which according to Bentley et al. (2018) has a much higher rate of retained daily use than Alexa™ and other assistants. If, as our study shows, user satisfaction is much higher with voice assistants and smart speakers in the performance of informational queries, then integrating these devices can add to students’ satisfaction with their academic institutions. However, search efficiency and search task effectiveness with voice search do not eliminate the need for a web-based search, which is why search, especially on mobile phones, is becoming multimodal (Feng et al., 2011). Furthermore, search via smart speaker is still considered to be at a very early stage, requiring increasing personalization to be effective over the long term (Bentley et al., 2018).

Students, as Sendurur (2018) says, are inherently information seekers in both academic and everyday contexts. Academic institutions and library systems that have investigated the use of smart speakers have found that K-12 students increased their engagement and built their listening skills (Crist, 2019). According to Emerling et al. (2020) and Şerban et al. (2020), voice assistant devices have proven to be important at-home teaching aids during the Covid-19 pandemic. The largest concern for both students and representatives of academic institutions is privacy, as expressed in several studies, including Pfeifle (2018). These issues, according to Easwara and Vu (2015), contribute to users’ social concerns about using voice-controlled assistants in less structured settings. Users also mistrust device manufacturers for a variety of reasons, including the fact that smart speakers are designed to be always listening (Lau et al., 2018). Smart speaker manufacturers must invest heavily in protections for general-use contexts such as classrooms and libraries through better privacy settings, personalization, and user permissions.

As our data show, voice search on devices such as smart speakers is efficient and yields greater user satisfaction than web search on traditional devices. However, smart speakers warrant more research in several areas: context, comparison of query types, observation and analysis of query reformulation behaviors, and effectiveness for querying subjects beyond those examined in this study. These additional research topics can guide academic institutions in deploying this technology on campuses and device manufacturers in their continued efforts to improve it. We believe in the promise of smart speakers and voice assistants as they continue to grow in usage and effectiveness.

Indeed, the promise of these emergent technologies extends far beyond the average person’s in-home usage, or even the context of a classroom or campus. We have seen this potential grow during the pandemic, with increased usage in both contexts. We are also witnessing the isolation of our senior population, as well as people with disabilities, due to their high-risk status. For this at-risk population, voice assistive devices have become an indispensable lifeline that enables them to connect with family members, friends, and healthcare professionals and to stay entertained and informed. These devices have allowed vulnerable individuals to manage their schedules, order groceries, and otherwise maintain a more self-sufficient lifestyle than would have been possible without them. As a demonstration of how important these devices have become during the pandemic, one need only look at the new release of Amazon® Care Hub, which is Alexa™ for seniors. Moreover, such systems should be flexible enough to let users adapt information retrieval processes to their own current needs, as suggested by Bates (1989).

As stated in previous sections, we grounded our research in information-foraging theory, which we used to interpret our results and to understand how people search for desired information while spending the least amount of time and energy. The results indicate that participants are satisfied with voice assistant systems and tend to continue using them because of their ease of use and prompt access to information. Usability theory examines effectiveness, efficiency, and satisfaction. The results show that the experimental group using voice assistant systems completed the tasks faster and reported higher satisfaction than the control group using computers and cell phones. Differences in the number of correct answers, however, were not statistically significant. We may expect to see voice assistant systems deployed more fully on campuses in the future, especially once user concerns are addressed.
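Information-foraging theory is commonly formalized as a rate of information gain, shown below in its conventional form. In this expression, G is the information gained, T_B is the time spent moving between information sources (patches), and T_W is the time spent extracting information within a source. The formula is offered as an interpretive aid rather than a quantity measured in this study. A voice assistant that returns a single direct answer plausibly reduces both T_B (no navigation between websites) and T_W (no reading of long pages), which would raise R for lookup-style questions.

```latex
R = \frac{G}{T_B + T_W}
```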

Recommendations

- Google Home can be an alternate method for accessing information quickly and eliminating the problems of graphical user interface design. Researchers who analyze information retrieval can adopt Google Home if applicable to the research settings and target audience.

- Google Home is evaluated as more enjoyable for information seeking than computers. Therefore, it can be a good solution for younger users.

- Google Home was evaluated as an efficient option for searching, especially for information seeking that does not require in-depth research. The device could be useful for reducing the cognitive load that a visual interface places on learners.

- Replicating the research with other voice assistant systems can help survey the capabilities of such systems and generalize the results more broadly. For future researchers in the language learning field, Google Home may provide useful solutions in English teaching and learning settings.

- Since Google Home eliminates possible problems with graphical user interfaces, future researchers might consider it a good solution for senior users and people with disabilities.

Limitations

As previously detailed, students, especially graduate students, are sophisticated information seekers by training (Sendurur, 2018). They bring a wide range of information-seeking experiences shaped by their studies, their relationships with faculty members, and other factors (Sloan & McPhee, 2013). In addition to the specificity of the graduate student population represented in this study, it is also important to note that most, if not all, of the study participants belong to the Google Generation (Rowlands et al., 2008). This generation has grown up and completed its entire schooling with the support of browser- and desktop-based search engines and online sources.

The younger graduate student participants are part of that group of individuals who have never known a time without a mobile phone with some level of search capability. Their experience and expertise in information-seeking make them a very specific population in the context of this study; therefore, our results are not completely generalizable for a wider audience, especially an older demographic whose information-foraging techniques and practices show a fundamentally different approach (Bilal & Kirby, 2001).

In addition to the specificity of the participants’ demographic and experience, the small sample size of 20 participants prevents the researchers from doing a full statistical regression analysis to better verify the results of the study. This limitation would need to be addressed in a larger study, along with the recruitment of a more generalizable participant pool.

Conclusion

Although there was no statistically significant difference in how often participants found the correct answers using desktop versus voice assistant search, the results indicate that users completed the research, trivia, and math questions more quickly and efficiently with a voice assistant device than with computers. For math questions, Google Home had the higher task success rate, 97% versus 92% with computers, although this difference was not statistically significant. In terms of efficiency, the average time to complete questions in each category was shorter with Google Home. Additionally, Google Home usually provided a direct answer in cases where computer users had to identify correct answers by reading, searching, and comparing multiple websites, or by looking for the desired information on long, text-heavy pages.

Although the participants were first-time users of Google Home, they welcomed the device and found it easy to use. Surprisingly, subjective satisfaction scores show that participants were more satisfied with Google Home than with traditional technological devices.

This study gives evidence that Google Home is a useful gateway to acquiring information. These emergent technologies not only benefit one’s personal life but add to and enhance learning for the student. Our findings indicate that the use of Google Home has the potential to help learners focus on problem-solving without the unnecessary cognitive load resulting from manual information processing. In addition to traditional devices, voice assistant devices such as Google Home could help students learn and research information.

This study highlighted concerns about online security and safety with voice assistant devices. Participants were concerned about privacy issues, such as the fact that these devices are always on and listening to conversations nearby. They also had concerns about browser history content, how this information is used, and how it can be cleared. In the future, this study should be replicated with other voice assistant devices, alternative questions, and a different demographic. Understanding how voice assistant devices can be used with students of different ages can show how these devices might be altered and improved to accommodate all learning types, and how they can be used in different learning environments.

Tips for Usability Practitioners

- Set up Google Home before sessions to save time when conducting research with smart speakers.

- Allow first-time users to get familiar with Google Home before you start data collection.

- Google Home is easy to use and learnable without any training. Adopting voice assistant systems in user experience research can provide benefits to particular user groups such as English language learners, the elderly, and people with visual disabilities.

- It is important to pay attention to potential challenges in using Google Home for non-native English speakers. The advantages of these systems may vary depending on the instructional support provided for these students.

- Although Google Home can be a useful tool for quick answers such as simple math problems, utilizing the device for in-depth learning purposes requires further research and development.

Acknowledgments

This research was funded by the College of Education’s Dean’s Research Grant for the 2017-2018 academic year. This internal grant covered research costs and participant incentives and allowed us to purchase the smart speakers.

Disclaimer: The views expressed herein are those of the authors and not necessarily those of the Department of Defense or other U.S. federal agencies.

References

Ammari, T., Kaye, J., Tsai, J., & Bentley, F. (2019). Music, search, and IoT: How people (really) use voice assistants. ACM Transactions on Computer-Human Interaction, 26(3), 1-28. https://doi.org/10.1145/3311956

Athukorala, K., Oulasvirta, A., Glowacka, D., Vreeken, J., & Jacucci, G. (2014). Narrow or broad?: Estimating subjective specificity in exploratory search. Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management. Association for Computing Machinery, 819–828. https://doi.org/10.1145/2661829.2661904

Athukorala, K., Oulasvirta, A., Glowacka, D., Vreeken, J., & Jacucci, G. (2015). Is exploratory search different? A comparison of information search behavior for exploratory and lookup tasks. Journal of the Association for Information Science and Technology, 67(11), 2635-2651. https://doi.org/10.1002/asi.23617

Baeza-Yates, R., & Ribeiro-Neto, B. (1999). Modern information retrieval: The concepts and technology behind the search. Addison-Wesley.

Barnum, C. M. (2011). Usability testing essentials: Ready, set… test! Elsevier.

Bates, M. J. (1989). The design of browsing and berrypicking techniques for the online search interface. Retrieved from https://pages.gseis.ucla.edu/faculty/bates/berrypicking.html

Bentley, F., Luvogt, C., Silverman, M., Wirasinghe, R., White, B., & Lottridge, D. (2018). Understanding the long-term use of smart speaker assistants. Proceedings of the ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies, 2(3), 1-24. https://doi.org/10.1145/3264901

Bystrom, K. (2002). Information and information sources in tasks of varying complexity. Journal of the American Society for Information Science and Technology, 53(7), 581-591. https://doi.org/10.1002/asi.10064

Craig, R. T. (2016). Pragmatist realism in communication theory. Empedocles: European Journal for The Philosophy of Communication, 7(2), 115-128. https://doi.org/10.1386/EJPC.7.2.115_1

Demir, F. (2012). Designing intranet communication portals for government agencies. Polis Bilimleri Dergisi, 14(2), 75-94.

Demir, F., Ahmad, S., Calyam, P., Jiang, D., Huang, R., & Jahnke, J. (2017). A next-generation augmented reality platform for mass casualty incidents (MCI). Journal of Usability Studies, 12(4), 193-214.

Demir, F., Karakaya, M., & Tosun, H. (2012). Research methods in usability and interaction design: Evaluations and case studies (2nd ed.). LAP LAMBERT Academic Publishing.

Emerling, C., Yang, S., Carter, R. A., Zhang, L., & Hunt, T. (2020). Using Amazon Alexa as an instructional tool during remote teaching. Teaching Exceptional Children, 53(2), 164-167. https://doi.org/10.1177/0040059920964719

Herold, B. Alexa moves into class, raising alarm bells. Education Week, 37(37).

Kao, P., & Windeatt, S. (2014). Low-achieving language learners in self-directed multimedia environments: Transforming understanding. In J. Son (Ed.), Computer-assisted language learning: Learners, teachers, and tools (pp.1-20). Cambridge Scholars Publishing.

Klie, L. (2017). Marketers are unprepared for voice search: Voice assistants are altering consumer habits, and marketers need to adjust. CRM Magazine, 21(9), 14.

Lopatovska, I., & Oropeza, H. (2018). User interactions with “Alexa” in public academic space. ASIS&T Annual Meeting, 309-318. https://doi.org/10.1002/pra2.2018.14505501034

Lopatovska, I., Rink, K., Knight, I., Raines, K., Cosenza, K., Williams, H., Sorsche, P., Hirsch, D., Li, Q., & Martinez, A. (2019). Talk to me: Exploring user interactions with the Amazon Alexa. Journal of Librarianship and Information Science, 51(4), 984-997. https://doi.org/10.1177/0961000618759414

Losee, R. M. (2017). Information theory for information science: Antecedents, philosophy, and applications. Education for Information, 33(1), 23-35. https://doi.org/10.3233/EFI-170987

Marchionini, G. (2006). Search, sensemaking, and learning: Closing gaps. Information and Learning Sciences, 120(1/2), 74-86. https://doi.org/10.1108/ILS-06-2018-0049

Martin, E. J. (2017). How Echo, Google Home, and other voice assistants can change the game for content creators. Econtent, 40(2), 4-8.

Nelson, S. D., & Simek, J. W. (2017). Are Alexa and her friends safe for office use? Law Practice: The Business of Practicing Law, 43(5), 26-29.

Nielsen, J. (1993). Noncommand user interfaces. Communications of the ACM, 36(4), 83-99. https://doi.org/10.1145/255950.153582

Nuhu, Y., Mohd, A., & Norfaradilla, B. (2019). A comparative analysis of web search query: Informational vs. navigational queries. International Journal on Advanced Science, Engineering and Information Technology, 9(1), 136-141. https://doi.org/10.18517/ijaseit.9.1.7578

Rowlands, I., Nicholas, D., Williams, P., Huntington, P., Fieldhouse, M., Gunter, B., Withey, R., Jamali, H., Dobrowolski, T., & Tenopir, C. (2008). The Google generation: The information behaviour of the researcher of the future. Aslib Proceedings: New Information Perspectives, 60(4), 290-310. https://doi.org/10.1108/00012530810887953

Sauro, J., & Lewis, J. (2016). Quantifying the user experience: Practical statistics for user research. Elsevier/Morgan Kaufmann.

Schalkwyk, J., Beeferman, D., Beaufays, F., Byrne, B., Chelba, C., Cohen, M., & Strope, B. (2010). “Your word is my command”: Google search by voice: A case study. https://doi.org/10.1007/978-1-4419-5951-5_4

Terzopoulos, G., & Satratzemi, M. (2020). Voice assistants and smart speakers in everyday life and in education. Informatics in Education, 19(3), 473-490. https://doi.org/10.15388/infedu.2020.21

Winkler, R., Söllner, M., Neuweiler, M. L., Leimeister, J. M., & Rossini, F. (2019). Alexa, can you help us solve this problem? How conversations with smart personal assistant tutors increase task group outcomes. Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems, 1-6.

APPENDIX 1: TASK QUESTIONS IN THREE CATEGORIES

(1-RESEARCH QUESTIONS)

- What is the total enrollment of undergraduate students at Northern Illinois University?

- 12,742

- 14,079

- 16,751

- 18,814

- What was the population of DeKalb, IL, in 2016?

- 41,853

- 42,719

- 43,194

- 48,478

- Who is the author of “Don’t make me think”?

- Dan Norman

- Steve Krug

- Ruth C. Clark

- Chopeta Lyons

- What is the mascot of the University of Baltimore?

- Lion

- Tiger

- Phoenix

- Bee

- What is the research ranking of Northern Illinois University?

- Tier I

- Tier II

- Tier III

- Not known

- Who is the founder of Northern Illinois University?

- Baron Johann DeKalb

- Joseph Glidden

- John P. Altgeld

- Lisa Freeman

- What is the IRB?

- Accepts the student loan applications

- Approves research to protect the human subjects who participate in research

- International protection agency for monitoring climate change

- None of the above

- What is the address of the Center for Latino and Latin American Studies – Latino Resource Center of Northern Illinois University?

- 1425 W. Lincoln Hwy. DeKalb, IL

- 313 First St. DeKalb, IL

- 6016 Sycamore Rd. DeKalb, IL

- 515 Garden Rd. DeKalb, IL

- What is the average tuition fee for Northern Illinois University?

- $9,465 per year for in-state residents

- $10,275 per year for in-state residents

- $10,900 per year for in-state residents

- $11,648 per year for in-state residents

- What is the phone number of the NIU Police Department?

- 815-753-1212

- 815-753-8000

- 815-753-6312

- 815-753-1911

(2-TRIVIA QUESTIONS)

- What was the first planet to be discovered using the telescope?

- Mercury

- Jupiter

- Uranus

- Pluto

- How many U.S. states border the Gulf of Mexico?

- 3

- 4

- 5

- 6

- What color is absinthe?

- Green

- Yellow

- Blue

- Black

- What is a water moccasin often called?

- Water snake

- Cottonmouth

- Piggy tail

- Dragon eyed

- How far is the earth from the moon?

- 165,853 miles

- 198,956 miles

- 220,380 miles

- 238,900 miles

- What is the world’s biggest island?

- Madagascar

- Greenland

- Iceland

- Borneo

- Where do skinks NOT live?

- Forests

- Polar regions

- Gardens

- Urban areas

- What is the smallest country in Europe?

- Slovenia

- Vatican

- Sweden

- Albania

- How many days are there until Memorial Day? (Write your answer below)

…………………………………………………………………………….

- What does NATO stand for? (Write your answer below)

…………………………………………………………………………….

(3-MATH QUESTIONS)

- What is 54% of 2,650?

- 49,074

- 4,907

- 1,431

- 14,310

- What is 687 times 879?

- 603,873

- 616,239

- 567,928

- 595,962

- What is the square root of 86?

- 7.5924

- 8.4182

- 9.2736

- 9.7825

- What is 4 divided by 5 times 40? [(4 / 5) x 40 = ?]

- 30

- 32

- 34

- 36

- What is 726 minus 319? [726 – 319 = ?]

- 407

- 417

- 427

- 437

- What is 1976 divided by 12?

- 156.223

- 160.444

- 164.667

- 168.775

- What is 78 percent of 7?

- 5.46

- 6.45

- 7.12

- 7.83

- What is 233 multiplied by 170?

- 39,190

- 39,901

- 39,610

- 39,910

- What is the square root of 256?

- 14

- 15

- 16

- 17

- What is 7 divided by 13 plus 140? [(7 / 12) + 140 = ?]

- 140.5

- 143.5

- 145.0

- 147.3
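The answer choices for the math questions can be checked mechanically. The Python sketch below computes each math item and selects the numerically closest answer choice listed above. The last item is worded as 7 divided by 13 but written as (7 / 12) + 140, so both readings are checked. They give about 140.54 and 140.58, respectively, so the closest choice is 140.5 either way. The list layout and function name are illustrative.

```python
import math

# (label, computed value, answer choices as printed in Appendix 1)
MATH_ITEMS = [
    ("54% of 2,650", 0.54 * 2650, [49074, 4907, 1431, 14310]),
    ("687 x 879", 687 * 879, [603873, 616239, 567928, 595962]),
    ("sqrt(86)", math.sqrt(86), [7.5924, 8.4182, 9.2736, 9.7825]),
    ("(4 / 5) x 40", 4 / 5 * 40, [30, 32, 34, 36]),
    ("726 - 319", 726 - 319, [407, 417, 427, 437]),
    ("1976 / 12", 1976 / 12, [156.223, 160.444, 164.667, 168.775]),
    ("78% of 7", 0.78 * 7, [5.46, 6.45, 7.12, 7.83]),
    ("233 x 170", 233 * 170, [39190, 39901, 39610, 39910]),
    ("sqrt(256)", math.sqrt(256), [14, 15, 16, 17]),
    # The item text says 13 but the bracketed formula says 12; check both.
    ("7 / 13 + 140", 7 / 13 + 140, [140.5, 143.5, 145.0, 147.3]),
    ("7 / 12 + 140", 7 / 12 + 140, [140.5, 143.5, 145.0, 147.3]),
]


def closest_option(value, options):
    """Return the answer choice numerically closest to the computed value."""
    return min(options, key=lambda opt: abs(opt - value))


if __name__ == "__main__":
    for label, value, options in MATH_ITEMS:
        print(f"{label}: computed {value:.4f} -> choice {closest_option(value, options)}")
```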