Abstract

This research study focuses on evaluating the usability of multi-touch tablet devices by adults with Down syndrome for workplace-related tasks. The usability evaluation involved 10 adults with Down syndrome, and the results of the study illustrate that (a) adults with Down syndrome are able to use multi-touch devices effectively for workplace-related tasks, (b) formal computer training seems to impact participant performance, and (c) password usability continues to be a challenge for individuals with Down syndrome. Implications for designers, for policymakers, for researchers, and for users are discussed, along with suggestions for effective implementation of usability testing when involving adults with Down syndrome. Information technology can be a potential workplace skill for adults with Down syndrome, and more of the user experience community needs to get involved in understanding how people with Down syndrome utilize technology.

Practitioner’s Take Away

Tips for Usability Practitioners

When performing usability testing involving people with Down syndrome, we have seven suggestions for user experience practitioners:

- Use pilot sessions prior to usability testing with users with disabilities. Usability testing with people with disabilities often involves more logistical challenges than standard usability testing. Pilot sessions can help focus the testing and reveal potential challenges before they occur during the actual sessions.

- Use real examples and real accounts when conducting usability testing. People with Down syndrome are often literal and direct and would feel uncomfortable using accounts that are not theirs.

- Be flexible when participants say that they would prefer to type in a different time or a different piece of data. The level of engagement with tasks is high, and flexibility helps keep it high. As presented earlier in the paper, some of the participants objected when the data to be entered was not to their liking or did not seem appropriate (for example, writing a paragraph about wintertime clothes when it is actually summertime outside).

- Ask participants to bring their passwords to the usability testing written out on paper. The participants often forgot their passwords or had trouble remembering where the capitalization or symbols were in the password.

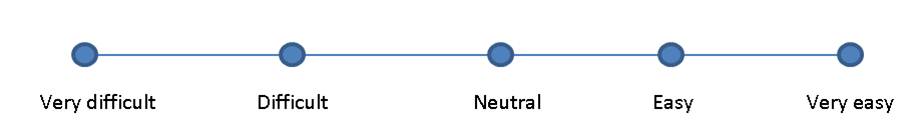

- Present satisfaction scales (such as a Likert scale) visually (as described earlier in the paper), due to the visual strength of people with Down syndrome. Instructions should be provided both verbally and also in printed, visual format. Having the printed versions of instructions and materials seems to be helpful in having participants comprehend the information.

- Cover up any list of tasks (when using printed instructions), whenever possible, so that the participant will not be distracted by the other tasks listed on the sheet and will not jump ahead to a different task.

- Create tablet apps that include robust auto-suggested search features, visual as well as text-based aspects, and clear, straightforward icons, as these were features of particular value to users with Down syndrome in this study.

Introduction

Research has found that children and young adults with Down syndrome can use computers effectively (including the mouse, keyboard, and screen), often without any assistive technologies or modifications (Feng, Lazar, Kumin, & Ozok, 2008). The skills that individuals with Down syndrome need to use computers include fine motor skills, visual-motor skills, visual memory skills, letter recognition skills, reading and literacy skills, and, depending on how instructions are given to them, auditory and visual processing skills. Previous medical/clinical research has documented difficulties with fine motor skills and challenges with perceptual motor coupling (Bruni, 1998; Savelsbergh, van der Kamp, Ledebt, & Planinsek, 2000) in people with Down syndrome; however, multiple HCI data collection efforts related to children and adults with Down syndrome using computers do not show fine motor difficulties when using the mouse and/or keyboard. So, there appears to be a disconnect between the findings in the medical/clinical literature relating to difficulty with discrete fine motor tasks and the use of fine motor skills for computer input (Feng et al., 2008; Feng, Lazar, Kumin, & Ozok, 2010; Hu, Feng, Lazar, & Kumin, 2011; Lazar, Kumin, & Feng, 2011). For instance, children and adults with Down syndrome can use the computer mouse for input much more effectively than would be expected, based on the medical/clinical literature.

Generally, visual motor skills, visual processing skills, and visual memory skills are documented in the literature as strong avenues for learning for people with Down syndrome, whereas auditory processing and auditory memory skills are found to be relatively weaker channels for learning (Bull, 2011; Chapman & Hesketh, 2000; Fidler, Most, & Philosky, 2009; Jarrold & Baddely, 2002; Pueschel, Gallagher, Zastler, & Pezzulo, 1987; Shott, Joseph, & Heithaus, 2001). Because the computer is often a visual medium, computer use has been found to be effective for communication and learning for people with Down syndrome (Burkhart, 1987; DeBruyne & Noecker, 1996; Meyers, 1988; Meyers, 1994; Romski & Sevcik, 1996; Steiner & Larsen, 1994).

Before our ongoing research into the human-computer interaction (HCI) of people with Down syndrome, there were no published research studies that focused specifically on the population of users with Down syndrome. Studies in the HCI literature have included people with Down syndrome in the broader category of “people with cognitive impairments,” without noting their specific strengths and challenges (Dawe, 2006; Hoque, 2008). Individuals on the autism spectrum and individuals with neurologically based impairments have previously been included in the same analysis group in research as individuals with Down syndrome. Because individuals with Down syndrome have visual learning strengths, it is important to look at their performance separately from those with other cognitive impairments. It is also important to investigate computer usage in this population using a variety of research methods, rather than only one research method, which may lead to unintended bias (Lazar, Feng, & Hochheiser, 2010). It is possible that people with Down syndrome perform better under some HCI research methods than others. With no history of using these research methods with this user population, it is impossible to tell at this point whether any such biases are present.

There are a number of recent examples of studies involving technology usage by individuals with Down syndrome. For instance, Kirijian and Myers (2007) reported a design case study in which people with Down syndrome took part in the design of online training modules. Another example was a survey study of 561 children and young adults with Down syndrome regarding computer usage (Feng et al., 2008; 2010). Another study reported on an ethnographic observation of 10 expert adult users with Down syndrome (Lazar, Kumin, & Feng, 2011). A further analysis examined the types of computer-related workplace tasks being performed by employed adults with Down syndrome (Kumin, Lazar, & Feng, 2012). A recent study used an experimental design that compared the effectiveness of a keyboard, a mouse, speech recognition, and word prediction for teens and young adults with Down syndrome (Hu et al., 2011). Although case study design, survey, ethnographic observation, and experimental methodology have been used to study computer use in the population, usability testing has not yet been employed as a method for investigating computer use in people with Down syndrome.

Our previous studies have investigated the use of several input techniques by individuals with Down syndrome, including keyboard, mouse, speech recognition, and word prediction (Feng et al., 2008; Hu et al., 2011; Lazar et al., 2011). Our current focus is on computer skills in adults and how these skills can be used in employment. Touch-screen and tablet computers are increasing in workplace usage, and many employers are even purchasing these devices for employees (such as the iPad, Samsung Galaxy, and Blackberry Playbook). These types of devices are also widely used by people in non-work related settings. Consequently, not being able to use multi-touch tablet computers could not only result in fewer employment opportunities, but could also result in social exclusion, because people often share pictures and movies using a tablet computer or other mobile touch-screen devices.

A high level of mouse skill has been documented in both adults and children with Down syndrome (Feng et al., 2008), but it is also worthwhile to investigate other forms of input devices, such as touch-screens. Theoretically, general touch-screen usage should be easier than mouse usage for individuals with Down syndrome, because a mouse is an indirect pointing input device, whereas a touch-screen is a direct pointing device, and therefore, less cognitive processing is needed (Greenstein & Arnaut, 1988). Also, when using a touch-screen for simple gestures (such as pointing, but not including multi-touch gestures), only one finger is used, versus the multiple fingers that are typically used for holding and guiding a mouse (Forlines, Wigdor, Shen, & Balakrishnan, 2007). In survey data from 2007 and 2008, 12.3% of children and young adults with Down syndrome were reported to be using touch-screens. This at first might seem low; however, it is important to note that touch-screen and tablet computing was much less prevalent in 2007, when the data were collected, before the introduction of the iPad. In the neurotypical community of users, a major increase in touch-screen usage during that same time period would also be expected (Feng et al., 2008).

Another aspect of touch-screen usage that is important to investigate is the on-screen (virtual) keyboard. Keyboard input can be challenging for many people with Down syndrome: while expert users with Down syndrome have some success with keyboard usage (Lazar et al., 2011), many more novice users only use one finger on one or both hands while typing, resulting in slow and error-prone keyboarding (Feng et al., 2008; 2010). It appears that having multiple keyboarding classes while growing up, in both K-12 education and post-secondary education, has a positive impact on keyboard typing skill level for adults with Down syndrome (Lazar et al., 2011). However, there is no current research on the use of touch-screen-based keyboards by people with Down syndrome. It is important to understand how people with Down syndrome can interact with a keyboard when it is only a visual keyboard on a touch-screen, without the tactile nature of a physical keyboard.

Methods

We decided that for the current investigation of using touch-screens for workplace-related tasks, a modified usability testing methodology would be the most appropriate approach. Usability testing, at a basic level, is when representative users perform representative tasks (Nielsen, 1993). And a typical focus of usability testing is finding and fixing flaws in an interface. This research study is designed to be a preliminary investigation of how users with Down syndrome could potentially utilize touch-screens for workplace tasks to obtain a sense of some of the potential challenges to effective use of tablet computers for this population and to investigate how usability testing involving people with Down syndrome could be effectively performed. Typically, a usability testing method is used to understand what improvements are needed in interfaces (or compare the effectiveness of different interfaces), whereas experimental design is utilized more often to study users themselves or interfaces (controlling one aspect and studying the other). The focus of this study is equally on understanding the users themselves and also understanding potential interface improvements. Usability testing is the most appropriate method to reach this goal for the following reasons:

- There is very limited research on computer usage by people with Down syndrome and none on touch-screen usage by people with Down syndrome. It is difficult to use experimental design for preliminary investigations like the current study when the relevant factors are not even completely identified. So we are interested in understanding the issue from multiple different viewpoints, with a more structured method than ethnography, but less structured or focused than experimental design. This study helps identify key factors that are worth further investigation through future experimental studies.

- Experimental studies usually require full control of one or more relevant factors. However, there were many factors in this study that would be impossible to control, such as the specific, personal email account used for the email tasks. In an experimental study, it would be expected for all participants to use exactly the same email account (e.g., Gmail or Windows Live). But for users with Down syndrome, it was important to use their own email and Facebook accounts, because security and privacy are major issues in the population of computer users with Down syndrome. Parents have serious discussions with their adolescent and adult children about these issues. Using a fictitious account (as is typically done in experimental design and often is done in usability testing) could be viewed by participants with Down syndrome as using someone else’s account, which would feel dishonest and wrong to them. Furthermore, using a participant’s personal accounts, when possible, increases the validity of a usability testing session by engaging the participants more deeply in the session (Zazelenchuk, Sortland, Genov, Sazegari, & Keavney, 2008). However, using each individual’s own account presents problems for a true experimental design. Each participant’s Facebook account would differ in the number of friends, the number of pictures posted, and whether the account uses the Facebook timeline feature. Every user could potentially have a different email account from a different provider (Gmail, Yahoo!, AOL, Comcast), each with a different interface.

- Because touch-screens and tablet computers differ so greatly, both in terms of their screen interface as well as the gesturing required, it would be hard to generalize many of the results from one tablet computer (such as the iPad) to another (such as the Samsung Galaxy). Our goal is primarily to understand usage of the iPad, not all tablet computer devices, and to explore whether adults with Down syndrome are able to use the iPad successfully. Only a few of the findings (related, for instance, to touch-screen-based keyboarding) could be generalized to other devices. Usability testing is similarly focused on understanding one interface, not on finding statistical differences and generalizing to many other interfaces, as is common for experimental design.

Inclusion criteria for participants:

- Be at least 18 years of age.

- Have the Trisomy 21 form of Down syndrome (the most prevalent form for 95% of the population).

- Have previous experience with computers and the Internet.

- Have a minimum of basic experience with touch-screen computers.

- Have an existing Facebook account and a Web-based email account that they could use for the study.

Social networking tasks:

- Use the Safari Web browser, go to www.facebook.com, and type in your email address and password.

- Search Facebook for the page for the National Down Syndrome Congress. Click to “like” the page.

- From your Facebook home page, under Messages, find a friend on Facebook (they don’t have to be logged on to Facebook right now) and send them the following message using Facebook: “What is your favorite restaurant? Mine is [user’s favorite restaurant was included here].”

- Find the most recent status update (recent activity) for any friend of yours on Facebook.

- Logout from your Facebook account.

Email tasks:

- Using the Safari Web browser, login to your email account, such as Gmail, Yahoo Mail, or AOL.

- Create an email message saying “Hope you have a good weekend, [researcher’s name was used here]!” and send it to [researcher’s email address was used here]. You can leave the subject line blank.

- Add a person to your email account address book/contact list. The person’s name is [particular name was used here], who is the head of public relations at [particular organization was used here], and her email address is [email address was used here].

- Using your address book/contact list, send a new message to [individual’s name was included here] (with no subject in the subject line) and have the message say, “Hope you have a good weekend, [name was used here]!”

Calendar tasks:

- What is the date of the World Down Syndrome Day (it is on the calendar)?

- Please select the day of your birthday on the calendar, and then “add event” with a title of “birthday party” starting at 12:00 p.m. and ending at 2:00 p.m., with a location of [user’s favorite restaurant was inserted here].

Price comparison tasks:

- Go to the barnesandnoble.com Web site. How much does it cost to purchase the new paperback book in English titled “Count Us In: Growing Up with Down Syndrome,” written by Jason Kingsley and Mitchell Levitz?

- Go to the amazon.com Web site. How much does it cost to purchase the new paperback book in English titled “Count Us In: Growing Up with Down Syndrome,” written by Jason Kingsley and Mitchell Levitz?

- Go to the staples.com Web site. How much does it cost to purchase the Olympus VN-8100PC Digital Voice Recorder?

- Go to the officedepot.com Web site. How much does it cost to purchase the Olympus VN-8100PC Digital Voice Recorder?

References

- Bruni, M. (1998). Fine motor skills in children with Down syndrome: A guide for parents and professionals. Bethesda, MD: Woodbine House.

- Bull, M. (2011, August 1). Health supervision for children with Down syndrome. Pediatrics, 128(2), 393-406.

- Burkhart, L. (1987). Using computers and speech synthesizers to facilitate communication interaction with young and/or severely handicapped children. Wauconda, IL: Don Johnston.

- Chapman, R., & Hesketh, L. (2000). Behavioral phenotype of individuals with Down syndrome. Mental Retardation and Developmental Disabilities Research Reviews, 6(2), 84-95.

- Dawe, M. (2006). Desperately seeking simplicity: How young adults with cognitive disabilities and their families adopt assistive technologies. In Grinter, R., Rodden, T., Aoki, P., Cutrell, E., Jeffries, R., & Olson, G. (Eds.), Proceedings of the SIGCHI conference on Human Factors in computing systems (CHI ’06; pp. 1143-1152). New York, NY, USA: ACM.

- DeBruyne, S., & Noecker, J. (1996). Integrating computers into a school and therapy program. Closing the Gap, 15(4), 31-36.

- Dumas, J., & Loring, B. (2008). Moderating usability tests: Principles & practices for interacting. San Francisco, CA: Morgan Kaufmann.

- Feng, J., Lazar, J., Kumin, L., & Ozok, A. (2008). Computer usage by young individuals with Down syndrome: An exploratory study. Proceedings of the 10th international ACM SIGACCESS conference on Computers and accessibility (Assets ’08; pp. 35-42). New York, NY, USA: ACM.

- Feng, J., Lazar, J., Kumin, L., & Ozok, A. (2010, March). Computer usage by children with Down syndrome: Challenges and future research. ACM Transactions on Accessible Computing, 2(3), 35-41.

- Fidler, D., Most, D., & Philosky, A. (2009). The Down syndrome behavioural phenotype: Taking a developmental approach. Down Syndrome Research and Practice, 37-44. doi:10.3104/reviews.2069.

- Findlater, L., Wobbrock, J., & Wigdor, D. (2011). Typing on flat glass: Examining ten-finger expert typing patterns on touch surfaces. Proceedings of the 2011 annual conference on Human factors in computing systems (CHI; pp. 2453-2462). Vancouver, BC, Canada: ACM.

- Forlines, C., Wigdor, D., Shen, C., & Balakrishnan, R. (2007). Direct-touch vs. mouse input for tabletop displays. Proceedings of the SIGCHI conference on Human factors in computing systems (CHI ’07; pp. 647-656). New York, NY, USA: ACM.

- Greenstein, J., & Arnaut, L. (1988). Input devices. In M. Helander (Ed.), Handbook of human-computer interaction (pp. 495-516). Holland: Elsevier Science Publishers.

- Hoque, M. (2008). Analysis of speech properties of neurotypicals and individuals diagnosed with autism and down. Proceedings of the 10th international ACM SIGACCESS conference on Computers and accessibility (Assets ’08; pp. 311-312). New York, NY, USA: ACM.

- Hu, R., Feng, J., Lazar, J., & Kumin, L. (2011, November). Investigating input technologies for children and young adults with Down syndrome. Universal Access in the Information Society. doi:10.1007/s10209-011-0267-3.

- Jarrold, C., & Baddely, A. (2002). Short term memory in Down syndrome: Applying the working memory model. Down Syndrome Research and Practice, 7(1), 17-23.

- Kirijian, A., & Myers, M. (2007). Web fun central: Online learning tools for individuals with Down syndrome. In J. Lazar (Ed.), Universal usability: Designing computer interfaces for diverse users (pp. 195-230). Chichester, UK: John Wiley & Sons.

- Kumin, L., Lazar, J., & Feng, J. (2012, June). Expanding job options: Potential computer-related employment for adults with Down syndrome. ACM SIGACCESS Accessibility and Computing (103), 14-23.

- Lazar, J. (2006). Web usability: A user-centered design approach. Boston: Addison-Wesley.

- Lazar, J. (Ed.). (2007). Universal usability: Designing computer interfaces for diverse user populations. Chichester, UK: John Wiley & Sons.

- Lazar, J., Kumin, L., & Feng, J. (2011). Understanding the computer skills of adult expert users with Down syndrome: An exploratory study. Proceedings of the 13th international ACM SIGACCESS conference on Computers and accessibility (ASSETS ’11; pp. 51-58). New York, NY, USA: ACM.

- Lazar, J., Feng, J., & Hochheiser, H. (2010). Research methods in human-computer interaction. Chichester, UK: John Wiley & Sons.

- Lazar, J., Olalere, A., & Wentz, B. (2012). Investigating the accessibility and usability of job application Web sites for blind users. Journal of Usability Studies, 7(2), 68-87.

- McGuire, D., & Chicoine, B. (2012). Mental wellness in adults with Down syndrome. Bethesda, MD: Woodbine House.

- Meiselwitz, G., & Chakraborty, S. (2010, October 28-31). Exploring the connection between age and strategies for learning new technology related tasks. Information Systems Education Journal, 9(4), 55-62.

- Meyers, L. (1988). Using computers to teach children with Down syndrome spoken and written language skills. In L. Nadel (Ed.), The Neurobiology of Language. Cambridge, MA: M.I.T. Press.

- Meyers, L. (1994). Access and meaning: The keys to effective computer use by children with language disabilities. Journal of Special Education Technology, 12(3), 257-275.

- Nielsen, J. (1993). Usability engineering. Boston: Academic Press.

- Pueschel, S., Gallagher, P., Zastler, A., & Pezzulo, J. (1987). Cognitive and learning processes in children with Down syndrome. Research in Developmental Disabilities, 8(1), 21-37.

- Romski, M., & Sevcik, R. (1996). Breaking the speech barrier: Language development through augmented means. Baltimore, MD: Paul H. Brookes.

- Savelsbergh, G., van der Kamp, J., Ledebt, A., & Planinsek, T. (2000). Information-movement coupling in children with Down syndrome. In D. J. Weeks, R. Chua, & D. Elliott (Eds.), Perceptual-Motor Behavior in Down Syndrome (pp. 251-276). Champaign, IL: Human Kinetics.

- Shott, S. R., Joseph, A., & Heithaus, D. (2001). Hearing loss in children with Down syndrome. International Journal of Pediatric Otorhinolaryngology, 61(3), 199-205.

- Steiner, S., & Larsen, V. (1994). Integrating microcomputers into language intervention. In K. Butler (Ed.), Best Practices II: The Classroom as an Intervention Context (pp. 18-31). Gaithersburg, MD: Aspen Publishers.

- Wishart, J. G. (1993). Learning the hard way: Avoidance strategies in young children with Down syndrome. Down Syndrome Research and Practice, 1(2), 47-55.

- Zazelenchuk, T., Sortland, K., Genov, A., Sazegari, S., & Keavney, M. (2008). Using participants’ real data in usability testing: Lessons learned. Proceedings of the ACM Conference on Human Factors in Computing Systems (CHI; pp. 2229-2236). Florence, Italy: ACM.

Pilot Sessions

Two pilot sessions took place before the research method was finalized. First, an observation was made of a 28-year-old female with Down syndrome, who showed us how she uses technology involving touch-screens. There was no formal data collection or methodology, only an observation of her usage patterns. After this observation, the task list was drafted and a methodology was developed. A second, more formal pilot session was then conducted, in which a 23-year-old male with Down syndrome attempted to perform the tasks on the initially developed task list. Modifications were made to the wording of the task list as well as to some of the tasks themselves. For instance, one of the original tasks was to have the participant accept a “friend request” on Facebook, but we learned that, because of the high privacy settings that many people with Down syndrome have, they often cannot receive friend requests without parental permission. We also added a “warm-up task” to help the participants get comfortable with the researchers and with the iPad. The warm-up task was a weather-related task in which participants had to find the local temperature using a Web site. Also, a question about whether any computer classes had been taken was added to the demographic questions at the beginning of the data collection.

There are different approaches to moderator involvement in usability testing. For some types of usability testing, the researchers rarely assist or speak to the participants during the usability testing session. In other types of usability testing, participants are verbally encouraged by the moderators or given a hint to allow them to move to the next step in a task, but at no point are told what to do or how to accomplish the task. In yet other forms of usability testing involving one large task with multiple sub-tasks, if a participant cannot perform a task and asks for help, the researchers will perform an “intervention” that is well-documented in their notes (Lazar, Olalere, & Wentz, 2012). Based on our experience from the pilot studies, in the current research project, the participants received verbal encouragement from us and infrequently received a hint for how to move forward one step. However, at no time did we tell the participants specifically what steps to take or perform an intervention (Dumas & Loring, 2008). Participants were encouraged to think aloud as they were attempting their tasks, but the level of think-aloud varied depending on the personality of the participants. While parents or support people for the individuals were allowed to stay in the research room if they preferred (some did and some didn’t), at no point were they allowed to tell the participants how to do anything, with one exception: when participants had trouble remembering their passwords (as mentioned in later sections of the paper), parents or support people were allowed to provide the passwords. The sessions typically lasted between one and three hours, usually with a short break half-way through the tasks.

Participants

Ten participants took part in the study. All participants were required to meet the following criteria:

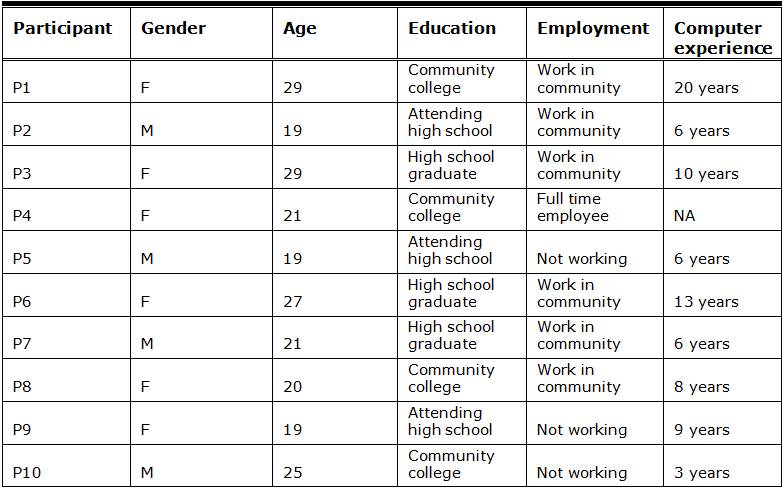

Demographic questionnaires were sent to the participants or their parents prior to the study so that they could have sufficient time to think and answer the questions. The demographic information of the 10 participants is listed in Table 1. Note that students receiving special education services in the US can attend high school until 21 years of age, which explains a number of participants who are 19 years old and in high school. The participants ranged from 19-29 years old (average age: 22.9 years old), and there were four men and six women.

Table 1. Demographic Information for the 10 Participants

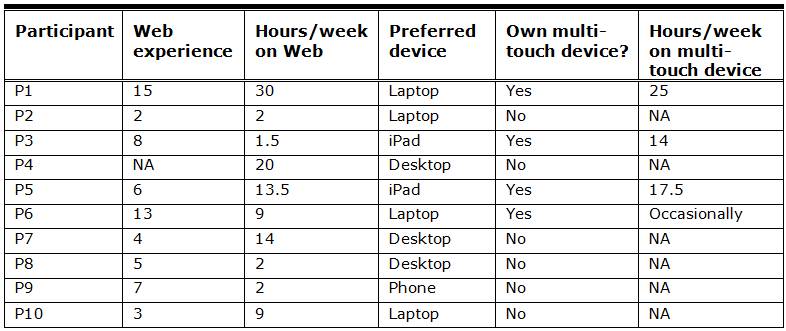

Table 2 lists the computer experience for the 10 participants. For instance, only four of the participants own a multi-touch device, even though all of the participants have used multi-touch devices before. Experience with the Web ranged from 2 to 15 years, and time spent on the Web ranged from 2 to 30 hours per week. Some participants preferred using an iPad, some preferred a laptop computer, and others preferred a desktop computer.

Table 2. Computer Experience of the 10 Participants

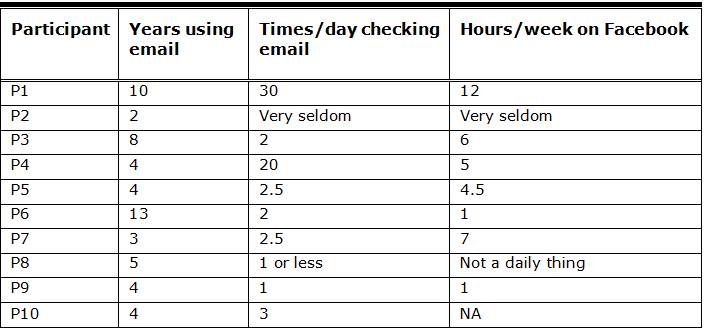

Table 3 lists the level of experience with email and Facebook. As is typical for participants with Down syndrome, there is a wide variance of experience levels. Some of the participants had only 2 years of experience with email, while other participants reported as much as 13 years of experience. Some participants reported checking email once a day, while one participant reported checking email as often as 30 times a day. One participant stated that they very seldom check Facebook, while another participant reported spending 12 hours a week on Facebook (which would average out to at least an hour and a half per day).

Table 3. Participant Experience with Email and Facebook

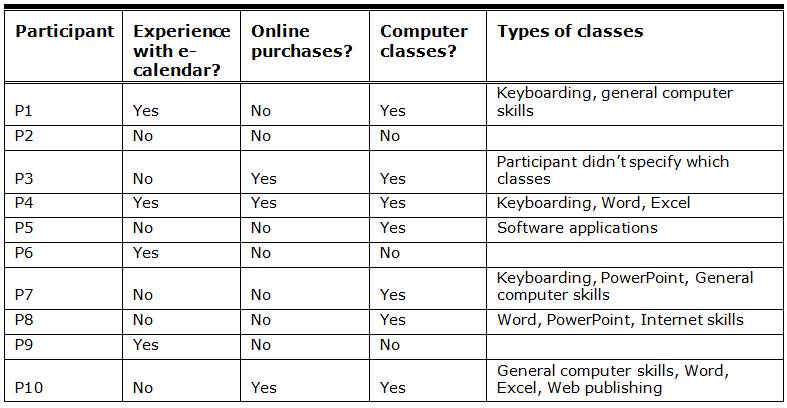

Table 4 reports the participant experience with applications such as electronic calendaring, purchasing items online, and computer classes. For instance, seven of 10 participants reported having taken formal computer classes, which is common for non-introductory computer users with Down syndrome. To fulfill all of the inclusion requirements in this study (experience with touch-screens and having one’s own email and Facebook accounts), it is likely that a participant would have taken a formal computer class. While these participants are by no means expert users (the Lazar et al., 2011 study on expert users with Down syndrome required a much higher level of skill and weekly usage), the participants in this study were not likely to be introductory users. This was not a random sample of people with Down syndrome; rather, this was a group of people who were interested in the topic of tablet computers and who met the inclusion requirements. Random samples of computer users with disabilities are almost impossible to implement due to the lack of directories of users with specific disabilities and the availability and/or willingness of people with disabilities to take part in research studies (Lazar et al., 2010). In any HCI study involving people with disabilities, it is likely that people who choose to take part in a research study have an interest in technology. Therefore, this study is likely representative of people with Down syndrome who are interested in technology, not representative of all people with Down syndrome.

Only four of the 10 participants had previously used an electronic calendar. Of the 10 participants, three had independently purchased something online, although six of the participants had previously done comparative price checking on various Web sites.

Table 4. Participant Experience with Computers, Online Shopping, and Computer Classes

Tasks

Participants were asked to perform tasks in five different categories on the iPad. Five categories of tasks that are typically important for computer usage in the workplace were selected: social networking, email, calendaring/scheduling, price comparison, and basic text entry/note-taking. Social networking and email are forms of communication, and social networking has had increasing usage in the workplace. Updating a corporate social networking site is often a task assigned to certain employees. Calendaring and price comparisons are forms of information retrieval. Calendar usage and price comparison are possible work tasks that might be requested of an individual in an office assistant role. While the challenges and successes of people with Down syndrome related to keyboarding have been previously documented (Feng et al., 2008; 2010), there is no documented data on the use of a touch-screen-based keyboard interface (virtual keyboard) for text entry/note-taking tasks (as discussed in previous sections), and this was an important part of the data collection. The tasks under each category are listed below.

Social networking tasks

Email tasks

Calendar tasks

Price comparison tasks

Participants were asked to compare the price of each pair and point out which Web site offers a lower price.

Text entry/note-taking task

There were three slightly different versions of the note-taking text used. P1 and P2 used the following text:

The weather will get much colder, so I need to remember to wear my hat and gloves. I’m going to meet my friend at 4:30 p.m. to go to the new movie “War Horse.” Then I need to check the bus schedule to see if there is a different schedule on Lincoln’s birthday, which is Sunday, February 12th.

P3, P4, P5, and P6 used the following text, which was modified after the date for Lincoln’s birthday had passed to avoid confusion, replacing the date with Mother’s Day:

The weather will get much colder, so I need to remember to wear my hat and gloves. I’m going to meet my friend at 4:30 p.m. to go to the new movie “War Horse.” Then I need to check the bus schedule to see if there is a different schedule on Mother’s Day, which is Sunday, May 13th.

P7, P8, P9, and P10 used the following text, which was again modified to avoid confusion about the cold weather because it was now warm, and the word “new” removed because “War Horse” was no longer a new movie:

The weather will get much warmer, so I need to remember to wear my shorts. I’m going to meet my friend at 4:30 p.m. to go see the movie “War Horse.” Then I need to check the bus schedule to see if there is a different schedule on Mother’s Day, which is Sunday, May 13th.

To an outsider not familiar with people with Down syndrome, it may seem as if there would be no need to change the text. However, people with Down syndrome are often concerned with the accuracy and reality of details, so they might object to writing text about an upcoming date that in fact had already passed. In later sections, an example is presented where P10 refused to enter an event into a calendar because it disagreed with the participant’s perception of what the data should be. It is important to note that the original text entered by P1 and P2 had a Flesch-Kincaid Reading Ease score of 80; the text typed by P3, P4, P5, and P6 had a score of 90.7; and the third version typed by P7, P8, P9, and P10 had a score of 91.1. The Flesch-Kincaid score is a common way to assess the readability of text, and a higher score means that the text is easier to read.
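As a rough illustration of how a reading ease score of this kind is computed, the sketch below implements the standard Flesch Reading Ease formula from raw counts. It assumes that the scores reported above came from this standard formula (the tool used is not stated here), and the function name and the example counts are hypothetical, not taken from the study texts.

```python
def flesch_reading_ease(total_words: int, total_sentences: int, total_syllables: int) -> float:
    """Standard Flesch Reading Ease score; higher values mean easier text."""
    if total_words <= 0 or total_sentences <= 0:
        raise ValueError("word and sentence counts must be positive")
    words_per_sentence = total_words / total_sentences
    syllables_per_word = total_syllables / total_words
    return 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word


# Hypothetical counts for illustration only (not the study's note-taking text).
score = flesch_reading_ease(total_words=60, total_sentences=3, total_syllables=75)
print(round(score, 1))
```

Shorter sentences and fewer syllables per word both raise the score. A small wording change, such as replacing a multi-syllable date, can therefore shift the score between versions of an otherwise similar passage.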

Procedure

All participants received the instructions and tasks on paper, but also had the instructions and tasks read to them before they started each task. After participants completed each category, they were asked to rate the difficulty of the tasks using a 5-point Likert scale. Based on the pilot studies (and due to the visual strengths of people with Down syndrome), a visual Likert scale was created (see Figure 1).

Figure 1. Visual representation of the Likert scale used for this study

Results

The following sections discuss the results for the social networking, email, calendar, price comparison, and text entry/note taking tasks.

Social Networking

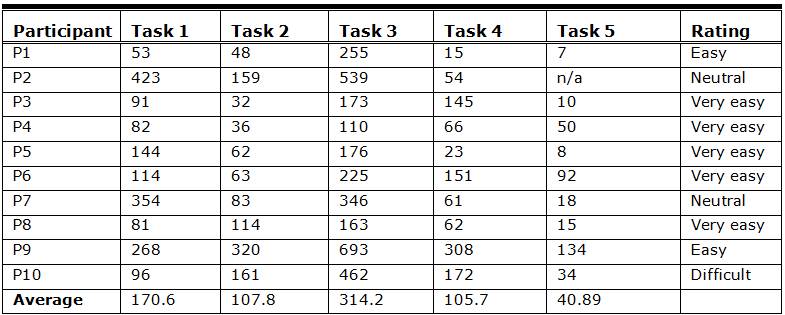

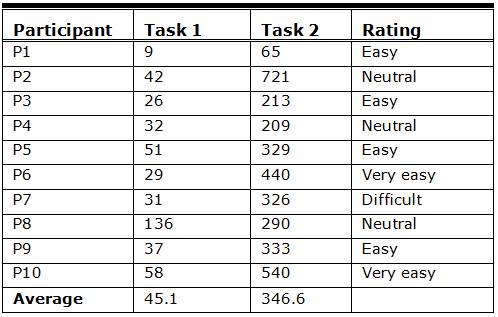

All 10 participants were able to complete the Facebook tasks. Some users required no assistance, and some users required encouragement or hints during one or more tasks. Seven out of 10 participants rated this category of tasks as “easy” or “very easy.” The time each participant spent on five tasks and the ratings are listed in Table 5. The results for each task will be explained more fully below in the paragraph for each task.

Table 5. Time (in Seconds) and Participant Ratings for Social Networking Tasks

Task 1: Login to Facebook

Participants took an average of approximately 3 minutes to log in to their Facebook account. Most of the time was spent entering the email address and password. Most participants knew how to use the basic keyboard, but many of them did not know how to switch to (or did not feel comfortable with) the uppercase and number modes on the virtual keyboard. For example, P7 kept entering the wrong password, and eventually the researchers discovered that P7 had entered in lowercase all of the letters that should have been capitalized. P7 did not realize the error until the researchers suggested checking that the password was entered with the proper uppercase and lowercase letters (note that, because the passwords were for the participants’ own personal accounts, and because the password is often shown on the screen as ********, it was often not possible for the researchers to determine why a password was not entered properly). Because of the password problem, P7 took almost 6 minutes to log in to Facebook. Most participants noted that they saved their email address and password on their home computer, so logging in with a password was typically not an issue, because the passwords were automatically entered when using their home computer. This also means that the participants often did not have repeated practice entering their password each time they logged in.

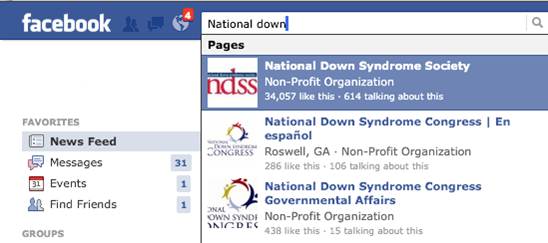

Task 2: Search for a Facebook page

Participants took an average of less than 2 minutes to search for and locate the National Down Syndrome Congress (NDSC) page. Some participants, such as P9, spent longer on this task than others, primarily because they typed very slowly. Figure 2 shows the search area on the Facebook home page with auto-suggested results.

Figure 2. Screenshot of the auto-suggested search results on the Facebook interface

Task 3: Send a message

The send message task took longer, primarily because participants needed to type in (compose) and send the message. An average of 5 minutes was spent on this task, but there was a large variation in time among participants. The variability appeared to be partially related to familiarity with the iPad. The study criteria required that users be familiar with touch-screen use, but not necessarily with the iPad. Fast participants (such as P4) spent less than 2 minutes typing and sending the message. Some participants (such as P2 and P9) spent 9 minutes or longer on this task alone. Some participants were not familiar with the interface for writing a message on Facebook and could not figure out how to compose a new message. For example, P2 sent the message by replying to an existing message rather than by composing a new one. Behaviors and personality characteristics, as well as learning strategies, also affected the time spent. P9 spent most of the time (nearly 10 minutes) trying to decide who to send the message to, despite receiving clear instructions and encouragement from us that any Facebook friend could be chosen. Some participants asked questions if they were having difficulties, and others used trial and error.

Throughout the entire data collection process, participants demonstrated a variety of typing styles. Most participants used multiple fingers on both hands, but in different ways. For example, P2 used both second and third fingers on both the left and right, as well as a fifth finger infrequently; P5 used the left and right second fingers together, and infrequently used the third fingers; and P4 and P6 used all fingers on both hands from the start. Some participants (such as P3) started out using only the left index finger and then, as time went on, increased their use of other fingers. Some participants seemed to have different roles for their fingers (P7 typed using the left and right second fingers, but used the third fingers only for scrolling and the thumbs only for hitting the spacebar). One participant (P9) used only the right hand on the iPad, and at no point used the left hand. More detailed discussion on typing patterns is presented in the section on note taking (see the Text Entry/Note Taking section).

Task 4: Identify a friend’s status

Participants took an average of less than 2 minutes to identify the status of a friend. The majority of the participants completed this task very easily. P9 again spent a long time on this task because of difficulty deciding which friend to pick.

Task 5: Logout

Logging out of Facebook took an average of 40 seconds. The majority of the participants directly went to the right link and logged out. Two participants clicked the “X” (Exit) button on the right corner of the window to close the browser.

Email

A majority of the 10 participants were able to complete the email tasks. Participants used their personal Web-based email accounts for these tasks because it would have been confusing to users with Down syndrome to be asked to use an email account that was not actually theirs. Because the email tasks have some similarity across email applications, the findings related to email usage do have value. Participants experienced more problems with email tasks than with Facebook tasks. One problem that was encountered was that the Gmail and Yahoo! apps on the iPad do not provide a method to add a contact and then use that contact’s email address to address a new message. Three participants were technically not able to complete the task related to the address book/contact list because of the lack of this functionality in the Gmail and Yahoo! apps. Six out of 10 participants rated this category of tasks as “easy” or “very easy.” The time each participant spent on the four tasks and the ratings are listed in Table 6. Note that in some cases, Web sites were used, and in other cases, participants were automatically re-routed from the Web sites to the iPad versions, which was outside the control of the researchers and the participants.

Table 6. Time (in Seconds) and Participant Ratings for Email Tasks

Task 1: Login to email application

Most participants used the same email address and password to log in to both their Facebook and email accounts. This task was very similar to the login task for the Facebook account. Participants took an average of approximately 2 minutes to complete this task. This was, on average, a minute shorter than their first login to Facebook.

Task 2: Create a message

Participants took an average of 4 minutes to create (compose) and send the message. Some participants had a hard time finding the “create message” icon on the screen. The create message icon on the Gmail app was difficult to understand and associate with the create/compose action. Figure 3 shows a screenshot of the icon on the Gmail app that some users did not find intuitive. The tablet version of the Yahoo! Mail interface used a similar icon for the create/compose action. One participant (P2) refused to complete this task because the user only read emails and had never sent or responded to any emails. The user’s inbox contained 695 messages, with no replies. This is an interesting case because information flowed only one way, and two-way communication had never been established. This participant skipped the remaining two email tasks. In general, the participants preferred to respond to existing emails, rather than create new email messages.

Figure 3. Screenshot of the create/compose link on the Gmail iPad interface

Task 3: Add contact to address book/contacts

In addition to P2, three other participants were not able to complete the task related to the address book/contact list because the Gmail and Yahoo! apps on the iPad do not provide this functionality. The six participants who completed this task spent an average of 3 minutes on it. Some participants had difficulty locating the address book/contact list icon.

Task 4: Send email to contact in address book/contacts

Six participants completed this task and took an average of approximately 3 minutes on this task. It was most likely completed easily because the participants had just entered the contact information in the previous task and were able to click it right away to start the message.

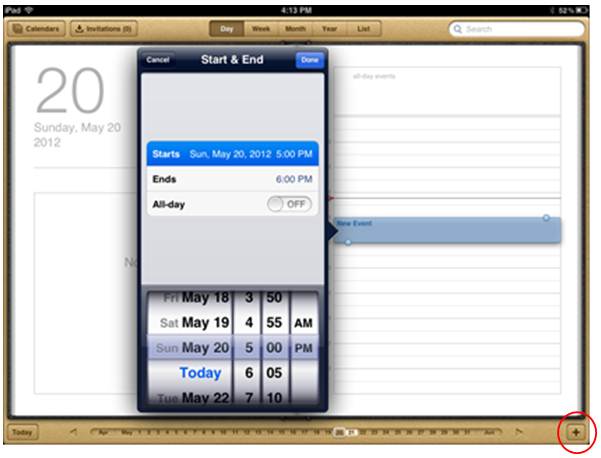

Calendar

Four participants (P1, P4, P6, and P9) had previous experience using electronic calendars. Participants were asked to locate and create an event on the iPad calendar app. Most participants easily located an existing event, but had more difficulty creating the event. Six out of 10 participants rated this category of task as “easy” or “very easy.”

Task 1: Locate an event

Nine out of 10 participants were able to locate the event on the calendar in less than 1 minute. P8 spent 2 minutes on this task because the participant spent some time first in a different calendar month. After a while, P8 was able to switch to the year view, select the correct month, and finally the correct day. Some participants used the Calendar app’s search feature to locate the event.

Task 2: Create an event

Creating an event seemed to be challenging for most of the participants. Only the first participant completed this task easily in about a minute. The other nine participants spent an average of approximately 6 minutes on this task. Some participants had problems locating the “add an event” icon (a plus sign located in the lower right hand corner of the screen). After they found it, the sensitive touch-screen interface sometimes removed/closed the box from the screen. For this task, the participants needed to specify the start time, end time, and the location of the event. Some participants had problems with the scrolling gesture in order to select the appropriate time. Figure 4 shows the time selection interface that required the scrolling gesture. Some participants accidentally tapped off the dialog box, which closed the box, forcing them to reopen, and restart the task. This happened multiple times for some participants, and it took them a substantial amount of time to figure out how to do it right.

Wishart (1993) commented on the use of social skills and charm to avoid doing tasks by people with Down syndrome. P10 took 540 seconds to create the event and was a prime example of using charm and social skills to avoid a task. The task involved creating a birthday party event on the calendar, and it took a long time, not because of difficulty with the interface, but because the participant was negotiating for a longer party. P10 did not think from noon-2 p.m. was long enough for a birthday party and refused to perform the task, despite our indication that this was a fictitious birthday party. The participant would only perform the task once we agreed to a longer time for the party.

Figure 4. Screenshot of the time selection interface requiring the scrolling gesture (also, note the plus sign, very small, in the lower right hand of the iPad screen)

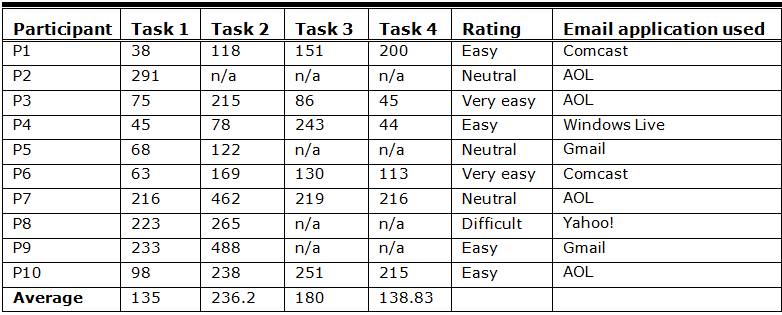

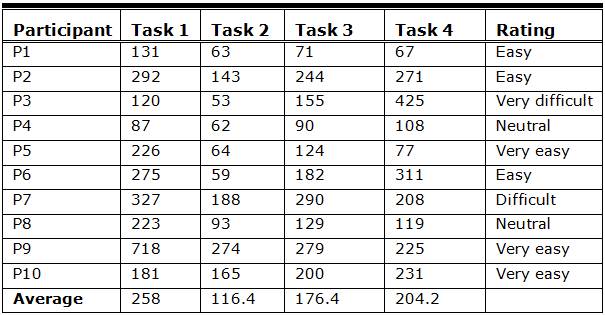

Price Comparison

All participants were able to complete the price comparison tasks successfully and within a reasonable amount of time (see Table 8 for time performance for the price comparison tasks). Six out of 10 participants rated these tasks as “easy” or “very easy.”

Table 8. Time (in Seconds) and Participant Ratings for Price Comparison Tasks

Tasks 1 and 2: Find and compare a book price from two different Web sites

Participants spent an average of more than 4 minutes to find the book on the Barnes and Noble Web site (Task 1) and less than 2 minutes on the Amazon Web site (Task 2). All participants spent less time on the Amazon Web site than the Barnes and Noble Web site, which may be partly due to the longer URL of the Barnes and Noble site. Some participants made errors when entering the URL of the Barnes and Noble Web site, and some participants used Google search to locate the Barnes and Noble Web site rather than typing the URL. It also could be related to familiarity with the task, as there could have been a learning effect. At the Barnes and Noble Web site, participants potentially could have learned how to successfully complete the task. At the second Web site, the Amazon.com site, the participants could have carried over and generalized their learning of the price comparison task. They were also familiar with the title of the book that they were searching for by the time they were typing in that title at the Amazon.com site. Figure 5 shows a screenshot of the Google search feature built into the Safari Web browser that was used by some participants to find the URL of the Web sites.

Figure 5. Screenshot of the auto-suggest Google search feature built into the Safari app

Some participants were able to take advantage of the “auto-suggest” function in the iPad search box, which saved them some time when using Google. Auto-suggest worked not only on general searching for the sites, but sometimes worked on the search engines within the product Web sites. For instance, Figure 6 shows the auto-suggest function working on the Amazon Web site (auto-suggest worked more consistently on the Amazon and Barnes and Noble sites than on the Staples and OfficeDepot sites, as discussed in later sections). Other participants did not take advantage of that function and typed in the entire name of the book with authors’ names. Once the search results appeared on the screen, some participants had problems identifying the specific book and took a substantial amount of time to find the specific book.

Figure 6. Screenshot of the auto-suggest search feature on Amazon.com

Tasks 3 and 4: Find and compare a digital recorder price from two different Web sites

Participants spent an average of 204.2 seconds (over 3 minutes) to find the digital recorder on the OfficeDepot Web site and 176.4 seconds (just under 3 minutes) on the Staples Web site. One participant wasn’t able to locate the search box on either Web site and browsed the menu to look for the recorder, which took much longer than searching. That participant was not able to find the item on the OfficeDepot Web site. Auto-suggest did not consistently work on the two Web sites used for the digital recorder price task, so that was an unpredictable factor. Note that the fourth task (OfficeDepot) took, on average, more time than the third task (Staples), possibly meaning that there were no learning effects, or that the Staples site was easier to use than the OfficeDepot site, or that the unpredictable appearance of auto-suggest played a role. Regardless of which site, which task, or whether auto-suggest worked on the site, there was generally a high level of task success for the price comparison tasks.

Text Entry/Note Taking

Participants spent an average of just under 8 minutes (473.8 seconds) to enter the text using the touch-screen virtual keyboard. All participants had the text printed on a handout. Figure 7 shows a screenshot of the iPad virtual keyboard. The text entry task was ranked as the easiest among the five categories, with nine out of 10 participants rating this task as “easy” or “very easy.”

Figure 7. Screenshot of the iPad virtual keyboard

Performance statistics

To compile the performance statistics, four researchers independently counted the total number of words entered by the participants. Regarding error coding, one researcher examined the text first and proposed a code list with eight error categories. Then four researchers independently coded the errors in the text entered by each participant. The word count and error coding of all researchers were compared by one researcher, who identified all inconsistent codes, re-checked the original text, and fixed incorrect coding items. The final coding list contains nine error categories, which are listed in Table 10.

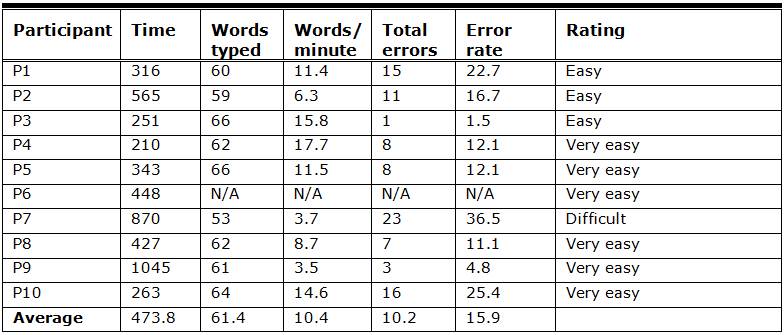

Table 9 lists the typing time, total number of words entered, speed (words per minute), total number of errors, error rate, and participant ratings for text entry tasks. The text entered by P6 was not saved due to technical problems, so the statistics are based on nine participants. Participants entered 10.4 words per minute on average. There was a large variance in text entry speed among the participants. The slowest participant (P9) entered only 3.5 words per minute on average, while the fastest participant (P4) entered 17.7 words per minute on average. The error rates among the participants also varied substantially, ranging from 1.5% to 36.5%.
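The speed and error rate measures can be sketched as follows. This is a minimal illustration, not the procedure used in the study: it assumes that the error rate is the number of coded errors divided by the number of words entered (the denominator is not defined here), and the function names and input values are hypothetical.

```python
def words_per_minute(words_entered: int, seconds: float) -> float:
    """Text entry speed: words entered divided by elapsed minutes."""
    if seconds <= 0:
        raise ValueError("elapsed time must be positive")
    return words_entered / (seconds / 60.0)


def error_rate_percent(errors: int, words_entered: int) -> float:
    """Error rate as a percentage of words entered (assumed denominator)."""
    if words_entered <= 0:
        raise ValueError("word count must be positive")
    return 100.0 * errors / words_entered


# Hypothetical participant: 58 words typed in 240 seconds with 2 coded errors.
print(words_per_minute(58, 240))
print(round(error_rate_percent(2, 58), 1))
```

Because both measures share the word count, a participant who types slowly but carefully can show a low speed together with a low error rate, as the comparison of individual participants later in this section suggests.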

Table 9. Time (in Seconds), Total Number of Words Entered, Words per Minute, Total Number of Errors, Error Rate, and Participant Ratings for Text Entry Tasks

Nine types of errors were identified (see Table 10). The most common errors were incorrect capitalization (32.6%), followed by missing punctuation (26.1%), misspelled words (12.0%), and missing space (7.6%). Missing word, extra word, and extra punctuation each contributed 5.4% of the total number of errors. The least common errors were extra space (3.3%) and incorrect punctuation (2.2%).
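A percentage breakdown of this kind can be derived from a flat list of coded errors. The sketch below shows one way to do that; the function name and the sample codes are hypothetical and do not reproduce the study’s data.

```python
from collections import Counter


def error_percentages(coded_errors: list[str]) -> dict[str, float]:
    """Share of each error category, as a percentage of all coded errors."""
    counts = Counter(coded_errors)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders categories from most to least frequent.
    return {category: round(100.0 * n / total, 1) for category, n in counts.most_common()}


# Hypothetical coded errors for illustration only.
sample = (
    ["incorrect capitalization"] * 3
    + ["missing punctuation"] * 2
    + ["misspelled word"]
)
print(error_percentages(sample))
```

Rounding each category separately means the percentages may not sum to exactly 100.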

Table 10. Types of Errors and the Percentage of Occurrence

Although the error rates of the participants seem to be high, the majority of the errors were minor ones that were unlikely to affect comprehension of the text, such as incorrect capitalization and missing punctuation, which together account for approximately 60% of the errors. Some of the errors might even be considered common among neurotypical users, especially when emailing or text messaging, such as using the @ sign instead of the word “at” and not using appropriate capitalization.

Some participants were able to enter the text with both high speed and high accuracy. For example, P3 entered the text quickly (15.8 words per minute) with only one error. The punctuation was correct throughout, with the sole exception of the colon. All letters were capitalized as needed, with appropriate spacing. The text entered by P3 is shown below:

The weather will get much colder, so I need to remember to wear my hat and gloves. I’m going to meet my friend at 4;30 p.m. to go to the new movie „War Horse.“ Then I need to check the bus schedule to see if there is a different schedule on Mother’s Day, which is Sunday, May 13th.

P2 missed all but the final period in the text yet entered most other punctuation marks accurately, including the colon and apostrophes (see below).

The weather will get much colder, so I need to remember to wear my hat and gloves I’m going to meet my friend at 4:30pm to go to the new movie war horse then I need to check the bus schedule to if there is a different schedule on Lincoln’s birthday, which is Sunday , February 12th.

It is clear that there is a gap between the ability of P7 and that of the remaining eight participants. P7 is the only participant who typed very slowly (3.7 words per minute) with a very high error rate (36.5%, the highest among all participants). P9 is similar to P7 in efficiency, but typed the text with only three errors, which is the second lowest among all participants.

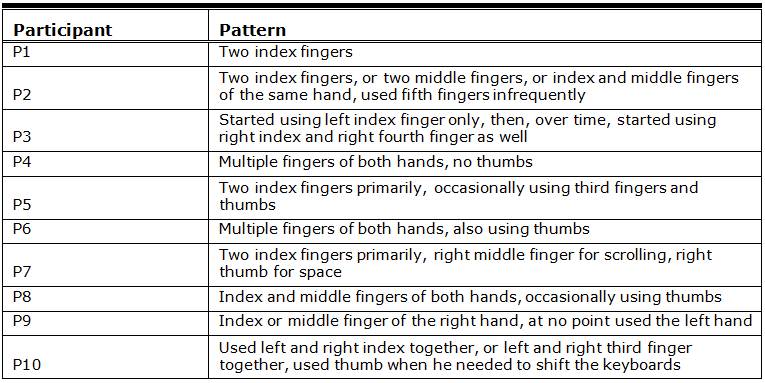

Typing patterns

Table 11 summarizes the typing patterns of the 10 participants. Five participants (P1, P2, P5, P7, and P10) typed primarily using two fingers at the same time. Three participants (P4, P6, and P8) used multiple fingers on both hands at the same time. One participant (P9) used only one finger when typing. Another participant (P3) changed typing pattern over time (starting with only the left index finger, but adding fingers as the usability evaluation went on); P3 primarily used the left index finger but still typed quickly on the virtual keyboard (15.8 words per minute). P6 and P8 both used multiple fingers on both hands to type at the same time, but their typing speed was below the group average of 10.4 words per minute.

It is possible that there might be a disconnect between the typing pattern using the traditional physical keyboard and the touch-screen. Both P1 and P3 participated in a previous study examining the use of traditional keyboard and mouse for data entry. In that study, they both typed using multiple fingers on both hands. However, they only used one or two fingers when typing on the touch-screen.

Table 11. Typing Patterns of the 10 Participants

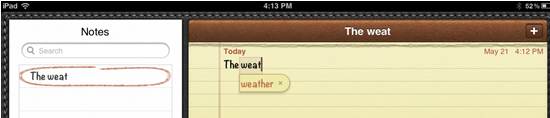

The iPad Notes app had an auto-suggest feature for words, but it was difficult for the participants to understand how to use the feature, so most participants ignored the suggestions. Participants assumed that the auto-suggested word could be tapped or selected on the touch-screen like many other auto-suggested features of applications; however, this feature only works if the user selects the space key when the word appears. Figure 8 shows an example of an auto-suggested word.

Figure 8. Example of an auto-suggested word on the iPad Notes app

P7 ranked the task as difficult; P1, P2, and P3 ranked it as easy; and all other participants ranked it as very easy.

Discussion

All participants were able to complete the majority of the tasks in all five categories. Participants’ performance varied dramatically. The high performers, such as P1 and P4, were able to complete all tasks at both high speed and high accuracy rates. Their interaction with the touch-screen interface was very similar to that of a neurotypical user. The participants who had a high typing performance were within the range of typical typing speed on touch-screen keyboards for users without Down syndrome (Findlater, Wobbrock, & Wigdor, 2011). On the other end of the spectrum, some participants, such as P2 and P9, had considerable difficulty with the tasks in every category and spent a longer time on each task. The total time that P2 and P9 spent on the tasks was three times that of P1 and P4. The performance of the rest of the participants was somewhere in between the two extremes. Note that even though all of the participants had Trisomy 21 Down syndrome, there were clear variations in their level of speech and social interaction and a wide variety of skill levels, which is to be expected. The dramatic performance differences are most likely reflective of the dramatic differences between the participants themselves.

Some participants demonstrated attention to detail and made very few errors. This observation supports the medical/clinical research findings of increased obsessive-compulsive behavior characteristics (OCD) in some adolescents and adults with Down syndrome (McGuire & Chicoine, 2006). Some of the participants were more interactive than others. Some talked with the observation team as they were working and between tasks. Some told us stories from their own lives that related to the tasks and commented on the tasks. For example, P6 is the “weather reporter” for her family each morning, so she was familiar with looking up the weather. P10 engaged and talked continuously to us as he was working. P9 generally did not make eye contact with the researchers, talked only when asked specific questions, and took a long time to begin the task because she did not want to choose one friend to write to or make other choices. So, the longer times recorded for her task completion did not reflect difficulty with the task, but rather difficulty with decision making and social interaction.

Challenges and Opportunities

Switching from a desktop or laptop interface to the iPad touch-screen interface did present challenges to the participants. Although all participants had touch-screen experience, some had no direct experience with the iPad. It took participants a substantial amount of time to find and recognize specific icons in the apps’ interfaces. Because the icons on the iPad interface are generally smaller than those on the desktop interface, many participants failed to notice icons even when they were directly in front of them. They often scrolled up and down multiple times before they could locate specific icons. Because it has previously been noted (Lazar, Kumin, & Feng, 2011) that users with Down syndrome often look for visual icons (for network strength and battery life) due to their visual strengths, we postulate that it was the unfamiliarity with the icons that caused the problem. In several cases, we had to provide some participants with hints about the possible location or shape of icon(s).

Some participants had problems with the sensitive nature of the touch-screen and often accidentally tapped icons they didn’t want or clicked off windows or dialog boxes in the middle of a task, causing the desired window to exit/close (this was most prominent in the calendaring task that involved the scrolling selector). When this problem occurred, it took a while for the participants to realize what happened. It became frustrating for a few participants when this problem occurred repeatedly.

One area of challenge for users with Down syndrome was the small, and often unrecognizable, icons on the iPad. It might be interesting to research whether other user groups (such as older users) have similar problems with the small and often cryptic icons. It is also possible that icons that are at first cryptic become more recognizable over time, and longitudinal studies involving users with Down syndrome might be helpful. Another area of research that seems fruitful is the use of touch-screen keyboards by people with Down syndrome, because typing patterns and approaches, as well as typing speed, were very diverse. Yet another potential area of research is doing Fitts’ Law-type studies of pointing speed and accuracy using multi-touch screens, because people with Down syndrome seem to be effective at pointing, yet the medical/clinical literature indicates that pointing would be difficult.

Observations

Many of the users who participated in this study exhibited a tendency to rely on or look for a search feature. For example, P1 used the search function to complete both Facebook tasks. P1 first searched the Facebook Web site using Google. Then she used the search feature in Facebook to find the NDSC page. Participants also used the search function extensively in order to complete the calendar tasks and the price comparison tasks. An easy-to-use search feature assisted users with locating information, and when this was combined with an extensive auto-suggest functionality, it increased the speed at which users were able to complete tasks. This is likely to result in lower levels of frustration and increased perceived capabilities on an interface. Providing multiple paths to the same goal is a key tenet of universal usability (Lazar, 2007). In the general population of users, some users will first use search boxes, and some users will first use menus, and expert users will often prefer command lines instead (Lazar, 2006). Both search boxes and auto-complete provide improved flexibility for all users, and in particular, may assist users with disabilities.

Another observation is related to security-focused interaction and functionality on interfaces. Users in our study were likely to have user accounts and passwords cached on their personal devices, and as such were not as familiar with the usernames/passwords necessary to log in from a different device, because that did not mirror their typical approaches for interaction. This is similar to the observations from previous studies on users with Down syndrome: Passwords are problematic and are often saved on the user’s computer so that they won’t need to enter the passwords (Feng et al., 2008). At the same time, the participants in this study had no problems when they encountered visual CAPTCHAs, similar to the findings documented by Lazar, Kumin, and Feng (2011).

During the tasks that required messaging of some sort (social networking and email), the researchers also observed that many users were much more adept at, and familiar with, replying to incoming communications than initiating them (by composing a new message, for example).

Impact of Computer Training

Seven participants had taken computer-related classes, while three (P2, P6, P9) had not. The three participants who did not take computing classes tended to have performance below the group average across the five categories of tasks, with the notable exception of P6 having above-average performance on the email and Facebook tasks. This mirrors the pattern seen in other studies: that computer skills acquired through traditional, formal computer classes do have a big impact on performance for individuals with Down syndrome. In general, formal computer training has decreased over the past decade, as younger adults (often known as the “millennial” or “digital native” generation) tend to use the exploratory approach, where they pick up a technology and learn by playing with it, rather than formal, procedural training (Meiselwitz & Chakraborty, 2010). When it comes to information technology, it’s unclear whether people with Down syndrome benefit as much from exploratory learning, or whether formal procedural training would be more effective for people with Down syndrome.

Recommendations

One clear recommendation echoes the comments made in other publications: Formal computer training is especially important for users with Down syndrome. Users with Down syndrome should take any opportunities for computer training that are available and make sure to continue practicing those skills. Password usage continues to be problematic. While users may save passwords on their home computers so that they can log in quickly, this technique is often not allowed in the workplace, so users with Down syndrome need to be resolute in practicing and memorizing passwords for their various accounts.

The interface designs and techniques that were often helpful to people with Down syndrome (such as search boxes and auto-suggest) fall within the general category of universal usability and are helpful to many user populations. One design element that often does cause problems for users with Down syndrome is the pull-down menu. For instance, multiple participants couldn’t find the logout option on Facebook (which is located on a pull-down menu) and preferred to click the “X” to close the window. The fact that these menu options are hidden may be compounded by the fact that, unlike typical pull-down menus, which are located on the left side of the screen, the Facebook pull-down menu is located on the right side of the screen. This problem with pull-down menus was noted as early as the case study of participatory design involving users with Down syndrome (Kirijian & Myers, 2007).

Visual learning, processing, and memory are strengths for people with Down syndrome. Because the iPad offers myriad possibilities for using those visual strengths to successfully complete tasks in the workplace as well as in activities of daily living, educational policies and guidelines should include formal classes on using tablet computers. Transition planning from school to employment should include classes and internships that provide instruction as well as the opportunity to use computer skills. Assessment for employment through local, state, and federal rehabilitation and job training agencies should evaluate the level of computer skills, including mouse, keyboard, and touch-screen usage. Job placement currently does not frequently consider technology-related skills for adults with Down syndrome. Research is now demonstrating that many adults with Down syndrome can use desktop, laptop, and touch-screen devices such as the iPad effectively, and that knowledge and those skills need to be considered as policies regarding employment for adults with Down syndrome are developed and revised.

Conclusion

As previously noted, research has shown that individuals with Down syndrome can effectively use computers (Feng et al., 2008), and the results of this study confirm that at least a selected group of individuals with Down syndrome has the capability to use a multi-touch-screen device to complete office-related tasks. Computer-related training can help people with Down syndrome learn how to use an unfamiliar device. The high level of competency that the users exhibited in this study reveals much about the underutilized potential of this workforce-capable population of users. The ease with which most of the users interacted with a touch-screen also suggests that a touch-screen could be used as a form of assistive technology, particularly now that many desktop computers can be configured with this feature. The capability observed in this study is particularly encouraging because the majority of the participants had little or no experience using an iPad (but at least basic experience using a touch-screen) before the study. We expect that, with more training, the performance of the participants (especially those in the middle of the spectrum) could be substantially improved.