We are in an age where the route to market for technological innovation is unimpeded by even the simplest of safety checks. Computer vision, for example, cannot differentiate the side of a lorry from an open patch of sky when “driving” an autonomous vehicle, whilst iris and facial recognition technologies are rolled out as infallible ID systems despite huge error rates on black skin and eyes. Meanwhile, whistleblower testimony about how social media inadvertently engineers self-harm in young people, together with more than a suggestion that our collective big data is being used in social engineering experiments at scale, reinforces a growing vocal concern with ethics in professional UX circles.

The chasm into which all of this simultaneously falls, and from which it erupts, is human factors: that traditional “add on” to technology development that sits firmly in the gap between analog and automation; between people and machine; between user and design, affordance, and agency. All of these issues are thus digital transformation issues: the steady migration of personal, social, and cultural values; policy; practice; law; and ideology into computer code, with greater and lesser degrees of success.

The goal of this essay is to share seminal research findings in eCommerce and games UX as a foundation for a dual cognitive or “deep user experience design” (Deep UXD) that integrates biometric insights. I suggest this can provide a fundamental basis from which to address persuasion, emotion, and trust (PET; Schaffer, 2009) in the development cycle of all human-computer interaction (HCI) applications. I conclude with the future field of application in terms of UX-informed human-centered AI (HCAI) and human-in-the-loop (HITL) service design for Industry 4.0.

Ethical Considerations in User Experience

Ethics permeate all facets of user experience. Many of the unwanted outcomes, which are effectively poor functionality, could of course be mitigated by a requirement for standard human-centered design that is inclusive of user groups and delivers evidence-based usability testing. The biometric ID system, for example, must be proven to work on diverse skin tones before it is allowed to be implemented in any context. The autonomous vehicle must be able to deliver complex contextual decision making at speed, like a human being, which necessarily implies far more than computer vision input can currently deliver.

Meanwhile, as is currently in the news, the personal and social effects (that is, the behavioral outcomes) of the user experience of much online content have thus far proved too complex for, and/or outside the remit of, those responsible, beyond the mass canary testing and occasional observational musings of the tech giants. Dark patterns designed into websites and apps to “trick users” (Brignull, 2010) and the capacity of big data analytics to drive system change at scale in a digital economy have driven a growing vocal concern in professional UX circles.

Many nations and global entities are also grappling with understanding and responding to this rapidly changing digital landscape. In 2019, the United Nations released “The Age of Digital Interdependence,” a report by the High-level Panel on Digital Cooperation, which was co-chaired by Melinda Gates and Jack Ma. It included a “Declaration of Digital Interdependence” describing humanity as being “in the foothills of the digital age” and laying out key risks as “exploitative behaviour by companies, regulation that stifles innovation and trade, and an unforgivable failure to realise vast potential for advancing.” The declaration frames global “aspirations and vulnerabilities” in the digital age as “deeply interconnected and interdependent” (p. 8).

Earlier this year, UK lawmakers proposed and are considering (at the time of this writing) an Online Safety Bill heralding, in a press release, “landmark laws to keep children safe, stop racial hate and protect democracy online” (U.K. Department for Digital, Culture, Media & Sport, 2021). The bill would require services hosting user-generated content to “remove and limit the spread of illegal and harmful content.” The regulatory body will be given the power to fine companies failing in this new duty of care up to £18 million or 10% of annual global turnover, whichever is the greater, as well as the power to block access to sites and charge senior managers with a criminal offense. Yet even in the face of huge fines and possible criminal charges, defining and evidencing computational harm isn’t easy and certainly isn’t automated, as the content, metrics, and outcomes fall into the same semantic gap common to all human-computer interaction (HCI). As Nack and colleagues noted, HCI sits “between the rich meaning that users want…and the shallowness…that we can actually compute” (Nack et al., 2001, p. 10).

Similarly, the white paper on artificial intelligence (AI) published by the European Commission (2020) indicated that as part of the future AI regulatory framework there was a need to decide on the types of legal obligations that should be imposed on those involved in the design and production of machine learning (ML) and AI systems. For AI to be trusted, key conditions need to be met: human agency and oversight; technical robustness and safety; privacy and data governance; transparency; diversity, non-discrimination, and fairness; societal and environmental wellbeing; and accountability. Closely related is explainable AI (XAI), which aims to help policy makers, businesses, and end users trust that the AI is making good decisions. Yet, who decides what good is and how good is measured? There are already calls for the legal obligations imposed on designers and producers to include the design methods used to test and validate the AI system against applied technical, legal, and ethical standards, with mandatory certification for high-risk systems (Bar, 2020). Just how far current usability methods can inform and deliver on this level of regulatory complexity remains to be seen.

Even more ambitiously, in 2019, in the 5th Science and Technology Basic Plan, Japan described its vision of “Society 5.0…a human-centered society that balances economic advancement with the resolution of social problems by a system that highly integrates cyberspace and physical space” in a future society that we should all aspire to (Japanese Cabinet Office, 2019, para. 1). Enter the era of ideology in code when we are still struggling technically to move from syntax to semantics. Concurrently, for the soft robotics and human-in-the-loop services (HITL) of Industry 4.0, the need to look to human factors is being increasingly recognized as it “assumes people and technology are working together” (Combs, 2021, para. 18). It is estimated that “HCAI is emerging and has reached 5% to 20% of the target audience. Gartner [Inc.] recommends establishing HCAI as a key principle and creating an AI oversight board to review all AI plans. Companies also should use AI to focus human attention where it is most needed to support digital transformation” (Combs, 2021, para. 19).

When faced with the goal of designing for persuasion, emotion, and trust or PET (Schaffer, 2009), as frontline practitioners, how do we mitigate potential ethical issues to ensure that no harm comes from our work? How do we suggest to line management, leadership, and/or clients that what a system is being optimized to do isn’t desirable, healthy, or ethical? We are seeing in the news how much these questions can affect our society and our families. So, here we are, sitting in an affective and behavioral computational impasse, one that exists between all people and all machines, with huge responsibility for—and little control over—the type of future we are creating and rolling out on a day-to-day basis.

Nudging the User: UXD and Persuasion

At the core of human-computer interaction (HCI) and user experience design (UXD), and really all human/machine teaming scenarios from autonomous vehicles to social media, is the need to nudge—in an ethical and productive way—users’ decision-making processes to optimize the interaction and generate the desired outcomes. From eCommerce funnels and conversion rates, to interpreting a vehicle dashboard and taking physical actions, to wearables and the quantified self and future Mobile Edge services for 5G native, the principles and issues are the same. There are users, a soft interface, a hard interface, a signal, some data, a context, dependencies, stakeholders, company or personal objectives, and organizational, social, and environmental outcomes.

User experience design at its core is about designing the users’ experience to engineer the intended greater outcomes. It is a human-centered, research-informed, iterative design process that is a convergence of human-computer interaction (HCI), human-centered design (HCD), design, and marketing (Cham, 2011). The degree of persuasion in any user’s experience varies widely and is dependent not only upon the aims of the system but also the quality of the team and the processes used. Traditionally, while a number of different research methods are used to narrow down the design space, from secondary research on user behaviors to ethnographic interviews of users themselves, gaze analysis using eye tracking technology has been the only biometric technique used to provide quantitative insights into the layout and effective performance of a system mapped through the model of the GUI. Across the broad spectrum of application domains (eCommerce, games, military simulations, and the like), qualitative aspects of UX research, such as satisfaction measures, have traditionally relied on manual observation, interviews, and reportage. However, if the design of any user experience relies on influencing decision making, and thus on a degree of persuasion as its core mechanic, it might serve us well to consider how we might close the semantic gap in our quantitative research to reflect not only what users say and what users do, but how users feel. This is also, of course, not an area of research that has gone unnoticed. As Tullis and Albert said in their seminal book Measuring the User Experience: “A UX metric reveals something about the interaction between the user and the product: some aspect of effectiveness (being able to complete a task), efficiency (the amount of effort required to complete the task), or satisfaction (the degree to which the user was happy with his or her experience while performing the task)” (2013, p. 7).
They also espoused the use of affordable biometric devices to generate quantitative insights into users’ qualitative, affective states in usability testing.
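As a rough illustration of the three metric families in the Tullis and Albert quotation, the sketch below summarizes one usability task. The `TaskAttempt` record, the 1 to 5 satisfaction scale, and the sample values are all assumptions made for the example; they are not taken from the book or from the studies described here.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    """One participant's attempt at a single task (hypothetical record)."""
    completed: bool     # did the participant finish the task?
    seconds: float      # time on task
    satisfaction: int   # post-task rating, 1 (low) to 5 (high); assumed scale


def summarize(attempts):
    """Return effectiveness, efficiency, and satisfaction for one task."""
    completed = [a for a in attempts if a.completed]
    return {
        # Effectiveness: share of attempts that ended in task completion.
        "effectiveness": len(completed) / len(attempts),
        # Efficiency: mean time on task, counted over successful attempts only.
        "efficiency_s": mean(a.seconds for a in completed) if completed else None,
        # Satisfaction: mean self-reported rating across all attempts.
        "satisfaction": mean(a.satisfaction for a in attempts),
    }


# Invented sample data, for illustration only.
attempts = [
    TaskAttempt(True, 40.0, 4),
    TaskAttempt(True, 60.0, 5),
    TaskAttempt(False, 90.0, 2),
    TaskAttempt(True, 50.0, 5),
]
summary = summarize(attempts)
print(summary)
```

Satisfaction here is still self-reported, which is exactly the kind of measure that biometric insights are meant to complement.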

The book also features the work of Sven Krause (now at eBay) from his time at Seren (now EY Seren), one of the key industry partners in the knowledge transfer projects (Yates, 2014) run from the Digital Media Kingston incubator, which was set up and led by the author at Kingston University, London (Cham, 2012). Sven’s work on consumer neuroscience with Thom Noble at Neurostrata was core to the learning that created significant and long-term impact for the research and practice of all parties.

Measuring UX Persuasion through Biometric Insights

In 2009, our group at the incubator became proud owners of an Emotiv brain-computer interface (BCI) with the full research grade software development toolkit (£10,000 with full access to the raw data at the time). We had begun to experiment with integrating electroencephalography (EEG) and other biometrics into design and production. We investigated biometric devices as both wearable natural interfaces to drive content, in neuro-games for example, and as augmentation to our user testing protocols for complex applications such as mental health interventions. We saw significant outcomes for UXD across neuromarketing, eCommerce, games, and virtual worlds.

Using methods from neuroscience, such as functional magnetic resonance imaging (fMRI) or electroencephalography (EEG), to evaluate users’ perception and feelings about products and advertising has a long history (The Lancet Neurology, 2004). Consumer neuroscience similarly involves the use of brain scanning techniques with the latest technology. Adapting techniques from neuromarketing for brand research by using a portable BCI kit was the simplest way to augment the user testing.

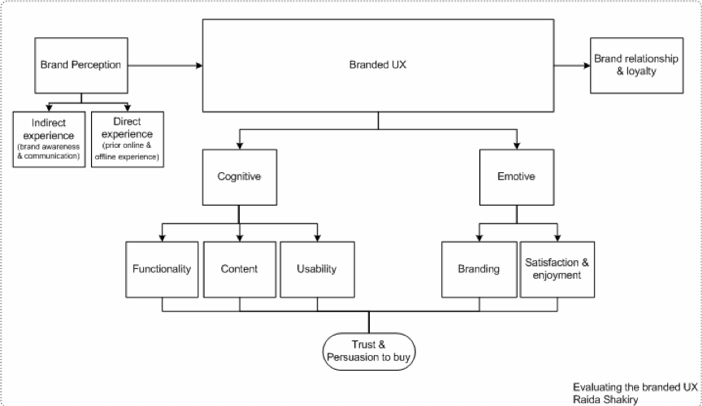

In our Masters incubator, the work of Raida Shakiry (Cham, 2016) demonstrated that only by measuring the cognitive and emotive factors that underpin trust and persuasion to purchase can a branded UX be effectively evaluated at all, as shown in Figure 1.

Figure 1. Branded UX testing framework. (Copyright: Raida Shakiry, 2013 https://www.experience-consultancy.com)

Simultaneously, the neuromarketing work of Sven Krause and Thom Noble demonstrated Daniel Kahneman’s dual cognition hypothesis, popularized in his book Thinking, Fast and Slow (2011), that emotional engagement is key to all decision making. Inspired by Kahneman, Krause’s pitch was that in UX research for persuasion, emotion, and trust, “System One” reactions are non-conscious, unarticulated, and pre-cognitive, whereas the “System Two” responses captured by traditional research measures are conscious, articulated, post-cognitive, and expressed. Krause and Noble had some considerable success integrating an Emotiv BCI into the design cycle of marketing emails, analysis of moving image advertisements, and quantifying the impact of poor signal speed on brand perception (Radware, 2013).

In 2011, the lead author designed a prototype of a stop smoking app that utilized the visual collateral of a well-known cigarette brand. The examples of other smoking cessation apps coming to market at the time replicated the user experience of the pharmaceutical companies to whom they hoped to sell their product. Suffice to say, the apps opened with lots of reading material, quantitative data about the negative impacts of smoking, and facts and figures. We already know from traditional information campaigns that this is an ineffective approach. HCD requires the designer to empathize with the user to the extent that design decisions become intuitive. Instinctively, holding an iPhone and thinking about a stop smoking app, it’s clear that the phone is roughly the same dimensions as a packet of 10 cigarettes. How could the phone be adapted to act as a placebo and intervene in a craving?

Figure 2. Branded stop smoking app. (Credit: Karen Cham, 2011)

Two years later, as part of a student project building upon the work of this paper’s co-authors, UX researchers validated the trained designer instinct. Mean results from NeuroSky’s MindWave headset revealed that, overall, participants were more engaged and in a lower meditation state when using the branded app than when using the unbranded app. Overall, participants reported craving a cigarette ~15% less after using the branded app, which correlated with the EEG insights on pre-cognitive engagement and generated quantitative data on the persuasive affordances of the brand image and user behavior. Integrating Shakiry’s branded UX testing framework, as shown in Figure 1, in the development cycle confirmed that the user experience design was affective and therefore likely to be behavioral. This work investigated the role of branding in UX and decision making, and it indicates that all brands leverage decision making by means of emotional engagement, quantifying a brand as a pre-cognitive mechanic. In April of 2015, and as if to prove the point, tobacco brand advertising was banned in the UK, including on packaging at point of sale.
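A comparison of this kind can be sketched in a few lines. The snippet below is a minimal sketch, assuming each condition yields per-participant attention and meditation scores plus a self-reported craving rating. The field names, the scales, and every number are invented for illustration and are not the study’s data.

```python
from statistics import mean


def relative_change(baseline, treatment):
    """Fractional change of treatment relative to baseline (negative = lower)."""
    return (treatment - baseline) / baseline


# Invented per-participant values for two conditions (illustrative only).
unbranded = {"attention": [40, 50, 45], "meditation": [60, 55, 65], "craving": [8, 6, 6]}
branded = {"attention": [55, 60, 65], "meditation": [50, 45, 55], "craving": [6, 5, 5]}

# Compare condition means for each measure.
comparison = {
    measure: relative_change(mean(unbranded[measure]), mean(branded[measure]))
    for measure in unbranded
}

for measure, change in comparison.items():
    print(f"{measure}: {change:+.0%} (branded vs. unbranded)")
```

With real data, a paired statistical test across participants would be needed before reading anything into such differences; the sketch only shows the arithmetic of comparing condition means.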

In all the branded UX research, only a summary of attention and relaxation metrics was recorded to provide an overview of the participant’s state of mind. It was found that EEG data cannot identify the type of emotion; it can only identify the intensity or heightening of emotion. Further research was recommended to provide insights using the integrated eye tracking and emotion measurement solution by iMotions and Affectiva as well as facial recognition software, such as NVISO emotion recognition software.

Measuring UX Immersion through Biometric Insights

We found that gameplay provided a better scenario within which to analyze users’ complex emotional states as immersive media designed for play is a more visceral user experience. Understanding what makes a good gameplay experience is the difference between success and failure. Again, traditional usability testing methods are not fully effective for complex interactions such as video games because gamers are seeking fun and immersion rather than task efficiency. When totally immersed, a gamer describes a sense of presence as being cut off from reality to such an extent that the game was all that mattered. Brown and Cairns’ (2004) research identified three incremental levels of immersion: “engagement, engrossment and total immersion.” In the seminal work, “Flow: The Psychology of Optimal Experience” (Csikszentmihalyi, 1990), the author defined total immersion as a state of “flow”—a state of complete absorption, an optimal state of intrinsic motivation. Our research focused on formulating a commercially applicable methodology to improve complex digital media usability research (gaming, retail, web) by adapting easy-to-use and commercially available biometric tools (EEG, EMG, EDA, HR, HRV, kinetic activity) combined with proven HCI testing techniques and innovative digital video/data capture software.

A key component of the methodology is the measurement of fun and the identification of immersion and flow in the experience. The main means of achieving this is the use of psychophysiological testing methods. Commonly used methods for modeling a user’s emotional state, based on the user’s physiology, are electroencephalography (EEG), facial electromyography (EMG), electrodermal activity (EDA), and cardiovascular activity including heart rate (HR) and heart rate variability (HRV). For users interacting with complex emotional systems or technologies, such as video games, modeled emotions are a powerful tool as they capture usability and playability through metrics relevant to the “ludic experience,” can account for user emotion, are quantitative and objective, and are represented continuously over time.
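To make “represented continuously over time” concrete, here is a deliberately simplified sketch that normalizes two physiological signals against a resting baseline and averages them into a single index at each time step. This is an assumption made for illustration, not the modeling approach used in the research described here: the function names, the equal weighting of EDA and HR, and the sample readings are all hypothetical.

```python
from statistics import mean, stdev


def zscores(samples, baseline):
    """Express samples in standard deviations from a resting baseline."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [(x - mu) / sigma for x in samples]


def arousal_index(eda, hr, eda_rest, hr_rest):
    """Average the baseline-normalized EDA and HR at each time step.

    Equal weighting of the two signals is an arbitrary choice for this sketch.
    """
    return [
        (e + h) / 2
        for e, h in zip(zscores(eda, eda_rest), zscores(hr, hr_rest))
    ]


# Invented readings: a resting period followed by three gameplay samples.
eda_rest = [2.0, 2.2, 1.8, 2.0]      # microsiemens
hr_rest = [60.0, 62.0, 58.0, 60.0]   # beats per minute
eda_play = [2.0, 2.6, 3.2]
hr_play = [60.0, 66.0, 72.0]

index = arousal_index(eda_play, hr_play, eda_rest, hr_rest)
print([round(v, 2) for v in index])
```

An index like this tracks intensity only. Distinguishing the type of emotion would need further signals, such as facial EMG.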

In the process of establishing a robust methodology, it became apparent that immersion or flow on its own, in the context of video games, is potentially too narrow to explain the reality of the game usability experience and the different ways in which game designers create experiences that capture emotion. It was found that a more reliable and consistent approach is to also include getting and holding attention, which can be loosely defined as the cognitive process of paying attention to one aspect of the environment while ignoring others, along with immersion and flow. Attention is one of the most widely studied and discussed subjects within the field of psychology. The following are different aspects of attention that can have an influence on gameplay and usability experience:

- Attention bottleneck: This is based on the premise that the human mind can only pay attention to one thing at a time. In a multi-player environment, an important consideration for a game designer is the vast amount of different information players need to keep track of. The designer has to take care not to overwhelm the player with attention overload.

- Vigilance: The modern psychological understanding expands on this premise to also consider our unconscious minds that are always monitoring the world for the arrival of important new information. However, we all suffer from something called “vigilance fatigue,” which means that after the first 15 minutes of paying close attention to something, we become much more likely to miss new relevant information if the sensory footprint of the new information is small or weak. This is what drives the concept of pacing in movies, for example, and the shaping of stories, which is often referred to as Freytag’s Triangle of dramatic structure.

- Reflexive attention: Big changes in the visual field exploit what is called the “orienting reflex” that unconsciously attracts players’ attention to the screen. Sudden loud sounds and motion (and even the mention of your name), anything that threatens your survival, and anything new and different have the same effect. This takes place at the back and sides of the brain and is mostly connected with Delta and Theta brainwave activity.

- Executive (voluntary) attention: This is the attention that we’re in charge of, that we choose to direct, and which we have executive control over. Executive attention is one of a group of executive functions that also includes problem solving and takes place primarily in the front of the brain and is mostly concerned with Alpha and Beta brainwave activity.
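The brainwave bands named in the list above are frequency ranges of the EEG signal, so the first analytical step is usually to estimate how much signal power falls in each band. The following is a minimal sketch using a naive discrete Fourier transform on a synthetic signal. The band boundaries are one common convention (exact limits vary between sources), and the sample rate, test signal, and `slow`/`fast` grouping are assumptions for the example rather than settings from the studies described.

```python
import cmath
import math

# One common convention for band limits in Hz; sources differ on exact values.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def band_powers(signal, sample_rate):
    """Sum spectral power per band using a naive DFT (fine for short windows)."""
    n = len(signal)
    powers = dict.fromkeys(BANDS, 0.0)
    for k in range(1, n // 2):              # skip DC, stay below Nyquist
        freq = k * sample_rate / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n)
            for t, x in enumerate(signal)
        )
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += abs(coeff) ** 2
    return powers


# Synthetic test signal: one second of a pure 10 Hz sine wave.
sample_rate = 128
signal = [math.sin(2 * math.pi * 10 * t / sample_rate) for t in range(sample_rate)]

powers = band_powers(signal, sample_rate)
dominant = max(powers, key=powers.get)

# Grouping that follows the list above: Delta/Theta versus Alpha/Beta.
slow = powers["delta"] + powers["theta"]
fast = powers["alpha"] + powers["beta"]
print(dominant, slow < fast)
```

Real EEG would also need artifact removal and windowing before the band powers mean anything; this sketch covers only the frequency decomposition.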

These findings provided a fundamental basis for the evaluation of any emotive UX. The original project with Seren (Yates, 2014) measured users’ pre-cognitive experience in immersive gameplay and correlated the findings with each game’s Metacritic user review score (i.e., its popularity ranking among users), finding that peaks and troughs in attention correlated with a game’s popularity. To provide further insights into significant design mechanics that might cause heightened emotions, further research was undertaken by the lead author toward the isolation of “value mechanics” (Cham, 2016), quantifying the salient moments of what users found engaging in the context of an architectural UX grammar or UXD system. This new type of parametric design was defined as “rhizometrics” (Cham, 2014), based on the French cultural concept of the rhizome: “an image of thought…ceaselessly established connections between semiotic chains… in data representation and interpretation” (Deleuze & Guattari, 1987).
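The correlation step can be illustrated as follows. This is a sketch under stated assumptions: the peak-to-trough range used to summarize each attention trace is a hypothetical feature chosen for the example, the game names, traces, and scores are invented, and Pearson’s r is only one of several correlation measures that could be used.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def attention_range(trace):
    """Peak-to-trough spread of an attention trace (hypothetical feature)."""
    return max(trace) - min(trace)


# Invented attention traces and user review scores for three fictional games.
games = {
    "game_a": ([40, 80, 30, 90], 8.5),
    "game_b": ([50, 60, 55, 65], 6.0),
    "game_c": ([45, 70, 40, 75], 7.0),
}

ranges = [attention_range(trace) for trace, _ in games.values()]
scores = [score for _, score in games.values()]
r = pearson(ranges, scores)
print(f"r = {r:.3f}")
```

Three data points cannot support any conclusion, and correlation does not establish what causes a game’s popularity; the sketch only shows how a per-game attention feature would be set against review scores.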

Closing the Semantic Gap

Despite the obvious successes in all this work, measuring, analyzing, and applying affective data as actionable insights in a consistent and affordable manner on a commercial timetable proved difficult, a problem that remained in the literature as late as last year (Conley, 2020). Whilst some clients have the budget, time, and inclination to commission quality user testing services from the likes of the co-authors, many do not and/or care not to.

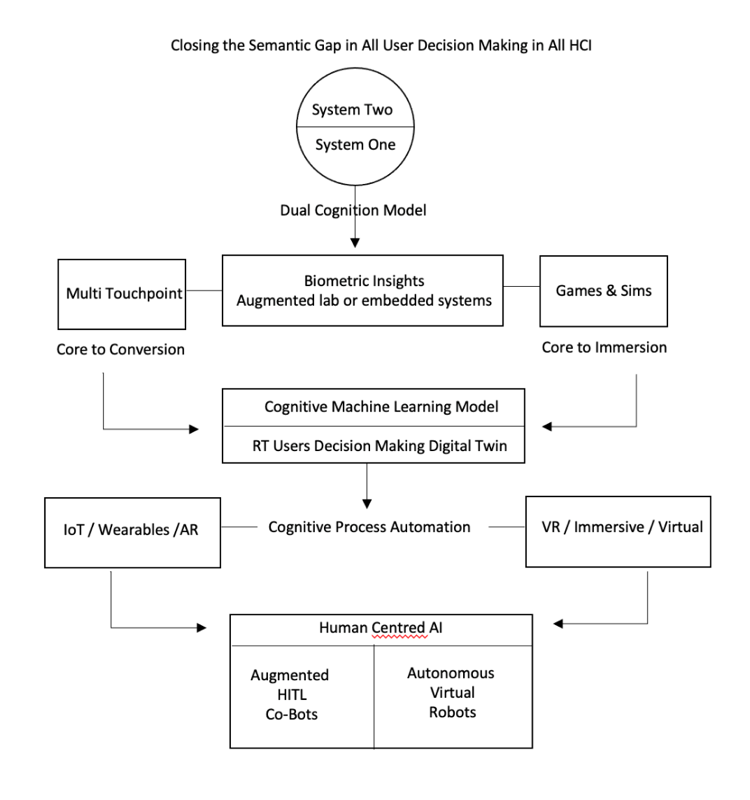

Over the last six years, the lead author of this essay has developed a method of designing, integrating, optimizing, and automating Deep UXD as “rhizometric” cognitive machine learning systems for eLearning, sports coaching, leadership and development, employee experience, and immersive training simulations. As an extended application of Kahneman’s behavioral economics dual cognition decision-making hypothesis, this has led to significant progress in affective and behavioral computing and supports renewed recognition of the role of human cognition in AI development (Lieto, 2021). Figure 3 below suggests the full domain of application where dual cognition Deep UXD needs to inform cognitive process automation (CPA) toward inclusive, transparent, and explainable AIs.

Figure 3. “Deep UXD”: PET informed CPA for explainable, inclusive, and transparent HCAIs. (Credit: Karen Cham, 2020)

Conclusions

Many innovations go to market with no user involvement. In the UK, we already have laws on equality that wouldn’t have to stretch too far to provide an ethical basis for inclusive design and the necessary legal requirement for evidence-based standard usability testing.

Furthermore, this paper summarizes preliminary key experiments, findings, and outcomes toward a transferable set of principles for measuring, developing, and delivering Deep UXD: a cognitive and affective evidence-based interaction design methodology that engineers personal, organizational, and social outcomes. A dual cognitive UX ML model and the use of biometric insights into pre-cognitive responses provide a starting point for quantifying how users feel, potentially supporting UX designers, managers, and agencies toward evidencing ethical decision making and delivering explainable AI requirements.

On the evidence to date, such a practice has the potential to see us well into designing, evaluating, and automating the next generation of transformative and convergent personal and social human/machine systems and engineering our preferred outcomes within our laws. This practice is evidence based and user centered and supports the design and testing necessary to build human-centered AIs.

Acknowledgments

I would like to thank Dr. Darrel Greenhill for sharing his Emotiv helmet; Sven Krause for the Vulcan Mind Meld; the genius of Thom Noble and Amanda Squires as equal supporting acts on the star deck; Dr. Bill Albert for the invitation to write this—and being one of my all-time rock star heroes. Finally, and as always, thanks to Aminata and Neneh Cham for loving and living with a mother like me, and knowing that design, research, and innovation always, but always, comes before housework.

References

Bar, G. (2020). Explainability as a legal requirement for artificial intelligence. Medium. https://medium.com/womeninai/explainability-as-a-legal-requirement-for-artificial-intelligence-systems-66da5a0aa693#_ftn1

Brignull, H. (2010). https://www.darkpatterns.org

Brown, E., & Cairns, P. (2004). A grounded investigation of game immersion. CHI ’04 Extended Abstracts on Human Factors in Computing Systems (pp. 1297–1300). https://doi.org/10.1145/985921.986048

Canton, M. (2018). A customer-centric approach must drive digital transformation. Forbes. https://www.forbes.com/sites/forbestechcouncil/2018/01/24/a-customer-centric-approach-must-drive-digital-transformation/

Cham, K. (2011). A semiotic systems approach to user experience design. 1st International Conference on Semiotics & Visual Communication: From theory to practice, Limassol, Cyprus.

Cham, K. (2012). Changing the University genome: A case study of Digital Media Kingston. Networks (8).

Cham, K. (2014). Design by spambot? How can social interaction be successfully integrated into high end fashion design process. Digital Research in the Humanities & Arts, University of Greenwich, London, UK.

Cham, K. (2016). Consumer as producer; value mechanics in digital transformation design, process, practice and outcomes. In M. Shiach & T. Virani (Eds.), Cultural policy, innovation, and the creative economy (pp. 61–81). Palgrave Macmillan.

Combs, V. (2021, September 9). Gartner: AI is moving fast and will be ready for prime time sooner than you think. TechRepublic. https://www.techrepublic.com/article/gartner-ai-is-moving-fast-and-will-be-ready-for-prime-time-sooner-than-you-think/

Conley, Q. (2020). Learning User Experience Design (LUX): Adding the “L” to UX research using biometric sensors. In E. Romero-Hall (Ed.), Research methods in learning design and technology. Routledge.

Csikszentmihalyi, M. (1990). Flow: The Psychology of Optimal Experience. Journal of Leisure Research, 24(1), pp. 93–94.

Deleuze, G., & Guattari, F. (1987). A thousand plateaus: Capitalism & schizophrenia. University of Minnesota Press.

European Commission (2020). On artificial intelligence: European approach to excellence and trust (White Paper). https://ec.europa.eu/info/sites/default/files/commission-white-paper-artificial-intelligence-feb2020_en.pdf

Japanese Cabinet Office, Science and Technology Policy. Council for Science, Technology and Innovation (2019). Society 5.0. https://www8.cao.go.jp/cstp/english/society5_0/index.html

Kahneman, D. (2011). Thinking, fast and slow. Farrar, Straus and Giroux.

Lieto, A. (2021). Cognitive design for artificial minds. Routledge.

Nack, F., Dorai, C., & Venkatesh, S. (2001). Computational media aesthetics: Finding meaning beautiful. IEEE MultiMedia, 8(4).

Radware (2013). Mobile web stress: The impact of network speed on emotional engagement and brand perception [White Paper]. Acknowledgements Everts, Krause, and Noble. https://www.immagic.com/eLibrary/ARCHIVES/GENERAL/RDWR_IL/R131206M.pdf

Schaffer, E. (2009). Beyond usability: Designing web sites for persuasion, emotion, and trust beyond usability. UX Matters. http://www.uxmatters.com/mt/archives/2009/01/beyond-usability-designing-web-sites-for-persuasion-emotion-and-trust.php

The Lancet Neurology (2004). Neuromarketing: Beyond branding. The Lancet Neurology, 3(2), p. 71. https://doi.org/10.1016/S1474-4422(03)00643-4

Tullis, T., & Albert, B. (2013). Measuring the user experience: Collecting, analyzing, and presenting usability metrics (2nd Ed.). Elsevier: Morgan Kaufmann.

U.K. Department for Digital, Culture, Media & Sport, Home Office (2021). Landmark laws to keep children safe, stop racial hate and protect democracy online published. [Press Release]. https://www.gov.uk/government/news/landmark-laws-to-keep-children-safe-stop-racial-hate-and-protect-democracy-online-published

United Nations (2019). The age of digital interdependence: Report of the UN Secretary-General’s high-level panel on digital cooperation. https://www.un.org/en/pdfs/DigitalCooperation-report-for%20web.pdf

Yates, C. (2014). Methodologies for using biofeedback data in user experience and usability studies [Project Report]. http://www.creativeworkslondon.org.uk/wp-content/uploads/2013/11/London_fusion_project_report_v1-3.pdf